I’ve been watching friends try to onboard to Claude Code recently. Pedro Sant’Anna built a beautiful workflow system with orchestrators and agents and quality gates. Antonio Mele made a curated skills marketplace organized by research stage. Both are genuinely useful.

But I’m not sure they’re what people want to start with, even though they both belong to that old online tradition of “starter kits” or “best of” lists. And I want to tell you why. To do it, I’m going to use some economics jargon, and I’m going to give you a theory of Claude Code I have: Claude Code is a memory foam mattress. You have to sleep on it a while and let it conform to you, and only then will you sleep well through the night.

But before we get into my memory foam theory of Claude Code, I want to set the stage and describe where things currently stand with Claude Code. I’ve said this before and I want to say it one more time. There are several related concepts in economics that can help you understand CC adoption right now. They are these: incumbents versus entrants, average versus marginal users, and the intensive versus the extensive margin.

They don’t mean the same thing, per se, but I’m going to use them as if they do, because I think this may help some of you figure out where you fit in all of this. If you’re reading this, you’re almost certainly more likely to be the entrant than the incumbent; you’re more likely to be the marginal user than the average user; and you’re more likely to be on the extensive margin of demand than the intensive one.

In simple games of firm competition, you often have one firm that got there first and another that is thinking of entering. These games are intrinsically about competition with one another, and in that sense the metaphor isn’t super helpful for understanding Claude Code adoption. I don’t think you or I are competing with software engineers over the use of Claude Code. Still, the metaphor is helpful because it captures a fact. For probably 16 months there has been a group of Claude Code users called “software engineers” or “computer scientists” who program for a living, building products for firms that are sold in product markets. They supply labor in input markets and are paid wages, which they use to buy the products firms produce and sell. Their work is valuable to the firms that produce goods and services valued by households. It’s the famous circular flow diagram we usually teach in our principles classes on the first day.

Well, let’s face it. If you’re reading this, you’re most likely a PhD or aspiring PhD in the applied social sciences: economics, political science, education, law, accounting, sociology, and the like. You may be in the qualitative ones too. I actually think Claude Code is perfect for anyone whose work lives inside “directories on their computer,” whose projects in some sense are the folders. I mean, isn’t that, in one sense, what research is? Research is folders. Teaching is folders. Sure, it’s more than that, obviously, but it’s stored on computers. All of the information associated with research and teaching lives in a series of directories, folders, and branches, stored as 0s and 1s on our computers, which we navigate through a conceptual model that is a hierarchy of folders. And that’s really who Claude Code is for. Claude Code is for anyone whose work lives in a hierarchy of folders and directories. Which means what, exactly? That Claude Code is for everyone, because I just described everyone!

But here’s the problem: it’s one of the most alien kinds of software any of us has ever encountered, because this isn’t your parents’ software. It’s not really exogenous in nature. It’s very fluid, very malleable, adaptive. It’s like water. It’s like trying to code with water. So understandably, it’s weird, and nobody can really even comprehend what that means, because they have no frame of reference. The writing by incumbents, mostly engineers at this point, isn’t terribly useful, or at least doesn’t seem to be, because the words are different and the tasks described don’t even seem close to what you do every day, except for one word: code. And even then, you often don’t recognize the coding in what’s being described.

So that’s what I mean by incumbent versus entrant. All of us here, I think, are the entrants, and most if not all of the writing about Claude Code is by incumbents. Even when it’s social scientists, if you look closely, it usually comes from the more hardcore coders among social scientists. So even then, you may feel like you’re listening to someone from your tribe, but someone who was always one of the more technical people in your tribe, explaining things you still can’t understand.

That’s because right now, the average writer and the average user of Claude Code still come from that programmer/engineer/computer scientist set of incumbents. You and I are probably the marginal users, the entrants, the extensive margin of demand. We’re coming in to Claude Code, and for much of the extensive margin it’s not clear how to do it. By definition, the extensive margin differs from the intensive margin in one important way: the intensive margin users are already there, already selected in on some innate characteristic that the extensive margin, who isn’t there yet, doesn’t have. Maybe it’s some shadow cost that was always very low for the intensive margin but always very, very high for the extensive margin. Which leads me to my next point.

I’m going to make a bold claim. The thing about Claude Code that nobody has written yet, or maybe wants to admit, is that there is no real onramp to using Claude Code productively, to using it as a real labor-enhancing tool. Not really. And here’s why.

I’ve had trouble sleeping for a decade now. It started because of anxiety over family stuff. Long story, not important. Then that stuff went away, but the sleep problems remained. Which made me wonder: was the family stuff masking some unobserved variable, call it aging or something, that had been the real problem all along?

My ex-wife was so good at picking stuff out. Picking out the furniture, the colors, the paint, even the house. The food, the restaurants, the music, the atmosphere. She was (is) elegant, stylish, thoughtful, and patient. She knew what she liked and was willing to wait until she found it. That drove me crazy sometimes, because we would wait and wait and wait on important things like a couch. But when that couch finally arrived, wow. That really was the perfect couch. And I think we made a good team, because I had no opinion at all in the process as long as I could get my work done.

Well, it was like that with the mattress. I never had trouble sleeping on our mattresses because she was a wizard at finding them. But see, I’m impulsive. When faced with a ton of options, I take the first one. I want it over with. And it’s basically the best option in … never. It never works. So after we got divorced and I was living on my own, I had to buy a mattress, and I knew this was a problem, so I had to anticipate it and solve it. I knew I had no idea how to pick a mattress ex ante. I couldn’t anticipate what I needed or wanted until I had it, hated it, and had to return it. So I took the easy way out: I bought a mattress made of memory foam.

I got the memory foam because I didn’t know what I needed, and I didn’t know what I wanted, except that I wanted to sleep. That’s all I knew. And memory foam seemed appealing precisely because memory foam is endogenous. It’s an endogenous material. Endogenous to what? To me. To my body shape. To my style of sleeping. So here’s what memory foam does: it learns. It listens. It pays attention to me. And that’s how it succeeds.

Claude Code is a memory foam mattress. It’s not a preconceived, exogenous piece of software. This matters because every other piece of software you’ve ever used had rules. It was exogenous. When you said “I want to learn R,” what did you mean? You meant you wanted to learn packages, ways of thinking, a certain programming-language logic. Because if you could learn that logic, then you could learn the skills, and then you could perform the tasks that could only be done with that language, by you. I call that exogenous software.

Claude Code is not exogenous software. Claude Code is not a firm mattress. Claude Code is not a soft mattress. Claude Code is an endogenous mattress. It’s memory foam. It’s endogenous software: it conforms to you and adapts to your style, not the other way around.

And I think that’s why it confuses people, maybe even intimidates them. But if I’m right, and I am right, then you cannot take another person’s starter kit, because another person’s starter kit is based on their shape, their body, their style of work. There is no such thing as a “correct shape” in a memory foam mattress. The correct shape in the memory of a memory foam mattress is the one that formed around your body and held it so well that you slept through the night.

And here is what nobody really understands, I think: Claude Code is so endogenous, so malleable to how you work, that not only do the average users not really understand what the marginal users need, I don’t think even Anthropic, who made Claude Code, fully understands this.

So there really is no substitute for this. Nobody can teach you to use Claude Code to become productive in your research and teaching. There’s nothing to teach! There’s no “prompt engineering” with Claude Code; frankly, there never was any prompt engineering with chatbots either. Prompt engineering is like someone trying to teach people how to hold a conversation on a date. Sure, I guess that’s a thing, but it takes far, far more skill to have a conversation with a real person than with an LLM, because LLMs are the Mister Rogers of software. They’re so good at listening and paying attention that you can misspell and babble and go off on pointless tangents, and they still somehow figure out what you’re trying to say. I don’t think that’s prompt engineering. I think that’s LLMs just being LLMs and being really good at it.

It’s the same with Claude Code. You just have to use it. You just have to trust that in the limit (and trust me, you reach that limit in no time, seriously) it will figure you out. That’s the thing: it won’t be you figuring out Claude Code. It’ll be Claude Code figuring you out! So you just have to do it. You don’t start with starter kits. You start with directories. I mean it! You start with this:

Please look around this directory and tell me what the hell is going on in here. It looks like a hoarder’s nest! Please help me make this deck of slides for my class! I’m overwhelmed with work. Use beamer, and install it if it’s not installed. I don’t even know how to use it, frankly. Do the whole thing for me. I’m anxious about the class, and I’m anxious about the research project, and I’m anxious about you. I need help. So please make a beautiful, beautiful deck based on this chapter, on this thing we’re doing in class, and here, here’s last year’s exams, here’s last year’s homework. Here are a couple of papers. Just please read them and come up with a beautiful deck. Make my life easier, not harder. I’m living on my budget constraint, and if you can help me, I’ll really appreciate it.

That’s how you use Claude Code. I know I sound like a cult leader. But I’m not. I’m just someone who finally started sleeping through the night after years of sleepless nights.

When I say something dramatic like “Claude Code changed my life,” the surprising thing is that I actually mean it. I know I mean it because I’ve been using it almost nonstop since mid-November. You know what evidence I have? I typed this into Claude Code yesterday:

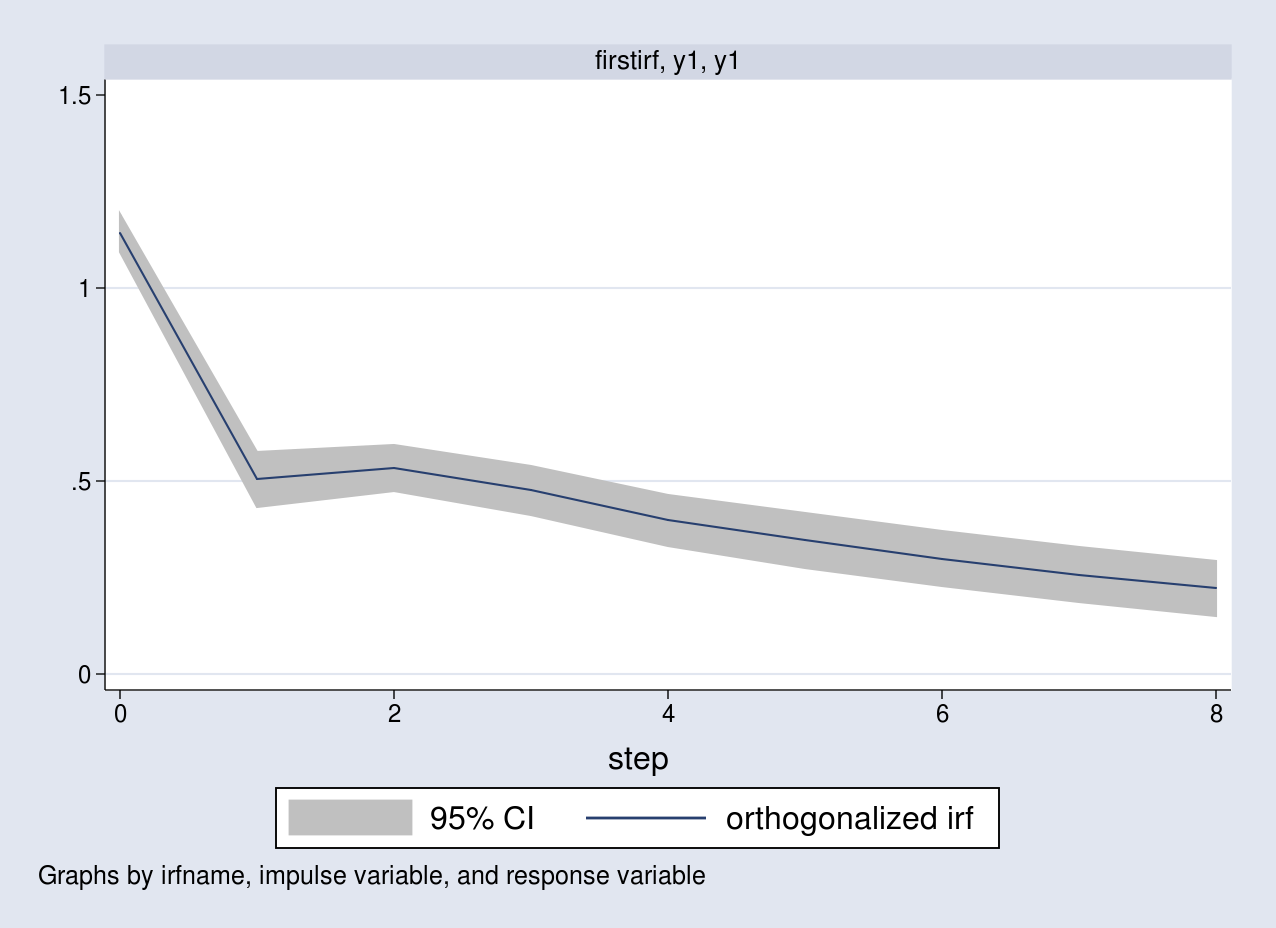

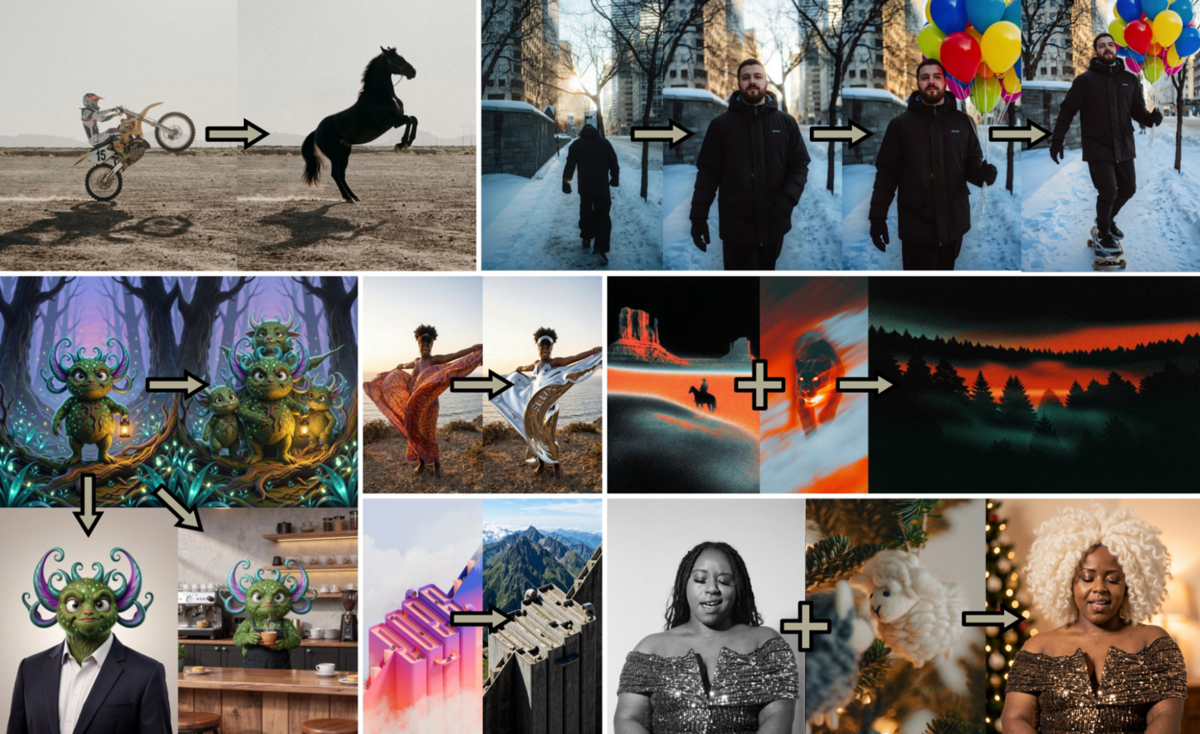

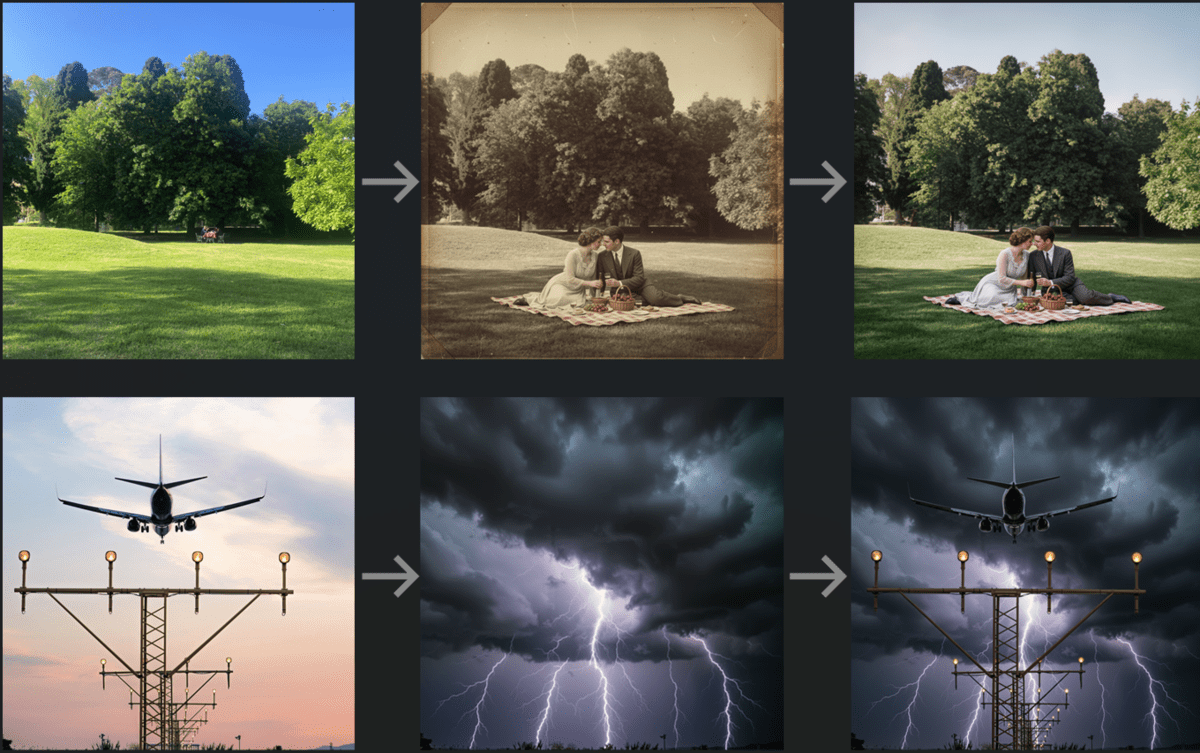

/insights

When you type /insights into Claude Code, it analyzes the last six weeks of your work and gives you a report on what’s working and what isn’t. I did that, and it produced an interactive HTML page. But I don’t think in HTML. I think in decks, because decks tell stories. So I asked Claude Code to turn my /insights into a “beautiful deck” that followed the “rhetoric of decks” philosophy I’ve laid out in my MixtapeTools repo. And I’m going to share it. This is my workflow, and it’s apparently based on an impossibly high 1,642 hours of use. That number can’t be right, because it would mean I’ve been using Claude Code for something like 70 full days since its launch on the Mac desktop app in November! And yet when I think about it, I’ve been burning 14-hour days for months getting classes together, finishing major projects that were dying on the vine, and meeting deadlines, at a level of productivity I’ve never had, not at this sustained level. All while still managing to hang out with some friends here in Boston. (It’s also possible it’s counting things I’ve been running in parallel, though.)
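Here is a quick back-of-the-envelope check on that 1,642 figure. It uses only the numbers in the paragraph above; the 14-hour day is my own rough figure, not something /insights reports:

$$
\frac{1{,}642\ \text{hours}}{24\ \text{hours/day}} \approx 68.4\ \text{days},
\qquad
\frac{1{,}642\ \text{hours}}{14\ \text{hours/day}} \approx 117.3\ \text{working days}
$$

Even at 14-hour days, that would be roughly 117 working days of use, close to four months. That is part of why overlapping, parallel sessions seem like a plausible explanation for the total.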

So here’s my deck describing how I work. It is Claude Code’s interpretation of how I work and of what he suggests I do differently going forward. It’s purely a description of my mattress, though. Yours will be different, and yours will be perfect for you.

If the mattress conforms to you, then every person’s starter kit is just a reflection of the Claude Code workflow that worked for them. It’s like when someone gives you advice on studying for exams, and the advice is to use 16 different colored markers. And you’re like, “okay, I’m color blind, but I guess I’ll try that.” And unsurprisingly, it doesn’t work.

I’m not saying don’t use starter kits. I’m saying that in the long run, your workflow will be you and Claude Code working so intensively together toward your goals that it’s almost as if you aren’t using preexisting software anymore, but software that you and Claude Code invented together. There won’t be “learning R.” There will be “this is how I work, this is what works for me, this is how I’ve managed to become successful at my job using Claude Code.” For some it looks one way, for others it looks another way, and neither is right, and neither is wrong.

The sinking into the memory foam on my side of the bed is just that: my side of the bed. It’s my shell. No one else could probably even sleep there well until the memory foam “forgot” my body. The shell is not transferable. What is transferable is the idea that the mattress will conform to you and your style of work. You just have to use it and trust me.

This is why I write about Claude Code the way I do. I think you just have to see someone using it. The guerrilla series, the videos, the real-time fumbling around. The more you see it, the more confident you’ll feel that it’s easy, not hard.

I’m not here trying to teach readers a tool, because I really don’t think there is such a thing. I just want to show you that it works the way the people who sell memory foam say it does. They just say “lie down on it for a few weeks.”

People on the extensive margin don’t need a workflow diagram. It won’t help them anyway. I think the main thing that helps is watching someone fumble through it, using it for the kinds of tasks they do every day. That’s what will help. And that’s story more than documentation. I think that’s how you unlock the door to the extensive margin. Not through starter kits and documentation. Let other people see you lying down on it, and they’ll get it. They’ll figure it out as long as they jump in.

So many things are like that. So many things can’t be taught; they can only be learned. Claude Code can’t be taught. Using AI agents for work can’t be taught. It can only be learned. So I encourage you to do that.