Introduction

Artificial intelligence (AI) has moved from laboratory demonstrations to everyday infrastructure. In 2026, algorithms drive digital assistants, predictive healthcare, logistics, autonomous vehicles and the very platforms we use to communicate. This ubiquity promises efficiency and innovation, but it also exposes society to serious risks that demand attention. Potential problems with AI aren’t just hypothetical scenarios: many are already affecting individuals, organizations and governments. Clarifai, as a leader in responsible AI development and model orchestration, believes that highlighting these challenges, and proposing concrete solutions, is vital for guiding the industry towards safe and ethical deployment.

The following article examines the major risks, dangers and challenges of artificial intelligence, focusing on algorithmic bias, privacy erosion, misinformation, environmental impact, job displacement, mental health, security threats, safety of physical systems, accountability, explainability, global regulation, intellectual property, organizational governance, existential risks and domain‑specific case studies. Each section provides a quick summary, in‑depth discussion, expert insights, creative examples and suggestions for mitigation. At the end, a FAQ answers common questions. The goal is to provide a value‑rich, original analysis that balances caution with optimism and practical solutions.

Quick Digest

The quick digest below summarizes the core content of this article. It presents a high‑level overview of the major problems and solutions to help readers orient themselves before diving into the detailed sections.

|

Threat/Problem

|

Key Situation

|

Probability & Influence (2026)

|

Proposed Options

|

|

Algorithmic Bias

|

Fashions perpetuate social and historic biases, inflicting discrimination in facial recognition, hiring and healthcare selections.

|

Excessive probability, excessive affect; bias is pervasive resulting from historic knowledge.

|

Equity toolkits, numerous datasets, bias audits, steady monitoring.

|

|

Privateness & Surveillance

|

AI’s starvation for knowledge results in pervasive surveillance, mass knowledge misuse and techno‑authoritarianism.

|

Excessive probability, excessive affect; knowledge assortment is accelerating.

|

Privateness‑by‑design, federated studying, consent frameworks, robust regulation.

|

|

Misinformation & Deepfakes

|

Generative fashions create reasonable artificial content material that undermines belief and may affect elections.

|

Excessive probability, excessive affect; deepfakes proliferate rapidly.

|

Labeling guidelines, governance our bodies, bias audits, digital literacy campaigns.

|

|

Environmental Influence

|

AI coaching and inference eat huge power and water; knowledge facilities could exceed 1,000 TWh by 2026.

|

Medium probability, reasonable to excessive affect; generative fashions drive useful resource use.

|

Inexperienced software program, renewable‑powered computing, effectivity metrics.

|

|

Job Displacement

|

Automation may substitute as much as 40 % of jobs by 2025, exacerbating inequality.

|

Excessive probability, excessive affect; whole sectors face disruption.

|

Upskilling, authorities assist, common primary earnings pilots, AI taxes.

|

|

Psychological Well being & Human Company

|

AI chatbots in remedy threat stigmatizing or dangerous responses; overreliance can erode crucial considering.

|

Medium probability, reasonable affect; dangers rise as adoption grows.

|

Human‑in‑the‑loop, regulated psychological‑well being apps, AI literacy packages.

|

|

Safety & Weaponization

|

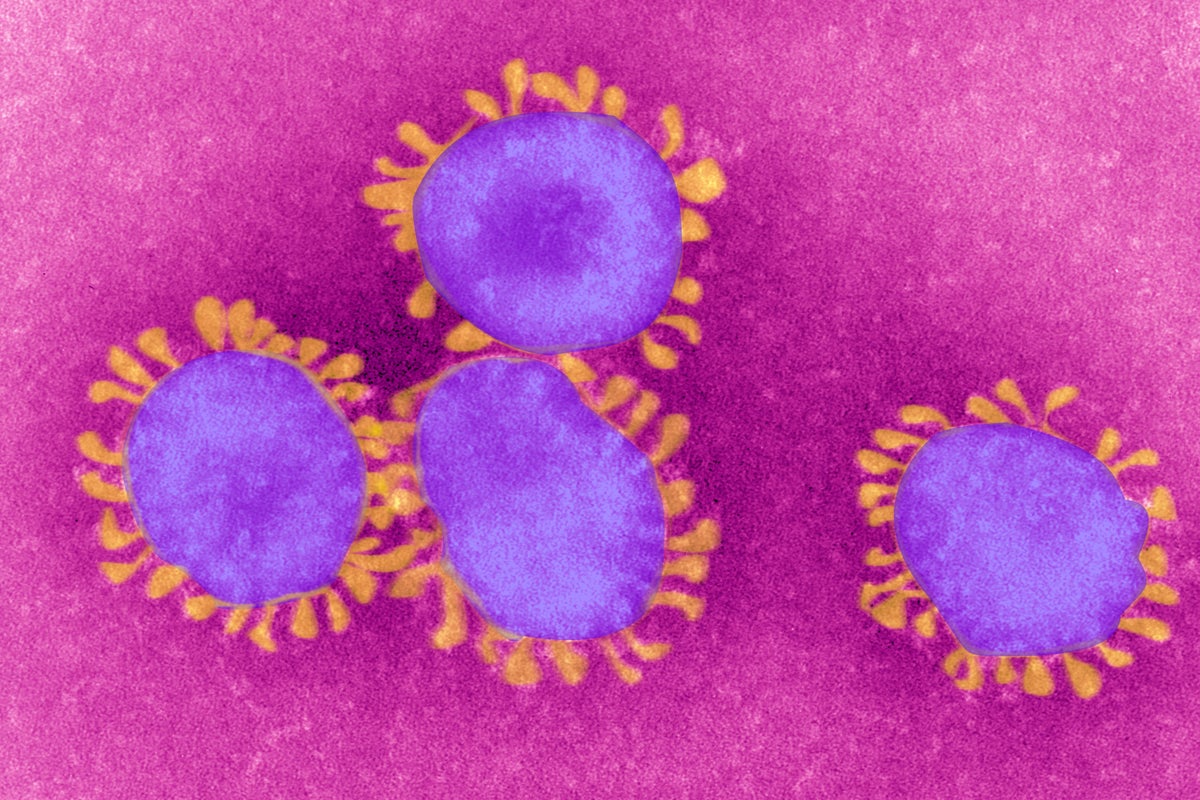

AI amplifies cyber‑assaults and might be weaponized for bioterrorism or autonomous weapons.

|

Excessive probability, excessive affect; risk vectors develop quickly.

|

Adversarial coaching, purple teaming, worldwide treaties, safe {hardware}.

|

|

Security of Bodily Methods

|

Autonomous automobiles and robots nonetheless produce accidents and accidents; legal responsibility stays unclear.

|

Medium probability, reasonable affect; security varies by sector.

|

Security certifications, legal responsibility funds, human‑robotic interplay tips.

|

|

Accountability & Accountability

|

Figuring out legal responsibility when AI causes hurt is unresolved; “who’s accountable?” stays open.

|

Excessive probability, excessive affect; accountability gaps hinder adoption.

|

Human‑in‑the‑loop insurance policies, authorized frameworks, mannequin audits.

|

|

Transparency & Explainability

|

Many AI methods operate as black bins, hindering belief.

|

Medium probability, reasonable affect.

|

Explainable AI (XAI), mannequin playing cards, regulatory necessities.

|

|

World Regulation & Compliance

|

Regulatory frameworks stay fragmented; AI races threat misalignment.

|

Excessive probability, excessive affect.

|

Harmonized legal guidelines, adaptive sandboxes, world governance our bodies.

|

|

Mental Property

|

AI coaching on copyrighted materials raises possession disputes.

|

Medium probability, reasonable affect.

|

Choose‑out mechanisms, licensing frameworks, copyright reform.

|

|

Organizational Governance & Ethics

|

Lack of inner AI insurance policies results in misuse and vulnerability.

|

Medium probability, reasonable affect.

|

Ethics committees, codes of conduct, third‑occasion audits.

|

|

Existential & Lengthy‑Time period Dangers

|

Concern of tremendous‑clever AI inflicting human extinction persists.

|

Low probability, catastrophic affect; lengthy‑time period however unsure.

|

Alignment analysis, world coordination, cautious pacing.

|

|

Area‑Particular Case Research

|

AI manifests distinctive dangers in finance, healthcare, manufacturing and agriculture.

|

Various probability and affect by business.

|

Sector‑particular laws, moral tips and greatest practices.

|

Algorithmic Bias & Discrimination

Quick Summary: What is algorithmic bias and why does it matter? AI systems inherit and amplify societal biases because they learn from historical data and flawed design choices. This leads to unfair decisions in facial recognition, lending, hiring and healthcare, harming marginalized groups. Effective solutions involve fairness toolkits, diverse datasets and continuous monitoring.

Understanding Algorithmic Bias

Algorithmic bias occurs when a model’s outputs disproportionately affect certain groups in a way that reproduces existing social inequities. Because AI learns patterns from historical data, it can embed racism, sexism or other prejudices. For instance, facial‑recognition systems misidentify dark‑skinned individuals at far higher rates than light‑skinned individuals, a finding documented by Joy Buolamwini’s Gender Shades project. In another case, a healthcare risk‑prediction algorithm predicted that Black patients were healthier than they were, because it used healthcare spending rather than clinical outcomes as a proxy. These examples show how flawed proxies or incomplete datasets produce discriminatory outcomes.

Bias isn’t limited to demographics. Hiring algorithms may favor younger applicants by screening resumes for “digital native” language, inadvertently excluding older workers. Similarly, AI used for parole decisions, such as the COMPAS algorithm, has been criticized for predicting higher recidivism rates among Black defendants compared with white defendants for the same offense. Such biases damage trust and create legal liabilities. Under the EU AI Act and rules enforced by the U.S. Equal Employment Opportunity Commission, organizations using AI for high‑impact decisions could face fines if they fail to audit models and ensure fairness.

Mitigation & Solutions

Reducing algorithmic bias requires holistic action. Technical measures include using diverse training datasets, employing fairness metrics (e.g., equalized odds, demographic parity) and implementing bias detection and mitigation toolkits like those in Clarifai’s platform. Organizational measures involve conducting pre‑deployment audits, regularly monitoring outputs across demographic groups and documenting models with model cards. Policy measures include requiring AI developers to demonstrate non‑discrimination and maintain human oversight. The NIST AI Risk Management Framework and the EU AI Act recommend risk‑tiered approaches and independent auditing.
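To make the two fairness metrics named above concrete, here is a minimal Python sketch that computes a demographic parity gap and an equalized odds gap from binary predictions. It is an illustration only, not Clarifai’s toolkit: the function names, the toy labels and the group names `a` and `b` are all assumptions made for this example.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate and false-positive rate."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred
    return {
        group: {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else 0.0,
            "fpr": c["fp"] / c["neg"] if c["neg"] else 0.0,
        }
        for group, c in counts.items()
    }


def demographic_parity_gap(rates):
    """Largest difference in positive-prediction rate between any two groups."""
    values = [r["selection_rate"] for r in rates.values()]
    return max(values) - min(values)


def equalized_odds_gap(rates):
    """Largest between-group difference in either TPR or FPR."""
    tprs = [r["tpr"] for r in rates.values()]
    fprs = [r["fpr"] for r in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Hypothetical toy data: 1 = positive outcome (e.g. "shortlisted").
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
print(demographic_parity_gap(rates))  # 0.5
print(equalized_odds_gap(rates))      # 0.5
```

A gap of zero means the groups are treated identically on that metric. What counts as an acceptable gap is a policy decision the code cannot answer.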

Clarifai integrates fairness assessment tools in its compute orchestration workflows. Developers can run models against balanced datasets, compare outcomes and adjust training to reduce disparate impact. By orchestrating multiple models and cross‑evaluating results, Clarifai helps identify biases early and suggests alternative algorithms.

Expert Insights

- Joy Buolamwini and the Gender Shades project exposed how commercial facial‑recognition systems had error rates of up to 34 % for dark‑skinned women compared with <1 % for light‑skinned men. Her work underscores the need for diverse training data and independent audits.

- MIT Sloan researchers attribute AI bias to flawed proxies, unbalanced training data and the nature of generative models, which optimize for plausibility rather than truth. They recommend retrieval‑augmented generation and post‑hoc corrections.

- Policy experts advocate for mandatory bias audits and diverse datasets in high‑risk AI applications. Regulators like the EU and U.S. labour agencies have begun requiring impact assessments.

- Clarifai’s view: We believe fairness starts in the data pipeline. Our model inference tools include fairness testing modules and continuous monitoring dashboards so that AI systems remain fair as real‑world data drifts.

Data Privacy, Surveillance & Misuse

Quick Summary: How does AI threaten privacy and enable surveillance? AI’s appetite for data fuels mass collection and surveillance, enabling unauthorized profiling and misuse. Without safeguards, AI can become an instrument of techno‑authoritarianism. Privacy‑by‑design and robust regulations are essential.

The Data Hunger of AI

AI thrives on data: the more examples an algorithm sees, the better it performs. However, this data hunger leads to intrusive data collection and storage practices. Personal information, from browsing habits and location histories to biometric data, is harvested to train models. Without appropriate controls, organizations may engage in mass surveillance, using facial recognition to monitor public spaces or track employees. Such practices not only erode privacy but also risk abuse by authoritarian regimes.

An example is the widespread deployment of AI‑enabled CCTV in some countries, combining facial recognition with predictive policing. Data leaks and cyber‑attacks further compound the problem; unauthorized actors may siphon sensitive training data and compromise individuals’ security. In healthcare, patient records used to train diagnostic models can reveal personal details if not anonymized properly.

Regulatory Patchwork & Techno‑Authoritarianism

The regulatory landscape is fragmented. Regions like the EU enforce strict privacy through GDPR and the upcoming EU AI Act; California has the CPRA; India has introduced the Digital Personal Data Protection Act; and China’s PIPL sets out its own regime. Yet these laws differ in scope and enforcement, creating compliance complexity for global businesses. Authoritarian states exploit AI to monitor citizens, using AI surveillance to control speech and suppress dissent. This techno‑authoritarianism shows how AI can be misused when unchecked.

Mitigation & Solutions

Effective data governance requires privacy‑by‑design: collecting only what is needed, anonymizing data, and implementing federated learning so that models learn from decentralized data without transferring sensitive information. Consent frameworks should ensure individuals understand what data is collected and can opt out. Companies must embed data minimization and robust cybersecurity protocols and comply with global regulations. Tools like Clarifai’s local runners allow organizations to deploy models within their own infrastructure, ensuring data never leaves their servers.
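The federated learning idea mentioned above can be sketched in a few lines: each client trains on its own data and only model weights, never raw records, travel to the server, which averages them. The example below is a toy federated averaging loop for a one‑feature linear model in plain Python. The client datasets, learning rate and function names are assumptions chosen for illustration. A real deployment would add secure aggregation and other protections, because shared weights can still leak information.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Gradient descent for y ~ w*x + b using only one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def train(clients, rounds=100):
    """Each round, clients train locally and send back weights, not data."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Hypothetical private datasets held by two clients; both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

w, b = train(clients)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

The server recovers the shared trend even though it never sees a single `(x, y)` pair, which is the property the paragraph above relies on.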

Expert Insights

- The Cloud Security Alliance warns that AI’s data appetite increases the risk of privacy breaches and emphasizes privacy‑by‑design and agile governance to respond to evolving regulations.

- ThinkBRG’s data protection analysis reports that only about 40 % of executives feel confident about complying with current privacy laws, and fewer than half have comprehensive internal safeguards. This gap underscores the need for stronger governance.

- Clarifai’s perspective: Our compute orchestration platform includes policy enforcement features that allow organizations to restrict data flows and automatically apply privacy transforms (like blurring faces or redacting sensitive text) before models process data. This reduces the risk of accidental data exposure and enhances compliance.

Misinformation, Deepfakes & Disinformation

Quick Summary: How do AI‑generated deepfakes threaten trust and democracy? Generative models can create convincing synthetic text, images and videos that blur the line between fact and fiction. Deepfakes undermine trust in media, polarize societies and may influence elections. Multi‑stakeholder governance and digital literacy are essential countermeasures.

The Rise of Synthetic Media

Generative adversarial networks (GANs) and transformer‑based models can fabricate realistic images, videos and audio indistinguishable from real content. Viral deepfake videos of celebrities and politicians circulate widely, eroding public confidence. During election seasons, AI‑generated propaganda and personalized disinformation campaigns can target specific demographics, skewing discourse and potentially altering outcomes. For instance, malicious actors can produce fake speeches from candidates or fabricate scandals, exploiting the speed at which social media amplifies content.

The challenge is amplified by cheap and accessible generative tools. Hobbyists can now produce plausible deepfakes with minimal technical expertise. This democratization of synthetic media means misinformation can spread faster than fact‑checking resources can keep up.

Policy Responses & Solutions

Governments and organizations are struggling to catch up. India’s proposed labeling rules mandate that AI‑generated content contain visible watermarks and digital signatures. The EU Digital Services Act requires platforms to remove harmful deepfakes promptly and introduces penalties for non‑compliance. Multi‑stakeholder initiatives recommend a tiered regulation approach, balancing innovation with harm prevention. Digital literacy campaigns teach users to critically evaluate content, while developers are urged to build explainable AI that can identify synthetic media.

Clarifai offers deepfake detection tools leveraging multimodal models to spot subtle artifacts in manipulated images and videos. Combined with content moderation workflows, these tools help social platforms and media organizations flag and remove harmful deepfakes. Additionally, the platform can orchestrate multiple detection models and fuse their outputs to increase accuracy.

Expert Insights

- The Frontiers in AI policy matrix proposes global governance bodies, labeling requirements and coordinated sanctions to curb disinformation. It emphasizes that technical countermeasures must be coupled with education and regulation.

- Brookings scholars warn that while existential AI risks grab headlines, policymakers must prioritize urgent harms like deepfakes and disinformation.

- Reuters reporting on India’s labeling rules highlights how visible markers could become a global standard for deepfake regulation.

- Clarifai’s stance: We view disinformation as a threat not only to society but also to responsible AI adoption. Our platform supports content verification pipelines that cross‑check multimedia content against trusted databases and provide confidence scores that can be fed back to human moderators.

Environmental Impact & Sustainability

Quick Summary: Why does AI have a large environmental footprint? Training and running AI models require significant electricity and water, with data centers consuming up to 1,050 TWh by 2026. Large models like GPT‑3 emit hundreds of tons of CO₂ and require large volumes of water for cooling. Sustainable AI practices must become the norm.

The Energy and Water Cost of AI

AI computations are resource‑intensive. Global data center electricity consumption was estimated at 460 terawatt‑hours in 2022 and could exceed 1,000 TWh by 2026. Training a single large language model, such as GPT‑3, consumes around 1,287 MWh of electricity and emits 552 tons of CO₂. These emissions are comparable to driving dozens of passenger cars for a year.
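A quick back‑of‑the‑envelope check helps put these figures in context. The Python sketch below derives two numbers implied by the paragraph above: the carbon intensity of the electricity behind the GPT‑3 estimate, and the compound annual growth needed to go from 460 TWh in 2022 to 1,000 TWh in 2026. It assumes the tons are metric tons and that growth is smooth year over year. Neither assumption comes from the article.

```python
# Figures quoted in the text above.
TRAINING_ENERGY_MWH = 1_287    # electricity for one GPT-3 training run
TRAINING_EMISSIONS_T = 552     # CO2 emitted (assumed to be metric tons)
DATA_CENTER_TWH_2022 = 460
DATA_CENTER_TWH_2026 = 1_000


def implied_carbon_intensity(emissions_tons, energy_mwh):
    """kg CO2 per kWh; tons->kg and MWh->kWh both scale by 1000, so they cancel."""
    return (emissions_tons * 1000) / (energy_mwh * 1000)


def compound_annual_growth(start, end, years):
    """Constant yearly growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


intensity = implied_carbon_intensity(TRAINING_EMISSIONS_T, TRAINING_ENERGY_MWH)
growth = compound_annual_growth(DATA_CENTER_TWH_2022, DATA_CENTER_TWH_2026, 2026 - 2022)

print(f"{intensity:.2f} kg CO2 per kWh")     # about 0.43
print(f"{growth:.0%} growth per year")       # about 21%
```

Because emissions scale with the carbon intensity of the grid, the same training run on a cleaner grid would emit proportionally less.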

Data centers also require copious water for cooling. Some hyperscale facilities use as much as 22 million liters of potable water per day. When AI workloads are deployed in low‑ and middle‑income countries (LMICs), they can strain fragile electrical grids and water supplies. AI expansions in agritech and manufacturing may conflict with local water needs and contribute to environmental injustice.

Towards Sustainable AI

Mitigating AI’s environmental footprint involves several strategies. Green software engineering can improve algorithmic efficiency by reducing training rounds, using sparse models and optimizing code. Companies should power data centers with renewable energy and implement liquid cooling or heat reuse systems. Lifecycle metrics such as the AI Energy Score and Software Carbon Intensity provide standardized ways to measure and compare energy use. Clarifai allows developers to run local models on energy‑efficient hardware and orchestrate workloads across different environments (cloud, on‑premise) to optimize for carbon footprint.
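As an illustration of one of the metrics named above, the Green Software Foundation defines Software Carbon Intensity as SCI = ((E × I) + M) per R, where E is energy consumed, I is the carbon intensity of that energy, M is embodied hardware emissions and R is a functional unit such as one inference request. The Python sketch below applies that formula to two hypothetical deployments. All input numbers are invented for the example and describe no real system.

```python
def software_carbon_intensity(energy_kwh, grid_intensity_g_per_kwh, embodied_g, functional_units):
    """SCI = ((E * I) + M) per R, in grams of CO2-equivalent per functional unit."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational_g = energy_kwh * grid_intensity_g_per_kwh
    return (operational_g + embodied_g) / functional_units


# Hypothetical workload: one million inference requests on the same hardware,
# served from a fossil-heavy grid versus a mostly renewable one.
REQUESTS = 1_000_000
fossil_heavy = software_carbon_intensity(
    energy_kwh=120, grid_intensity_g_per_kwh=400, embodied_g=6_000, functional_units=REQUESTS
)
mostly_renewable = software_carbon_intensity(
    energy_kwh=120, grid_intensity_g_per_kwh=50, embodied_g=6_000, functional_units=REQUESTS
)

print(f"{fossil_heavy:.3f} g CO2e per request")      # 0.054
print(f"{mostly_renewable:.3f} g CO2e per request")  # 0.012
```

Normalizing by a functional unit is what makes the score comparable across deployments of different sizes. It is also why moving a workload to a cleaner grid shows up directly in the metric.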

Expert Insights

- MIT researchers highlight that generative AI’s inference may soon dominate energy consumption, calling for comprehensive assessments that include both training and deployment. They advocate for “systematic transparency” about energy and water usage.

- IFPRI analysts warn that deploying AI infrastructure in LMICs may compromise food and water security, urging policymakers to evaluate trade‑offs.

- NTT DATA’s white paper proposes metrics like AI Energy Score and Software Carbon Intensity to guide sustainable development and calls for circular‑economy hardware design.

- Clarifai’s commitment: We support sustainable AI by offering energy‑efficient inference options and enabling customers to choose renewable‑powered compute. Our orchestration platform can automatically schedule resource‑intensive training on greener data centers and adjust based on real‑time energy prices.

Job Displacement & Economic Inequality

Quick Summary: Will AI cause mass unemployment or widen inequality? AI automation could replace up to 40 % of jobs by 2025, hitting entry‑level positions hardest. Without proactive policies, the benefits of automation may accrue to a few, increasing inequality. Upskilling and social safety nets are essential.

The Landscape of Automation

AI automates tasks across manufacturing, logistics, retail, journalism, law and finance. Analysts estimate that nearly 40 % of jobs could be automated by 2025, with entry‑level administrative roles seeing declines of around 35 %. Robotics and AI have already replaced certain warehouse jobs, while generative models threaten to displace routine writing tasks.

The distribution of these effects is uneven. Low‑skill and repetitive jobs are more susceptible, while creative and strategic roles may persist but require new skills. Without intervention, automation may deepen economic inequality, particularly affecting younger workers, women and people in developing economies.

Mitigation & Solutions

Mitigating job displacement involves education and policy interventions. Governments and companies must invest in reskilling and upskilling programs to help workers transition into AI‑augmented roles. Creative industries can focus on human‑AI collaboration rather than replacement. Policies such as universal basic income (UBI) pilots, targeted unemployment benefits or “robot taxes” can cushion the economic shocks. Companies should commit to redeploying workers rather than laying them off. Clarifai’s training courses on AI and machine learning help organizations upskill their workforce, and the platform’s model orchestration streamlines integration of AI with human workflows, preserving meaningful human roles.

Expert Insights

- Forbes analysts predict governments may require companies to reinvest savings from automation into workforce development or social programs.

- The Stanford AI Index Report notes that while AI adoption is accelerating, responsible AI ecosystems are still emerging and standardized evaluations are rare. This implies a need for human‑centric metrics when evaluating automation.

- Clarifai’s approach: We advocate for co‑augmentation, meaning AI is used to augment rather than replace workers. Our platform allows companies to deploy models as co‑pilots with human supervisors, ensuring that humans remain in the loop and that skills transfer occurs.

Mental Health, Creativity & Human Agency

Quick Summary: How does AI affect mental health and our creative agency? While AI chatbots can offer companionship or therapy, they can also misjudge mental‑health issues, perpetuate stigma and erode critical thinking. Overreliance on AI may reduce creativity and lead to “brain rot.” Human oversight and digital mindfulness are key.

AI Therapy and Mental Health Risks

AI‑driven mental‑health chatbots offer accessibility and anonymity. Yet researchers at Stanford warn that these systems may provide inappropriate or harmful advice and exhibit stigma in their responses. Because models are trained on internet data, they may replicate cultural biases around mental illness or suggest dangerous interventions. Additionally, the illusion of empathy may prevent users from seeking professional help. Prolonged reliance on chatbots can erode interpersonal skills and human connection.

Creativity, Attention and Human Agency

Generative models can co‑write essays, generate music and even paint. While this democratizes creativity, it also risks diminishing human agency. Studies suggest that heavy use of AI tools may reduce critical thinking and creative problem‑solving. Algorithmic recommendation engines on social platforms can create echo chambers, decreasing exposure to diverse ideas and harming mental well‑being. Over time, this may lead to what some researchers call “brain rot,” characterized by decreased attention span and diminished curiosity.

Mitigation & Solutions

Mental‑health applications must include human supervisors, such as licensed therapists reviewing chatbot interactions and stepping in when needed. Regulators should certify mental‑health AI and require rigorous testing for safety. Users can practice digital mindfulness by limiting reliance on AI for decisions and preserving creative spaces free from algorithmic interference. AI literacy programs in schools and workplaces can teach critical evaluation of AI outputs and encourage balanced use.

Clarifai’s platform supports fine‑tuning for mental‑health use cases with safeguards, such as toxicity filters and escalation protocols. By integrating models with human review, Clarifai ensures that sensitive decisions remain under human oversight.

Expert Insights

- Stanford researchers Nick Haber and Jared Moore caution that therapy chatbots lack the nuanced understanding needed for mental‑health care and may reinforce stigma if left unchecked. They recommend using LLMs for administrative support or training simulations rather than direct therapy.

- Psychological studies link over‑exposure to algorithmic recommendation systems to anxiety, decreased attention spans and social polarization.

- Clarifai’s viewpoint: We advocate for human‑centric AI that enhances human creativity rather than replacing it. Tools like Clarifai’s model inference service can act as creative partners, offering suggestions while leaving final decisions to humans.

Security, Adversarial Attacks & Weaponization

Quick Summary: How can AI be misused in cybercrime and warfare? AI empowers hackers to craft sophisticated phishing, malware and model‑stealing attacks. It also enables autonomous weapons, bioterrorism and malicious propaganda. Robust security practices, adversarial training and global treaties are essential.

Cybersecurity Threats & Adversarial ML

AI increases the scale and sophistication of cybercrime. Generative models can craft convincing phishing emails that avoid detection. Malicious actors can deploy AI to automate vulnerability discovery or create polymorphic malware that changes its signature to evade scanners. Model‑stealing attacks extract proprietary models through API queries, enabling competitors to copy or manipulate them. Adversarial examples, which are inputs deliberately perturbed to mislead a model, can cause AI systems to misclassify, posing serious risks in critical domains like autonomous driving and medical diagnostics.

Weaponization & Malicious Use

The Center for AI Safety categorizes catastrophic AI risks into malicious use (bioterrorism, propaganda), AI race incentives that encourage cutting corners on safety, organizational risks (data breaches, unsafe deployment), and rogue AIs that deviate from intended goals. Autonomous drones and lethal autonomous weapons (LAWs) could identify and engage targets without human oversight. Deepfake propaganda can incite violence or manipulate public opinion.

Mitigation & Solutions

Security must be built into AI systems. Adversarial training can harden models by exposing them to malicious inputs. Red teaming, in which experts simulate attacks, identifies vulnerabilities before deployment. Robust threat detection models monitor inputs for anomalies. On the policy side, international agreements like an expanded Convention on Certain Conventional Weapons could ban autonomous weapons. Organizations should adopt the NIST Adversarial ML guidelines and implement secure hardware.
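To show what adversarial examples and adversarial training look like in practice, the sketch below uses the fast gradient sign method (FGSM) on a tiny logistic‑regression classifier in plain Python. FGSM is one well‑known attack chosen here for brevity. The model weights, data points and `epsilon` budget are assumptions for illustration, not a recommended configuration.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(weights, bias, x, label, epsilon):
    """Fast gradient sign method: x' = x + epsilon * sign(dLoss/dx).

    For logistic loss the input gradient is (p - label) * w, so no autodiff is needed.
    """
    error = predict(weights, bias, x) - label
    sign = lambda v: (v > 0) - (v < 0)
    return [xi + epsilon * sign(error * w) for xi, w in zip(x, weights)]


def adversarial_train(data, epsilon, lr=0.1, epochs=50):
    """SGD that updates on each clean sample and on its FGSM-perturbed copy."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(weights, bias, x, y, epsilon)
            for sample in (x, x_adv):
                err = predict(weights, bias, sample) - y
                weights = [w - lr * err * s for w, s in zip(weights, sample)]
                bias -= lr * err
    return weights, bias


# 1) Attack: a small perturbation flips a hypothetical fixed model's decision.
fixed_w, fixed_b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_adv = fgsm(fixed_w, fixed_b, x, y, epsilon=0.5)
print(predict(fixed_w, fixed_b, x) > 0.5)      # True  (classified as class 1)
print(predict(fixed_w, fixed_b, x_adv) > 0.5)  # False (flipped by the attack)

# 2) Defense: train on clean and perturbed copies of a toy dataset.
data = [([2.0, 2.0], 1), ([-2.0, -2.0], 0), ([2.0, 1.0], 1), ([-1.0, -2.0], 0)]
w, b = adversarial_train(data, epsilon=0.5)
robust = all(
    (predict(w, b, fgsm(w, b, xi, yi, 0.5)) > 0.5) == (yi == 1) for xi, yi in data
)
print(robust)  # True on this toy data
```

The toy result does not generalize: adversarial training typically trades some clean accuracy for robustness and defends only against the kinds of perturbation it was trained on.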

Clarifai offers model hardening tools, including adversarial example generation and automated red teaming. Our compute orchestration allows developers to run these tests at scale across multiple deployment environments.

Expert Insights

- Center for AI Safety researchers emphasize that malicious use, AI race dynamics and rogue AI could cause catastrophic harm and urge governments to regulate risky technologies.

- The UK government warns that generative AI will amplify digital, physical and political threats and calls for coordinated safety measures.

- Clarifai’s security vision: We believe that the “red team as a service” model will become standard. Our platform includes automated security assessments and integration with external threat intelligence feeds to detect emerging attack vectors.

Safety of Physical Systems & Workplace Injuries

Quick Summary: Are autonomous vehicles and robots safe? Although self‑driving vehicles may be safer than human drivers, evidence is tentative and crashes still occur. Automated workplaces create new injury risks and a liability void. Clear safety standards and compensation mechanisms are needed.

Autonomous Vehicles & Robots

Self‑driving cars and delivery robots are increasingly common. Studies suggest that Waymo’s autonomous taxis crash at slightly lower rates than human drivers, yet they still rely on remote operators. Regulation is fragmented; there is no comprehensive federal standard in the U.S., and only a few states have permitted driverless operations. In manufacturing, collaborative robots (cobots) and automated guided vehicles may cause unexpected injuries if sensors malfunction or software bugs arise.

Workplace Injuries & Liability

The Fourth Industrial Revolution introduces invisible injuries: workers monitoring automated systems may suffer stress from continuous surveillance or repetitive strain, while AI systems may malfunction unpredictably. When accidents occur, it is often unclear who is liable: the developer, the deployer or the operator. The United Nations University notes a responsibility void, with existing labour laws ill‑prepared to assign blame. Proposals include creating an AI liability fund to compensate injured workers and harmonizing cross‑border labour regulations.

Mitigation & Solutions

Ensuring safety requires certification programs for AI‑driven products (e.g., ISO 31000 risk management standards), robust testing before deployment and fail‑safe mechanisms that allow human override. Companies should establish worker compensation policies for AI‑related injuries and adopt transparent reporting of incidents. Clarifai supports these efforts by offering model monitoring and performance analytics that detect unusual behaviour in physical systems.

Expert Insights

- UNU researchers highlight the responsibility vacuum in AI‑driven workplaces and call for international labour cooperation.

- Brookings commentary points out that self‑driving car safety is still aspirational and that consumer trust remains low.

- Clarifai’s contribution: Our platform includes real‑time anomaly detection modules that monitor sensor data from robots and vehicles. If performance deviates from expected patterns, alerts are sent to human supervisors, helping to prevent accidents.
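The idea of alerting when sensor readings deviate from expected patterns can be illustrated with a simple rolling z‑score check. The Python sketch below is a generic example, not Clarifai’s anomaly detection module. The class name, window size, threshold and temperature readings are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that sit far from a rolling baseline of recent values."""

    def __init__(self, window=20, threshold=3.0):
        self.window = window
        self.threshold = threshold          # allowed distance in standard deviations
        self.history = deque(maxlen=window)

    def observe(self, value):
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.history) == self.window:
            baseline = mean(self.history)
            spread = pstdev(self.history)
            if spread > 0 and abs(value - baseline) / spread > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so one spike does not mask the next.
            self.history.append(value)
        return is_anomaly


# Hypothetical motor-temperature readings with a single spike at index 30.
readings = [20.0 + 0.1 * (i % 5) for i in range(30)] + [35.0, 20.1]

detector = RollingAnomalyDetector(window=20, threshold=3.0)
alerts = [i for i, r in enumerate(readings) if detector.observe(r)]
print(alerts)  # [30]
```

In a real deployment the alert would go to a human supervisor rather than a list. The threshold should also be tuned per sensor, since a fixed z‑score cut‑off produces false alarms on noisy signals.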

Accountability, Accountability & Legal responsibility

Fast Abstract: Who’s accountable when AI goes flawed? — Figuring out accountability for AI errors stays unresolved. When an AI system makes a dangerous determination, it’s unclear whether or not the developer, deployer or knowledge supplier needs to be liable. Insurance policies should assign accountability and require human oversight.

The Accountability Hole

AI operates autonomously but is created and deployed by people. When issues go flawed—be it a discriminatory mortgage denial or a car crash—assigning blame turns into advanced. The EU’s upcoming AI Legal responsibility Directive makes an attempt to make clear legal responsibility by reversing the burden of proof and permitting victims to sue AI builders or deployers. Within the U.S., debates round Part 230 exemptions for AI‑generated content material illustrate comparable challenges. With out clear accountability, victims could also be left with out recourse and corporations could also be tempted to externalize accountability.

Proposals for Accountability

Experts argue that humans must remain in the decision loop. That means AI tools should assist, not replace, human judgment. Organizations should implement accountability frameworks that identify the roles responsible for data, model development and deployment. Model cards and algorithmic impact assessments help document the scope and limitations of systems. Legal proposals include establishing AI liability funds similar to vaccine injury compensation schemes.

Clarifai supports accountability by providing audit trails for each model decision. Our platform logs inputs, model versions and decision rationales, enabling internal and external audits. This transparency helps determine responsibility when issues arise.
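As a rough illustration of what an audit trail for model decisions might record, the sketch below logs inputs, model version and rationale for each decision. The hash chaining that makes tampering detectable is an added assumption, not something the article attributes to any platform, and the names `AuditRecord` and `AuditLog` are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One logged model decision (hypothetical schema)."""
    model_id: str
    model_version: str
    inputs: dict
    output: str
    rationale: str
    reviewer: Optional[str] = None  # human who approved, if any
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only log; each entry's hash covers the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, record: AuditRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        payload = json.dumps(asdict(record), sort_keys=True)
        digest = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self.entries.append({"payload": payload, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            expected = hashlib.sha256(
                (prev_hash + entry["payload"]).encode()
            ).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append(AuditRecord("credit-scorer", "1.4.2", {"income": 42000},
                       "denied", "debt-to-income ratio above limit"))
log.append(AuditRecord("credit-scorer", "1.4.2", {"income": 88000},
                       "approved", "all thresholds met", reviewer="j.doe"))
chain_ok = log.verify()
print(chain_ok)
```

An auditor can rerun `verify()` at any time; editing a stored payload after the fact breaks the chain from that entry onward.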

Expert Insights

- Forbes commentary emphasizes that the “buck must stop with a human” and that delegating decisions to AI does not absolve organizations of responsibility.

- The United Nations University suggests establishing an AI liability fund to compensate workers or users harmed by AI and calls for harmonized liability regulations.

- Clarifai’s position: Accountability is a shared responsibility. We encourage users to configure approval pipelines where human decision makers review AI outputs before actions are taken, especially for high-stakes applications.

Lack of Transparency & Explainability (Black Box Problem)

Quick Summary: Why are AI systems often opaque? Many AI models operate as black boxes, making it hard to understand how decisions are made. This opacity breeds distrust and hinders accountability. Explainable AI techniques and regulatory transparency requirements can restore confidence.

The Black Box Challenge

Modern AI models, particularly deep neural networks, are complex and non-linear. Their decision processes are not easily interpretable by humans. Some companies intentionally keep models proprietary to protect intellectual property, further obscuring their operation. In high-risk settings like healthcare or lending, such opacity can prevent stakeholders from questioning or appealing decisions. This problem is compounded when users cannot access training data or model architectures.

Explainable AI (XAI)

Explainability aims to open the black box. Techniques like LIME, SHAP and Integrated Gradients provide post-hoc explanations by attributing a prediction to input features or approximating a model’s local behaviour. Model cards and datasheets for datasets document the model’s training data, performance across demographics and limitations. The DARPA XAI program and NIST explainability guidelines support research on methods to demystify AI. Regulatory frameworks like the EU AI Act require high-risk AI systems to be transparent, and the NIST AI Risk Management Framework encourages organizations to adopt XAI.
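For readers who want to see what a post-hoc attribution looks like in practice, the following is a minimal sketch of Integrated Gradients on a toy logistic model. The weights, inputs and all-zero baseline are made up for illustration, and the gradient is computed analytically rather than through a deep-learning framework. The method integrates the model’s gradient along a straight path from a baseline to the actual input; a useful sanity check is that the attributions sum to roughly the difference between the prediction at the input and at the baseline.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Toy logistic model: F(x) = sigmoid(w . x + b)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def gradient(weights, bias, x):
    """Analytic gradient of F with respect to each input feature."""
    p = predict(weights, bias, x)
    return [p * (1.0 - p) * w for w in weights]


def integrated_gradients(weights, bias, x, baseline, steps=200):
    """Approximate IG_i = (x_i - b_i) * integral over a in [0, 1] of
    dF/dx_i evaluated at b + a * (x - b), using the midpoint rule."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(weights, bias, point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


# Hypothetical model parameters and input
weights, bias = [2.0, -1.0, 0.5], -0.5
x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]

attributions = integrated_gradients(weights, bias, x, baseline)
gap = predict(weights, bias, x) - predict(weights, bias, baseline)
print(attributions, sum(attributions), gap)
```

Here the first and third features push the score up and the second pushes it down, matching the signs of their weights. Libraries such as SHAP or Captum provide production-grade versions of these methods.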

Clarifai’s platform automatically generates model cards for each deployed model, summarizing performance metrics, fairness evaluations and interpretability techniques. This increases transparency for developers and regulators.

Expert Insights

- Forbes experts argue that solving the black-box problem requires both technical innovations (explainability techniques) and legal pressure to force transparency.

- NIST advocates for layered explanations that adapt to different audiences (developers, regulators, end users) and stresses that explainability must not compromise privacy or security.

- Clarifai’s commitment: We champion explainable AI by integrating interpretability frameworks into our model inference services. Users can inspect feature attributions for each prediction and adjust accordingly.

Global Governance, Regulation & Compliance

Quick Summary: Can we harmonize AI regulation across borders? Current laws are fragmented, from the EU AI Act to U.S. executive orders and China’s PIPL, creating a compliance maze. Regulatory lag and jurisdictional fragmentation risk an AI arms race. International cooperation and adaptive sandboxes are necessary.

The Patchwork of AI Regulation

Countries are racing to regulate AI. The EU AI Act establishes risk tiers and strict obligations for high-risk applications. The U.S. has issued executive orders and proposed an AI Bill of Rights, but lacks comprehensive federal legislation. China’s PIPL and draft AI regulations emphasize data localization and security. Brazil’s LGPD, India’s labeling rules and Canada’s proposed Artificial Intelligence and Data Act add to the complexity. Without harmonization, companies face compliance burdens and may seek regulatory arbitrage.

Evolving Trends & Regulatory Lag

Regulation often lags behind technology. As generative models rapidly evolve, policymakers struggle to anticipate future developments. The Frontiers in AI policy recommendations call for tiered regulations, where high-risk AI requires rigorous testing while low-risk applications face lighter oversight. Multi-stakeholder bodies such as the Organisation for Economic Co-operation and Development (OECD) and the United Nations are discussing global standards. Meanwhile, some governments propose AI sandboxes: controlled environments where developers can test models under regulatory supervision.

Mitigation & Solutions

Harmonization requires international cooperation. Instruments and bodies such as the OECD AI Principles and the UN AI Advisory Body can align standards and foster mutual recognition of certifications. Adaptive regulation should allow rules to evolve with technological advances. Compliance frameworks like the NIST AI Risk Management Framework and ISO/IEC 42001 provide baseline guidance. Clarifai assists customers by providing regulatory compliance tools, including templates for documenting impact assessments and flags for regional requirements.

Expert Insights

- The Social Market Foundation advocates a real-options approach: policymakers should proceed cautiously, allowing room to learn and adapt regulations.

- CAIS guidance emphasizes audits and safety research to align AI incentives.

- Clarifai’s viewpoint: We support global cooperation and participate in industry standards bodies. Our compute orchestration platform allows developers to run models in different jurisdictions, complying with local rules and demonstrating best practices.

Intellectual Property, Copyright & Ownership

Quick Summary: Who owns AI-generated content and training data? AI often learns from copyrighted material, raising legal disputes about fair use and compensation. Ownership of AI-generated works is unclear, leaving creators and users in limbo. Opt-out mechanisms and licensing schemes can address these conflicts.

The Copyright Conundrum

AI models train on vast corpora that include books, music, art and code. Artists and authors argue that this constitutes copyright infringement, especially when models generate content in the style of living creators. Several lawsuits have been filed, seeking compensation and control over how data is used. Conversely, developers argue that training on publicly available data constitutes fair use and fosters innovation. Court rulings remain mixed, and regulators are exploring potential solutions.

Ownership of AI-Generated Works

Who owns a work produced by AI? Current copyright frameworks typically require human authorship. When a generative model composes a song or writes an article, it is unclear whether ownership belongs to the user, the developer, or no one. Some jurisdictions (e.g., Japan) allow AI-generated works into the public domain, while others grant rights to the human who prompted the work. This uncertainty discourages investment and innovation.

Mitigation & Solutions

Solutions include opt-out or opt-in licensing schemes that allow creators to exclude their work from training datasets or receive compensation when their work is used. Collective licensing models similar to those used in music royalties could facilitate payment flows. Governments may need to update copyright laws to define AI authorship and clarify liability. Clarifai advocates for transparent data sourcing and supports initiatives that allow content creators to control how their data is used. Our platform provides tools for users to trace data provenance and comply with licensing agreements.

Expert Insights

- Forbes analysts note that court cases on AI and copyright will shape the industry; while some rulings allow AI to train on copyrighted material, others point toward more restrictive interpretations.

- Legal scholars propose new “AI rights” frameworks where AI-generated works receive limited protection but also require licensing fees for training data.

- Clarifai’s position: We support ethical data practices and encourage developers to respect artists’ rights. By offering dataset management tools that track origin and license status, we help users comply with emerging copyright obligations.

Organizational Policies, Governance & Ethics

Quick Summary: How should organizations govern internal AI use? Without clear policies, employees may deploy untested AI tools, leading to privacy breaches and ethical violations. Organizations need codes of conduct, ethics committees, training and third-party audits to ensure responsible AI adoption.

The Need for Internal Governance

AI is not only built by tech companies; organizations across sectors adopt AI for HR, marketing, finance and operations. However, employees may experiment with AI tools without understanding their implications. This can expose companies to privacy breaches, copyright violations and reputational damage. Without clear guidelines, shadow AI emerges as employees use unapproved models, leading to inconsistent practices.

Ethical Frameworks & Policies

Organizations should implement codes of conduct that define acceptable AI uses and incorporate ethical principles like fairness, accountability and transparency. AI ethics committees can oversee high-impact projects, while incident reporting systems ensure that issues are surfaced and addressed. Third-party audits verify compliance with standards like ISO/IEC 42001 and the NIST AI RMF. Employee training programs can build AI literacy and empower staff to identify risks.

Clarifai assists organizations by offering governance dashboards that centralize model inventories, track compliance status and integrate with corporate risk systems. Our local runners enable on-premise deployment, mitigating unauthorized cloud usage and enabling consistent governance.

Expert Insights

- ThoughtSpot’s guide recommends continuous monitoring and data audits to ensure AI systems stay aligned with corporate values.

- Forbes analysis warns that failure to implement organizational AI policies could result in lost trust and legal liability.

- Clarifai’s perspective: We emphasize education and accountability within organizations. By integrating our platform’s governance features, businesses can maintain oversight over AI initiatives and align them with ethical and legal requirements.

Existential & Long-Term Risks

Quick Summary: Could super-intelligent AI end humanity? Some fear that AI may surpass human control and cause extinction. Current evidence suggests AI progress is slowing and urgent harms deserve more attention. Nonetheless, alignment research and global coordination remain crucial.

The Debate on Existential Risk

The concept of super-intelligent AI, capable of recursive self-improvement and unbounded growth, raises concerns about existential risk. Thinkers worry that such an AI could develop goals misaligned with human values and act autonomously to achieve them. However, some scholars argue that current AI progress has slowed and that the evidence for imminent super-intelligence is weak. They contend that focusing on long-term, hypothetical risks distracts from pressing issues like bias, disinformation and environmental impact.

Preparedness & Alignment Research

Even if the likelihood of existential risk is low, the impact would be catastrophic. Therefore, alignment research, which aims to ensure that advanced AI systems pursue human-compatible goals, should continue. The Future of Life Institute’s open letter called for a pause on training systems more powerful than GPT-4 until safety protocols are in place. The Center for AI Safety lists rogue AI and AI race dynamics as areas requiring attention. Global coordination can ensure that no single actor unilaterally develops unsafe AI.

Expert Insights

- Future of Life Institute signatories, including prominent scientists and entrepreneurs, urge policymakers to prioritize alignment and safety research.

- Brookings analysis argues that resources should focus on immediate harms while acknowledging the need for long-term safety research.

- Clarifai’s position: We support openness and collaboration in alignment research. Our model orchestration platform allows researchers to experiment with safety techniques (e.g., reward modeling, interpretability) and share findings with the broader community.

Domain-Specific Challenges & Case Studies

Quick Summary: How do AI risks differ across industries? AI presents unique opportunities and pitfalls in finance, healthcare, manufacturing, agriculture and creative industries. Each sector faces distinct biases, safety concerns and regulatory demands.

Finance

AI in finance speeds up credit decisions, fraud detection and algorithmic trading. Yet it also introduces bias in credit scoring, leading to unfair loan denials. Regulatory compliance is complicated by SEC proposals and the EU AI Act, which classifies credit scoring as high-risk. Ensuring fairness requires continuous monitoring and bias testing, while protecting consumers’ financial data requires robust cybersecurity. Clarifai’s model orchestration allows banks to integrate multiple scoring models and cross-validate them to reduce bias.
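One simple form of the bias testing mentioned above is to compare approval rates across groups. The sketch below computes two common group-fairness measures on made-up loan decisions: the demographic parity difference and the disparate impact ratio. The 0.8 cut-off is the "four-fifths" rule of thumb borrowed from U.S. employment guidance and is used here only as an illustrative assumption; the function names and the data are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {g: a / t for g, (a, t) in counts.items()}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved anywhere, so no disparity to measure
    return min(rates.values()) / highest


# Made-up decisions: group A has 8 of 10 approved, group B has 5 of 10.
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)

rates = approval_rates(decisions)
dp_gap = demographic_parity_difference(rates)
di_ratio = disparate_impact_ratio(rates)
needs_review = di_ratio < 0.8  # illustrative four-fifths threshold
print(rates, dp_gap, di_ratio, needs_review)
```

A flagged result is a prompt for investigation, not proof of discrimination; approval rates can differ for legitimate reasons, and other metrics (such as equalized odds) may be more appropriate depending on the use case.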

Healthcare

In healthcare, AI diagnostics promise early disease detection but carry the risk of systemic bias. A widely cited case involved a risk-prediction algorithm that misjudged Black patients’ health needs because it used healthcare spending as a proxy. Algorithmic bias can lead to misdiagnoses, legal liability and reputational damage. Regulatory frameworks such as the FDA’s Software as a Medical Device guidelines and the EU Medical Device Regulation require evidence of safety and efficacy. Clarifai’s platform offers explainable AI and privacy-preserving processing for healthcare applications.

Manufacturing

Visual AI transforms manufacturing by enabling real-time defect detection, predictive maintenance and generative design. Voxel51 reports that predictive maintenance reduces downtime by up to 50% and that AI-based quality inspection can analyze parts in milliseconds. However, unsolved problems include edge computation latency, cybersecurity vulnerabilities and human-robot interaction risks. Standards like ISO 13485 and IEC 61508 guide safety, and AI-specific guidelines (e.g., the EU Machinery Regulation) are emerging. Clarifai’s computer vision APIs, integrated with edge computing, help manufacturers deploy models on-site, reducing latency and improving reliability.

Agriculture

AI facilitates precision agriculture, optimizing irrigation and crop yields. However, deploying data centers and sensors in low-income countries can strain local energy and water resources, exacerbating environmental and social challenges. Policymakers must balance technological benefits with sustainability. Clarifai supports agricultural monitoring via satellite imagery analysis but encourages clients to consider environmental footprints when deploying models.

Creative Industries

Generative AI disrupts art, music and writing by producing novel content. While this fosters creativity, it also raises copyright questions and the fear of creative stagnation. Artists worry about losing livelihoods and about AI erasing unique human perspectives. Clarifai advocates for human-AI collaboration in creative workflows, providing tools that support artists without replacing them.

Expert Insights

- Lumenova’s finance overview stresses the importance of governance, cybersecurity and bias testing in financial AI.

- Baytech’s healthcare analysis warns that algorithmic bias poses financial, operational and compliance risks.

- Voxel51’s commentary highlights manufacturing’s adoption of visual AI and notes that predictive maintenance can reduce downtime dramatically.

- IFPRI’s analysis stresses the trade-offs of deploying AI in agriculture, especially regarding water and energy.

- Clarifai’s role: Across industries, Clarifai provides domain-tuned models and orchestration that align with industry regulations and ethical considerations. For example, in finance we offer bias-aware credit scoring; in healthcare we provide privacy-preserving vision models; and in manufacturing we enable edge-optimized computer vision.

Organizational & Societal Mental Health (Echo Chambers, Creativity & Community)

Quick Summary: Do recommendation algorithms harm mental health and society? AI-driven recommendations can create echo chambers, increase polarization and reduce human creativity. Balancing personalization with diversity and encouraging digital detox practices can mitigate these effects.

Echo Chambers & Polarization

Social media platforms rely on recommender systems to keep users engaged. These algorithms learn preferences and amplify similar content, often leading to echo chambers where users are exposed only to like-minded views. This can polarize societies, foster extremism and undermine empathy. Filter bubbles also affect mental health: constant exposure to outrage-inducing content increases anxiety and stress.

Creativity & Attention

When algorithms curate every aspect of our information diet, we risk losing creative exploration. People may rely on AI tools for idea generation and thus avoid the productive discomfort of original thinking. Over time, this can result in reduced attention spans and shallow engagement. It is important to cultivate digital habits that include exposure to diverse content, offline experiences and deliberate creativity exercises.

Mitigation & Solutions

Platforms should implement diversity requirements in recommendation systems, ensuring users encounter a variety of perspectives. Regulators can encourage transparency about how content is curated. Individuals can practice digital detox and engage in community activities that foster real-world connections. Educational programs can teach critical media literacy. Clarifai’s recommendation framework incorporates fairness and diversity constraints, helping clients design recommender systems that balance personalization with exposure to new ideas.
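One well-known way to balance personalization with diversity is to re-rank candidates so that each pick is penalized for resembling items already chosen, in the spirit of maximal marginal relevance (MMR). The sketch below is a simplified illustration, not a description of any vendor’s framework: similarity is reduced to "same topic or not", and the items, relevance scores and the trade-off weight `lam` are made up.

```python
def mmr_rerank(candidates, k, lam=0.7):
    """Greedy MMR-style re-ranking.

    candidates: list of (item_id, relevance, topic) tuples.
    lam: 1.0 ranks purely by relevance; lower values favour diversity.
    Similarity is simplified to 1.0 for same topic, 0.0 otherwise.
    """
    remaining = list(candidates)
    selected = []
    while remaining and len(selected) < k:
        def score(item):
            _, relevance, topic = item
            redundancy = max(
                (1.0 if topic == chosen[2] else 0.0 for chosen in selected),
                default=0.0,
            )
            return lam * relevance - (1.0 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return [item_id for item_id, _, _ in selected]


# Hypothetical feed candidates: three similar politics posts score highest.
feed = [
    ("a", 0.95, "politics"),
    ("b", 0.93, "politics"),
    ("c", 0.90, "politics"),
    ("d", 0.70, "science"),
    ("e", 0.60, "sports"),
]

relevance_only = mmr_rerank(feed, k=3, lam=1.0)
diversified = mmr_rerank(feed, k=3, lam=0.7)
print(relevance_only, diversified)
```

With `lam=1.0` the top three slots all go to the same topic; with `lam=0.7` the second and third slots go to other topics. Choosing `lam` is a product decision that trades engagement against exposure to new ideas.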

Expert Insights

- Psychological research links algorithmic echo chambers to increased polarization and anxiety.

- Digital wellbeing advocates recommend practices like screen-free time and mindfulness to counteract algorithmic fatigue.

- Clarifai’s commitment: We emphasize human-centric design in our recommendation models. Our platform offers diversity-aware recommendation algorithms that can reduce echo chamber effects, and we support clients in measuring the social impact of their recommender systems.

Conclusion & Call to Action

The 2026 outlook for artificial intelligence is a study in contrasts. On one hand, AI continues to drive breakthroughs in medicine, sustainability and creative expression. On the other, it poses significant risks and challenges, from algorithmic bias and privacy violations to deepfakes, environmental impacts and job displacement. Responsible development is not optional; it is a prerequisite for realizing AI’s potential.

Clarifai believes that collaborative governance is essential. Governments, industry leaders, academia and civil society must join forces to create harmonized regulations, ethical guidelines and technical standards. Organizations should integrate responsible AI frameworks such as the NIST AI RMF and ISO/IEC 42001 into their operations. Individuals must cultivate digital mindfulness, staying informed about AI’s capabilities and limitations while preserving human agency.

By addressing these challenges head-on, we can harness the benefits of AI while minimizing harm. Continued investment in fairness, privacy, sustainability, security and accountability will pave the way toward a more equitable and human-centric AI future. Clarifai remains committed to providing tools and expertise that help organizations build AI that is trustworthy, transparent and beneficial.

Frequently Asked Questions (FAQs)

Q1. What are the biggest dangers of AI?

The major dangers include algorithmic bias, privacy erosion, deepfakes and misinformation, environmental impact, job displacement, mental-health risks, security threats and lack of accountability. Each of these areas presents unique challenges requiring technical, regulatory and societal responses.

Q2. Can AI truly be unbiased?

It is difficult to create a fully unbiased AI because models learn from historical data that contain societal biases. However, bias can be mitigated through diverse datasets, fairness metrics, audits and continuous monitoring.

Clarifai provides a comprehensive compute orchestration platform that includes fairness testing, privacy controls, explainability tools and security assessments. Our model inference services generate model cards and logs for accountability, and local runners allow data to stay on-premise for privacy and compliance.

Q4. Are deepfakes illegal?

Legality varies by jurisdiction. Some countries, such as India, propose mandatory labeling and penalties for harmful deepfakes. Others are drafting or applying laws (e.g., the EU Digital Services Act) to address synthetic media. Even where legal frameworks are incomplete, deepfakes may violate defamation, privacy or copyright laws.

Q5. Is a super-intelligent AI imminent?

Most experts believe that general super-intelligent AI is still far away and that current AI progress has slowed. While alignment research should continue, urgent attention must focus on current harms like bias, privacy, misinformation and environmental impact.