Can a compact late interaction retriever index once and deliver accurate cross-lingual search with fast inference? Liquid AI released LFM2-ColBERT-350M, a compact late interaction retriever for multilingual and cross-lingual search. Documents can be indexed in one language, queries can be written in many languages, and the system retrieves with high accuracy. The Liquid AI team reports inference speed on par with models that are 2.3 times smaller, which is attributed to the LFM2 backbone. The model is available with a Hugging Face demo and a detailed model card for integration in retrieval augmented generation systems.

What late interaction means and why it matters

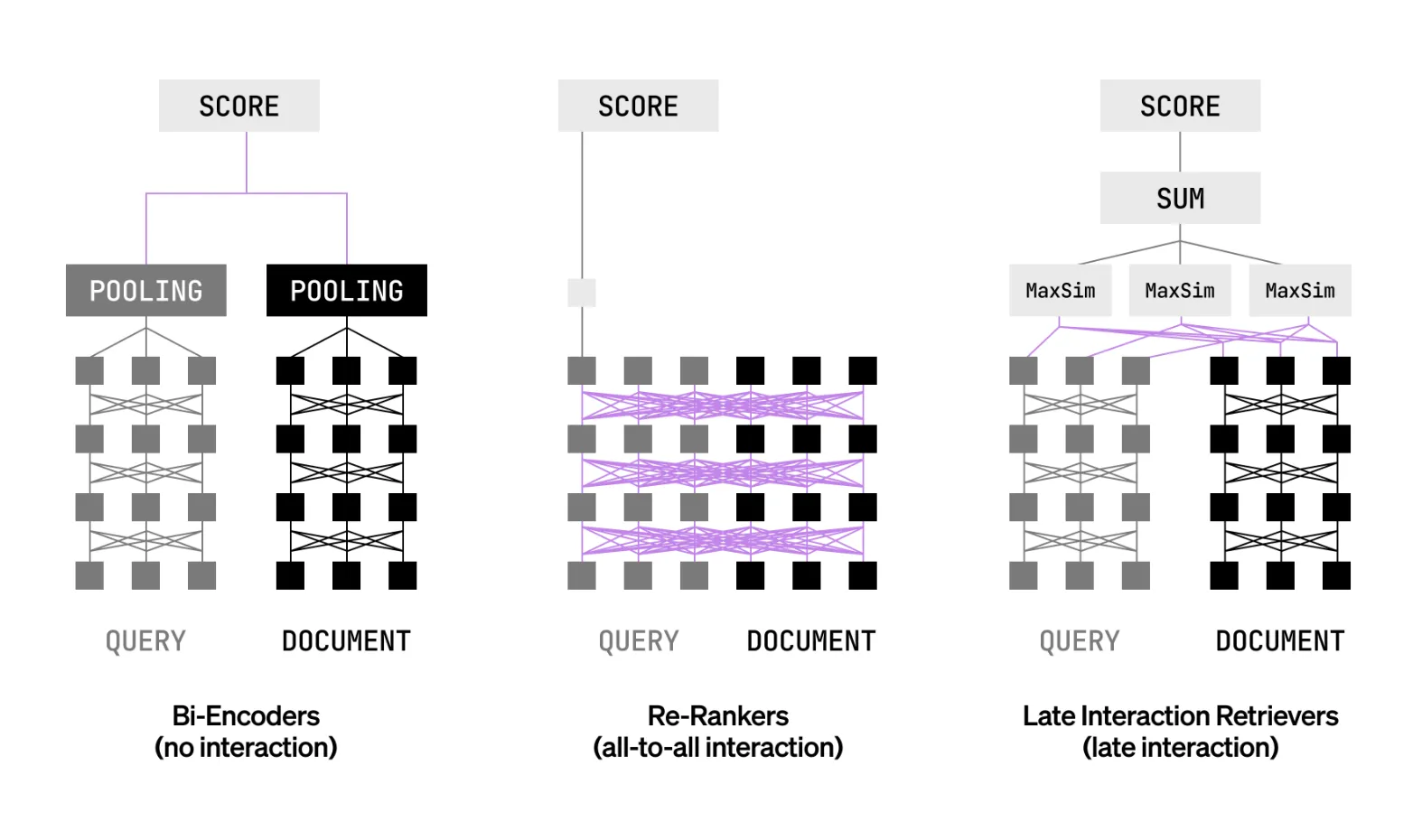

Most production systems use bi-encoders for speed or cross-encoders for accuracy. Late interaction aims to combine both advantages. Queries and documents are encoded separately at the token level. The system compares token vectors at query time using operations such as MaxSim. This preserves fine-grained token interactions without the full cost of joint cross-attention. It allows pre-computation for documents and improves precision at ranking time. It can serve as a first-stage retriever and also as a ranker in one pass.
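To make the scoring step concrete, here is a minimal pure-Python sketch of MaxSim as it is commonly defined for ColBERT-style models: for each query token, take the best cosine similarity against any document token, then sum those maxima. The helper names (`normalize`, `maxsim_score`, `rank_documents`) and the 2-dimensional toy vectors are assumptions for illustration only. They are not Liquid AI's implementation, and real token embeddings would come from the model.

```python
from math import sqrt

def normalize(vec):
    """Scale a vector to unit length so dot products act as cosine similarities."""
    norm = sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def maxsim_score(query_tokens, doc_tokens):
    """Sum, over query tokens, of the best similarity against any document token."""
    q = [normalize(v) for v in query_tokens]
    d = [normalize(v) for v in doc_tokens]
    return sum(max(dot(qv, dv) for dv in d) for qv in q)

def rank_documents(query_tokens, docs):
    """Return (doc_id, score) pairs sorted from best to worst match."""
    scored = [(doc_id, maxsim_score(query_tokens, toks)) for doc_id, toks in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Toy token embeddings, made up for illustration (hypothetical values).
query = [[1.0, 0.0], [0.0, 1.0]]
docs = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    "doc_b": [[1.0, 0.0], [-1.0, 0.0]],
}
ranking = rank_documents(query, docs)
print(ranking)
```

Because the document token vectors do not depend on the query, they can be computed once at indexing time. Only the query is encoded at search time, followed by the comparatively cheap max-and-sum step.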

Model specification

LFM2-ColBERT-350M has 350 million total parameters. There are 25 layers, with 18 convolution blocks, 6 attention blocks, and 1 dense layer. The context length is 32k tokens. The vocabulary size is 65,536. The similarity function is MaxSim. The output dimensionality is 128. Training precision is BF16. The license is LFM Open License v1.0.
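Since late interaction stores one 128-dimensional vector per document token, the output dimensionality above feeds directly into index sizing. The following is a rough planning sketch, not a figure reported by Liquid AI. The corpus size, the average token count, and the 2 bytes per value (16-bit storage) are all assumptions, and real indexes often compress further.

```python
EMBEDDING_DIM = 128  # output dimensionality stated in the specification

def index_bytes(num_docs, avg_tokens_per_doc, bytes_per_value=2):
    """Estimate raw storage for per-token document embeddings (no compression)."""
    return num_docs * avg_tokens_per_doc * EMBEDDING_DIM * bytes_per_value

# Hypothetical corpus: 1 million documents averaging 300 tokens each.
estimate = index_bytes(1_000_000, 300)
estimate_gib = estimate / 2**30
print(f"{estimate} bytes, about {estimate_gib:.1f} GiB")
```

The estimate grows linearly with the number of tokens, so chunking strategy and document length matter more for index size than they do with a single-vector bi-encoder.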

Languages, supported and evaluated

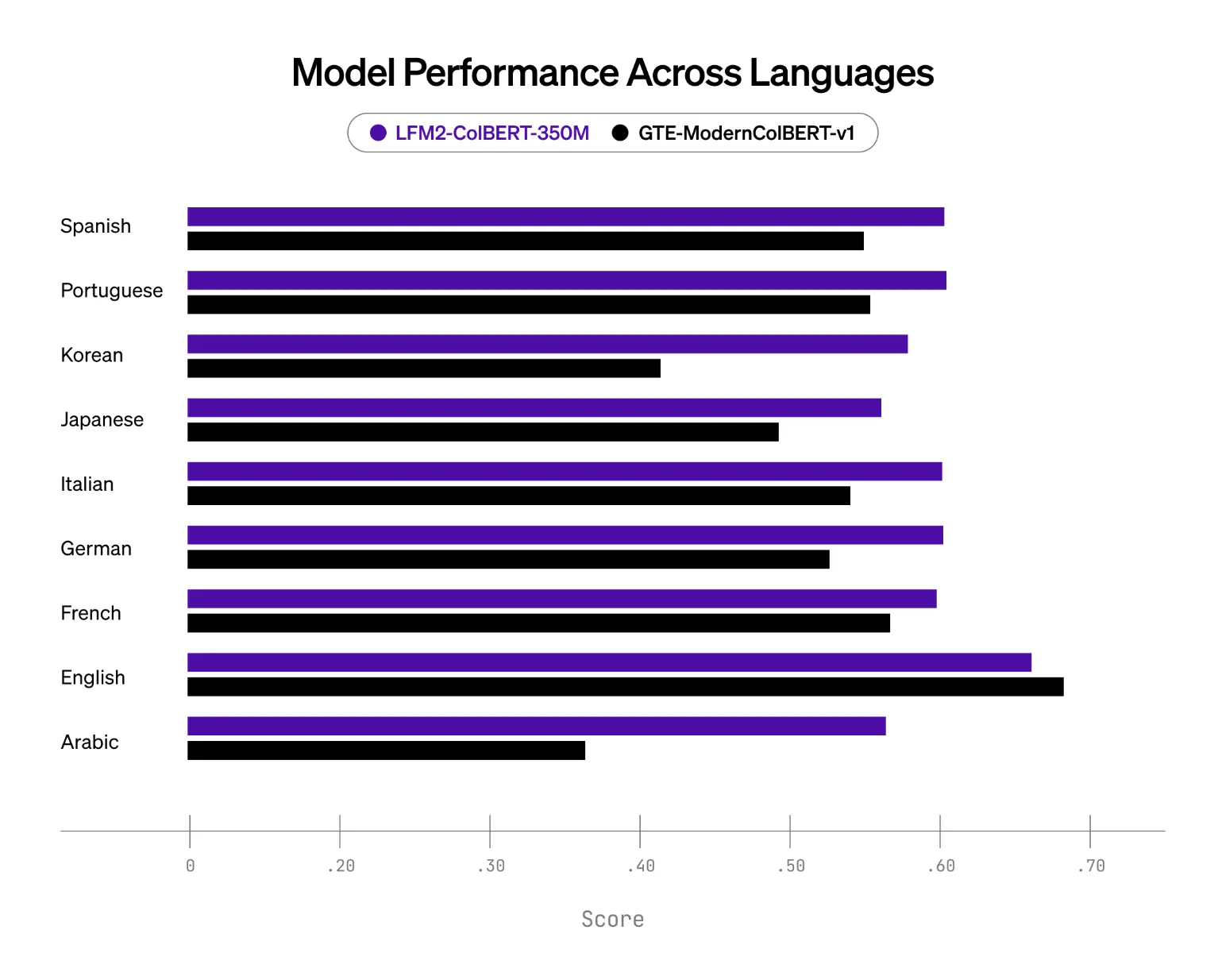

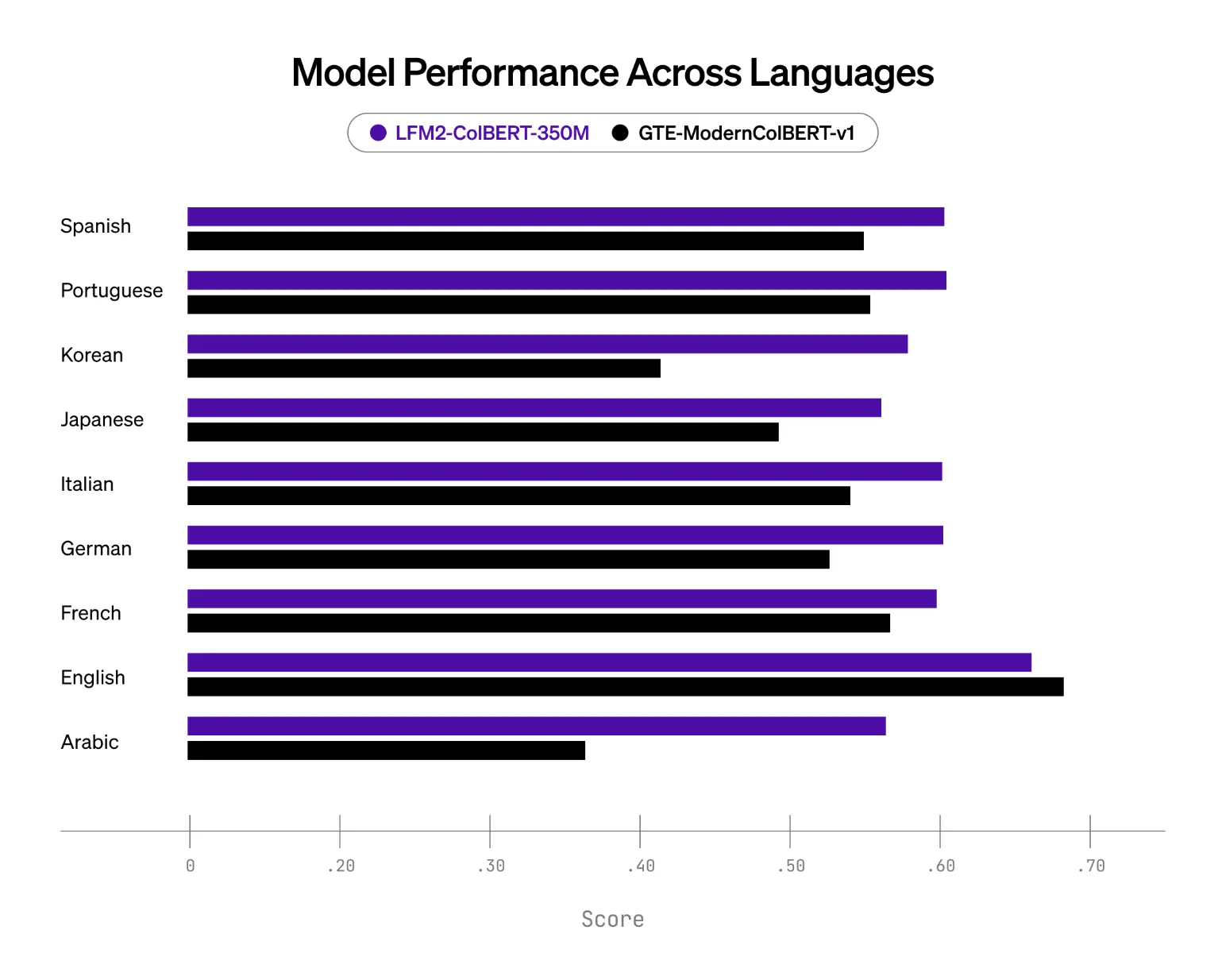

The model supports 8 languages. These are English, Arabic, Chinese, French, German, Japanese, Korean, and Spanish. The evaluation adds Italian and Portuguese, which brings the matrix to 9 languages for cross comparisons of document and query languages. This distinction is relevant when planning deployments that must cover specific customer markets.

Evaluation setup and key results

Liquid AI extends the NanoBEIR benchmark with Japanese and Korean and publishes the extension for reproducibility. In this setup, LFM2-ColBERT-350M shows stronger multilingual capability than the baseline late interaction model in this class, which is GTE-ModernColBERT-v1 at 150M parameters. The largest gains appear in German, Arabic, Korean, and Japanese, while English performance is maintained.

Key Takeaways

- Token-level scoring with MaxSim preserves fine-grained interactions while keeping separate encoders, so document embeddings can be precomputed and queried efficiently.

- Documents can be indexed in one language and retrieved in many. The model card lists 8 supported languages, while evaluations span 9 languages for cross-lingual pairs.

- On the NanoBEIR multilingual extension, LFM2-ColBERT-350M outperforms the prior late-interaction baseline (GTE-ModernColBERT-v1 at 150M) and maintains English performance.

- Inference speed is reported on par with models 2.3× smaller across batch sizes, attributed to the LFM2 backbone.

Editorial Notes

Liquid AI's LFM2-ColBERT-350M applies ColBERT-style late interaction with MaxSim. It encodes queries and documents separately, then scores token vectors at query time, which preserves token-level interactions and enables precomputed document embeddings for scale. It targets multilingual and cross-lingual retrieval, indexing once and querying in many languages, with evaluations described on a NanoBEIR multilingual extension. The Liquid AI team reports inference speed on par with models 2.3 times smaller, attributed to the LFM2 backbone. Overall, late interaction at the nano scale looks production ready for multilingual RAG trials.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.