Using a large language model for the first time often feels like you are holding raw intelligence in your hands. These models tend to write, summarize, and reason extremely well. However, once you build and ship a real product, all the cracks in the model show themselves. It doesn’t remember what you said yesterday, and it starts to make things up when it runs out of context. This isn’t because the model isn’t intelligent. It’s because the model is isolated from the outside world, and it’s constrained by a context window that acts like a small whiteboard. This can’t be overcome with a better prompt; you need an actual context around the model. This is where context engineering comes to the rescue. This article is a comprehensive guide to context engineering, defining the term and describing the processes involved.

The problem no one can escape

LLMs are brilliant but limited in their scope. This is partly due to them having:

- No access to private documents

- No memory of past conversations

- Limited context window

- Hallucination under pressure

- Degradation when the context window gets too big

While some of these limitations are necessary (such as lacking access to private documents), the others (limited memory, hallucination, and a limited context window) are not. This positions context engineering as the solution, not an add-on.

What is Context Engineering?

Context engineering is the process of structuring the entire input provided to a large language model to enhance its accuracy and reliability. It involves structuring and optimizing the prompts so that an LLM gets all the “context” it needs to generate an answer that exactly matches the required output.

What does it offer?

Context engineering is the practice of feeding the model exactly the right data, in the right order, at the right time, using an orchestrated architecture. It’s not about changing the model itself, but about building the bridges that connect it to the outside world: retrieving external data, connecting it to live tools, and giving it a memory so that its responses are grounded in facts, not just its training data. This isn’t limited to the prompt, which is what makes it different from prompt engineering. It’s implemented at the system design level.

Context engineering has less to do with what the user can put inside the prompt, and more to do with the architectural choices the developer makes around the model.

The Building Blocks

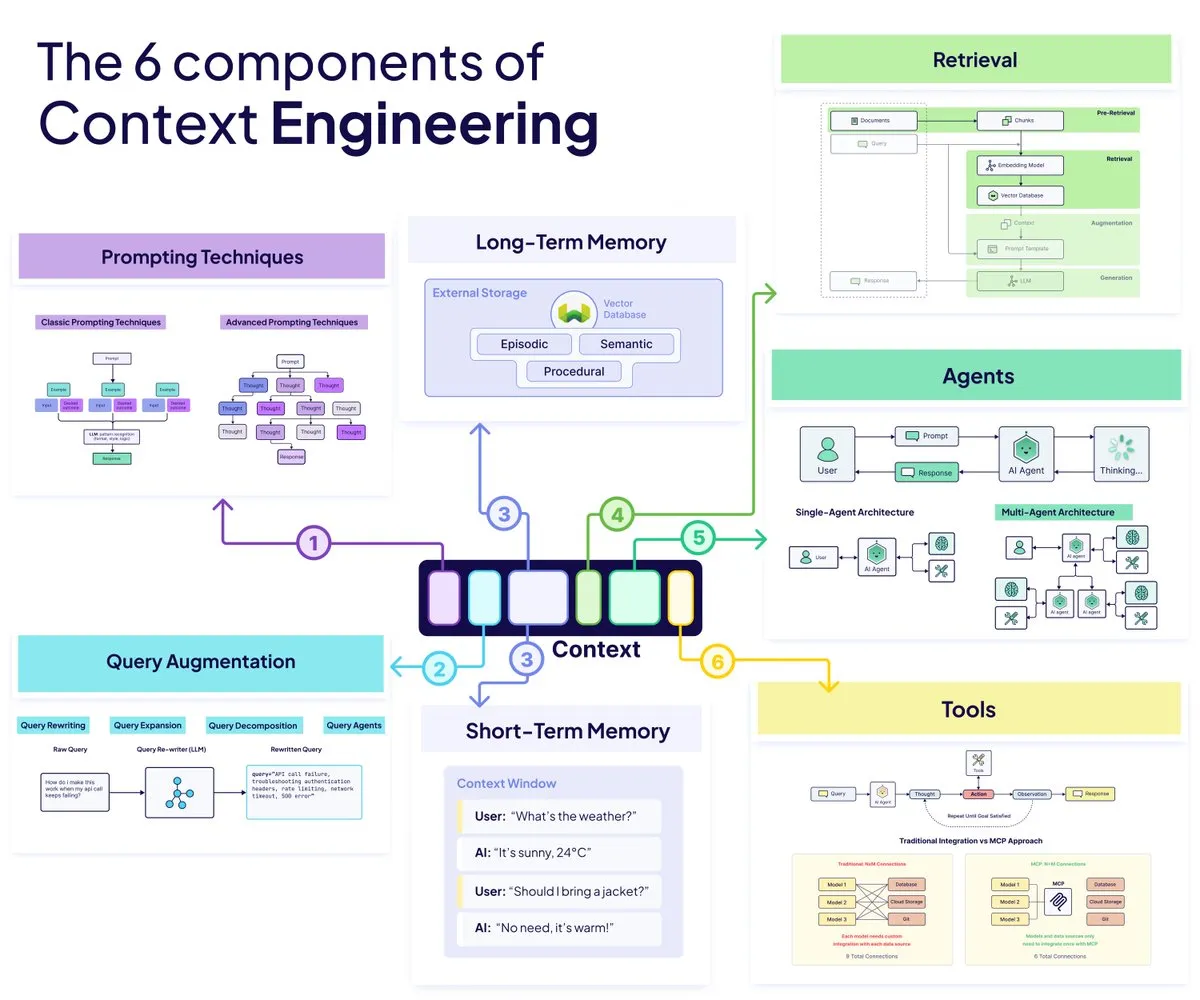

Here are the 6 building blocks of the Context Engineering framework:

1. Agents

AI agents are the part of your system that decides what to do next. They read the situation, pick the right tools, adjust their approach, and make sure the model is not guessing blindly. Instead of a rigid pipeline, agents create a flexible loop where the system can think, act, and correct itself.

- They break down tasks into steps

- They route information where it needs to go

- They keep the whole workflow from collapsing when things change
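
The three responsibilities above can be sketched as a tiny loop. This is only an illustration under stated assumptions: `plan`, `route`, and `run_agent` are hypothetical names, and the keyword matching stands in for decisions that a real agent would delegate to an LLM.

```python
from typing import Callable, Dict, List


def plan(task: str) -> List[str]:
    """Break a task into steps (a rule-based stand-in for an LLM planner)."""
    return [step.strip() for step in task.split(" then ") if step.strip()]


def route(step: str, handlers: Dict[str, Callable[[str], str]]) -> str:
    """Send a step to the first handler whose keyword appears in it."""
    for keyword, handler in handlers.items():
        if keyword in step.lower():
            return handler(step)
    raise LookupError(f"no handler for step: {step!r}")


def run_agent(task: str, handlers: Dict[str, Callable[[str], str]]) -> List[str]:
    """Plan, act, and record failures instead of letting the workflow collapse."""
    results = []
    for step in plan(task):
        try:
            results.append(route(step, handlers))
        except LookupError as err:
            # A step nobody can handle is logged and skipped, not fatal.
            results.append(f"skipped ({err})")
    return results


# Hypothetical handlers; real ones would call retrieval, tools, or a model.
handlers = {
    "search": lambda s: f"searched: {s}",
    "summarize": lambda s: f"summarized: {s}",
}
log = run_agent("search the docs then summarize findings then email the team", handlers)
```

Here the third step has no matching handler, so the loop records it as skipped and still returns the results of the first two steps.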

2. Query Augmentation

Query augmentation cleans up whatever the user throws at the model. Real users are messy, and this layer turns their input into something the system can actually work with. By rewriting, expanding, or breaking the query into smaller parts, you ensure the model is searching for the right thing instead of the wrong thing.

- Rewriting removes noise and adds clarity

- Expansion broadens the search when intent is vague

- Decomposition handles complex multi-question prompts
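
As a rough sketch of these three operations, the snippet below uses simple string rules. In practice each step is often delegated to an LLM; the function names, the `FILLER` word list, and the synonym table are all assumptions made for illustration.

```python
import re
from typing import Dict, List

# Hypothetical filler words to strip; a real system would tune this list.
FILLER = {"hey", "um", "uh", "please"}


def rewrite(query: str) -> str:
    """Rewriting: lowercase the query and drop filler words."""
    words = [w for w in query.lower().split() if w.strip(",.!") not in FILLER]
    return " ".join(words)


def expand(query: str, synonyms: Dict[str, List[str]]) -> List[str]:
    """Expansion: return the query plus one variant per known synonym."""
    variants = [query]
    for word, alternatives in synonyms.items():
        if word in query.split():
            variants += [query.replace(word, alt) for alt in alternatives]
    return variants


def decompose(query: str) -> List[str]:
    """Decomposition: split a multi-question prompt on '?' and 'and'."""
    parts = re.split(r"\?|\band\b", query)
    return [p.strip() for p in parts if p.strip()]


cleaned = rewrite("Hey um, how do I reset my password")
variants = expand("reset my password", {"reset": ["recover", "change"]})
parts = decompose("what is the refund policy and how long does shipping take?")
```

Each variant or sub-question can then be sent to retrieval separately, and the results merged.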

3. Retrieval

Data retrieval via Retrieval-Augmented Generation (RAG) is how you surface the single most relevant piece of information from a huge knowledge base. You chunk documents in a way the model can understand, pull the right slice at the right time, and give the model the facts it needs without overwhelming its context window.

- Chunk size affects both accuracy and understanding

- Pre-chunking speeds things up

- Post-chunking adapts to tricky queries
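
To make chunking and retrieval concrete, here is a minimal sketch of the pre-chunking approach: the document is split once, ahead of time, into overlapping word windows, and the best chunk is picked per query. The word-overlap `score` is a deliberately crude stand-in for embedding similarity, and all names and sample text are assumptions.

```python
from typing import List


def chunk(text: str, size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]


def score(query: str, passage: str) -> int:
    """Count shared lowercase words (a stand-in for vector similarity)."""
    return len(set(query.lower().split()) & set(passage.lower().split()))


def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k highest-scoring chunks for the query."""
    return sorted(chunks, key=lambda c: score(query, c), reverse=True)[:k]


# Hypothetical knowledge-base text.
doc = (
    "Refunds are issued within 14 days of purchase. "
    "Shipping takes 3 to 5 business days. "
    "Support is available by email on weekdays."
)
chunks = chunk(doc)  # done once at ingestion time (pre-chunking)
top = retrieve("how long does shipping take", chunks)
```

Changing `size` and `overlap` shows the trade-off from the list above: smaller chunks match more precisely but carry less surrounding meaning. A post-chunking variant would retrieve whole documents first and call `chunk` at query time instead.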

4. Prompting Techniques

Prompting techniques steer the model’s reasoning once the right information is in front of it. You shape how the model thinks, how it explains its steps, and how it interacts with tools or evidence. The right prompt structure can turn a fuzzy answer into a confident one.

- Chain of Thought encourages stepwise reasoning

- Few-shot examples show the ideal outcome

- ReAct pairs reasoning with real actions
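
A small sketch of how the first two techniques can be combined in one prompt template: retrieved context first, then few-shot examples, then a Chain of Thought cue. The function name, the exact wording of the instructions, and the sample data are assumptions; ReAct is not shown because it also needs a tool-execution loop.

```python
from typing import List, Tuple


def build_prompt(question: str, context: List[str], examples: List[Tuple[str, str]]) -> str:
    """Assemble context, few-shot examples, and a step-by-step cue."""
    lines = ["Answer using only the context below."]
    lines += [f"Context: {c}" for c in context]
    # Few-shot: each (question, answer) pair shows the desired output shape.
    for q, a in examples:
        lines += [f"Q: {q}", f"A: {a}"]
    # Chain of Thought: end with a cue that invites stepwise reasoning.
    lines += [f"Q: {question}", "A: Let's think step by step."]
    return "\n".join(lines)


prompt = build_prompt(
    question="How long does shipping take?",
    context=["Shipping takes 3 to 5 business days."],
    examples=[("Are refunds available?", "Yes, within 14 days of purchase.")],
)
```

The resulting string is what would be sent to the model; the ordering of the parts is a design choice, not a requirement.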

5. Memory

Memory gives your system continuity. It keeps track of what happened earlier, what the user prefers, and what the agent has learned so far. Without memory, your model resets every time. With it, the system becomes smarter, faster, and more personal.

- Short-term memory lives inside the context window

- Long-term memory stays in external storage

- Working memory supports multi-step flows
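
The three kinds of memory can be sketched with plain Python containers. This is an assumption-laden illustration: the `Memory` class is hypothetical, the bounded `deque` imitates a limited context window, and the dictionary stands in for an external store such as a database.

```python
from collections import deque
from typing import Deque, Dict, List


class Memory:
    """Toy memory with short-term, long-term, and working parts."""

    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent messages fit, like a context window.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: stand-in for external storage that survives sessions.
        self.long_term: Dict[str, str] = {}
        # Working: scratchpad for the current multi-step task.
        self.working: Dict[str, str] = {}

    def observe(self, message: str) -> None:
        self.short_term.append(message)

    def remember(self, key: str, fact: str) -> None:
        self.long_term[key] = fact

    def context(self, keys: List[str]) -> List[str]:
        """Combine requested long-term facts with recent messages."""
        facts = [self.long_term[k] for k in keys if k in self.long_term]
        return facts + list(self.short_term)


memory = Memory(short_term_size=2)
for message in ["hi", "my order is late", "order id 123"]:
    memory.observe(message)
memory.remember("language", "User prefers replies in French")
memory.working["current_step"] = "look up order"
ctx = memory.context(["language"])
```

With a short-term size of 2, the oldest message ("hi") falls out of the window, while the long-term preference is still available on request.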

6. Tools

Tools let the model reach beyond text and interact with the real world. With the right toolset, the model can fetch data, execute actions, or call APIs instead of guessing. This turns an assistant into an actual operator that can get things done.

- Function calling creates structured actions

- MCP (Model Context Protocol) standardizes how models access external systems

- Good tool descriptions prevent errors
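
The idea behind function calling can be sketched without any particular vendor API: each tool is registered with a description and a parameter list, the model emits a structured JSON call, and the application validates and executes it. Everything here (the `tool` decorator, the `dispatch` function, the call format, and the order data) is a hypothetical illustration and not the MCP wire format.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, parameters: Dict[str, str]) -> Callable:
    """Register a function together with the description the model would see."""
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"description": description, "parameters": parameters, "fn": fn}
        return fn
    return register


@tool("get_order_status", "Look up the shipping status of an order by its ID.", {"order_id": "string"})
def get_order_status(order_id: str) -> str:
    orders = {"A100": "shipped"}  # hypothetical in-memory data
    return orders.get(order_id, "unknown")


def dispatch(call_json: str) -> str:
    """Validate a structured call and run the matching tool."""
    call = json.loads(call_json)
    spec = TOOLS.get(call["name"])
    if spec is None:
        return f"error: unknown tool {call['name']}"
    missing = set(spec["parameters"]) - set(call["arguments"])
    if missing:
        return f"error: missing arguments {sorted(missing)}"
    return spec["fn"](**call["arguments"])


ok = dispatch('{"name": "get_order_status", "arguments": {"order_id": "A100"}}')
bad = dispatch('{"name": "get_order_status", "arguments": {}}')
```

Returning an error string instead of raising lets the model see what went wrong and retry, which is one reason clear descriptions and parameter lists matter.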

How do they work together?

Picture a modern AI app:

- User sends a messy query

- Query agent rewrites it

- Retrieval system finds evidence via smart chunking

- Agent validates information

- Tools pull real-time external data

- Memory stores and retrieves context

In prose, the flow looks like this:

The user sends a messy query. The query agent receives it and rewrites it for clarity. The RAG system finds evidence for the query via smart chunking. The agent receives this information and checks its authenticity and integrity. The validated information is then used to make the appropriate calls via MCP to pull real-time data. The memory stores the information and context obtained during this retrieval and cleaning.

This stored information can be retrieved later to get back on track whenever relevant context is needed. This avoids redundant processing and makes already-processed information available for future use.
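
The whole flow can be compressed into one function to show how the pieces hand off to each other. This is a toy sketch, not a reference design: `build_context` is a hypothetical name, word overlap stands in for retrieval, duplicate removal stands in for validation, and a dictionary stands in for memory.

```python
from typing import Callable, Dict, List

calls: List[str] = []  # records tool invocations so reuse can be observed


def status() -> str:
    """Hypothetical live tool; a real one would call an external system."""
    calls.append("status")
    return "status: in transit"


def build_context(
    query: str,
    docs: List[str],
    tools: Dict[str, Callable[[], str]],
    memory: Dict[str, List[str]],
) -> List[str]:
    clean = " ".join(query.lower().split())      # rewrite the messy query
    if clean in memory:                          # reuse earlier work
        return memory[clean]
    terms = set(clean.split())
    evidence = [d for d in docs if terms & set(d.lower().split())]  # retrieve
    validated = list(dict.fromkeys(evidence))    # validate: drop duplicates
    live = [fn() for name, fn in tools.items() if name in terms]    # tools
    memory[clean] = validated + live             # store for future queries
    return memory[clean]


docs = ["Orders ship within 2 days", "Orders ship within 2 days", "Returns are free"]
memory: Dict[str, List[str]] = {}
first = build_context("What is the   shipping STATUS of orders", docs, {"status": status}, memory)
second = build_context("what is the shipping status of orders", docs, {"status": status}, memory)
```

The second call normalizes to the same query, so it is answered from memory and the tool is not invoked again.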

Real-world examples

Here are some real-world applications of a context engineering architecture:

- Customer Support Assistants: Agents revise vague customer inquiries, extract product-specific documents, check past tickets in long-term memory, and use tools to fetch order status. The model doesn’t guess; it responds with known context.

- Internal Knowledge Assistants for Teams: Employees ask messy, half-formed questions. Query augmentation cleans them up, retrieval finds the right policy or technical document, and memory recalls past conversations. The agent then serves as a reliable internal layer for search and reasoning.

- AI Research Co-Pilots: The system breaks down complex research questions into their component parts, retrieves relevant papers using semantic or hierarchical chunking, and synthesizes the results. Tools can access live datasets, while memory keeps track of earlier hypotheses, notes, and so on.

- Workflow Automation Agents: The agent plans a task with many steps, calls APIs, checks calendars, updates databases, and uses long-term memory to personalize the action. Retrieval brings the appropriate rules or SOPs into the workflow to keep it legal and accurate.

- Domain-Specific Assistants: Retrieval pulls in verified documents, guidelines, or regulations. Memory stores earlier cases. Tools access live systems or datasets. Query rewriting reduces user ambiguity to keep the model grounded and safe.

What this means for the future of AI engineering

With context engineering, the focus is no longer on an ongoing conversation with a model, but on designing the ecosystem of context that enables the model to perform intelligently. This isn’t just about prompts, retrieval tricks, or cobbled-together architecture. It’s a tightly coordinated system where agents decide what to do, queries get cleaned up, the right facts show up at the right time, memory carries past context forward, and tools let the model act in the real world.

These components will continue to grow and evolve, though. What will define the more successful models, apps, and tools is intentional, deliberate context design. Bigger models alone won’t get us there, but better engineering will. The future will belong to the builders who think about the environment just as much as they think about the models.

Frequently Asked Questions

A. It fixes the disconnect between an LLM’s intelligence and its limited awareness. By controlling what information reaches the model and when, you avoid hallucination, missing context, and the blind spots that break real-world AI apps.

A. Prompt engineering shapes instructions. Context engineering shapes the entire system around the model, including retrieval, memory, tools, and query handling. It’s an architectural discipline, not a prompt tweak.

A. Bigger windows still get noisy, slow, and unreliable. Models lose focus, mix unrelated details, and hallucinate more. Smart context beats sheer size.

A. No. It improves any AI application that needs memory, tool use, multi-step reasoning, or interaction with private or dynamic data.

A. Strong system design thinking, plus familiarity with agents, RAG pipelines, memory stores, and tool integration. The goal is orchestrating information, not just calling an LLM.
