Introduction

In today’s digital economy, organizations of every size depend on cloud platforms to deliver scalable applications, crunch data and support remote teams. Yet running your own cloud infrastructure is complex and resource‑intensive. You need to architect resilient networks, patch servers at odd hours and maintain compliance across multiple jurisdictions. Managed cloud has emerged as a way to offload this burden to specialists. Market analysts estimate that the global cloud‑managed services market was worth USD 134.44 billion in 2024 and could reach USD 305.16 billion by 2030, expanding at a 14.7 % compound annual growth rate. Rising complexity, skill shortages and the need for cost optimization are fueling this shift.
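As a quick sanity check on those market figures, the growth rate implied by the two endpoints can be recomputed. This is a minimal sketch that assumes a six-year horizon (2024 to 2030); analysts often measure CAGR from a later base year, which may explain a small gap from the quoted 14.7 %. The function names are illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text, in USD billions; the 6-year span is an assumption.
rate = implied_cagr(134.44, 305.16, 6)
projected = project(134.44, 0.147, 6)

print(f"Implied CAGR 2024-2030: {rate:.2%}")
print(f"134.44 compounded at 14.7% for 6 years: {projected:.1f}")
```

Under that assumption the implied rate comes out slightly below the quoted figure, and compounding at 14.7 % lands slightly above the 2030 estimate, so the numbers are consistent to within rounding.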

This guide explains what managed cloud means, how it differs from other cloud models and why it’s becoming the default for many AI‑enabled projects. You’ll find practical insights on choosing a provider, mitigating risks and taking advantage of emerging trends such as AI‑driven operations and multi‑cloud strategies. Where relevant, the article illustrates how Clarifai’s compute orchestration, model inference and local runner features fit into the picture. The goal is to give you an editorial‑style overview that delivers both depth and clarity.

Quick Digest

- Managed cloud defined: It’s a model where a third‑party service provider manages and operates your cloud infrastructure, applications and services. Providers handle provisioning, security, monitoring and optimization so your team can focus on innovation.

- Service models: Managed cloud spans infrastructure (IaaS), platforms (PaaS), applications (SaaS), bare‑metal‑as‑a‑service and storage‑as‑a‑service. Understanding these models helps align your workloads with the right level of abstraction.

- Benefits & drawbacks: Organizations choose managed cloud for customization, scalability, cost control, security and improved availability. The trade‑offs include dependence on providers, multi‑tenant security concerns and reduced control.

- Comparisons: Managed cloud sits between self‑managed infrastructure and simple hosted environments. It offers greater customization than hosted cloud but shifts more responsibility to the provider than unmanaged public cloud.

- AI & emerging trends: AI workloads drive new demands for GPUs, data pipelines and orchestration. Analysts predict AI infrastructure spending will exceed USD 2 trillion by 2026, and cloud platforms are embedding agentic AI for autonomous operations. Multi‑cloud strategies, FinOps and stringent governance are also reshaping managed cloud.

- Choosing a provider: Evaluate expertise, service‑level agreements (SLAs), availability, support and pricing transparency. Consider industry experience, disaster recovery capabilities and ability to scale with AI workloads.

What Is Managed Cloud?

What does “managed cloud” really mean?

A managed cloud service is a form of cloud computing in which a specialized provider is fully or partially responsible for the management, maintenance and operation of your cloud environment. Instead of buying and maintaining servers, software and networking hardware yourself, you subscribe to a managed service and access resources via a web interface or API. The provider ensures your infrastructure runs efficiently, handles configuration and patching, optimizes performance and implements security measures.

In unmanaged public cloud models, customers provision virtual machines or container clusters and must configure operating systems, networking, monitoring and backups. Managed cloud providers add an operational layer on top of cloud resources. They handle tasks like:

- Provisioning and configuration – setting up servers, storage and networks according to best practices.

- Continuous monitoring and optimization – using advanced tools to monitor performance and automatically adjust capacity or fix issues.

- Security and compliance – implementing access controls, encryption and vulnerability management.

- Backup and disaster recovery – automatically backing up data and restoring it after an outage.

- Patching and updates – applying software updates behind the scenes without downtime.

By outsourcing these responsibilities, organizations free technical teams from routine maintenance and can focus on building products and delivering value. Managed cloud isn’t limited to public cloud; providers can operate private clouds or manage hybrid deployments across multiple platforms.

Expert Insights

- Operational agility: Handing operational control to specialists accelerates time to market and allows teams to experiment without worrying about infrastructure maintenance.

- Cost predictability: Subscription or pay‑as‑you‑go models help align spending with usage and avoid unexpected capital expenditures.

- Industry experience matters: Seek providers with experience in your sector; regulated industries require nuanced compliance knowledge.

- Clarifai’s role: Clarifai’s compute orchestration simplifies deploying AI models on managed cloud or on‑prem environments, ensuring that workloads are placed on the right resources without manual intervention.

Example

Suppose a startup building a computer‑vision app wants to avoid hiring a DevOps team. By choosing a managed cloud provider, the founders can upload their container images, select desired regions and rely on automated scaling and security. Clarifai’s inference API and local runner can then run models either in the managed cloud or on edge devices, giving flexibility without added operational complexity.

Managed Cloud Service Models

What types of services fall under managed cloud?

Managed cloud encompasses various service models, each abstracting different layers of the technology stack. The main categories are infrastructure‑as‑a‑service (IaaS), platform‑as‑a‑service (PaaS), software‑as‑a‑service (SaaS), bare‑metal‑as‑a‑service (BMaaS) and storage‑as‑a‑service (STaaS).

- IaaS (Managed Infrastructure): Providers rent virtual computing resources (compute, storage and networking) on demand. Customers retain control over operating systems and application environments but delegate hardware maintenance, virtualization and scaling. Managed IaaS often includes automated provisioning, patch management and resource optimization.

- PaaS: This model offers a complete development environment including operating systems, middleware and databases. Developers can build, test and deploy applications without managing underlying servers. Managed PaaS services typically integrate continuous integration/continuous deployment (CI/CD), monitoring and security policies.

- SaaS: Entire applications are delivered over the internet on a subscription basis. Managed SaaS relieves customers from managing anything beyond user access and configuration; the provider handles upgrades, uptime and data security.

- Bare‑Metal‑as‑a‑Service (BMaaS): Providers deploy dedicated physical servers for customers. Unlike virtualized IaaS, BMaaS gives almost total control over hardware configuration while still outsourcing facility management, power and cooling.

- Storage‑as‑a‑Service (STaaS): Organizations subscribe to raw storage capacity and access it via APIs or network protocols. Managed STaaS includes replication, snapshot management and capacity scaling.

The right model depends on your application’s complexity and compliance requirements. For instance, AI training workloads often require BMaaS or GPU‑enabled IaaS to achieve deterministic performance, while deploying web applications might be easier with PaaS.

Expert Insights

- Hybrid models: Many providers combine these services into bespoke bundles that match workload requirements. For example, a PaaS solution may run on a managed IaaS foundation with STaaS for persistent data.

- Edge and local deployments: Managed services increasingly extend to on‑prem or edge devices; Clarifai’s local runner lets users run inference locally while central orchestration remains in the cloud.

- Avoiding vendor lock‑in: Choosing open standards and containerization (e.g., Kubernetes) helps maintain portability across service models.

- Continuous optimization: Regardless of the model, managed services should include monitoring tools to right‑size resources and control costs.

Example

A fintech company might use managed IaaS for its core banking platform, PaaS for customer‑facing web apps, SaaS for CRM and BMaaS for high‑frequency trading algorithms that require predictable latency. This layered approach allows each workload to use an optimal level of abstraction while centralizing operations through a single managed cloud provider.

How Managed Cloud Works

How do providers manage cloud infrastructure on your behalf?

Managed cloud services work by transferring day‑to‑day operational responsibilities to a provider. Customers access resources through dashboards or APIs while the provider runs and optimizes the underlying infrastructure.

The typical lifecycle of a managed cloud engagement involves several stages:

- Assessment: The provider assesses your existing workloads, compliance requirements and business goals to design a tailored solution.

- Design & deployment: Engineers deploy virtual machines, containers or bare‑metal servers according to agreed architectures, configure networks and set up monitoring and security controls.

- Continuous monitoring: Automated tools track performance, resource utilization and security events 24/7, generating alerts and recommendations.

- Support and maintenance: Providers offer technical support, apply patches and perform upgrades without disrupting workloads.

- Optimization: Ongoing tuning ensures right‑sizing of compute and storage resources, cost optimization and improved performance.

Managed services may be delivered from public clouds, private data centers or a hybrid of both. Customers typically pay via monthly subscription or consumption‑based billing. Transparent pricing and detailed dashboards help track resource usage and budgets.

Expert Insights

- Automation is key: Providers rely on automation and Infrastructure‑as‑Code to provision resources, enforce policies and prevent configuration drift. This also enables rapid scaling and reproducibility.

- Role of SLAs: Service Level Agreements define uptime guarantees, response times and performance metrics. Evaluate SLA terms closely to ensure they align with your business needs.

- Data sovereignty: For regulated industries, ensure the provider can deploy workloads in specific regions and maintain required data residency.

- Clarifai orchestration: Clarifai’s compute orchestration manages AI pipelines across GPU clusters and CPUs, abstracting infrastructure details so developers can focus on model logic.
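To make the idea of configuration drift concrete, the sketch below compares a declared (desired) configuration with what is actually running and reports every setting that differs. It is an illustration only: the setting names and values are hypothetical, and real Infrastructure‑as‑Code tools perform this comparison against provider APIs rather than plain dictionaries.

```python
from typing import Any

MISSING = "<missing>"  # marker for a setting that is absent on one side


def detect_drift(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (desired_value, actual_value)} for every mismatch."""
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        want = desired.get(key, MISSING)
        have = actual.get(key, MISSING)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical declared state versus observed state of one server group.
desired = {"instance_type": "gpu-small", "encrypted": True, "min_nodes": 2}
actual = {"instance_type": "gpu-small", "encrypted": False, "min_nodes": 2, "public_ip": True}

drift = detect_drift(desired, actual)
for setting, (want, have) in drift.items():
    print(f"{setting}: declared {want!r}, found {have!r}")
```

Here the check flags the disabled encryption and the undeclared public IP; an automated pipeline would then either revert the change or raise an alert.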

Example

Consider a retail company launching a holiday promotion. A managed cloud provider can automatically scale web servers and databases to handle traffic spikes, implement WAF protections against bots and patch vulnerabilities on the fly. The retailer’s engineers monitor dashboards and adjust business logic while the provider ensures the underlying infrastructure stays resilient.
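One common way to automate this kind of scaling is a proportional, target‑tracking rule: scale the replica count by the ratio of observed to target utilization, then clamp it to agreed limits. The sketch below shows that rule, which resembles the formula documented for the Kubernetes Horizontal Pod Autoscaler; the utilization figures and limits are made‑up values, and a given provider may use a different policy.

```python
import math


def desired_replicas(current: int, observed_pct: int, target_pct: int,
                     min_replicas: int, max_replicas: int) -> int:
    """Proportional scaling: replicas grow with observed/target utilization."""
    raw = math.ceil(current * observed_pct / target_pct)
    # Clamp so a traffic spike cannot scale past the agreed budget,
    # and a quiet period cannot scale below the availability floor.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical holiday spike: 4 web servers running at 90% CPU, target 60%.
spike = desired_replicas(4, 90, 60, min_replicas=2, max_replicas=20)
quiet = desired_replicas(4, 30, 60, min_replicas=2, max_replicas=20)
capped = desired_replicas(10, 200, 60, min_replicas=2, max_replicas=20)
print(spike, quiet, capped)
```

The upper limit matters as much as the scaling rule: it is what keeps an unexpected surge from turning into an unexpected bill.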

Benefits of Managed Cloud

Why do organizations embrace managed cloud services?

Companies adopt managed cloud to improve agility, control costs, enhance security and access expertise. The model tailors resources to workloads and frees internal teams from maintenance.

Customization and expertise. Managed services are tailored to your specific workloads rather than offering a one‑size‑fits‑all environment. Providers bring specialized expertise in cloud architecture, DevOps and security, which small teams may lack.

Scalability and flexibility. Managed cloud enables on‑demand scaling of compute, storage and network capacity. This elasticity supports seasonal spikes or AI training runs without upfront investment.

Cost‑effectiveness. With pay‑as‑you‑use billing, you only pay for resources consumed. Outsourcing reduces capital expenditures and mitigates the need to hire specialized staff.

Security and compliance. Providers implement robust security measures, including encryption, access control and continuous threat monitoring. This helps meet industry regulations and reduces the risk of misconfiguration. According to market research, security services accounted for over 26 % of the cloud‑managed services market in 2024.

Reliability and resilience. Managed services employ redundancy and failover mechanisms to ensure high availability. Disaster recovery capabilities speed up recovery after outages or data loss.

Focus on innovation. By outsourcing infrastructure management, organizations can concentrate on building products, experimenting with new features and leveraging AI. Managed cloud often includes access to cutting‑edge technologies such as GPUs, serverless functions and AI services.

Expert Insights

- Business alignment: Managed cloud aligns IT spending with business value; budgets shift from capital expenditures to operational expenses, making budgeting more predictable.

- Competitive advantage: Organizations that harness managed cloud can iterate faster, respond to customer demands quickly and incorporate AI features ahead of slower competitors.

- Compliance peace of mind: Providers often hold certifications or attestations (SOC 2, ISO 27001, HIPAA) that simplify compliance audits.

- Clarifai synergy: For AI projects, managed cloud with GPU accelerators paired with Clarifai’s model inference allows teams to deploy and scale AI solutions without mastering low‑level hardware provisioning.

Example

A healthcare startup building a medical imaging platform chooses a managed cloud to meet HIPAA requirements. The provider supplies encrypted storage, audit trails and automated patching. Meanwhile, the startup’s engineers focus on training computer‑vision models using Clarifai’s platform and scaling inference through managed GPU instances during peak diagnostic workloads.

Drawbacks and Challenges

What are the potential downsides of managed cloud?

Despite its advantages, managed cloud introduces new risks and trade‑offs. Dependence on third‑party providers can affect control, costs and security.

Provider dependence. When a provider controls your infrastructure, any service outage or strategic shift on their end can disrupt your operations. Organizations must assess the provider’s financial stability and support responsiveness.

Multi‑tenant security concerns. Managed services often use multi‑tenant architectures; inadequate isolation can expose sensitive data. Strict access controls and encryption are non‑negotiable.

Limited control and customization. Providers may restrict how resources are configured or which tools you can use. This can be problematic for niche workloads requiring unconventional configurations.

Vendor lock‑in. Relying heavily on proprietary tooling can make migration difficult. To mitigate this, choose providers that support open standards and portable artifacts such as containers and Terraform configurations.

Cost unpredictability. While pay‑as‑you‑go models offer flexibility, unexpected spikes can occur if workloads aren’t optimized or monitored. Implement FinOps practices to forecast and control cloud spend.

Compliance and sovereignty. Some industries require data to reside within specific jurisdictions. Not all providers offer granular control over data location, which can complicate compliance strategies.

Expert Insights

- Due diligence: Evaluate a provider’s track record for uptime, transparency and security. Perform audits and request compliance certifications.

- Shared responsibility: Even in managed cloud, customers share responsibility for application‑level security, data governance and identity management.

- Exit strategy: Plan for migration or multi‑cloud scenarios early to avoid vendor lock‑in. Infrastructure‑as‑Code and containerization are valuable tools for portability.

- Clarifai perspective: Clarifai’s platform allows deployment on managed cloud or on‑prem using the same APIs, offering flexibility if your infrastructure strategy evolves.

Example

A media company migrates to a managed cloud to accelerate content delivery. Months later, the provider changes its pricing model, increasing egress costs. Because the company didn’t optimize bandwidth usage or implement budget alerts, costs rise unexpectedly. By adopting FinOps tools and negotiating new SLAs, the company regains control.
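A budget alert of the kind the media company lacked can be as simple as projecting month‑end spend from the current run rate and comparing it with the budget. The sketch below assumes spend accrues roughly linearly through the month, which real workloads often violate; the figures, the 80 % warning ratio and the function names are illustrative rather than taken from any particular FinOps tool.

```python
def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Linear run-rate forecast: average daily spend times days in the month."""
    return spend_to_date / day_of_month * days_in_month


def budget_status(spend_to_date: float, day_of_month: int, days_in_month: int,
                  budget: float, warn_ratio: float = 0.8) -> str:
    """Classify the forecast as 'ok', 'warning' or 'over' relative to the budget."""
    forecast = forecast_month_end(spend_to_date, day_of_month, days_in_month)
    if forecast > budget:
        return "over"
    if forecast >= warn_ratio * budget:
        return "warning"
    return "ok"


# Hypothetical month with a USD 15,000 budget, checked on day 10 of 30.
print(budget_status(6000, 10, 30, budget=15000))  # forecast 18,000
print(budget_status(4500, 10, 30, budget=15000))  # forecast 13,500
print(budget_status(3000, 10, 30, budget=15000))  # forecast 9,000
```

Running a check like this daily would have surfaced the egress increase within days of the pricing change instead of at invoice time.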

Managed Cloud vs. Other Cloud Approaches

How does managed cloud compare to hosted and self‑managed clouds?

Managed cloud sits between simple hosting and do‑it‑yourself cloud computing. It provides more customization than hosted services and shifts more responsibility to the provider than unmanaged public cloud.

Hosted cloud. In a hosted or “furnished apartment” model, the provider owns the infrastructure and gives you access to pre‑configured environments with limited customization. You handle configuration, scaling and monitoring yourself. This option is quick to set up and suits standardized workloads.

Managed cloud. Think of managed cloud as having an architect design and maintain your custom home. You choose the platforms and configure high‑level settings; the provider actively manages patching, scaling, performance tuning, backups and compliance. It’s ideal for complex workloads requiring customization and expert guidance.

Self‑managed cloud (public cloud). Public cloud providers deliver raw infrastructure on a pay‑per‑use basis. You have full control over how you configure, secure and operate resources but must maintain them yourself.

Bare metal. On bare metal servers, you control hardware entirely. This suits latency‑sensitive or regulated workloads but demands significant in‑house expertise and capital investment.

| Approach | Control & Responsibility | Ideal For |
| --- | --- | --- |
| Hosted | Minimal customization; customer handles application configuration and scaling | Standardized workloads with predictable requirements |
| Managed | Shared control; provider manages infrastructure, security and scaling; customer configures applications | Dynamic workloads needing expert operations and compliance |
| Self‑Managed | Full control; customer configures, patches and monitors infrastructure | Organizations with strong DevOps capabilities and niche requirements |
| Bare Metal | Full control of hardware; customer maintains servers | High‑performance, regulated or latency‑sensitive workloads |

Expert Insights

- Hybrid strategies: Many enterprises combine managed and self‑managed clouds. For example, they run baseline workloads on a managed platform and burst into public cloud during peak demand.

- Cost vs. control: Managed clouds are generally more expensive than raw infrastructure, but the operational savings often outweigh the premium.

- Cultural fit: Teams with strong DevOps and SRE skills may prefer self‑managed solutions; teams focused on product development benefit from managed services.

- Clarifai insight: Clarifai supports deployment across managed and self‑managed environments, making it easier to migrate models as your strategy evolves.

Example

A SaaS vendor chooses managed cloud for its core application because uptime, security and compliance are paramount. For its development environment, however, engineers use self‑managed resources to experiment freely. This hybrid approach balances control and operational efficiency.

Managed Cloud for AI and Machine Learning

How does managed cloud support AI and ML workloads?

AI and machine‑learning workloads demand large computational resources, specialized hardware and streamlined data pipelines. Managed cloud provides GPU‑enabled infrastructure, automated scaling and operational expertise to meet these demands. Analysts predict that global AI infrastructure spending will surpass USD 2 trillion by 2026, highlighting the importance of efficient orchestration.

High‑performance hardware. AI training and inference often require GPUs, tensor processing units (TPUs) or specialized accelerators. Managed cloud providers offer ready‑to‑use GPU instances and bare‑metal servers, eliminating procurement delays. They also handle driver updates and maintenance.

Scalable data pipelines. Machine‑learning workflows involve ingesting, processing and storing large volumes of data. Managed platforms integrate managed data services (such as object storage, databases and streaming) to build robust pipelines. Automated scaling ensures consistent throughput during peak loads.

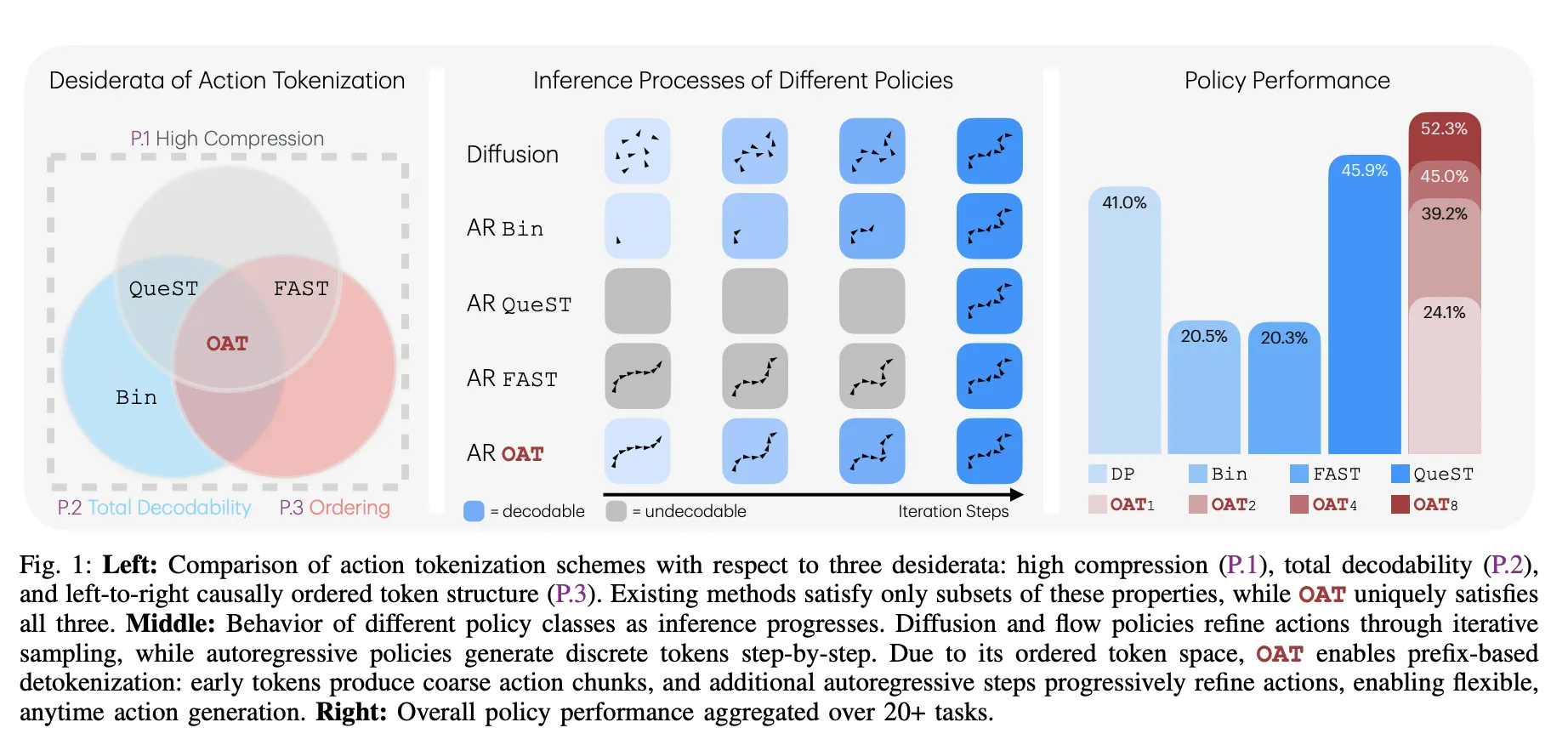

Model orchestration and deployment. Deploying models into production involves packaging, routing and monitoring. Clarifai’s compute orchestration helps developers select the right runtimes and hardware for each model, whether hosted in the cloud or run locally on the Clarifai local runner. Managed environments support Kubernetes or serverless frameworks to auto‑scale inference workloads.
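The general idea of matching each model to suitable hardware can be sketched as a small placement function: filter the node pools that satisfy a model’s memory requirement, then pick the cheapest. This is a generic illustration, not Clarifai’s algorithm or API; the pool names, memory sizes and hourly prices are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Pool:
    name: str
    gpu_memory_gb: int  # 0 means a CPU-only pool
    hourly_cost: float


@dataclass(frozen=True)
class Model:
    name: str
    min_gpu_memory_gb: int  # 0 means the model can run on CPU


def place(model: Model, pools: list[Pool]) -> Optional[Pool]:
    """Return the cheapest pool that satisfies the model, or None if none fits."""
    candidates = [p for p in pools if p.gpu_memory_gb >= model.min_gpu_memory_gb]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p.hourly_cost)


# Hypothetical pools and models.
pools = [Pool("cpu", 0, 0.10), Pool("gpu-16", 16, 1.20), Pool("gpu-80", 80, 4.00)]
for model in [Model("text-classifier", 0), Model("detector", 12), Model("llm", 40)]:
    chosen = place(model, pools)
    print(model.name, "->", chosen.name if chosen else "no fit")
```

A production orchestrator would also weigh latency, data locality and current utilization, but the filter‑then‑rank structure stays the same.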

AIOps and autonomous cloud. Emerging managed services embed AI agents that optimize resource usage, detect anomalies and self‑heal infrastructure. Governance frameworks and guardrails are essential to ensure these autonomous systems align with business policies.

Cost management. AI workloads can drive unpredictable costs due to variable GPU usage. Managed providers incorporate FinOps tools to track spend and recommend optimizations.

Expert Insights

- Data locality: For privacy or latency reasons, running models on edge devices using Clarifai’s local runner can reduce cloud dependencies while still benefiting from centralized orchestration.

- Experimentation vs. production: Use self‑managed environments for R&D and managed cloud for production AI services requiring high availability and compliance.

- Emerging hardware: As AI models evolve, keep an eye on new accelerators (e.g., Graphcore, Cerebras). Managed providers often adopt these early.

- Governance: Implement responsible AI practices (fairness, explainability) on top of managed platforms and ensure the provider’s policies align with ethical standards.

Example

A logistics company wants to deploy real‑time route optimization using reinforcement learning. Managed cloud provides GPU clusters for training and inference along with streaming data services. Clarifai’s orchestration automatically provisions GPU nodes for model retraining overnight, while the local runner allows the inference component to run on edge devices in delivery vans, reducing latency and bandwidth use.

Industry Use Cases & Applications

Where does managed cloud make the biggest impact?

Managed cloud services are versatile and support a wide range of industries and applications. They’re particularly valuable in contexts requiring scalability, high availability and regulatory compliance.

Disaster recovery and resilience. Organizations use managed cloud for backup and disaster recovery solutions; failover can be automatic, and there’s no need to maintain secondary data centers.

Big data analytics. Large datasets from IoT sensors, transactions or research require scalable compute and storage. Managed platforms provide the capacity for processing frameworks like Spark or Hadoop.

Internet of Things (IoT). IoT devices generate continuous streams of data. Managed services supply the infrastructure, speed and support to collect, store and analyze this data.

Regulated industries. Sectors such as banking, insurance and healthcare demand strict compliance and data protection. Managed providers offer dedicated or private cloud options with audit logging, encryption and region‑specific deployments. In 2024 the banking, financial services and insurance (BFSI) sector held the largest share of the cloud‑managed services market.

Media and entertainment. Media workflows involve transcoding, rendering and streaming at scale. Managed GPU services accelerate these tasks and ensure smooth delivery.

Research and high‑performance computing. Scientific simulations and AI research benefit from bare‑metal GPU clusters and high‑bandwidth storage available through managed cloud.

Edge‑AI applications. Combining managed cloud for orchestration with edge deployment via local runners enables real‑time AI in retail stores, manufacturing facilities and autonomous vehicles.

Expert Insights

- Sector‑specific compliance: Healthcare workloads require HIPAA compliance; finance requires PCI DSS and GDPR; providers should have relevant certifications.

- Latency considerations: For real‑time processing (e.g., autonomous driving), edge deployments reduce round‑trip delay; managed cloud orchestrates updates and model versioning.

- Data gravity: Large datasets are expensive to move. Evaluate managed providers’ network egress policies and availability of regional data centers.

- Clarifai applicability: Clarifai’s AI platform is used across industries such as retail (visual search), manufacturing (defect detection) and utilities (predictive maintenance). Managed cloud keeps the underlying compute available, while Clarifai handles model lifecycle management.

Example

A bank launches a fraud detection system powered by machine learning. Managed cloud ensures that transaction streams are processed on secure, compliant infrastructure with encryption and audit controls. The system scales automatically during high transaction periods and integrates Clarifai’s anomaly detection models to spot suspicious patterns.

Security, Compliance & Governance

How do managed cloud services address security and regulatory requirements?

Security and compliance are paramount in managed cloud. Providers implement layered security and governance frameworks to safeguard data and maintain trust. Security services now represent more than 26 % of the cloud‑managed services market.

Access control and identity management. Strong authentication and role‑based access control (RBAC) prevent unauthorized access to cloud resources. Identity becomes the foundation of cloud security. Providers integrate single sign‑on (SSO), multi‑factor authentication and secrets management.
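At its core, role‑based access control maps roles to permitted actions and allows a request only if one of the caller’s roles grants it. The sketch below shows that check with a hypothetical set of roles and actions; real platforms layer resource scoping, conditions and audit logging on top.

```python
# Hypothetical role definitions: each role grants a set of actions.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "restart"},
    "admin": {"read", "restart", "delete"},
}


def is_allowed(user_roles: list[str], action: str) -> bool:
    """Allow the action if any of the user's roles grants it (deny by default)."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["viewer"], "read"))               # viewer can read
print(is_allowed(["viewer"], "delete"))             # viewer cannot delete
print(is_allowed(["viewer", "operator"], "restart"))
print(is_allowed(["contractor"], "read"))           # unknown role grants nothing
```

Denying by default, including for unrecognized roles, is the property that matters most: a typo in a role name should fail closed rather than open.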

Data encryption and privacy. Data is encrypted at rest and in transit. Managed platforms offer key management services, disk encryption and secure object storage. Customers should ensure that encryption keys can be stored and rotated according to compliance policies.

Threat detection and response. Continuous monitoring detects anomalies and potential intrusions. AI‑driven security tools automate detection, enforce policies and generate remediation actions.

Compliance frameworks. Providers certify their services against regulations and standards such as GDPR, HIPAA, SOC 2 and PCI DSS, giving customers a head start on compliance. Audits and evidence reporting simplify regulatory reviews.

Governance and guardrails. As cloud platforms become more autonomous, governance moves to the forefront. Policies codify acceptable configurations, cost controls and data residency. Infrastructure‑as‑Code and policy‑as‑code tools enforce guardrails across multi‑cloud environments.
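Policy‑as‑code means expressing such rules as executable checks that run before a resource is deployed. The sketch below evaluates one resource description against three guardrails (data residency, encryption and cost); the region names, cost ceiling and field names are assumptions made for illustration, and dedicated policy engines offer far richer rule languages.

```python
ALLOWED_REGIONS = {"eu-west", "eu-central"}  # hypothetical residency rule
MAX_MONTHLY_COST_USD = 5000                  # hypothetical cost guardrail


def check_resource(resource: dict) -> list[str]:
    """Return a list of policy violations; an empty list means compliant."""
    problems = []
    if resource.get("region") not in ALLOWED_REGIONS:
        problems.append(f"region {resource.get('region')!r} violates data residency")
    if not resource.get("encrypted", False):
        problems.append("storage is not encrypted at rest")
    if resource.get("monthly_cost_usd", 0) > MAX_MONTHLY_COST_USD:
        problems.append("estimated cost exceeds the budget guardrail")
    return problems


compliant = {"region": "eu-west", "encrypted": True, "monthly_cost_usd": 1200}
risky = {"region": "us-east", "encrypted": False, "monthly_cost_usd": 9000}

print(check_resource(compliant))
for problem in check_resource(risky):
    print("violation:", problem)
```

Wiring a check like this into the deployment pipeline turns a written policy into something that blocks a non‑compliant change automatically.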

Expert Insights

- Shared responsibility model: Even with managed services, customers must ensure secure application code, appropriate identity policies and data classification.

- Zero‑trust architecture: Assume no implicit trust; verify every request. Managed providers should support micro‑segmentation and identity‑centric networks.

- Incident response: Review how quickly the provider detects and responds to security incidents. Ask about their incident management processes and communication protocols.

- Clarifai considerations: Clarifai encrypts data in transit and at rest. When deploying models via managed cloud, ensure that API keys and tokens are stored securely and rotated regularly.

Example

A pharmaceutical company must comply with GDPR and HIPAA. Its managed cloud provider offers regional data centers in Europe, strong encryption and continuous compliance monitoring. Policy‑as‑code enforces that only authorized researchers can access sensitive datasets. When the company deploys an AI model using Clarifai’s API, API keys are stored in a managed secrets vault, and access logs are streamed to a security information and event management (SIEM) system for real‑time analysis.

Choosing a Managed Cloud Provider

What factors should you consider when selecting a provider?

Selecting the right partner determines how well managed cloud works for your organization. Assess vendors across expertise, SLAs, reliability, support and pricing.

Expertise and experience. Look for providers with proven experience in the technologies and industries relevant to your workloads. Evaluate certifications, customer testimonials and case studies.

Service Level Agreements (SLAs). SLAs define uptime guarantees, response times and performance metrics. Ensure the provider’s commitments align with your business requirements.
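An uptime percentage is easier to judge once it is translated into permitted downtime. The short sketch below does that conversion for a billing period; the 30‑day period is an assumption, and actual SLAs define their own measurement windows and exclusions.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted per period under a given uptime guarantee."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


for sla in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Each additional “nine” cuts the allowance by a factor of ten: roughly 7.2 hours at 99 %, about 43 minutes at 99.9 % and just over 4 minutes at 99.99 %.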

Availability and reliability. High availability requires redundant systems, multiple data centers and robust disaster recovery plans. Investigate how providers handle failovers and data replication.

Support and maintenance. Choose vendors that offer comprehensive support, including 24/7 monitoring, patching and upgrades. Evaluate communication channels (chat, phone, email) and escalation procedures.

Cost and scalability. Transparency in pricing is crucial. Seek providers with flexible billing models and the ability to scale services up or down without hidden fees. FinOps tools help forecast and control spending.

Security posture. Ask for certifications (ISO 27001, SOC 2 Type II), encryption practices and incident response protocols. Evaluate whether they support compliance frameworks relevant to your sector.

Cultural fit. A provider’s communication style, documentation quality and willingness to collaborate influence day‑to‑day operations. Consider trial projects or proof‑of‑concept engagements.

Knowledgeable Insights

- Vendor diversification: Keep away from focus threat by adopting multi‑cloud methods or backup suppliers for essential workloads.

- Integration with current instruments: Examine compatibility together with your CI/CD pipelines, monitoring instruments and infrastructure‑as‑code frameworks.

- Exit issues: Perceive the way to retrieve information and infrastructure definitions if you might want to swap suppliers.

- Clarifai integration: Select suppliers that assist GPU cases and container orchestration frameworks appropriate with Clarifai’s runtime. This ensures clean deployment of AI fashions throughout environments.

Instance

A SaaS firm evaluating managed suppliers compares three candidates. Supplier A presents aggressive pricing however restricted SLA ensures; Supplier B makes a speciality of monetary providers and has sturdy compliance credentials; Supplier C integrates seamlessly with Terraform and Kubernetes, aligning with the corporate’s DevOps practices. After scoring every towards standards—experience, SLAs, reliability, assist, value and integration—the corporate selects Supplier C and runs a pilot earlier than migrating absolutely.
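
The scoring step in this example could be done with a simple weighted matrix. The sketch below is illustrative only: the weights and the 1 to 5 scores are hypothetical values invented for this example, not figures from any real evaluation, and a real team would set them from its own priorities.

```python
# Hypothetical weights (summing to 1.0) for the six criteria named in the example.
WEIGHTS = {
    "expertise": 0.20,
    "slas": 0.20,
    "reliability": 0.20,
    "support": 0.15,
    "cost": 0.10,
    "integration": 0.15,
}

# Hypothetical 1-5 scores; these numbers are made up for illustration.
SCORES = {
    "Provider A": {"expertise": 3, "slas": 2, "reliability": 3, "support": 3, "cost": 5, "integration": 3},
    "Provider B": {"expertise": 4, "slas": 4, "reliability": 4, "support": 4, "cost": 3, "integration": 3},
    "Provider C": {"expertise": 4, "slas": 4, "reliability": 4, "support": 4, "cost": 3, "integration": 5},
}


def weighted_score(scores: dict, weights: dict) -> float:
    """Sum each criterion's score multiplied by its weight."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_providers(all_scores: dict, weights: dict) -> list:
    """Return (provider, total) pairs sorted from highest to lowest total."""
    totals = {name: weighted_score(s, weights) for name, s in all_scores.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, total in rank_providers(SCORES, WEIGHTS):
        print(f"{name}: {total:.2f}")
```

Writing the weights down before scoring makes the trade-offs explicit; with different weights (for example, a heavier emphasis on cost), the ranking could change.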

Emerging Trends & Future Outlook

What will shape managed cloud in the coming years?

The managed cloud landscape is evolving rapidly. AI‑driven automation, sophisticated governance and multi‑cloud strategies are redefining how cloud services are consumed. Here are the key trends to watch.

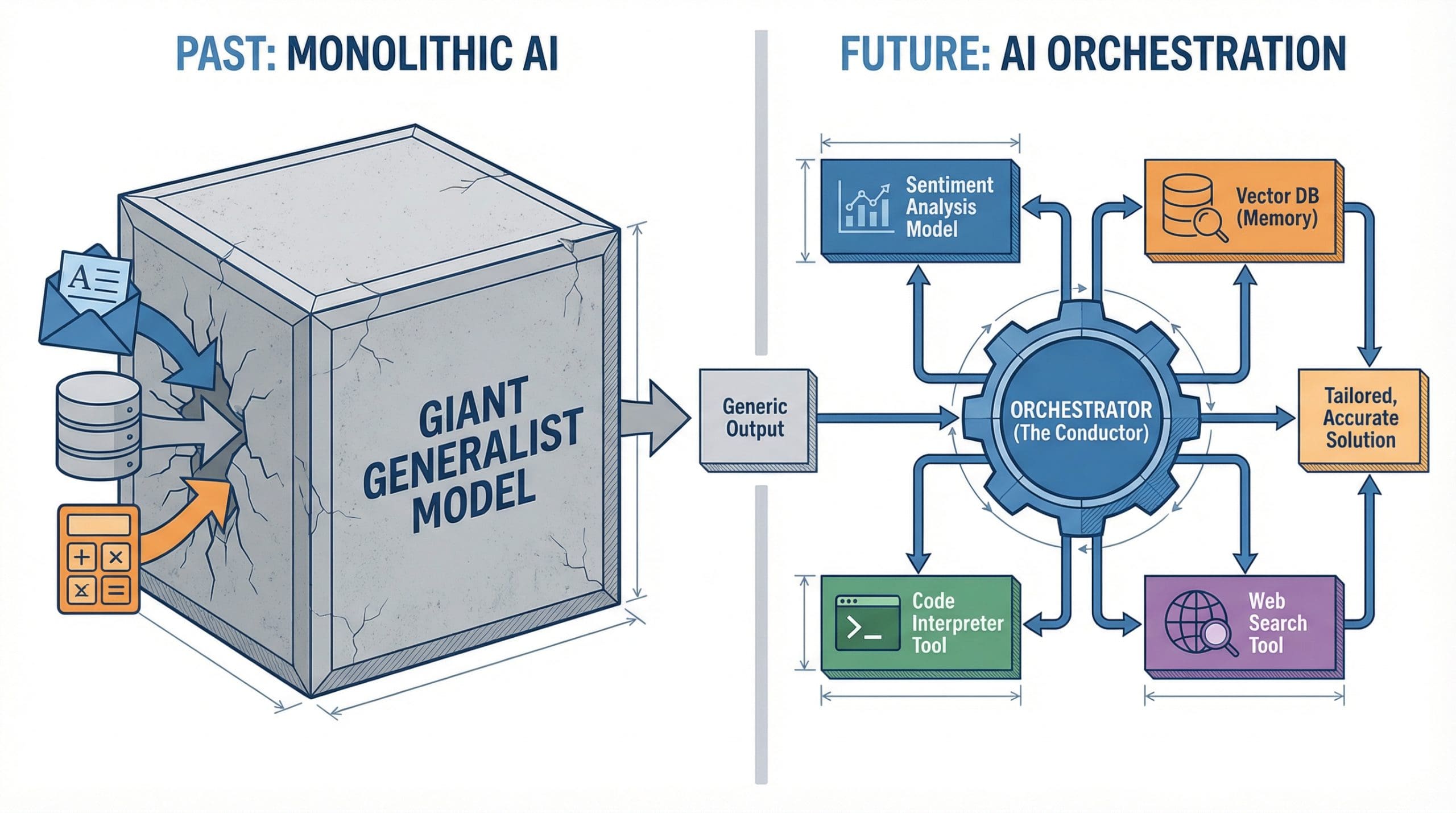

Agentic AI and autonomous clouds. Cloud platforms are embedding AI agents that perform tasks, optimize workflows and orchestrate services with minimal human intervention. These agents adjust resources, detect anomalies and remediate issues. Clear guardrails and ethical guidelines are essential to ensure they align with business intent.

Governance and guardrails. As automation increases, organizations are prioritizing governance frameworks to maintain visibility and control. Policy‑as‑code tools enforce security, cost and compliance rules across environments.
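
To illustrate the idea behind policy‑as‑code, the sketch below expresses three rules (one each for security, cost and compliance) as plain Python functions and evaluates them against resource definitions. It does not use any particular policy tool; the resource fields, rule names and the GPU limit are all assumptions invented for this example.

```python
from typing import Callable, Optional

Resource = dict
# A policy inspects one resource and returns a violation message, or None if it passes.
Policy = Callable[[Resource], Optional[str]]


def require_encryption(resource: Resource) -> Optional[str]:
    """Security rule: storage resources must be encrypted."""
    if resource.get("type") == "storage" and not resource.get("encrypted", False):
        return "storage must be encrypted at rest"
    return None


def require_owner_tag(resource: Resource) -> Optional[str]:
    """Compliance rule: every resource needs an 'owner' tag."""
    if "owner" not in resource.get("tags", {}):
        return "missing 'owner' tag for cost allocation"
    return None


def cap_gpu_count(resource: Resource, limit: int = 4) -> Optional[str]:
    """Cost rule: reject requests above a (hypothetical) GPU limit."""
    if resource.get("gpus", 0) > limit:
        return f"requests more than {limit} GPUs"
    return None


POLICIES = [require_encryption, require_owner_tag, cap_gpu_count]


def evaluate(resources: list, policies: list = POLICIES) -> list:
    """Run every policy against every resource and collect violation messages."""
    violations = []
    for resource in resources:
        for policy in policies:
            message = policy(resource)
            if message:
                violations.append(f"{resource['name']}: {message}")
    return violations


if __name__ == "__main__":
    # Hypothetical resource definitions, e.g. parsed from an infrastructure plan.
    sample = [
        {"name": "bucket-a", "type": "storage", "encrypted": False, "tags": {"owner": "data"}},
        {"name": "train-job", "type": "compute", "gpus": 8, "tags": {}},
    ]
    for violation in evaluate(sample):
        print(violation)
```

In practice, checks like these would typically run in a CI/CD pipeline before changes are applied, so that violations block a deployment rather than being discovered afterwards.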

Data management and trust. Data quality, lineage and access controls become strategic differentiators. Managed platforms will provide built‑in data governance and monitoring tools to ensure reliable insights.

Identity‑centric security. Identity will become the foundation of cloud security. Fine‑grained authorization and authentication are essential as AI and API ecosystems proliferate.

FinOps for AI workloads. Cloud cost management is extending beyond compute and storage to include AI workloads. Organizations will adopt discipline around budgeting, forecasting and optimizing resource usage.

Multi‑cloud and hybrid strategies. To avoid vendor lock‑in and improve resilience, enterprises will continue embracing multi‑cloud strategies. Unified visibility and orchestration tools will be essential for managing complexity.

Sustainability and green computing. Providers are investing in energy‑efficient data centers and carbon‑aware workloads. Customers may prioritize providers with renewable energy commitments and carbon reporting.

Edge computing and local runners. Managed services will extend to edge locations, enabling low‑latency processing close to data sources. Clarifai’s local runner exemplifies how inference can run on‑device while orchestration stays centralized.

Platform engineering and internal developer platforms (IDPs). Organizations are building IDPs to provide self‑service interfaces for developers while ensuring compliance and security. Managed cloud will underpin these platforms, providing elastic infrastructure and policy enforcement.

Expert Insights

- Holistic AI operations: AIOps will evolve into broader AI‑driven operations that combine observability, predictive analytics and automated remediation.

- Regulatory pressures: Governments are drafting regulations around AI safety, data sovereignty and cloud concentration risk. Managed providers must adapt quickly to remain compliant.

- Custom silicon: Hyperscalers are developing custom chips for AI and general computing. Managed services will make these accelerators available to customers without capital investment.

- Clarifai’s vision: As models grow in complexity, Clarifai is investing in orchestration tools that automatically allocate the right mix of cloud, edge and on‑prem resources for training and inference, balancing performance with cost and compliance.

Example

Imagine a logistics network where thousands of delivery drones communicate with a central control system. In the near future, autonomous cloud agents will monitor each drone’s telemetry, predict maintenance needs and reroute packages based on weather and traffic. Governance policies will enforce privacy, safety and cost constraints. FinOps tools will allocate GPU resources for real‑time computer‑vision models only when necessary, and edge runners will process data on the drones to minimize latency.

Frequently Asked Questions

Q1: Can I use managed cloud for sensitive data?

Yes. Many managed cloud providers offer private or dedicated environments with encryption and support for compliance requirements such as HIPAA and GDPR. You must still implement application‑level security and access controls.

Q2: Is managed cloud more expensive than running my own infrastructure?

It can be more expensive on a per‑resource basis, but operational savings, reduced staffing needs and faster time to market often offset the premium. FinOps practices help manage costs.

Q3: How does Clarifai fit into a managed cloud strategy?

Clarifai provides AI models and tools for computer vision and language processing. Its compute orchestration and local runner allow you to run inference on managed cloud or on‑prem devices without managing underlying hardware. It’s compatible with container orchestration systems used by managed cloud providers.

Q4: Can I migrate away from a managed cloud provider later?

Yes, but planning is essential. Use Infrastructure‑as‑Code (e.g., Terraform) and portable artifacts (containers, APIs) to maintain flexibility. Some providers assist with migration or multi‑cloud strategies.

Q5: Do managed cloud services support Kubernetes and containers?

Most providers offer managed Kubernetes or serverless container services. These simplify deployment and scaling of containerized applications while the provider handles cluster management.