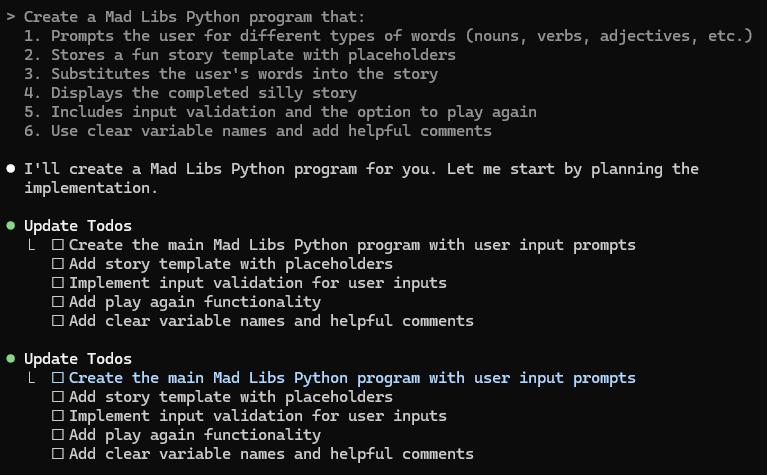

Picture by Writer

# Introduction

There are quite a few instruments for processing datasets at this time. All of them declare — in fact they do — that they’re the perfect and the appropriate selection for you. However are they? There are two essential necessities these instruments ought to fulfill: they need to simply carry out on a regular basis information evaluation operations and accomplish that rapidly, even below the strain of huge datasets.

To find out the perfect device amongst DuckDB, SQLite, and Pandas, we examined them below these circumstances.

First, we gave them solely on a regular basis analytical duties: summing values, grouping by classes, filtering with circumstances, and multi-field aggregations. This mirrored how analysts really work with actual datasets, in comparison with situations designed to showcase the perfect traits of a device.

Second, we carried out these operations on a Kaggle dataset with over 1 million rows. It’s a sensible tipping level — sufficiently small to run on a single machine, but giant sufficient that reminiscence strain and question velocity begin to reveal clear variations between instruments.

Let’s see how these checks went.

# The Dataset We Used

// Dataset Overview

We used the Financial institution dataset from Kaggle. This dataset accommodates over 1 million rows, comprising 5 columns:

| Column Identify |

Description |

| Date |

The date the transaction occurred |

| Area |

The enterprise class or kind (RETAIL, RESTAURANT) |

| Location |

Geographic area (Goa, Mathura) |

| Worth |

Transaction worth |

| Transaction_count |

The full variety of transactions on that day |

This dataset is generated utilizing Python. Whereas it might not totally resemble real-life information, its measurement and construction are ample to check and evaluate the efficiency variations between the instruments.

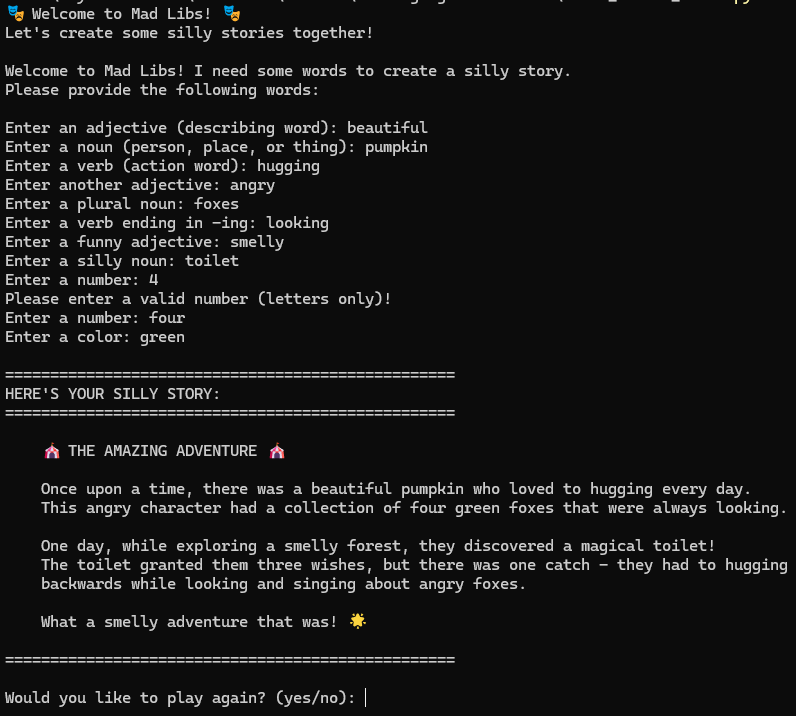

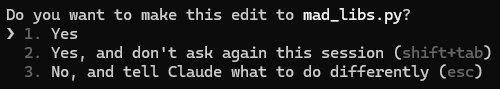

// Peeking Into the Information with Pandas

We used Pandas to load the dataset right into a Jupyter pocket book and study its basic construction, dimensions, and null values. Right here is the code.

import pandas as pd

df = pd.read_excel('bankdataset.xlsx')

print("Dataset form:", df.form)

df.head()

Right here is the output.

In order for you a fast reference to frequent operations when exploring datasets, try this helpful Pandas Cheat Sheet.

Earlier than benchmarking, let’s see the right way to arrange the setting.

# Setting Up a Honest Testing Surroundings

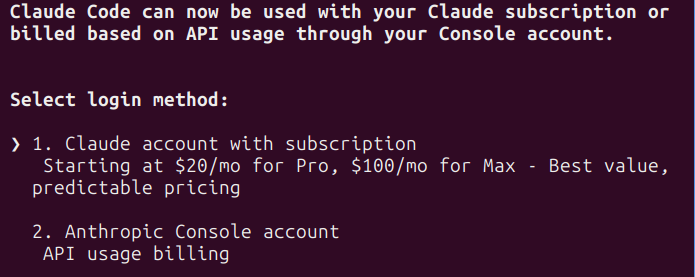

All three instruments — DuckDB, SQLite, and Pandas — have been arrange and run in the identical Jupyter Pocket book setting to make sure the check was truthful. This ensured that the circumstances throughout runtime and the usage of reminiscence remained fixed all through.

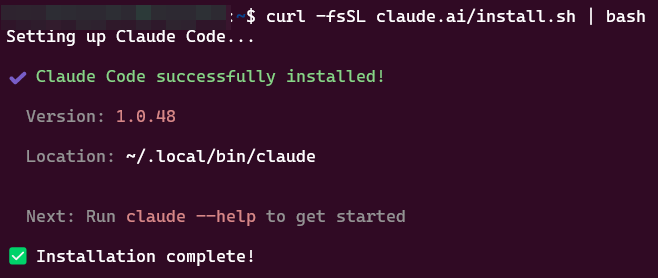

First, we put in and loaded the mandatory packages.

Listed below are the instruments we would have liked:

- pandas: for normal

DataFrame operations

- duckdb: for SQL execution on a

DataFrame

- sqlite3: for managing an embedded SQL database

- time: for capturing execution time

- memory_profiler: to measure reminiscence allocation

# Set up if any of them are usually not in your setting

!pip set up duckdb --quiet

import pandas as pd

import duckdb

import sqlite3

import time

from memory_profiler import memory_usage

Now let’s put together the information in a format that may be shared throughout all three instruments.

// Loading Information into Pandas

We’ll use Pandas to load the dataset as soon as, after which we’ll share or register it for DuckDB and SQLite.

df = pd.read_excel('bankdataset.xlsx')

df.head()

Right here is the output to validate.

// Registering Information with DuckDB

DuckDB allows you to immediately entry Pandas DataFrames. You do not have to transform something—simply register and question. Right here is the code.

# Register DataFrame as a DuckDB desk

duckdb.register("bank_data", df)

# Question through DuckDB

duckdb.question("SELECT * FROM bank_data LIMIT 5").to_df()

Right here is the output.

// Making ready Information for SQLite

Since SQLite does not learn Excel information immediately, we began by including the Pandas DataFrame to an in-memory database. After that, we used a easy question to look at the information format.

conn_sqlite = sqlite3.join(":reminiscence:")

df.to_sql("bank_data", conn_sqlite, index=False, if_exists="change")

pd.read_sql_query("SELECT * FROM bank_data LIMIT 5", conn_sqlite)

Right here is the output.

# How We Benchmarked the Instruments

We used the identical 4 queries on DuckDB, SQLite, and Pandas to match their efficiency. Every question was designed to deal with a standard analytical activity that mirrors how information evaluation is utilized in the true world.

// Making certain Constant Setup

The in-memory dataset was utilized by all three instruments.

- Pandas queried the

DataFrame immediately

- DuckDB executed SQL queries immediately towards the

DataFrame

- SQLite saved a duplicate of the

DataFrame in an in-memory database and ran SQL queries on it

This methodology ensured that every one three instruments used the identical information and operated with the identical system settings.

// Measuring Execution Time

To trace question period, Python’s time module wrapped every question in a easy begin/finish timer. Solely the question execution time was recorded; data-loading and preparation steps have been excluded.

// Monitoring Reminiscence Utilization

Together with processing time, reminiscence utilization signifies how properly every engine performs with giant datasets.

If desired, reminiscence utilization could be sampled instantly earlier than and after every question to estimate incremental RAM consumption.

// The Benchmark Queries

We examined every engine on the identical 4 on a regular basis analytical duties:

- Whole transaction worth: summing a numeric column

- Group by area: aggregating transaction counts per class

- Filter by location: filtering rows by a situation earlier than aggregation

- Group by area & location: multi-field aggregation with averages

# Benchmark Outcomes

// Question 1: Whole Transaction Worth

Right here we measure how Pandas, DuckDB, and SQLite carry out when summing the Worth column throughout the dataset.

// Pandas Efficiency

We calculate the entire transaction worth utilizing .sum() on the Worth column. Right here is the code.

pandas_results = []

def pandas_q1():

return df['Value'].sum()

mem_before = memory_usage(-1)[0]

begin = time.time()

pandas_q1()

finish = time.time()

mem_after = memory_usage(-1)[0]

pandas_results.append({

"engine": "Pandas",

"question": "Whole transaction worth",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

pandas_results

Right here is the output.

// DuckDB Efficiency

We calculate the entire transaction worth utilizing a full-column aggregation. Right here is the code.

duckdb_results = []

def duckdb_q1():

return duckdb.question("SELECT SUM(worth) FROM bank_data").to_df()

mem_before = memory_usage(-1)[0]

begin = time.time()

duckdb_q1()

finish = time.time()

mem_after = memory_usage(-1)[0]

duckdb_results.append({

"engine": "DuckDB",

"question": "Whole transaction worth",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

duckdb_results

Right here is the output.

// SQLite Efficiency

We calculate the entire transaction worth by summing the worth column. Right here is the code.

sqlite_results = []

def sqlite_q1():

return pd.read_sql_query("SELECT SUM(worth) FROM bank_data", conn_sqlite)

mem_before = memory_usage(-1)[0]

begin = time.time()

sqlite_q1()

finish = time.time()

mem_after = memory_usage(-1)[0]

sqlite_results.append({

"engine": "SQLite",

"question": "Whole transaction worth",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

sqlite_results

Right here is the output.

// Total Efficiency Evaluation

Now let’s evaluate execution time and reminiscence utilization. Right here is the code.

import matplotlib.pyplot as plt

all_q1 = pd.DataFrame(pandas_results + duckdb_results + sqlite_results)

fig, axes = plt.subplots(1, 2, figsize=(10,4))

all_q1.plot(x="engine", y="time", form="barh", ax=axes[0], legend=False, title="Execution Time (s)")

all_q1.plot(x="engine", y="reminiscence", form="barh", colour="salmon", ax=axes[1], legend=False, title="Reminiscence Utilization (MB)")

plt.tight_layout()

plt.present()

Right here is the output.

Pandas is by far the quickest and most memory-efficient right here, finishing virtually immediately with minimal RAM utilization. DuckDB is barely slower and makes use of extra reminiscence however stays environment friendly, whereas SQLite is each the slowest and the heaviest by way of reminiscence consumption.

// Question 2: Group by Area

Right here we measure how Pandas, DuckDB, and SQLite carry out when grouping transactions by Area and summing their counts.

// Pandas Efficiency

We calculate the entire transaction rely per area utilizing .groupby() on the Area column.

def pandas_q2():

return df.groupby('Area')['Transaction_count'].sum()

mem_before = memory_usage(-1)[0]

begin = time.time()

pandas_q2()

finish = time.time()

mem_after = memory_usage(-1)[0]

pandas_results.append({

"engine": "Pandas",

"question": "Group by area",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in pandas_results if p["query"] == "Group by area"]

Right here is the output.

// DuckDB Efficiency

We calculate the entire transaction rely per area utilizing a SQL GROUP BY on the area column.

def duckdb_q2():

return duckdb.question("""

SELECT area, SUM(transaction_count)

FROM bank_data

GROUP BY area

""").to_df()

mem_before = memory_usage(-1)[0]

begin = time.time()

duckdb_q2()

finish = time.time()

mem_after = memory_usage(-1)[0]

duckdb_results.append({

"engine": "DuckDB",

"question": "Group by area",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in duckdb_results if p["query"] == "Group by area"]

Right here is the output.

// SQLite Efficiency

We calculate the entire transaction rely per area utilizing SQL GROUP BY on the in-memory desk.

def sqlite_q2():

return pd.read_sql_query("""

SELECT area, SUM(transaction_count) AS total_txn

FROM bank_data

GROUP BY area

""", conn_sqlite)

mem_before = memory_usage(-1)[0]

begin = time.time()

sqlite_q2()

finish = time.time()

mem_after = memory_usage(-1)[0]

sqlite_results.append({

"engine": "SQLite",

"question": "Group by area",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in sqlite_results if p["query"] == "Group by area"]

Right here is the output.

// Total Efficiency Evaluation

Now let’s evaluate execution time and reminiscence utilization. Right here is the code.

import pandas as pd

import matplotlib.pyplot as plt

groupby_results = [r for r in (pandas_results + duckdb_results + sqlite_results)

if "Group by" in r["query"]]

df_groupby = pd.DataFrame(groupby_results)

fig, axes = plt.subplots(1, 2, figsize=(10,4))

df_groupby.plot(x="engine", y="time", form="barh", ax=axes[0], legend=False, title="Execution Time (s)")

df_groupby.plot(x="engine", y="reminiscence", form="barh", colour="salmon", ax=axes[1], legend=False, title="Reminiscence Utilization (MB)")

plt.tight_layout()

plt.present()

Right here is the output.

DuckDB is quickest, Pandas trades a bit extra time for decrease reminiscence, whereas SQLite is each slowest and most memory-hungry.

// Question 3: Filter by Location (Goa)

Right here we measure how Pandas, DuckDB, and SQLite carry out when filtering the dataset for Location = 'Goa' and summing the transaction values.

// Pandas Efficiency

We filter rows for Location == 'Goa' and sum their values. Right here is the code.

def pandas_q3():

return df[df['Location'] == 'Goa']['Value'].sum()

mem_before = memory_usage(-1)[0]

begin = time.time()

pandas_q3()

finish = time.time()

mem_after = memory_usage(-1)[0]

pandas_results.append({

"engine": "Pandas",

"question": "Filter by location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in pandas_results if p["query"] == "Filter by location"]

Right here is the output.

// DuckDB Efficiency

We filter transactions for Location = 'Goa' and calculate their whole worth. Right here is the code.

def duckdb_q3():

return duckdb.question("""

SELECT SUM(worth)

FROM bank_data

WHERE location = 'Goa'

""").to_df()

mem_before = memory_usage(-1)[0]

begin = time.time()

duckdb_q3()

finish = time.time()

mem_after = memory_usage(-1)[0]

duckdb_results.append({

"engine": "DuckDB",

"question": "Filter by location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in duckdb_results if p["query"] == "Filter by location"]

Right here is the output.

// SQLite Efficiency

We filter transactions for Location = 'Goa' and sum their values. Right here is the code.

def sqlite_q3():

return pd.read_sql_query("""

SELECT SUM(worth) AS total_value

FROM bank_data

WHERE location = 'Goa'

""", conn_sqlite)

mem_before = memory_usage(-1)[0]

begin = time.time()

sqlite_q3()

finish = time.time()

mem_after = memory_usage(-1)[0]

sqlite_results.append({

"engine": "SQLite",

"question": "Filter by location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in sqlite_results if p["query"] == "Filter by location"]

Right here is the output.

// Total Efficiency Evaluation

Now let’s evaluate execution time and reminiscence utilization. Right here is the code.

import pandas as pd

import matplotlib.pyplot as plt

filter_results = [r for r in (pandas_results + duckdb_results + sqlite_results)

if r["query"] == "Filter by location"]

df_filter = pd.DataFrame(filter_results)

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

df_filter.plot(x="engine", y="time", form="barh", ax=axes[0], legend=False, title="Execution Time (s)")

df_filter.plot(x="engine", y="reminiscence", form="barh", colour="salmon", ax=axes[1], legend=False, title="Reminiscence Utilization (MB)")

plt.tight_layout()

plt.present()

Right here is the output.

DuckDB is the quickest and best; Pandas is slower with larger reminiscence utilization; and SQLite is the slowest however lighter on reminiscence.

// Question 4: Group by Area & Location

// Pandas Efficiency

We calculate the typical transaction worth grouped by each Area and Location. Right here is the code.

def pandas_q4():

return df.groupby(['Domain', 'Location'])['Value'].imply()

mem_before = memory_usage(-1)[0]

begin = time.time()

pandas_q4()

finish = time.time()

mem_after = memory_usage(-1)[0]

pandas_results.append({

"engine": "Pandas",

"question": "Group by area & location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in pandas_results if p["query"] == "Group by area & location"]

Right here is the output.

// DuckDB Efficiency

We calculate the typical transaction worth grouped by each area and location. Right here is the code.

def duckdb_q4():

return duckdb.question("""

SELECT area, location, AVG(worth) AS avg_value

FROM bank_data

GROUP BY area, location

""").to_df()

mem_before = memory_usage(-1)[0]

begin = time.time()

duckdb_q4()

finish = time.time()

mem_after = memory_usage(-1)[0]

duckdb_results.append({

"engine": "DuckDB",

"question": "Group by area & location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in duckdb_results if p["query"] == "Group by area & location"]

Right here is the output.

// SQLite Efficiency

We calculate the typical transaction worth grouped by each area and location. Right here is the code.

def sqlite_q4():

return pd.read_sql_query("""

SELECT area, location, AVG(worth) AS avg_value

FROM bank_data

GROUP BY area, location

""", conn_sqlite)

mem_before = memory_usage(-1)[0]

begin = time.time()

sqlite_q4()

finish = time.time()

mem_after = memory_usage(-1)[0]

sqlite_results.append({

"engine": "SQLite",

"question": "Group by area & location",

"time": spherical(finish - begin, 4),

"reminiscence": spherical(mem_after - mem_before, 4)

})

[p for p in sqlite_results if p["query"] == "Group by area & location"]

Right here is the output.

// Total Efficiency Evaluation

Now let’s evaluate execution time and reminiscence utilization. Right here is the code.

import pandas as pd

import matplotlib.pyplot as plt

gdl_results = [r for r in (pandas_results + duckdb_results + sqlite_results)

if r["query"] == "Group by area & location"]

df_gdl = pd.DataFrame(gdl_results)

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

df_gdl.plot(x="engine", y="time", form="barh", ax=axes[0], legend=False,

title="Execution Time (s)")

df_gdl.plot(x="engine", y="reminiscence", form="barh", ax=axes[1], legend=False,

title="Reminiscence Utilization (MB)", colour="salmon")

plt.tight_layout()

plt.present()

Right here is the output.

DuckDB handles multi-field group-bys quickest with average reminiscence use, Pandas is slower with very excessive reminiscence utilization, and SQLite is the slowest with substantial reminiscence consumption.

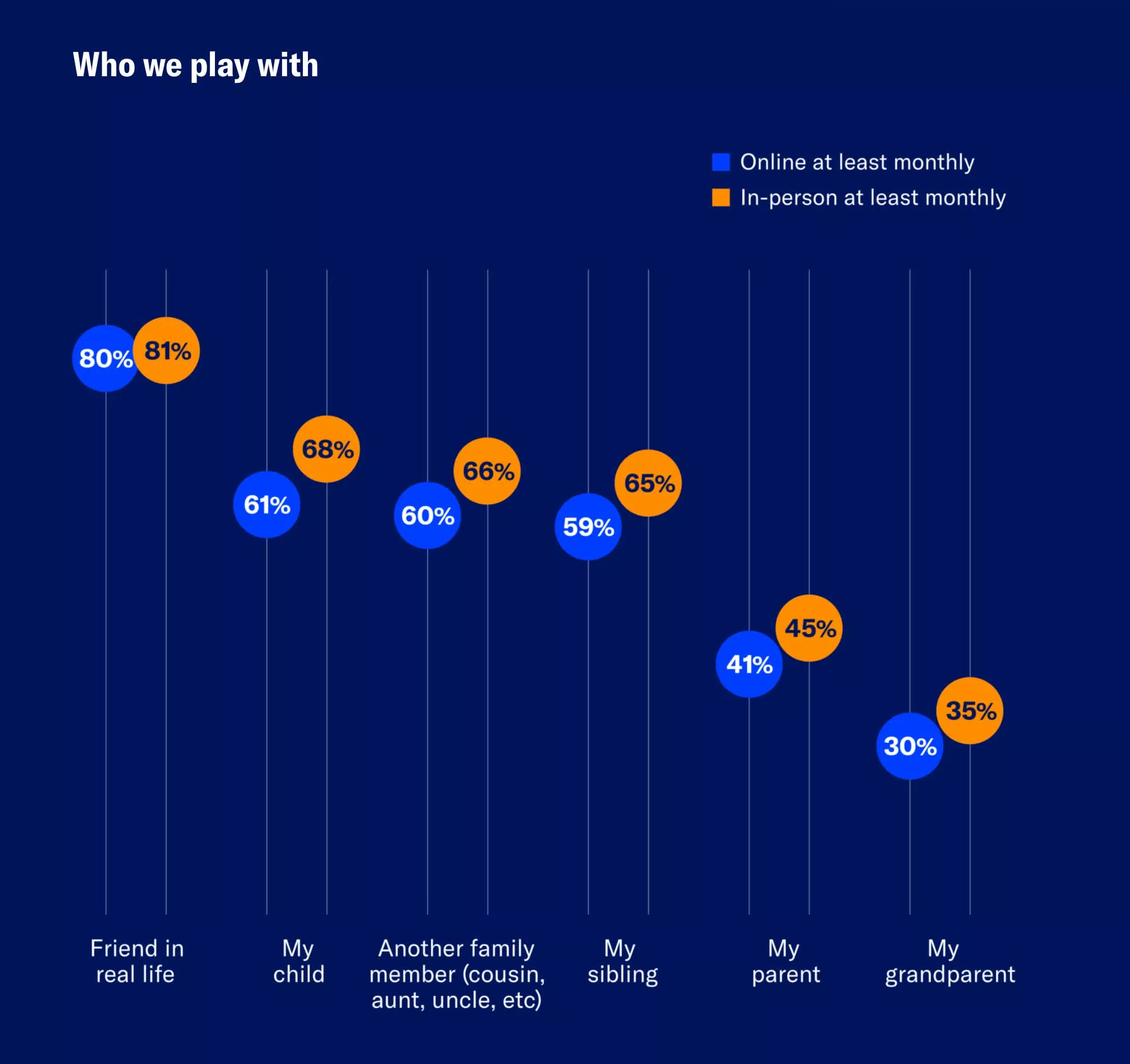

# Closing Comparability Throughout All Queries

We’ve in contrast these three engines towards one another by way of reminiscence and velocity. Let’s verify the execution time as soon as once more. Right here is the code.

import pandas as pd

import matplotlib.pyplot as plt

all_results = pd.DataFrame(pandas_results + duckdb_results + sqlite_results)

measure_order = [

"Total transaction value",

"Group by domain",

"Filter by location",

"Group by domain & location",

]

engine_colors = {"Pandas": "#1f77b4", "DuckDB": "#ff7f0e", "SQLite": "#2ca02c"}

fig, axes = plt.subplots(2, 2, figsize=(12, 8))

axes = axes.ravel()

for i, q in enumerate(measure_order):

d = all_results[all_results["query"] == q]

axes[i].barh(d["engine"], d["time"],

colour=[engine_colors[e] for e in d["engine"]])

for y, v in enumerate(d["time"]):

axes[i].textual content(v, y, f" {v:.3f}", va="middle")

axes[i].set_title(q, fontsize=10)

axes[i].set_xlabel("Seconds")

fig.suptitle("Per-Measure Comparability — Execution Time", fontsize=14)

plt.tight_layout()

plt.present()

Right here is the output.

This chart exhibits that DuckDB persistently maintains the bottom execution instances for nearly all queries, apart from the entire transaction worth the place Pandas edges it out; SQLite is the slowest by a large margin throughout the board. Let’s verify reminiscence subsequent. Right here is the code.

import pandas as pd

import matplotlib.pyplot as plt

all_results = pd.DataFrame(pandas_results + duckdb_results + sqlite_results)

measure_order = [

"Total transaction value",

"Group by domain",

"Filter by location",

"Group by domain & location",

]

engine_colors = {"Pandas": "#1f77b4", "DuckDB": "#ff7f0e", "SQLite": "#2ca02c"}

fig, axes = plt.subplots(2, 2, figsize=(12, 8))

axes = axes.ravel()

for i, q in enumerate(measure_order):

d = all_results[all_results["query"] == q]

axes[i].barh(d["engine"], d["memory"],

colour=[engine_colors[e] for e in d["engine"]])

for y, v in enumerate(d["memory"]):

axes[i].textual content(v, y, f" {v:.1f}", va="middle")

axes[i].set_title(q, fontsize=10)

axes[i].set_xlabel("MB")

fig.suptitle("Per-Measure Comparability — Reminiscence Utilization", fontsize=14)

plt.tight_layout()

plt.present()

Right here is the output.

This chart exhibits that SQLite swings between being the perfect and the worst in reminiscence utilization, Pandas is excessive with two greatest and two worst circumstances, whereas DuckDB stays persistently within the center throughout all queries. Consequently, DuckDB proves to be essentially the most balanced selection total, delivering persistently quick efficiency with average reminiscence utilization. Pandas exhibits extremes—typically the quickest, typically the heaviest—whereas SQLite struggles with velocity and sometimes finally ends up on the inefficient facet for reminiscence.

Nate Rosidi is an information scientist and in product technique. He is additionally an adjunct professor educating analytics, and is the founding father of StrataScratch, a platform serving to information scientists put together for his or her interviews with actual interview questions from high firms. Nate writes on the newest tendencies within the profession market, provides interview recommendation, shares information science initiatives, and covers all the things SQL.