A few weeks ago, I participated in Front End Study Hall. Front End Study Hall is an HTML and CSS focused meeting held on Zoom every two weeks. It is an opportunity to learn from one another as we share our common interest in these two building blocks of the Web. Some weeks, there’s more focused discussion while other weeks are more open ended and members will ask questions or bring up topics of interest.

Joe, the moderator of the group, usually starts the discussion with something he has been thinking about. In this particular meeting, he asked us about Sass. He asked us if we used it, if we liked it, and then to share our experience with it. I had planned to answer the question but the conversation drifted into another topic before I had the chance to answer. I saw it as an opportunity to write and to share some of the things that I’ve been thinking about recently.

Beginnings

I started using Sass in March 2012. I had been hearing about it through different things I read. I believe I heard Chris Coyier talk about it on his then-new podcast, ShopTalk Show. I had been interested in redesigning my personal website and I thought it would be a great chance to learn Sass. I bought an e-book version of Pragmatic Guide to Sass and then put what I was learning into practice as I built a new version of my website. The book suggested using Compass to process my Sass into CSS. I chose to use SCSS syntax instead of indented syntax because SCSS was similar to plain CSS. I thought it was important to stay close to the CSS syntax because I might not always have the chance to use Sass, and I wanted to continue to build my CSS skills.

It was very easy to get up and running with Sass. I used a GUI tool called Scout to run Compass. After some frustration trying to update Ruby on my computer, Scout gave me an environment to get up and going quickly. I didn’t even have to use the command line. I just pressed “Play” to tell my computer to watch my files. Later I learned how to use Compass through the command line. I liked the simplicity of that tool and wish that at least one of today’s build tools incorporated that same simplicity.

I enjoyed using Sass out of the gate. I liked that I was able to create reusable variables in my code. I could set up colors and typography and have consistency across my code. I had not planned on using nesting much but after I tried it, I was hooked. I really liked that I could write less code and manage all the relationships with nesting. It was great to be able to nest a media query inside a selector and not have to hunt for it in another place in my code.

Fast-forward a bit…

After my successful first experience using Sass in a personal project, I decided to start using it in my professional work. And I encouraged my teammates to embrace it. One of the things I liked most about Sass was that you could use as little or as much as you liked. I was still writing CSS but now had the superpower that the different helper functions in Sass enabled.

I didn’t get as deep into Sass as I could have. I used the Sass @extend rule more in the beginning. There are a lot of features that I didn’t take advantage of, like placeholder selectors and for loops. I’ve never been one to rely much on shortcuts. I use very few of the shortcuts on my Mac. I’ve dabbled in things like Emmet but tend to quickly abandon them because I’m just used to writing things out and haven’t developed the muscle memory of using shortcuts.

Is it time to un-Sass?

By my count, I’ve been using Sass for over 13 years. I chose Sass over Less.js because I thought it was a better direction to go at the time. And my bet paid off. That is one of the difficult things about working in the technical space. There are a lot of good tools but some end up rising to the top and others fall away. I’ve been pretty fortunate that most of the decisions I’ve made have gone the way that they have. All the agencies I’ve worked for have used Sass.

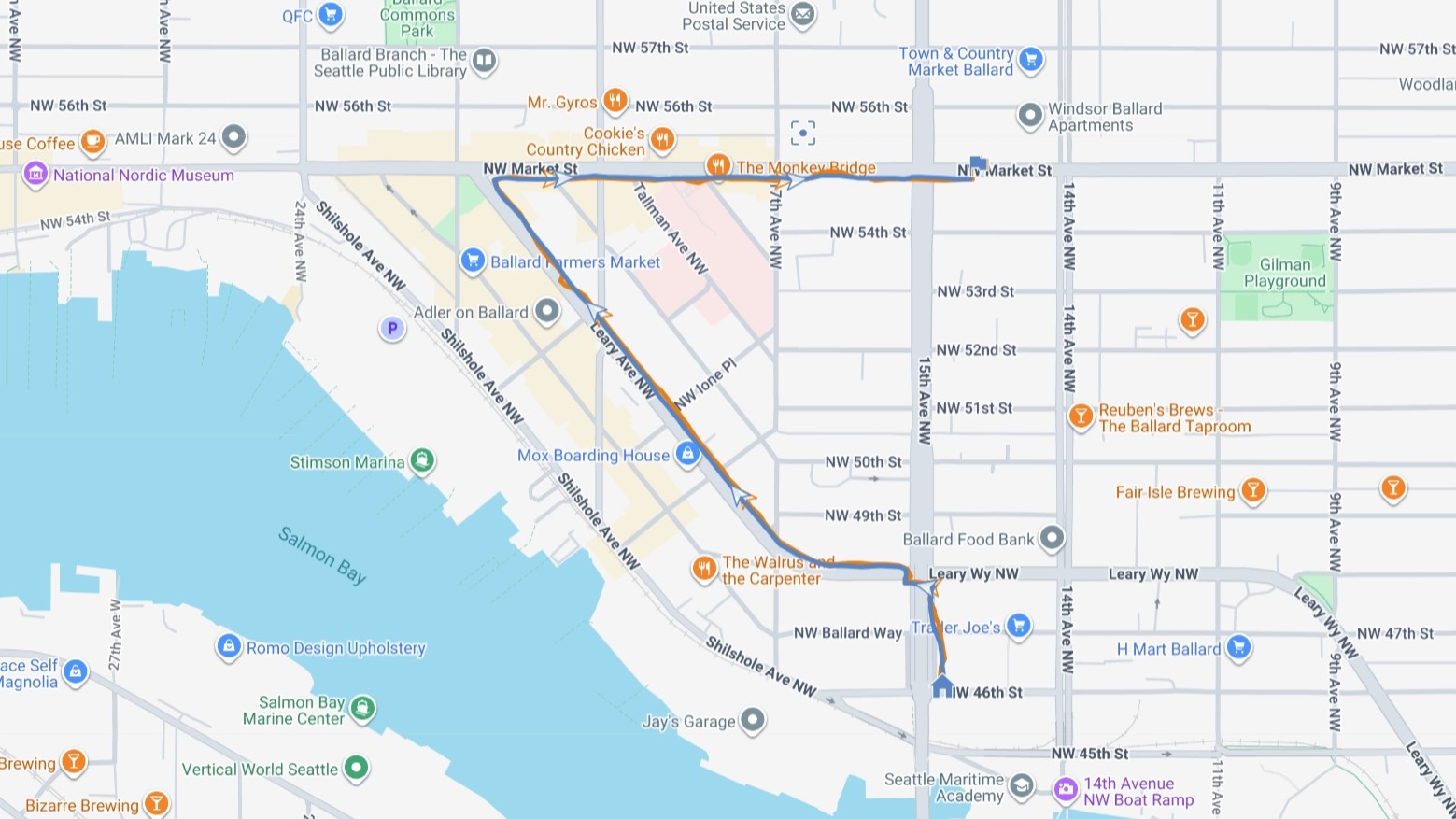

At the beginning of this year, I finally jumped into building a prototype for a personal project that I’ve been thinking about for years: my own memory keeper. One of the few things that I liked about Facebook was the Memories feature. I enjoyed visiting that page each day to remember what I had been doing on that specific day in years past. But I felt at times that Facebook was not giving me all of my memories. And my life doesn’t just happen on Facebook. I also wanted a way to view memories from other days besides just the current date.

As I started building my prototype, I wanted to keep it simple. I didn’t want to have to set up any build tools. I decided to write CSS without Sass.

Okay, so that was my intention. But I soon realized that I was using nesting. I had been working on it a couple of days before I realized it.

But my code was working. That’s when I realized that native nesting in CSS works much the same as nesting in Sass. I had followed the discussion about implementing nesting in native CSS. At one point, the syntax was going to be very different. To be honest, I lost track of where things had landed because I was continuing to use Sass. Native CSS nesting was not a big concern to me right then.

I was amazed when I realized that nesting works just the same way. And it was in that moment that I began to wonder:

Is this finally the time to un-Sass?

I want to give credit where credit is due. I’m borrowing the term “un-Sass” from Stu Robson, who was actually in the middle of writing a series called “Un-Sass’ing my CSS” as I started thinking about writing this post. I love the term “un-Sass” because it’s easy to remember and so spot on to describe what I’ve been thinking about.

Here’s what I’m considering:

Custom Properties

I knew that a lot of what I liked about Sass had started to make its way into native CSS. Custom properties were one of the first things. Custom properties are more powerful than Sass variables because you can assign a new value to a custom property in a media query or in a theming system, like light and dark modes. That’s something Sass is unable to do since variables become static once they’re compiled into vanilla CSS. You can also assign and update custom properties with JavaScript. Custom properties also work with inheritance and have a broader scope than Sass variables.

So, yeah. I found that not only was I already fairly familiar with the concept of variables, thanks to Sass, but the native CSS version was much more powerful.
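To make that difference concrete, here is a minimal sketch of a custom property being reassigned inside a media query. The property names (--surface and --text) and the color values are placeholders chosen for illustration, not taken from a real project.

```css
/* Hypothetical theme tokens; names and values are placeholders */
:root {
  --surface: #ffffff;
  --text: #1a1a1a;
}

/* Reassign the same properties when the user prefers a dark scheme */
@media (prefers-color-scheme: dark) {
  :root {
    --surface: #1a1a1a;
    --text: #f5f5f5;
  }
}

/* Rules that read the properties pick up whichever value currently applies */
body {
  background-color: var(--surface);
  color: var(--text);
}
```

A Sass variable is resolved once at compile time, so getting the same effect with Sass alone would mean repeating the declarations that use it inside the media query.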

I first used CSS Custom Properties when building two different themes (light and dark) for a client project. I also used them several times with JavaScript and liked how it gave me new possibilities for using CSS and JavaScript together. In my new job, we use custom properties extensively and I’ve completely switched over to using them in any new code that I write. I made use of custom properties extensively when I redesigned my personal site last year. I took advantage of them to create a light and dark theme and I used them with Utopia for typography and spacing utilities.

Nesting

When Sass introduced nesting, it simplified the writing of CSS code because you write style rules inside another style rule (usually a parent). That means you no longer had to write out the full descendant selector as a separate rule. You could also nest media queries, feature queries, and container queries.

This ability to group code together made it easier to see the relationships between parent and child selectors. It was also useful to have the media queries, container queries, or feature queries grouped inside those selectors rather than grouping all the media query rules together further down in the stylesheet.
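As a rough sketch of that grouping, the snippet below nests both a descendant rule and a media query inside one selector. The class name, values, and breakpoint are placeholders for illustration. It is written so that, as far as I can tell, it behaves the same in SCSS and in native CSS nesting in current browsers.

```css
/* Hypothetical component; class name and breakpoint are placeholders */
.card {
  padding: 1rem;

  /* Nested rule: matches links that are descendants of .card */
  a {
    color: blue;
  }

  /* Nested media query: applies to .card itself at wider viewports */
  @media (min-width: 40em) {
    padding: 2rem;
  }
}
```

Everything that affects .card sits in one place, so there is no separate block of media query rules to hunt for further down the stylesheet.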

I already mentioned that I stumbled across native CSS nesting when writing code for my memory keeper prototype. I was very excited that the specification extended what I already knew about nesting from Sass.

Two years ago, the nesting specification was going to require you to start a nested selector with the & symbol, which was different from how it worked in Sass.

/* Sass */

.footer {

a { color: blue }

}

/* 2023 */

.footer {

& a { color: blue } /* This was valid then */

}

But that changed sometime in the last two years and you no longer need the ampersand (&) symbol to write a nested selector. You can write it just as you had been writing it in Sass. I’m very happy about this change because it means native CSS nesting is just like I’ve been writing it in Sass.

/* 2025 */

.footer {

a { color: blue } /* Today's valid syntax */

}

There are some differences in the native implementation of nesting versus Sass. One difference is that you cannot create concatenated selectors with CSS. If you love BEM, then you probably made use of this feature in Sass. But it doesn’t work in native CSS.

.card {

&__title {}

&__body {}

&__footer {}

}

It doesn’t work because the & symbol is a live object in native CSS and it’s always treated as a separate selector. Don’t worry, if you don’t understand that, neither do I. The important thing is to understand the implication – you cannot concatenate selectors in native CSS nesting.
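If you do rely on BEM, one possible way around this in native CSS is to write each class name out in full. This is only a sketch that reuses the .card names from the example above. Be aware that the nested version produces descendant selectors such as .card .card__title, which is not the flat .card__title selector that Sass concatenation compiles to, so its specificity is higher and it only matches inside a .card element.

```css
/* Option 1: flat rules, matching what Sass concatenation compiles to */
.card__title {}
.card__body {}
.card__footer {}

/* Option 2: nested, but each selector now also requires a .card ancestor */
.card {
  .card__title {}
  .card__body {}
  .card__footer {}
}
```

Which option fits better probably depends on whether you value the visual grouping more than keeping specificity flat.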

If you are interested in reading a bit more about this, I’d suggest Kevin Powell’s “Native CSS Nesting vs. Sass Nesting” from 2023. Just know that the information about having to use the & symbol before an element selector in native CSS nesting is out of date.

I never took advantage of concatenated selectors in my Sass code so this will not affect my work. For me, nesting in native CSS is equivalent to how I was using it in Sass and is one of the reasons to consider un-Sassing.

My advice is to be careful with nesting. I’d suggest trying to keep your nested code to three levels at the most. Otherwise, you end up with very long selectors that may be more difficult to override elsewhere in your codebase. Keep it simple.

The color-mix() function

I liked using the Sass color module to lighten or darken a color. I’d use this most often with buttons where I wanted the hover color to be different. It was really easy to do with Sass. (I’m using $color to stand in for the color value).

background-color: darken($color, 20%);

The color-mix() function in native CSS allows me to do much the same thing and I’ve used it extensively in the past few months since learning about it from Chris Ferdinandi.

background-color: color-mix(in oklab, var(--color), #000000 20%);

Mixins and functions

I know that a lot of developers who use Sass make extensive use of mixins. In the past, I used a fair number of mixins. But a lot of the time, I was just pasting mixins from previous projects. And many times, I didn’t make as much use of them as I could because I’d just plain forget that I had them. They were always nice helper functions and allowed me to not have to remember things like clearfix or font smoothing. But those were also techniques that I found myself using less and less.

I also utilized functions in Sass and created several of my own, mostly to do some math on the fly. I mainly used them to convert pixels into ems because I liked being able to define my typography and spacing as relative and creating relationships in my code. I also had written a function to convert pixels to ems for custom media queries that didn’t fit within the breakpoints I normally used. I had learned that it was a much better practice to use ems in media queries so that layouts wouldn’t break when a user used page zoom.

Currently, we do not have a way to do mixins and functions in native CSS. But there is work being done to add that functionality. Geoff wrote about the CSS Functions and Mixins Module.

I did a little experiment for the use case I was using Sass functions for. I wanted to calculate em units from pixels in a custom media query. My standard practice is to set the body text size to 100% which equals 16 pixels by default. So, I wrote a calc() function to see if I could replicate what my Sass function provided me.

@media (min-width: calc((600 / 16) * 1em)) { /* rules for this breakpoint */ }

This custom media query is for a minimum width of 600px. This would work based on my setting the base font size to 100%. It could be modified.

Tired of tooling

Another reason to consider un-Sassing is that I’m simply tired of tooling. Tooling has gotten more and more complex over the years, and not necessarily with a better developer experience. From what I’ve observed, today’s tooling is predominantly geared towards JavaScript-first developers, or anyone using a framework like React. All I need is a tool that’s easy to set up and maintain. I don’t want to have to learn a complex system in order to do very simple tasks.

Another issue is dependencies. At my current job, I needed to add some new content and styles to an older WordPress site that had not been updated in several years. The site used Sass, and after a bit of digging, I discovered that the previous developer had used CodeKit to process the code. I renewed my CodeKit license so that I could add CSS to style the content I was adding. It took me a bit to get the settings correct because the settings in the repo weren’t saving the processed files to the correct location.

Once I finally got that set, I continued to encounter errors. Dart Sass, the engine that powers Sass, introduced changes to the syntax that broke the existing code. I started refactoring a large amount of code to update the site to the correct syntax, allowing me to write new code that would be processed.

I spent about 10 minutes attempting to refactor the older code, but was still getting errors. I just needed to add a few lines of CSS to style the new content I was adding to the site. So, I decided to go rogue and write the new CSS I needed directly in the WordPress template. I’ve had similar experiences with other legacy codebases, and that’s the kind of thing that can happen when you’re super reliant on third-party dependencies. You spend more time trying to refactor the Sass code so you can get to the point where you can add new code and have it compiled.

All of this has left me tired of tooling. I’m fortunate enough at my new position that the tooling is all set up through the Django CMS. But even with that system, I’ve run into issues. For example, I tried using a mixture of percentage and pixel values in a min() function inside minmax(), and Sass tried to evaluate it as one of its own math functions and reported that the units were incompatible.

grid-template-columns: repeat(auto-fill, minmax(min(200px, 100%), 1fr));

I needed to be able to escape and not have Sass try to evaluate the code as a math function:

grid-template-columns: repeat(auto-fill, minmax(unquote("min(200px, 100%)"), 1fr));

This isn’t a huge pain point but it was something that I had to take some time to investigate, time that I could have been using to write HTML or CSS. Thankfully, that’s something Ana Tudor has written about.

All of these different pain points lead me to be tired of having to mess with tooling. It’s another reason why I’ve considered un-Sassing.

Verdict

So what’s my verdict — is it time to un-Sass?

Please don’t hate me, but my conclusion is: it depends. Maybe not the definitive answer you were looking for.

But you probably are not surprised. If you have been working in web development even a short amount of time, you know that there are very few definitive ways of doing things. There are a lot of different approaches, and just because someone else solves it differently, doesn’t mean you’re right and they’re wrong (or vice versa). Most things come down to the project you’re working on, your audience, and a host of other factors.

For my personal site, yes, I would like to un-Sass. I want to kick the build process to the curb and eliminate those dependencies. I’d also like for other developers to be able to view source on my CSS. You can’t view source on Sass. And part of the reason I write on my site is to share solutions that might benefit others, and making code more accessible is a nice maintenance enhancement.

My personal site doesn’t have a very large codebase. I could probably easily un-Sass it in a couple of days or over a weekend.

But for larger sites, like the codebase I work with at my job, I wouldn’t suggest un-Sassing it. There is way too much code that would have to be refactored and I’m unable to justify the cost for that kind of effort. And honestly, it’s not something I feel motivated to tackle. It works just fine the way that it is. And Sass is still a very good tool to use. It’s not “breaking” anything.

Your project may be different and there might be more gains from un-Sassing than the project I work on. Again, it depends.

The way forward

It’s an exciting time to be a CSS developer. The language is continuing to evolve and mature. And every day, it’s incorporating new features that first came to us through other third-party tools such as Sass. It’s always a good idea to stop and re-evaluate your technology decisions to determine if they still hold up or if more modern approaches would be a better way forward.

That doesn’t mean we have to go back and “fix” all of our old projects. And it might not mean doing a complete overhaul. A lot of newer techniques can live side by side with the older ones. We have a mix of both Sass variables and CSS custom properties in our codebase. They don’t work against each other. The great thing about web technologies is that they build on each other and there’s usually backward compatibility.

Don’t be afraid to try new things. And don’t judge your past work based on what you know today. You did the best you could given your skill level, the constraints of the project, and the technologies you had available. You can start to incorporate newer techniques right alongside the old ones. Just build websites!