This submit is a set of extra technical notes forming a companion piece to the earlier submit on males’s time spent on home chores and complete fertility charges on the nation degree.

The target market for immediately’s submit is future-me, and anybody else equally within the fairly particular points it’s jotting down notes for. These points embrace:

- Drawing directed graphs with

ggdagwhich have totally different colored edges - Accessing the UN Sustainable Improvement Objectives (SDGs) database API

- Information choices on gender inequality

- Becoming the identical blended results mannequin with

lme4::lmer,mgcv::gammandmgcv::gam, evaluating outcomes and extracting group-level residuals - Hand-drawing prediction plots to indicate the outcomes of fashions with interactions and evaluating that to the

marginaleffectspackage deal - Diagnostics of a simplified model of the mannequin

- Modelling technique questions regarding researcher levels of freedom, use of splines and interplay results

My method to presenting this (each immediately, and in Half I) is totally different to my standard one, the place I attempt to make the submit itself a stand-alone, reproducible artefact with all of the code essential to provide the leads to the submit. That method simply didn’t work out on this case; the code was too lengthy and boring to incorporate in the principle weblog, there are too many various audiences to write down for, and I went down too many useless ends in exploring a few of the modelling choices. Even on this technical companion piece, this submit isn’t going to be totally reproducible, however simply have the snippets of code that greatest illustrate the purpose.

To make up for this, as ever, the total code to provide that weblog submit is on GitHub as a part of my total blog-source repository.

Directed graphs with totally different colored edges

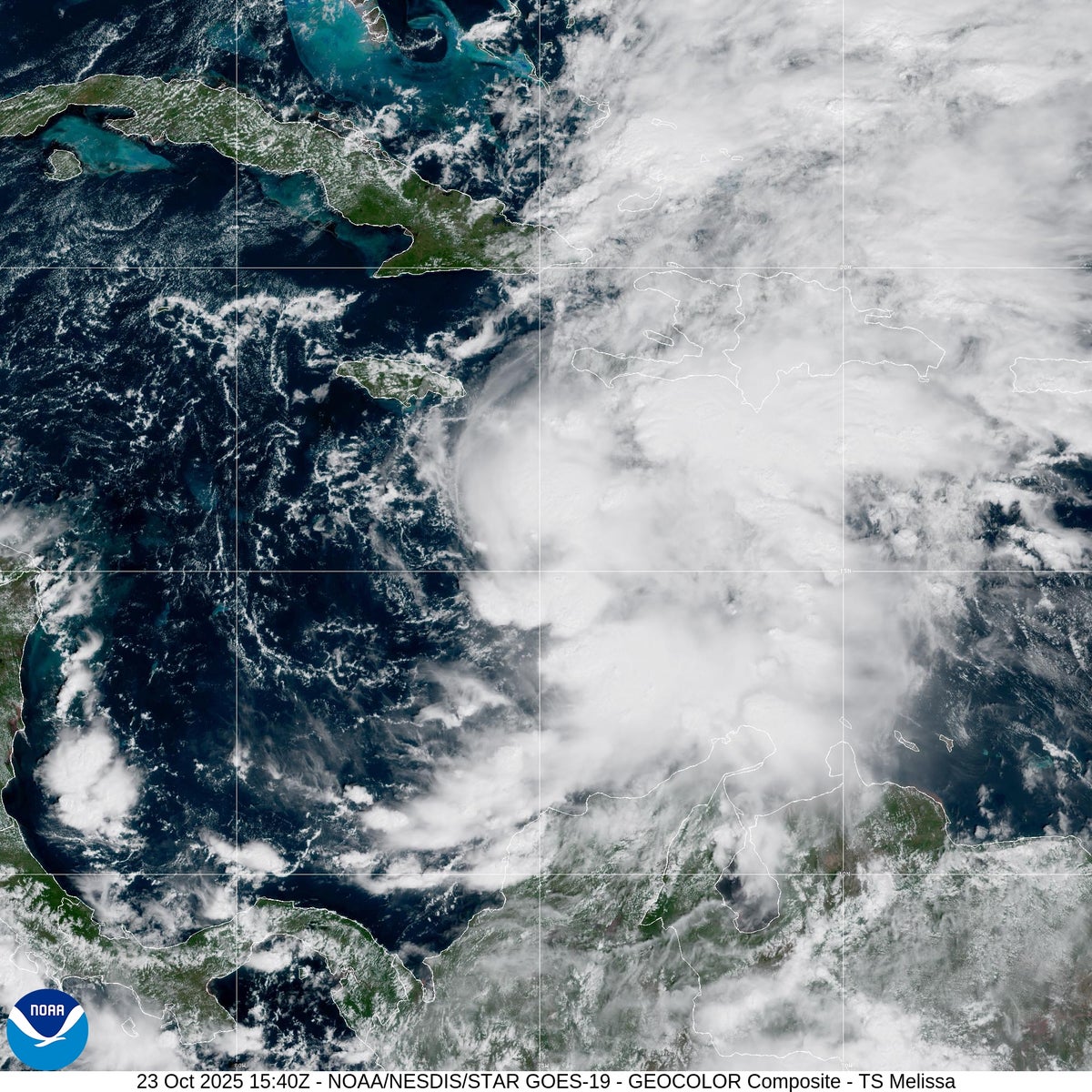

For the principle submit, I had to attract a few directed graphs with totally different colored edges connecting the nodes. Particularly, I wished to make use of a crimson line to indicate a destructive route impact, and blue to indicate a constructive, like this:

This was surprisingly fiddly to do and required a little bit of a hack. Particularly, you must explicitly name the tidy_dagitty operate, which turns a ggdag graph object of sophistication dagitty into an information body of sophistication tidy_dagitty; then you definitely add a column to that knowledge body which has the precise colors as its values, conditional on no matter algorithm it’s good to decide these colors. On this instance, I need it to be crimson when the road phase is join “opp” (Alternatives for girls and women) to “tfr” (Complete fertility fee), and blue in any other case.

So far as I might inform, you possibly can’t simply map a column of character or issue values to color and let the color scale match it, which might be the method extra according to the ggplot2 philosophy. As an alternative, you solely have the selection of an identification scale, which is why that column edge_type I add has to have the values “darkred” and “steelblue”. That’s the principle trick for doing this.

dg2 <- dagify(tfr ~ opp + hw ,

hw ~ opp,

labels = c(

"tfr" = "Complete fertility fee",

"hw" = "Males doing home tasks",

"opp" = "Alternatives fornwomen and women"

),

consequence = "tfr",

publicity = "hw"

) |>

# explicitly name this often hidden operate so we are able to color the sides:

ggdag::tidy_dagitty(seed = 124) |>

# color the sides. Have to specify identification of color right here, not use scale_

mutate(edge_type = ifelse(to == "tfr" & title == "opp", "darkred", "steelblue"))

# Draw the simplified causal graph

set.seed(124)

dg2 |>

ggplot(aes(x = x, y = y, xend = xend, yend =yend)) +

geom_dag_node(color = "gray") +

geom_dag_edges(aes(edge_colour = edge_type),

arrow_directed = grid::arrow(size = unit(12, "pt"), sort = "closed")) +

geom_dag_text_repel(aes(label = label), col = lab_col) +

theme_dag(base_family = "Roboto")Accessing the UN SDGs database

I couldn’t discover a easy manner of accessing the United Nations Statistics Division’s invaluable definitive database of the SDG indicators for all nations of the world. By which I imply, it has an API, however I didn’t see anybody who’d written a pleasant R package deal to conveniently work together with it. If anybody is aware of of somebody who’s finished this, or needs to do it themselves, please let me know.

So I needed to write my very own API request on my own, like an animal. I did this in what I’m positive is a suboptimal manner, but it surely works. From taking part in round with the UN’s API I discovered the curl command I wished to obtain the info:

curl -X POST --header 'Content material-Kind: utility/x-www-form-urlencoded' --header 'Settle for: utility/octet-stream' -d 'seriesCodes=SL_DOM_TSPD' 'https://unstats.un.org/sdgapi/v1/sdg/Collection/DataCSV'

Then I used features from Bob Rudis’ curlconverter R package deal to transform this to a request for the old style httr package deal to make use of. Because the feedback on this code say, I do know all that is outmoded; but it surely works for now.

#-----------downloading some SDG time use knowledge from the UN database-------------

# Be aware positive that is the easiest way to do that, it was clunky to work out,

# but it surely works. Somebody ought to (or have they already?) construct an R package deal.

#

# that is all httr, I perceive httr2 is the present factor now, however this nonetheless works

library(curlconverter)

library(httr)

request <- "curl -X POST --header 'Content material-Kind: utility/x-www-form-urlencoded' --header 'Settle for: utility/octet-stream' -d 'seriesCodes=SL_DOM_TSPD' 'https://unstats.un.org/sdgapi/v1/sdg/Collection/DataCSV'" |>

straighten() |>

make_req()

gender_txt <- content material(request[[1]](), as = "textual content")

gender <- read_csv(gender_txt) |>

clean_names()The tip outcomes is I desire a variable, from that SL_DOM_TPSD indicator (time spent on home chores and care work by intercourse and concrete/rural location) that may be represented like this:

There are vital knowledge wrangling challenges, although, particularly the totally different age classes utilized in every nation, the totally different years that surveys had been carried out, and the presence of a number of observations for some however not all nations.

The primary motive for together with this subsequent snippet is to remind myself of what was wanted to do to fiddle with these age classes. For instance, notice that some nations have values for a number of open ended classes like 3+ and 15+; we want a rule for deciding which of those is greatest for our desired constructed variable of males’s share of grownup home home and care work (on this case, 15+ is best than 3+, when each can be found for a rustic):

rely(gender, intercourse) # two classes, FEMALE and MALE - no TOTAL

rely(gender, age) # many various ages used for various nations

rely(gender, location) # there's ALLAREA, RURAL and URBAN

# needs to be just one indicator:

stopifnot(size(distinctive(gender$series_description)) == 1)

# which is

# Proportion of time spent on unpaid home chores and care work, by intercourse, age and placement (%)

time_chores <- gender |>

# we do not need rural and concrete, simply nation complete:

filter(location == "ALLAREA") |>

# we wish the ages like 15+, 12+ and so on, not these like 15-59 with an higher certain

filter(grepl("^[0-9]*+$", age)) |>

# however not the retirees, which some nations embrace. We wish the 15+, not 15+

# and 65+ individually:

filter(!age %in% c("65+", "85+", "60+")) |>

# calculate the male time spent as a proportion of complete (female and male) time spent

group_by(geo_area_name, time_period, age) |>

summarise(prop_male = worth[sex == 'MALE'] / sum(worth[sex == 'MALE'] + worth[sex == 'FEMALE'])) |>

group_by(geo_area_name) |>

# Label the most recent survey per nation. Be aware that any modelling must

# embrace a rustic random impact for the a number of observations per nation:

mutate(is_latest = ifelse(time_period == max(time_period), "Most up-to-date", "Earlier")) |>

# restrict to only the most effective age group, closest to adults, for every nation/time:

group_by(geo_area_name, time_period) |>

mutate(age = issue(age, ranges = c("15+", "16+", "18+", "12+", "10+", "6+", "5+", "3+"))) |>

prepare(geo_area_name, time_period, age) |>

slice(1) |>

ungroup() |>

mutate(iso3_code = countrycode(geo_area_name, origin = "nation.title.en", vacation spot = "iso3c"))Information on gender inequality

I spent fairly a little bit of time on the lookout for knowledge on gender inequality unbiased of the home tasks query. I wished one thing that resembled ladies’s and women’ alternatives in training and the financial system extra broadly. I had varied deadends in pursuing this. My first concept was some type of literacy measure—feminine literacy at some given age, or for adults total, as a ratio for equal male literacy. However the varied sources for this simply didn’t have sufficient observations.

The primary sources for literacy can be self-report in a census or presumably a social survey; or a standardised check at a given yr of education. After some fruitless time with SDGs, the World Financial institution’s World Improvement Indicators, and varied different sources, I concluded that neither of those appear to be available in a comparable foundation for sufficient years that matched with the year-country mixtures that I had time-use knowledge for.

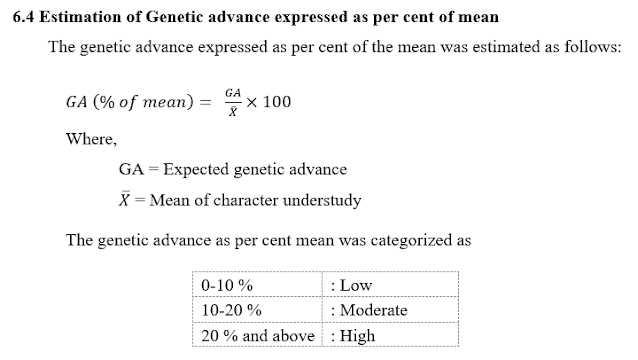

I ended up utilizing the Gender Inequality Index (GII) from the UNDP as an alternative. Now, this index is advanced and depends on a bunch of indicators which might be clearly going to be at the very least as laborious to measure as literacy—like degree of secondary training (wants admin knowledge or survey or census) and maternal mortality ratio (wants good civil registry, or survey knowledge as a much less passable different). Right here’s how the GII is constructed:

However the GII is offered for all country-year mixtures, which merely can’t be based mostly on direct observations of those variables. Clearly the UNDP do a bunch of modelling to interpolate all of the lacking values. I didn’t look into this however simply trusted the UNDP to have finished the most effective job attainable. It’s definitely very handy to get this measure of gender inequality for thus many nations (206 ‘nations’, however this contains some regional groupings), and for thus a few years.

There have been a number of methods to obtain this GII knowledge from the Human Improvement Experiences web site, but it surely seems the most effective is to obtain all of the Human Improvement Report knowledge for the most recent yr in a single, massive CSV:

# You'll be able to obtain all of the HDR elements (together with GII):

df <- "hdr25.csv"

if(!file.exists(df)){

obtain.file("https://hdr.undp.org/websites/default/information/2025_HDR/HDR25_Composite_indices_complete_time_series.csv",

destfile = df)

}

hdr <- read_csv(df)From there it’s simple knowledge wrangling to extract simply the GII knowledge and mix with my different datasets utilizing yr and the ISO three character codes for nations to affix by.

Becoming the identical blended results mannequin with lmer, gamm and gam

Specifying fashions

One of many issues I wished to kind on this submit was the close to equivalence of a few of the many various methods of specifying and becoming a blended results mannequin in R. There’s an excellent submit from Gavin Simpson on ‘Utilizing random results in GAMs with mgcv’ that I referred to repeatedly in making ready this.

Particularly, I wished to verify that these 4 fashions, set out under, are all very comparable. By which I truly imply the final three are equivalent statistically, however have other ways of being estimated and/or the components written down; and the primary is a statistically totally different mannequin when it comes to chance distributions and hyperlink features, however successfully very very comparable certainly to the opposite three:

# notice response variable is ltfr, outlined earlier as log(tfr):

model2 <- lmer(ltfr ~ gii + log(gdprppppc) * prop_male + (1 | country_fac),

knowledge = model_ready)

model7a <- gamm(tfr ~ gii + log(gdprppppc) * prop_male + s(country_fac, bs = 're'),

knowledge = model_ready, household = quasipoisson)

model7b <- gamm(tfr ~ gii + log(gdprppppc) * prop_male,

random = checklist(country_fac = ~ 1),

knowledge = model_ready, household = quasipoisson)

model7c <- gam(tfr ~ gii + log(gdprppppc) * prop_male + s(country_fac, bs = 're'),

knowledge = model_ready, household = quasipoisson, technique = "REML")These are:

model2– match withlme4::lmer, response variable is log-transformed first after which response is Gaussian, nation degree random impact is specified with1 | country_faccomponents notation. Be aware that I can’t useglmerbecuase it doesn’t enable household = quasipoisson.model7a– match withmgcv::gammwhich is an iterative course of the place the blended results are with withlmerand smoothing splines are finished withgam, iterate until converges. The tip end result incorporates the ultimate model of each thelmemannequin and thegammannequin. There’s no pre-transformation finished of the response variable as a result of we’re utilizing a generalized linear mannequin with quasipoisson household – that’s, variance is proportional to the imply, however not compelled to be equal to it. The random nation degree impact is specified withs(country_fac, bs="re")(restands for random results), which is handed on tolmethat treats it asFormulation: ~1 | country_fac.model7b– equivalent to 7a besides the random results are specified byrandom = checklist(country_fac = ~ 1)model7c– match withmgcv::gam, utilizing the identical mannequin specification asmodel7b. In contrast togamm, this does all of the work insidegamitself, there’s no iterating to the features of thelme4package deal. There are limitations imposed consequently – the random results can’t be correlated with eachother, and you’ll’t specify advanced error buildings (autocorrelation and so on) like you might withgammorlmer. However I don’t have a necessity for both of this stuff. Importantlymodel7chas to make use of restricted most probability as its estimation technique if we wish it to get equal outcomes to thelmer-based strategies.

Ultimately, none of those fashions had been referred to in my essential submit as a result of I went for an method based mostly purely on the usage of gam() and with varied non-linear results even within the base, null mannequin. Nevertheless it was a really helpful studying expertise for me to work out precisely what’s and isn’t totally different in a bunch of comparable mannequin specs.

Getting the identical fastened coefficients

Right here is code to extract the coefficients for the fastened results from these 4 basically equivalent fashions:

# Mounted coefficients of the 4 comparable linear fashions:

abstract(model7a$lme)$tTable |>

as.knowledge.body() |>

choose(mod7a = Worth) |>

mutate(mod7b = pull(as.knowledge.body(abstract(model7b$lme)$tTable), Worth)) |>

mutate(mod7c = pull(as.knowledge.body(abstract(model7c)$p.desk), Estimate)) |>

mutate(mod2 = fixef(model2)) |>

mutate(throughout(the place(is.numeric), spherical, digits = 2))which provides

mod7a mod7b mod7c mod2

X(Intercept) 3.55 3.55 3.53 3.65

Xgii 1.30 1.30 1.30 1.27

Xlog(gdprppppc) -0.34 -0.34 -0.34 -0.35

Xprop_male -8.17 -8.17 -8.12 -8.52

Xlog(gdprppppc):prop_male 0.87 0.87 0.86 0.90

The largest distinction within the coefficients is with model2, not shocking as a result of it has the largest distinction in its specification from the opposite three. The log transformation is finished earlier than modelling and the response is then handled as Gaussian, versus the quasipoisson hyperlink and variance features method of the opposite three.

Be aware that from the above snippet of code that not least of the variations between these fashions is the totally different strategies used to extract these fastened coefficients.

Getting the identical group degree random results

One other verify that these fashions are principally the identical was to check the country-level random results. For instance, is the “Oman” impact going to be the identical in every of those 4 fashions?

To reply this I first needed to work out the right way to extract the random results from the variations that used the s(country_fac, bs="re") notation to set the random results. It seems the easiest way to do that is with the gratia package deal by Gavin Simpson (once more), which has the smooth_coefs operate for this and associated functions. So this subsequent chunk of code extracts all these nation results and attracts a pairs plot of them.

tibble(

rf2 = ranef(model2)[[1]][, 1],

rf7a = smooth_coefs(model7a, "s(country_fac)"),

rf7b = ranef(model7b$lme)[, 1],

rf7c = smooth_coefs(model7c, "s(country_fac)")

) |>

ggpairs()Which provides this end result, with a satisfying excessive correlation within the nation results of the 4 totally different fashions:

Once more, model2 is a bit totally different from the opposite three, for a similar motive. I’m truly struck with how a lot we get near-identical leads to a mannequin that does the log transformation earlier than modelling to those who use a log hyperlink operate.

Displaying marginal results

In some unspecified time in the future when taking part in round with the other ways of specifying fashions I used to be having bother understanding a few of the output—some coefficients I assumed needs to be equivalent weren’t—and began constructing my very own, very primary predicted imply values, by multiplying numbers by the coefficients. The unique drawback went away after I found some mistake or different, however I repurposed what I’d finished into the code to provide this plot.

That is the form of plot I’d been imagining to make use of for instance the interplay of the male home tasks variable with GDP per capita. I’d been anticipating to see one thing like this as soon as I’d seen the route of the development swap round in excessive revenue nations in comparison with low revenue nations:

This plot was produced with this very hacked-together, brittle, operate that multiplies variables by their coefficients:

# Handbook manner of constructing a plot. Not even utilizing predict()

b <- fixef(model2)

#' Predict TFR given these coefficients

calc_tfr <- operate(prop_male, gdp, gii = imply(model_ready$gii)){

exp(b[1] +

b[2] * gii +

b[3] * log(gdp) +

b[4] * prop_male +

b[5] * prop_male * log(gdp))

}

# Dwelling-made prediction plot to indicate the interplay impact:

tibble(prop_male = rep(seq(from = 0.05, to = 0.45, size.out = 50), 3),

gdp = rep(c(3000, 10000, 80000), every = 50)) |>

mutate(tfr = c(calc_tfr(prop_male, gdp)),

gdp = greenback(gdp),

gdp = fct_relevel(gdp, "$3,000")) |>

ggplot(aes(x = prop_male, color = gdp, y = tfr)) +

geom_line(linewidth = 1.5) +

geom_point(knowledge = model_ready, color = "black") +

scale_x_continuous(label = %) +

labs(x = "Proportion of grownup home tasks finished by males",

y = "Predicted complete fertility fee",

title = "Interplay of revenue, home tasks finished by males on fertility fee",

subtitle = "Calculations finished for a hypothetical nation that in any other case has the common Gender Inequality Index",

color = "PPP GDP per capita",

caption = full_caption)It’s not one thing I’d use for actual as a result of I’d need to calculate the usual errors at every level too. In some unspecified time in the future, we are saying that’s what the varied package deal authors gave us the predict technique for the lessons they made holding fitted fashions. However even utilizing predict and making use of it to a rigorously chosen grid of values is made simple nowadays by the marginaleffects package deal, by Vincent Arel-Bundock, Noah Greifer and Andrew Heiss. I used to be utilizing this for the primary critical time on this train.

It seems that marginaleffects is nice for this function; simple to make use of to get you an almost adequate plot. Right here’s the outcomes of the marginaleffects::predict_plot()

It’s like my home-made plot, however higher in at the very least one respect; it has confidence intervals. There have been some hitches in scales and guides:

- the y axis actually wished to be labelled on the dimensions of the linear predictor, and ultimately I let it have its manner and add a secondardy axis on the proper hand aspect labelled on the unique scale

- controling the color scale non-trivial, as did labelling it with $ indicators. Ultimately I didn’t persist on this; there are methods to get

plot_predictionsto provide the knowledge fairly than draw a plot, however I didn’t sluggish issues down.

Right here’s the good and easy code to attract that; the “good and easy” bit partiuclarly referring to the straightforward manner you possibly can specify the variables’ values for instance. As soon as I’d realised how simple this was, I used it for the remainder of the weblog submit, together with the significantly extra advanced generalized additive fashions that had been my precise most popular fashions.

plot_predictions(model2, factors = 1, situation = checklist(

"prop_male",

"gdprppppc" = c(3000, 10000, 80000))) +

scale_y_continuous(trans = transform_exp(),

breaks = log(c(2, 4, 6)),

label = comma,

sec.axis = sec_axis(exp, title = "Complete Fertility Fee")) +

scale_x_continuous(label = %) +

labs(y = "log(complete fertility fee)",

color = "PPP GDP per capita",

fill = "PPP GDP per capita",

x = "Proportion of grownup home tasks finished by males",

title = "Interplay of revenue, home tasks finished by males on fertility fee",

subtitle = "Calculations finished for a hypothetical nation that in any other case has the common Gender Inequality Index",

caption = full_caption)

# notice the warning that this solely takes into consideration the uncertainty of

# fixed-effect parameters. That is in all probability okay? if we're all for

# the causality fairly than predicting new nations?Modelling selections and checks

Detoured into the backyard of forking paths?

Now, all that stuff within the earlier part is usually cosmetics. Eager readers could have observed that the mannequin described there may be not the one I utilized in the principle weblog in any respect. Specifically, it has a simple linear interplay of GDP per capita and male home tasks, whereas I in the end used a smoothed spline interplay as an alternative; I added a smoothed time impact; and made gender inequality index additionally a non-linear spline.

To refresh reminiscences, the 2 fashions contrasted in the principle weblog had been these two:

model4b <- gam(tfr ~ s(time_period) + s(gii, okay = 3) + s(log(gdprppppc)) + s(country_fac, bs = 're'),

knowledge = model_ready, household = quasipoisson, technique = "REML")

model6b <- gam(tfr ~ s(time_period) + s(gii, okay = 3) + s(log(gdprppppc), prop_male) + s(country_fac, bs = 're'),

knowledge = model_ready, household = quasipoisson, technique = "REML")I discovered model6b defined just about no additional deviance in comparison with model4b. The distinction between model6b and the model2 used above is all of the s() splines, and the nuisance impact of time_period being managed for.

In case you have a look at the plot within the earlier part displaying the marginal impact of elevated male home work from the linear mannequin with no splines, it seems to be vital. And the output appears to substantiate this—the abstract under reveals the male home work as negatively associated to fertility, and its interplay with GDP per capita as positively associated (so for increased GDP per capita AND increased ranges of male home tasks, complete fertility fee goes up). These are clearly vital at typical ranges. And that is the other of what I reported in my weblog, which was that there was no male home tasks affect on fertility after we management for gender inequality and GDP per capita.

> abstract(model2, cor = FALSE)

Linear blended mannequin match by REML ['lmerMod']

Formulation: ltfr ~ gii + log(gdprppppc) * prop_male + (1 | country_fac)

Information: model_ready

REML criterion at convergence: -149.8

Scaled residuals:

Min 1Q Median 3Q Max

-3.03513 -0.41571 0.07449 0.46057 2.51483

Random results:

Teams Title Variance Std.Dev.

country_fac (Intercept) 0.037475 0.1936

Residual 0.008724 0.0934

Variety of obs: 172, teams: country_fac, 79

Mounted results:

Estimate Std. Error t worth

(Intercept) 3.65360 0.60667 6.022

gii 1.26916 0.20080 6.320

log(gdprppppc) -0.34957 0.06275 -5.571

prop_male -8.51667 2.05957 -4.135

log(gdprppppc):prop_male 0.90124 0.21501 4.192

That is the place I come to an issue that truly worries me—did I am going down the backyard of forking paths? You might accuse me of constructing the mannequin extra advanced—by including a time impact, non-linear gender inequality impact and GDP per capita results—till I obtained the specified end result, of no remaining deviance defined by male time spent on home tasks.

My defence towards this must be that I at all times meant so as to add in these non-linear results, and that I’d solely stopped to specify these fashions with out them as a result of I wished to take a look at the lmer v gam v gamm specification query. And that is true. Nevertheless it’s additionally true that I had anticipated that even with out non-linear splines added, the male time on home tasks can be non-significant; an expectation that turned out to be mistaken.

The truth is, earlier than happening the lmer v gam v gamm rabbit gap, I had began with a model0, specified by this:

# A primary null mannequin:

model0 <- lmer(ltfr ~ gii + log(gdprppppc) + (1 | country_fac),

knowledge = model_ready)I had then finished this diagnostic verify, and a plot of the residual fertility fee towards male home tasks (backside proper panel within the plot under):

This appeared (visually) like solely random noise remained, and I in all probability obtained careless in assuming that splines and stuff, whereas the place I wished to move, weren’t important, therefore it was okay to suit these linear fashions first. It’s simply that when it turned out that there was an obvious “vital” impact from doing this, I used to be left with a grimy style in my mouth that I used to be attempting becoming fashions till I discovered the one which suited my expectation (of no male home tasks impact after controlling for gender inequality and GDP per capita).

Fortunately, that is solely a weblog; no-one expects me to pre-register my analytical plan for it; and anyway I’m satisfied of the substance of the ultimate discovering; and I actually do bear in mind intending to make use of the variations with splines. However I’m guessing lots of researchers really feel this once they train their researcher levels of freedom.

Spline v tensor product smooths

Once I posted the principle weblog, Stephen Wild made the next remark: “I’m interested in s(log(gdprppppc), prop_male) in your mannequin fairly than ti(log(gdprppppc), prop_male)”. The truth is, I hadn’t thought-about this feature, and I ought to have. So after this remark I went again and match a brand new mannequin with tensor product smooths. Be aware that it’s additionally essential so as to add the ti(prop_male) + ti(log(gdprppppc) single phrases explicitly on this case, not like when utilizing s().

model6c <- gam(tfr ~ s(time_period) + s(gii, okay = 3) +

ti(log(gdprppppc)) + ti(prop_male) + ti(log(gdprppppc), prop_male) +

s(country_fac, bs = 're'),

knowledge = model_ready, household = quasipoisson, technique = "REML")That manner of specifying the person phrases with eg ti(prop_male) isn’t a kind of urged by Gavin Simpson right here; I believe it’s okay although. If not I’ll likely have a future weblog attempting to get this straight in my head.

Now, ideally I might have a digression right here the place I clarify the theoretical and sensible variations between spline and tensor product smooths and when to make use of every, however I’m not feeling as much as that. One factor I do know is {that a} tensor product clean is invariant to modifications within the authentic scale of the variable, which might make it extra strong in case you’re involved about totally different scales of your totally different variables; this appears to me the principle level emphasised when this concern is mentioned in Gavin Simpson’s definitive ebook on generalized additive fashions in R

Anyway, on this specific case there’s little to selected from, as seen on this thrown collectively assortment of plots. These within the high present the GDP per capita and male home tasks results when utilizing a tensor product clean; these on the backside are the identical when utilizing a spline:

My hunch is that the tensor product clean is a bit higher right here, however I received’t change historical past by modifying the principle weblog to make use of it. The addition of the male_prop variable nonetheless isn’t statistically ‘vital’; whereas we are able to see that for increased revenue nations there’s a little bit of an upwards slope (within the high proper panel of the gathering of plots above), it’s not explaining a fabric quantity of additional materials.

> abstract(model6c)

Household: quasipoisson

Hyperlink operate: log

Formulation:

tfr ~ s(time_period) + s(gii, okay = 3) + ti(log(gdprppppc)) + ti(prop_male) +

ti(log(gdprppppc), prop_male) + s(country_fac, bs = "re")

Parametric coefficients:

Estimate Std. Error t worth Pr(>|t|)

(Intercept) 0.68636 0.02561 26.8 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Approximate significance of clean phrases:

edf Ref.df F p-value

s(time_period) 2.742 3.357 5.425 0.001134 **

s(gii) 1.342 1.458 33.437 < 2e-16 ***

ti(log(gdprppppc)) 3.485 3.652 6.563 0.000112 ***

ti(prop_male) 1.000 1.001 0.418 0.519799

ti(log(gdprppppc),prop_male) 1.761 1.940 2.119 0.175333

s(country_fac) 64.879 78.000 7.850 < 2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

R-sq.(adj) = 0.964 Deviance defined = 97.7%

-REML = -176.7 Scale est. = 0.013138 n = 172

> anova(model6c, model4c)

Evaluation of Deviance Desk

Mannequin 1: tfr ~ s(time_period) + s(gii, okay = 3) + ti(log(gdprppppc)) + ti(prop_male) +

ti(log(gdprppppc), prop_male) + s(country_fac, bs = "re")

Mannequin 2: tfr ~ s(time_period) + s(gii, okay = 3) + ti(log(gdprppppc)) + s(country_fac,

bs = "re")

Resid. Df Resid. Dev Df Deviance F Pr(>F)

1 82.875 1.2599

2 86.013 1.2055 -3.1375 0.054375

There’s negligible additional deviance defined by the mannequin with the prop_male and its GDP interplay, in comparison with the easier mannequin with out them.

Truly, I don’t actually have a lot so as to add right here.

I might have talked extra about a few of this, eg that unexplained okay=3 within the spline for gender inequality, and my interested by diagnostic plots for GAMs (and my rushed, imperfect implementation of it); however in some unspecified time in the future there are diminishing marginal returns. I’ve coated off the principle issues right here; largely issues that I believe future-me will need to consult with subsequent time I’m doing comparable issues.

For some individuals, it’s in all probability value testing the full script of authentic code if there’s further factors of curiosity or questions.