Artificial intelligence (AI) is a popular topic in the media these days, and ChatGPT is, perhaps, the most well-known AI tool. I recently tweeted that I had written a Stata command called chatgpt for myself that runs ChatGPT. I promised to explain how I did it, so here is the explanation.

Review of Stata/Python integration

My chatgpt command uses a combination of Stata and Python code. You may want to read my previous blog posts if you are not familiar with using Stata and Python together.

Using Python to interact with ChatGPT

ChatGPT was created by OpenAI, and we will be using the OpenAI API to communicate with ChatGPT. You will need an OpenAI user account and your own OpenAI API key to use the code below. You will also need to install the Python package openai. You can type shell pip install openai in the Stata Command window if you are using Python. You may need to use a different method to install the openai package if you are using Python as part of a platform such as Anaconda.

Let’s begin by writing some Python code to import the openai package, define a function named chatgpt(), and pass our API key to the OpenAI server. I have typed comments using a green font to indicate the purpose of each subsequent line of code. Note that the function is defined using tabs. The function definition begins with def chatgpt() and ends when the section of tabbed code ends.

python:
# Import the OpenAI package
import openai
# Define a function named chatgpt()
def chatgpt():
    # Pass my API key to the OpenAI server
    openai.api_key = "PASTE YOUR API KEY HERE"
end
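
Pasting the key directly into the code is fine for a quick experiment, but it is easy to share a key by accident that way. As a minimal sketch, assuming you have stored your key in an environment variable, you could read the key at run time instead. The variable name OPENAI_API_KEY is an assumption on my part, and get_api_key() is a hypothetical helper that is not part of the openai package.

```python
import os

def get_api_key(varname="OPENAI_API_KEY"):
    """Return the API key stored in an environment variable (hypothetical helper)."""
    # Read the variable, treating "not set" the same as "set to blanks"
    key = os.environ.get(varname, "").strip()
    if not key:
        raise RuntimeError(f"Environment variable {varname} is not set")
    return key
```

Inside chatgpt(), the assignment would then read openai.api_key = get_api_key().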

Next, let’s add some code to ask ChatGPT to write a haiku about Stata. We will store our query to inputtext. Then we will send the query through the API to ChatGPT using the ChatCompletion.create() method and store ChatGPT’s reply to outputtext. The term “method” is Python jargon for a function that belongs to an object or class, and the ChatCompletion.create() method requires two arguments. The model argument specifies that we will use the “gpt-3.5-turbo” model, and the messages argument specifies that we are submitting our query in our role as a “user” and that the content of our query is stored in inputtext. The text of ChatGPT’s reply is stored in outputtext.choices[0].message.content, and the last line of code in our chatgpt() function prints the reply to the screen.

python:
# Import the OpenAI package
import openai
# Define a function named chatgpt()
def chatgpt():
    # Pass my API key to the OpenAI server
    openai.api_key = "PASTE YOUR API KEY HERE"
    # Define an input string
    inputtext = "Write a haiku about Stata"
    # Send the inputtext through the API to ChatGPT
    # and store the result to outputtext
    outputtext = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": inputtext}]
    )
    # Display the result
    print(outputtext.choices[0].message.content)
end

Now we can run our function in Python and view the result.

. python:
------------------------------ python (type end to exit) -----------------------
>>> chatgpt()
Data ready, Stata
Regression, plots, and graphs
Insights we unearth
>>> end
--------------------------------------------------------------------------------

It worked! And this was much easier than I would have guessed when we began. Keep in mind that we are just using ChatGPT for fun. You should understand the copyright implications and double-check the content before using ChatGPT for serious work.
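
To make the structure of the reply concrete, here is a small sketch that runs without contacting OpenAI. The fake_response object is a hand-built stand-in that I am assuming mirrors the choices[0].message.content layout described above. The names build_messages() and extract_reply() are hypothetical helpers, not part of the openai package.

```python
from types import SimpleNamespace

def build_messages(inputtext):
    """Wrap a query in the list-of-dictionaries format used by the messages argument."""
    return [{"role": "user", "content": inputtext}]

def extract_reply(response):
    """Return the text of the first reply in a response-shaped object."""
    return response.choices[0].message.content

# Hand-built stand-in for a response; a real one comes back from the API
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            message=SimpleNamespace(role="assistant", content="Insights we unearth")
        )
    ]
)

print(build_messages("Write a haiku about Stata"))
print(extract_reply(fake_response))
```

The index [0] selects the first element of the choices list, which is why the reply text sits three levels down from outputtext.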

Calling the Python function from Stata

The easiest way to use our new Python function with Stata is to simply type python: chatgpt(). Note that ChatGPT returns a different reply each time we use our function.

. python: chatgpt()
Data is sacred
Stata, the guiding compass
Insights, clear and true

But I would like to create a Stata command to run my chatgpt() function in Python. I can create a Stata command named chatgpt by typing program chatgpt to begin my command and typing end to end the command.

program chatgpt
    python: chatgpt()
end

For technical reasons, our new chatgpt command won’t work yet. We can make it work by saving our Stata code and Python code in a file named chatgpt.ado. Note that I have made two changes to our code in the code block below. First, I removed the comments to save space. Second, because we have already defined the Python function chatgpt() and the Stata program chatgpt, I have typed python clear and program drop chatgpt to remove them from Stata’s memory.

chatgpt.ado version 1

python clear
program drop chatgpt
program chatgpt
    version 18
    python: chatgpt()
end

python:
import openai
def chatgpt():
    openai.api_key = "PASTE YOUR API KEY HERE"
    inputtext = "Write a haiku about Stata"
    outputtext = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": inputtext}]
    )
    print(outputtext.choices[0].message.content)
end

Let’s run our code to redefine our Stata command chatgpt and our Python function chatgpt() and then type chatgpt.

. chatgpt
Statistical tool
Stata, analyzing data
Insights brought to light

It worked! We successfully wrote a Stata command that calls a Python function that sends our query through the OpenAI API to ChatGPT, retrieves the reply from ChatGPT through the API, and prints the reply to the screen.
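
One caveat for readers with a recent installation: the openai.ChatCompletion.create() call used in this post belongs to versions of the openai package before 1.0. As far as I know, versions 1.0 and later route the same request through a client object instead. The sketch below assumes that newer interface. The name ask_chatgpt() is a hypothetical helper, and the client is passed in as an argument so that the function can be tried with any object that has the same shape.

```python
def ask_chatgpt(client, inputtext, model="gpt-3.5-turbo"):
    """Send one user message through a client object and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": inputtext}],
    )
    return response.choices[0].message.content

# With openai 1.0 or later installed, usage would look something like this
# (left as comments because it needs a real API key and a network connection):
#     from openai import OpenAI
#     client = OpenAI(api_key="PASTE YOUR API KEY HERE")
#     print(ask_chatgpt(client, "Write a haiku about Stata"))
```

If you would rather keep the code in this post unchanged, installing an openai version earlier than 1.0 is the other option.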

Passing queries from Stata to Python

That was fun, but, sooner or later, we may grow tired of reading haikus about Stata and wish to submit a new query to ChatGPT. It would be nice if we could type our query directly into our Stata command. To do this, we will need to allow our Stata command to accept an input string and then pass that string to our Python function.

We can allow our chatgpt command to accept a string input by adding the line args InputText. Then we can type chatgpt "query", and the contents of query will be stored in the local macro InputText. I have added this line to the code block below using a red font.

Next, we will need to pass the local macro InputText from Stata to our Python function. Stata’s Function Interface (SFI) makes it easy to pass information back and forth between Stata and Python. First, we can type from sfi import Macro to import the Macro class from SFI. Then we can type inputtext = Macro.getLocal('InputText') to use the getLocal() method to pass the Stata local macro InputText to the Python variable inputtext. I have again added these lines of code with a red font in the code block below so they are easy to see.

chatgpt.ado version 2

python clear
capture program drop chatgpt
program chatgpt
    version 18
    args InputText
    python: chatgpt()
end

python:
import openai
from sfi import Macro
def chatgpt():
    openai.api_key = "PASTE YOUR API KEY HERE"
    inputtext = Macro.getLocal('InputText')
    outputtext = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": inputtext}]
    )
    print(outputtext.choices[0].message.content)
end

Let’s run our updated code to redefine our Stata command chatgpt and our Python function chatgpt() and then try the new version of our chatgpt command.

. chatgpt "Write a limerick about Stata"
There once was a software named Stata,
For data analysis, it was the mantra.
With graphs and regressions,
And numerous expressions,
It made statistics feel like a tada!

This time I asked ChatGPT to write a limerick about Stata, and it worked! Note that the double quotes around the query are not optional. Without them, args would treat each word as a separate argument and store only the first word in InputText.
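
The sfi module exists only inside Stata’s embedded Python, so the line from sfi import Macro fails in an ordinary Python session. If you want to experiment with the getLocal() step outside Stata, one option is a small stand-in class. The sketch below is an illustration under stated assumptions: the fallback Macro class and the read_query() helper are hypothetical, and the fallback imitates only the two Macro methods used in this post.

```python
try:
    # Available only when Python is run from inside Stata
    from sfi import Macro
except ImportError:
    class Macro:
        """Hypothetical stand-in for sfi.Macro, for use outside Stata only."""
        _locals = {}

        @classmethod
        def getLocal(cls, name):
            # Assumption: an undefined macro is treated as an empty string
            return cls._locals.get(name, "")

        @classmethod
        def setLocal(cls, name, value):
            cls._locals[name] = value

def read_query(name="InputText"):
    """Fetch the query from a local macro and refuse to continue if it is empty."""
    query = Macro.getLocal(name).strip()
    if not query:
        raise ValueError(f"Local macro {name} is empty; nothing to send")
    return query
```

Checking for an empty query before calling the API is a design choice on my part; it avoids sending a blank request when chatgpt is typed without an argument.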

Pass the response from Python to Stata

At some point, we may wish to use ChatGPT’s reply after we run our command. Specifically, we may wish to store ChatGPT’s reply to a local macro so that we don’t have to copy and paste it from the screen. Again, Stata’s SFI interface makes this an easy task.

Let’s modify our Python function first. Recall that the text of ChatGPT’s reply is stored in outputtext.choices[0].message.content. We can use the setLocal() method in SFI’s Macro class to store the reply to a Stata local macro named OutputText. I have again typed that line of code with a red font to make it easy to see in the code block below.

Next, we need to make two changes to the definition of our Stata command. First, we need to add the option rclass to our program definition to allow our command to return information after it terminates. Second, we need to add the line return local OutputText = `"`OutputText'"' to return the contents of the local macro OutputText to the user. Note that I have used compound double quotes around the local macro because ChatGPT’s reply may contain double quotes. Again, I have typed these changes with a red font to make them easy to see in the code block below.

chatgpt.ado version 3

python clear
capture program drop chatgpt
program chatgpt, rclass
    version 18
    args InputText
    python: chatgpt()
    return local OutputText = `"`OutputText'"'
end

python:
import openai
from sfi import Macro
def chatgpt():
    openai.api_key = "PASTE YOUR API KEY HERE"
    inputtext = Macro.getLocal('InputText')
    outputtext = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": inputtext}]
    )
    print(outputtext.choices[0].message.content)
    Macro.setLocal("OutputText", outputtext.choices[0].message.content)
end

Let’s run our ado-file to redefine our Stata command and Python function and then type a new chatgpt command.

. chatgpt "Write a haiku about Stata"
Data, Stata's realm,
Numbers dance, insights unfold,
Analysis blooms.

Now we can type return list and see that ChatGPT’s reply has been stored to the macro r(OutputText).

. return list

macros:
      r(OutputText) : "Data, Stata's realm, Numbers dance, insights unfo..."

Write ChatGPT’s reply to a file

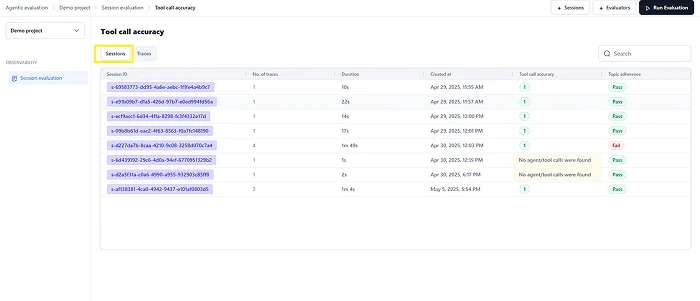

I like having access to ChatGPT’s reply in a local macro, but I noticed that some of the formatting, such as line breaks, has been lost. This would be easy to correct for a short reply, but it could be time consuming for a long reply. One solution to this problem would be to write ChatGPT’s reply to a file.

I have added three lines of code to the Python function in the code block below using a red font. The first line uses the open() function to create an output file named chatgpt_output.txt. The w tells open() to overwrite the file rather than to append new text. The second line uses the write() method to write ChatGPT’s reply to the file. And the third line uses the close() method to close the file.

chatgpt.ado version 4

python clear
capture program drop chatgpt
program chatgpt, rclass
    version 18
    args InputText
    python: chatgpt()
    return local OutputText = `"`OutputText'"'
end

python:
import openai
from sfi import Macro
def chatgpt():
    openai.api_key = "PASTE YOUR API KEY HERE"
    inputtext = Macro.getLocal('InputText')
    outputtext = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": inputtext}]
    )
    print(outputtext.choices[0].message.content)
    Macro.setLocal("OutputText", outputtext.choices[0].message.content)
    f = open("chatgpt_output.txt", "w")
    f.write(outputtext.choices[0].message.content)
    f.close()
end

Again, we must run our ado-file to redefine our Stata command and Python function. Then we can type a new chatgpt command to submit a new query.

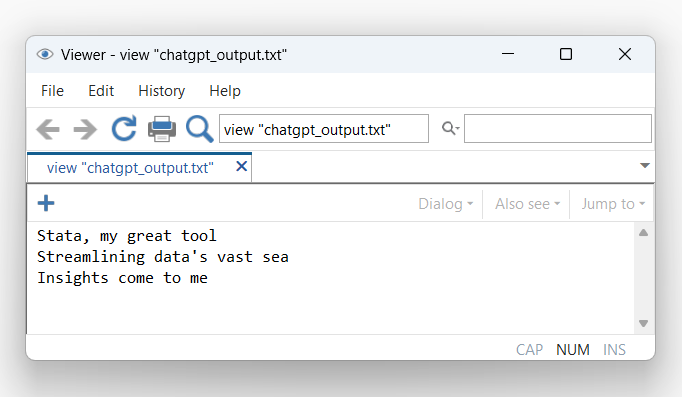

. chatgpt "Write a haiku about Stata"

Stata, my useful gizmo

Streamlining information's huge sea

Insights come to me

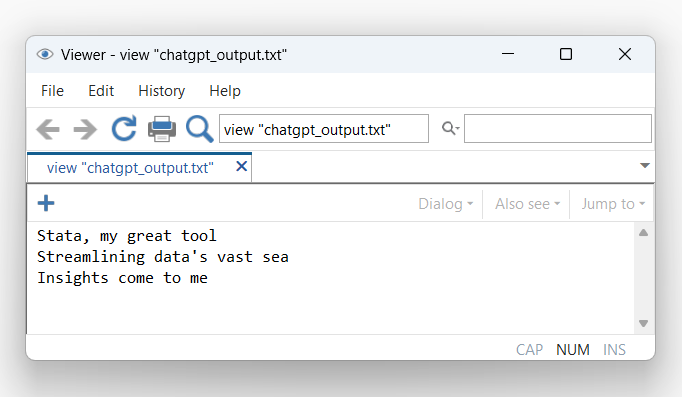

Now we can view the output file to verify that it contains ChatGPT’s reply.

. view "chatgpt_output.txt"
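
The open(), write(), close() sequence can also be written with a with block, which closes the file even if the write fails. This is an alternative sketch rather than the code used in the command above. The name save_reply() is a hypothetical helper, and the explicit encoding="utf-8" is my assumption about what is convenient for replies that contain non-ASCII characters.

```python
def save_reply(text, filename="chatgpt_output.txt"):
    """Overwrite filename with text, keeping its line breaks intact."""
    # "w" overwrites; the with block closes the file automatically
    with open(filename, "w", encoding="utf-8") as f:
        f.write(text)

# A made-up three-line reply standing in for ChatGPT's answer
reply = "First line\nSecond line\nThird line"
save_reply(reply)

# Read the file back to confirm the line breaks survived
with open("chatgpt_output.txt", encoding="utf-8") as f:
    print(f.read())
```

Inside chatgpt(), the three file-handling lines could then be replaced by a single call such as save_reply(outputtext.choices[0].message.content).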

We need to remove the line python clear from our .ado file once we finish modifying our program. Leaving it in could cause problems with other Python code.

Conclusion

So that’s how I wrote the little chatgpt command that I posted on Twitter. It has very limited capabilities, but I wrote it only out of curiosity while I was eating lunch one day. I have tried some fun experiments such as asking ChatGPT to write Stata code to do simulated power calculations for different scenarios. The results are mixed at best, so I don’t need to dust off my CV yet. But the ChatGPT API can do far more than what I have demonstrated here, and you can learn more by reading the ChatGPT API Reference. And Python integration with Stata makes it relatively easy to create user-friendly Stata commands that utilize these powerful tools.

Tonny Ouma is an Applied AI Specialist at AWS, specializing in generative AI and machine learning. As part of the Applied AI team, Tonny helps internal teams and AWS customers incorporate modern AI methods into their products. In his spare time, Tonny enjoys riding sports bikes, golfing, and entertaining family and friends with his mixology skills.

Simon Handley, PhD, is a Senior AI/ML Solutions Architect in the Global Healthcare and Life Sciences team at Amazon Web Services. He has more than 25 years’ experience in biotechnology and machine learning and is passionate about helping customers solve their machine learning and life sciences challenges. In his spare time, he enjoys horseback riding and playing ice hockey.