Introduction

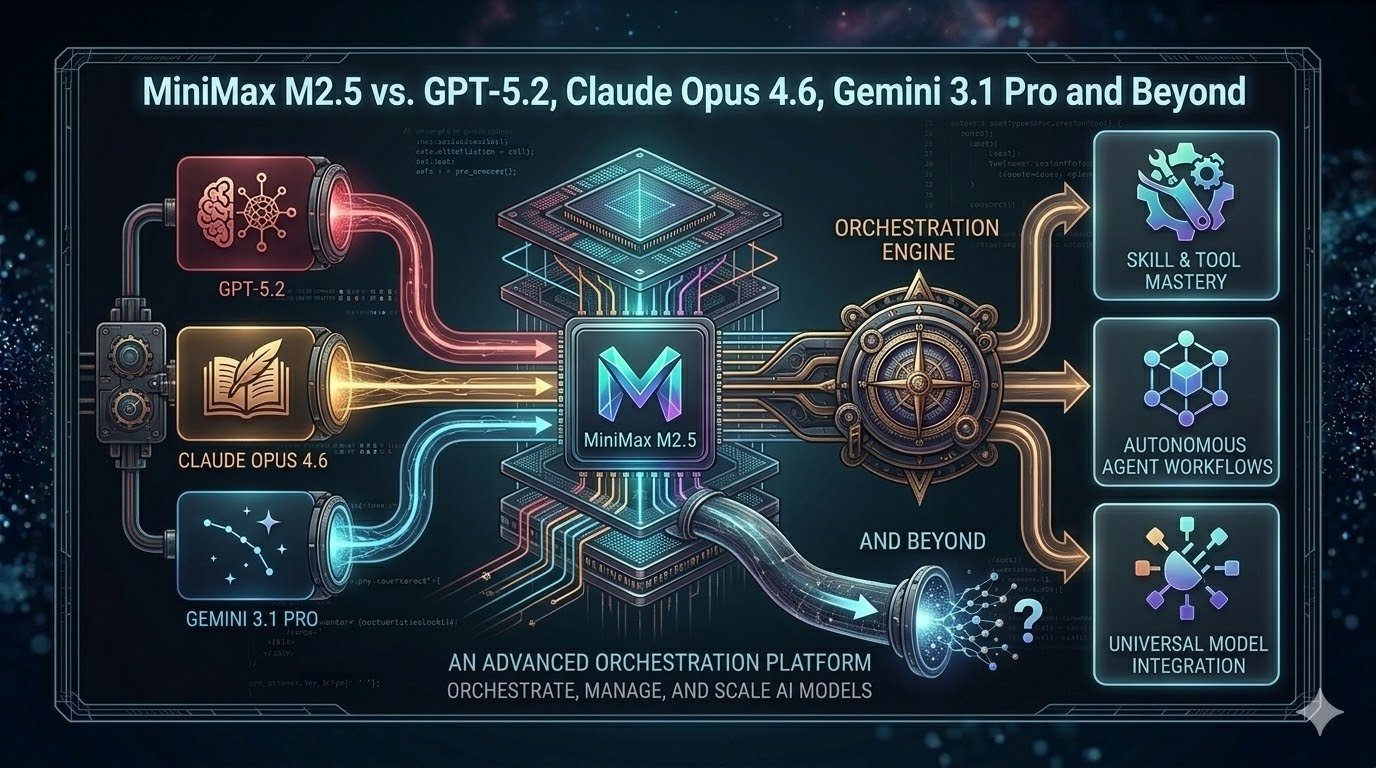

Since late 2025, the generative AI landscape has exploded with new releases. OpenAI’s GPT‑5.2, Anthropic’s Claude Opus 4.6, Google’s Gemini 3.1 Pro and MiniMax’s M2.5 signal a turning point: models are no longer one‑size‑fits‑all tools but specialized engines optimized for distinct tasks. The stakes are high: teams must decide which model will handle their coding projects, research papers, spreadsheets or multimodal analyses. At the same time, costs are rising and models diverge on licensing, context lengths, safety profiles and operational complexity. This article provides a detailed, up‑to‑date exploration of the leading models as of March 2026. We compare benchmarks, dive into architecture and capabilities, unpack pricing and licensing, propose selection frameworks and show how Clarifai orchestrates deployment across hybrid environments. Whether you’re a developer seeking the most efficient coding assistant, an analyst looking for reliable reasoning, or a CIO looking to integrate multiple models without breaking budgets, this guide will help you navigate the rapidly evolving AI ecosystem.

Why this matters now

Enterprise adoption of LLMs has been accelerating. According to OpenAI, early testers of GPT‑5.2 claim the model can complete knowledge‑work tasks at 11x the speed and less than 1% of the cost of human experts, hinting at major productivity gains. At the same time, open‑source models like MiniMax M2.5 are achieving state‑of‑the‑art performance in real coding tasks for a fraction of the price. The difference between choosing an unsuitable model and the right one can mean hours of wasted prompting or significant cost overruns. This guide combines EEAT‑optimized research (explicit citations to credible sources), operational depth (how to actually implement and deploy models) and decision frameworks so you can make informed choices.

Quick digest

- Recent releases: MiniMax M2.5 (Feb 2026), Claude Opus 4.6 (Feb 2026), Gemini 3.1 Pro (Feb 2026) and GPT‑5.2 (Dec 2025). Each improves dramatically on its predecessor, extending context windows, speed and agentic capabilities.

- Cost divergence: Pricing ranges from ~$0.30 per million input tokens for MiniMax M2.5‑Lightning to $25 per million output tokens for Claude. Hidden fees such as GPT‑5.2’s “reasoning tokens” can inflate API bills.

- No universal winner: Benchmarks show that Claude leads coding, GPT‑5.2 dominates math and reasoning, Gemini excels in long‑context multimodal tasks, and MiniMax offers the best price‑performance ratio.

- Integration matters: Clarifai’s orchestration platform allows you to run multiple models, both proprietary and open, through a single API and even host them locally via Local Runners.

- Future outlook: Emerging open models like DeepSeek R1 and Qwen 3‑Coder narrow the gap with proprietary systems, while upcoming releases (MiniMax M3, GPT‑6) will further raise the bar. A multi‑model strategy is essential.

1 The New AI Landscape and Model Evolution

Today’s AI landscape is split between proprietary giants (OpenAI, Anthropic and Google) and a rapidly maturing open‑model movement anchored by MiniMax, DeepSeek, Qwen and others. The competition has created a virtuous cycle of innovation: each release pushes the next to become faster, cheaper or smarter. To understand how we arrived here, we need to examine the evolutionary arcs of the key models.

1.1 MiniMax: From M2 to M2.5

M2 (Oct 2025). MiniMax launched M2 as the world’s most capable open‑weight model, topping intelligence and agentic benchmarks among open models. Its mixture‑of‑experts (MoE) architecture uses 230 billion parameters but activates only 10 billion per inference. This reduces compute requirements and allows the model to run on modest GPU clusters or Clarifai’s Local Runners, making it accessible to small teams.

M2.1 (Dec 2025). The M2.1 update focused on production‑grade programming. MiniMax added comprehensive support for languages such as Rust, Java, Golang, C++, Kotlin, TypeScript and JavaScript. It improved Android/iOS development and design comprehension, and introduced an Interleaved Thinking mechanism to break complex instructions into smaller, coherent steps. External evaluators praised its ability to handle multi‑step coding tasks with fewer errors.

M2.5 (Feb 2026). MiniMax’s latest release, M2.5, is a leap forward. The model was trained using reinforcement learning on hundreds of thousands of real‑world environments and tasks. It scored 80.2% on SWE‑Bench Verified, 51.3% on Multi‑SWE‑Bench, 76.3% on BrowseComp and 76.8% on BFCL (tool calling), closing the gap with Claude Opus 4.6. MiniMax describes M2.5 as acquiring an “Architect Mindset”: it plans out solutions and user interfaces before writing code and executes entire development cycles, from initial design to final code review. The model also excels at search tasks: on the RISE evaluation it completes information‑seeking tasks using 20% fewer search rounds than M2.1. In corporate settings it performs administrative work (Word, Excel, PowerPoint) and beats other models in internal evaluations, winning 59% of head‑to‑head comparisons on the GDPval‑MM benchmark. Efficiency improvements mean M2.5 runs at up to 100 tokens/s and completes SWE‑Bench tasks in 22.8 minutes, a 37% speedup compared to M2.1. Two versions exist: M2.5 (50 tokens/s, cheaper) and M2.5‑Lightning (100 tokens/s, higher throughput).

Pricing & Licensing. M2.5 is open‑source under a modified MIT licence requiring commercial users to display “MiniMax M2.5” in product credits. The Lightning version costs $0.30 per million input tokens and $2.4 per million output tokens, while the base version costs half that. According to VentureBeat, M2.5’s efficiencies allow it to be 95% cheaper than Claude Opus 4.6 for equivalent tasks. At MiniMax headquarters, employees already delegate 30% of tasks to M2.5, and 80% of new code is generated by the model.

1.2 Claude Opus 4.6

Anthropic’s Claude Opus 4.6 (Feb 2026) builds on the widely respected Opus 4.5. The new version enhances planning, code review and long‑horizon reasoning. It offers a beta 1 million‑token context window (1 million input tokens) for large documents or code bases and improved reliability over multi‑step tasks. Opus 4.6 excels at Terminal‑Bench 2.0, Humanity’s Last Exam, GDPval‑AA and BrowseComp, outperforming GPT‑5.2 by 144 Elo points on Anthropic’s internal GDPval‑AA benchmark. Its safety profile is better than that of earlier versions. New features include context compaction, which automatically summarizes earlier parts of long conversations, and adaptive thinking/effort controls, letting users modulate reasoning depth and speed. Opus 4.6 can assemble teams of agentic workers (e.g., one agent writes code while another tests it) and handles advanced Excel and PowerPoint tasks. Pricing remains unchanged at $5 per million input tokens and $25 per million output tokens. Testimonials from companies like Notion and GitHub highlight the model’s ability to break tasks into sub‑tasks and coordinate complex engineering projects.

1.3 Gemini 3.1 Pro

Google’s Gemini 3 Pro already held the record for the longest context window (1 million tokens) and offered strong multimodal reasoning. Gemini 3.1 Pro (Feb 2026) upgrades the architecture and introduces a thinking_level parameter with low, medium, high and max options. These levels control how deeply the model reasons before responding; medium and high deliver more considered answers at the cost of latency. On the ARC‑AGI‑2 benchmark, Gemini 3.1 Pro scores 77.1%, beating Gemini 3 Pro (31.1%), Claude Opus 4.6 (68.8%) and GPT‑5.2 (52.9%). It also achieves 94.3% on GPQA Diamond and strong results on agentic benchmarks: 33.5% on APEX‑Agents, 85.9% on BrowseComp, 69.2% on MCP Atlas and 68.5% on Terminal‑Bench 2.0. Gemini 3.1 Pro resolves output truncation issues and can generate animated SVGs or other code‑based interactive outputs. Use cases include research synthesis, codebase analysis, multimodal content analysis, creative design and enterprise data synthesis. Pricing is tiered: $2 per million input tokens and $12 per million output tokens for contexts up to 200K tokens, and $4/$18 beyond 200K. Consumer plans remain around $20/month with options for unlimited high‑context usage.
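To make the tiered pricing concrete, here is a minimal sketch that applies the two rate pairs quoted above to a single request. The function name and the token counts are hypothetical, and the sketch assumes the whole request moves to the higher tier once the prompt exceeds 200K tokens; check the provider's pricing page for the exact rule.

```python
def gemini_31_pro_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request under the two-tier pricing quoted above."""
    # Assumption: the prompt size decides the tier for both input and output.
    if input_tokens <= 200_000:
        input_rate, output_rate = 2.0, 12.0   # USD per million tokens
    else:
        input_rate, output_rate = 4.0, 18.0   # USD per million tokens
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical requests: same answer length, different prompt sizes.
short_prompt = gemini_31_pro_cost(150_000, 4_000)
long_prompt = gemini_31_pro_cost(600_000, 4_000)
print(f"150K-token prompt: ${short_prompt:.3f}")
print(f"600K-token prompt: ${long_prompt:.3f}")
```

Under these assumptions, quadrupling the prompt costs roughly seven times as much, because the longer prompt also pays the higher rate on every token.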

1.4 GPT‑5.2

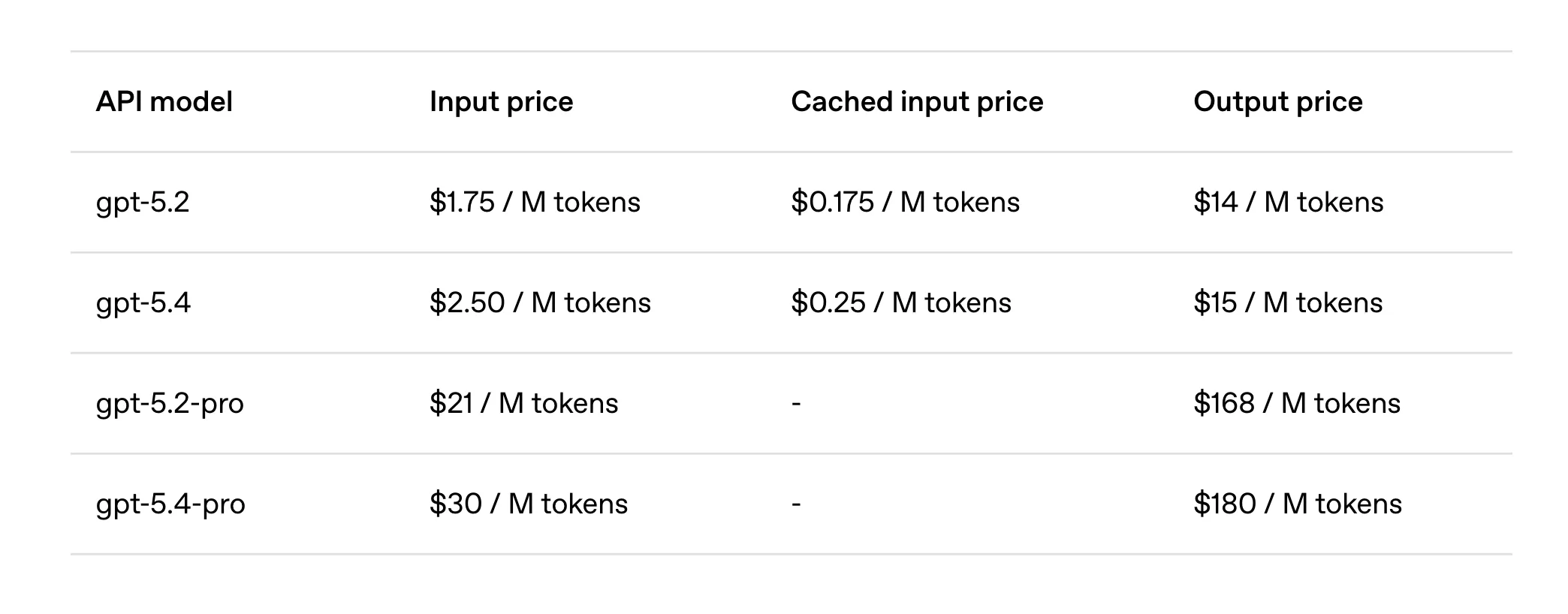

OpenAI’s GPT‑5.2 (Dec 2025) sets a new state of the art for professional reasoning, outperforming industry experts on GDPval tasks across 44 occupations. The model improves on chain‑of‑thought reasoning, agentic tool calling and long‑context understanding, achieving 80% on SWE‑bench Verified, 100% on AIME 2025, 92.4% on GPQA Diamond and 86.2% on ARC‑AGI‑1. GPT‑5.2 Thinking, Pro and Instant variants support tailored trade‑offs between latency and reasoning depth; the API exposes a reasoning parameter to adjust chain‑of‑thought length. Safety upgrades target sensitive conversations such as mental health discussions. Pricing starts at $1.75 per million input tokens and $14 per million output tokens. A 90% discount applies to cached input tokens for repeated prompts, but expensive reasoning tokens (internal chain‑of‑thought tokens) are billed at the output rate, raising total cost on complex tasks. Despite being pricey, GPT‑5.2 often finishes tasks in fewer tokens, so total cost may still be lower compared to cheaper models that require multiple retries. The model is integrated into ChatGPT, with subscription plans (Plus, Team, Pro) starting at $20/month.

1.5 Other Open Models: DeepSeek R1 and Qwen 3

Beyond MiniMax, other open models are gaining ground. DeepSeek R1, released in January 2025, matches proprietary models on long‑context reasoning across English and Chinese and is released under the MIT licence. Qwen 3‑Coder 32B, from Alibaba’s Qwen series, scores 69.6% on SWE‑Bench Verified, outperforming models like GPT‑4 Turbo and Claude 3.5 Sonnet. Qwen models are open source under Apache 2.0 and support coding, math and reasoning. These models illustrate the broader trend: open models are closing the performance gap while offering flexible deployment and lower costs.

2 Benchmark Deep Dive

Benchmarks are the yardsticks of AI performance, but they can be misleading if misinterpreted. We aggregate data across multiple evaluations to reveal each model’s strengths and weaknesses. Table 1 compares the latest scores on widely used benchmarks for M2.5, GPT‑5.2, Claude Opus 4.6 and Gemini 3.1 Pro.

2.1 Benchmark comparison table

| Benchmark | MiniMax M2.5 | GPT‑5.2 | Claude Opus 4.6 | Gemini 3.1 Pro | Notes |
| --- | --- | --- | --- | --- | --- |
| SWE‑Bench Verified | 80.2% | 80% | 81% (Opus 4.5) | 76.2% | Bug‑fixing in real repositories. |
| Multi‑SWE‑Bench | 51.3% | n/a | n/a | n/a | Multi‑file bug fixing. |
| BrowseComp | 76.3% | n/a | top (4.6) | 85.9% | Browser‑based search tasks. |
| BFCL (tool calling) | 76.8% | n/a | n/a | 69.2% (MCP Atlas) | Agentic tasks requiring function calls. |
| AIME 2025 (Math) | ≈78% | 100% | ~94% | 95% | Contest‑level mathematics. |
| ARC‑AGI‑2 (Abstract reasoning) | ~40% | 52.9% | 68.8% (Opus 4.6) | 77.1% | Hard reasoning tasks; higher is better. |
| Terminal‑Bench 2.0 | 59% | 47.6% | 59.3% | 68.5% | Command‑line tasks. |
| GPQA Diamond (Science) | n/a | 92.4% | 91.3% | 94.3% | Graduate‑level science questions. |
| ARC‑AGI‑1 (General reasoning) | n/a | 86.2% | n/a | n/a | General reasoning tasks; GPT‑5.2 leads. |
| RISE (Search evaluation) | 20% fewer rounds than M2.1 | n/a | n/a | n/a | Interactive search tasks. |
| Context window | 196K | 400K | 1M (beta) | 1M | Input tokens; higher means longer prompts. |

2.2 Interpreting the numbers

Benchmarks measure different facets of intelligence. SWE‑Bench indicates software engineering prowess; AIME and GPQA measure math and science; ARC‑AGI tests abstract reasoning; BrowseComp and BFCL evaluate agentic tool use. The table shows no single model dominates across all metrics. Claude Opus 4.6 is at or near the top on terminal and reasoning tasks in many datasets, but M2.5 and Gemini 3.1 Pro close the gap. GPT‑5.2’s perfect AIME and high ARC‑AGI‑1 scores demonstrate unparalleled math and general reasoning, while Gemini’s 77.1% on ARC‑AGI‑2 shows strong fluid reasoning. MiniMax lags in math but shines in tool calling and search efficiency. When selecting a model, align the benchmark to your task: coding requires high SWE‑Bench performance; research requires high ARC‑AGI and GPQA; agentic automation needs strong BrowseComp and BFCL scores.

Benchmark Triad Matrix (Framework)

To systematically select a model based on benchmarks, use the Benchmark Triad Matrix:

- Task Alignment: Identify the benchmarks that reflect your primary workload (e.g., SWE‑Bench for code, GPQA for science).

- Resource Budget: Evaluate the context length and compute required; longer contexts are useful for large documents but increase cost and latency.

- Risk Tolerance: Consider safety benchmarks like prompt‑injection success rates (Claude has the lowest at 4.7%) and the reliability of chain‑of‑thought reasoning.

Place models on these axes to see which offers the best trade‑offs for your use case.
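The task‑alignment step can be sketched in a few lines: pick the benchmarks that mirror your workload and look up who leads each one. The snippet below is only an illustration; the scores are copied from the benchmark table in 2.1 (approximate values rounded as printed there), while the data layout and the `leader` helper are assumptions of this sketch.

```python
from typing import Optional

MODELS = ["MiniMax M2.5", "GPT-5.2", "Claude Opus 4.6", "Gemini 3.1 Pro"]

# Scores in percent, in MODELS order. None marks a cell the table leaves empty.
# The Claude SWE-Bench figure is the one the table attributes to Opus 4.5.
SCORES: dict[str, list[Optional[float]]] = {
    "SWE-Bench Verified": [80.2, 80.0, 81.0, 76.2],
    "AIME 2025": [78.0, 100.0, 94.0, 95.0],
    "ARC-AGI-2": [40.0, 52.9, 68.8, 77.1],
    "Terminal-Bench 2.0": [59.0, 47.6, 59.3, 68.5],
    "GPQA Diamond": [None, 92.4, 91.3, 94.3],
}


def leader(benchmark: str) -> str:
    """Return the model with the highest reported score on one benchmark."""
    reported = [
        (score, model)
        for score, model in zip(SCORES[benchmark], MODELS)
        if score is not None
    ]
    return max(reported)[1]


# Hypothetical workload: mostly bug fixing plus some science Q&A.
for name in ["SWE-Bench Verified", "GPQA Diamond"]:
    print(f"{name}: {leader(name)}")
```

A leader lookup covers only the first axis; budget and risk tolerance still have to be weighed separately, since a one‑point benchmark lead rarely justifies a large price gap on its own.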

2.3 Quick summary

Question: Which model is best for coding?

Summary: Claude Opus 4.6 slightly edges out M2.5 on SWE‑Bench and terminal tasks, but M2.5’s cost advantage makes it attractive for high‑volume coding. If you need the best code review and debugging, choose Opus; if budget matters, choose M2.5.

Question: Which model leads in math and reasoning?

Summary: GPT‑5.2 remains unmatched in AIME and ARC‑AGI‑1. For fluid reasoning on complex tasks, Gemini 3.1 Pro leads ARC‑AGI‑2.

Question: How important are benchmarks?

Summary: Benchmarks offer guidance but don’t fully capture real‑world performance. Evaluate models against your specific workload and risk profile.

3 Capabilities and Operational Considerations

Beyond benchmark scores, practical deployment requires understanding features like context windows, multimodal support, tool calling, reasoning modes and runtime speed. Each model offers unique capabilities and constraints.

3.1 Context and multimodality

Context windows. M2.5 retains the 196K token context of its predecessor. GPT‑5.2 offers a 400K context, suitable for long code repositories or research documents. Claude Opus 4.6 enters beta with a 1 million input token context, though output limits remain around 100K tokens. Gemini 3.1 Pro offers a full 1 million context for both input and output. Long contexts reduce the need for retrieval or chunking but increase token usage and latency.

Multimodal support. GPT‑5.2 supports text and images and includes a reasoning mode that toggles deeper chain‑of‑thought at higher latency. Gemini 3.1 Pro features strong multimodal capabilities: video understanding, image reasoning and code‑generated animated outputs. Claude Opus 4.6 and MiniMax M2.5 remain text‑only, though they excel in tool‑calling and programming tasks. The absence of multimodality in MiniMax is a key limitation if your workflow involves PDFs, diagrams or videos.

3.2 Reasoning modes and effort controls

MiniMax M2.5 implements Interleaved Thinking, enabling the model to break complex instructions into sub‑tasks and deliver more concise answers. RL training across varied environments fosters strategic planning, giving M2.5 an Architect Mindset that plans before coding.

Claude Opus 4.6 introduces Adaptive Thinking and effort controls, letting users dial reasoning depth up or down. Lower effort yields faster responses with fewer tokens, while higher effort performs deeper chain‑of‑thought reasoning but consumes more tokens.

Gemini 3.1 Pro’s thinking_level parameter (low, medium, high, max) accomplishes a similar goal: balancing speed against reasoning accuracy. The new medium level offers a sweet spot for everyday tasks. Gemini can generate full outputs such as code‑based interactive charts (SVGs), expanding its use for data visualization and web design.

GPT‑5.2 exposes a reasoning parameter via API, allowing developers to adjust chain‑of‑thought length for different tasks. Longer reasoning may be billed as internal “reasoning tokens” that cost the same as output tokens, increasing total cost but delivering better results for complex problems.

3.3 Tool calling and agentic tasks

Models increasingly act as autonomous agents by calling external functions, invoking other models or orchestrating tasks.

- MiniMax M2.5: The model ranks highly on tool‑calling benchmarks (BFCL) and demonstrates improved search efficiency (fewer search rounds). M2.5’s ability to plan and call code‑editing or testing tools makes it well suited to constructing pipelines of actions.

- Claude Opus 4.6: Opus can assemble agent teams, where one agent writes code, another tests it and a third generates documentation. The model’s safety controls reduce the risk of misbehaving agents.

- Gemini 3.1 Pro: With high scores on agentic benchmarks like APEX‑Agents (33.5%) and MCP Atlas (69.2%), Gemini orchestrates multiple actions across search, retrieval and reasoning. Its integration with Google Workspace and Vertex AI simplifies tool access.

- GPT‑5.2: Early testers report that GPT‑5.2 collapsed their multi‑agent systems into a single “mega‑agent” capable of calling 20+ tools seamlessly, reducing prompt engineering complexity.

3.4 Speed, latency and throughput

Execution speed influences user experience and cost. M2.5 runs at 50 tokens/s for the base model and 100 tokens/s for the Lightning version. Opus 4.6’s new compaction reduces the amount of context needed to maintain conversation state, cutting latency. Gemini 3.1 Pro’s large context can slow responses, but the low thinking level is fast for quick interactions. GPT‑5.2 offers Instant, Thinking and Pro variants to balance speed against reasoning depth; the Instant version resembles GPT‑5.1 performance, while the Pro variant is slower and more thorough. In general, deeper reasoning and longer contexts increase latency; choose the model variant that matches your tolerance for waiting.

3.5 Capability Scorecard (Framework)

To evaluate capabilities holistically, we propose a Capability Scorecard rating models on four axes: Context length (C), Modality support (M), Tool‑calling ability (T) and Safety (S). Assign each axis a score from 1 to 5 (higher is better) based on your priorities. For example, if you need long context and multimodal support, Gemini 3.1 Pro might score C=5, M=5, T=4, S=3; GPT‑5.2 might be C=4, M=4, T=4, S=4; Opus 4.6 could be C=5, M=1, T=4, S=5; M2.5 might be C=2, M=1, T=5, S=4. Multiply the scores by weightings reflecting your project’s needs and choose the model with the highest weighted sum. This structured approach ensures you consider all critical dimensions rather than focusing on a single headline metric.
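The weighted sum is easy to compute by hand, but a small script makes it simple to try different priorities. The sketch below uses the example axis scores from the paragraph above; the two weight sets are hypothetical and only illustrate how the ranking shifts with priorities.

```python
# Axis scores (1 to 5) from the example above:
# C = context length, M = modality support, T = tool calling, S = safety.
SCORECARD = {
    "Gemini 3.1 Pro": {"C": 5, "M": 5, "T": 4, "S": 3},
    "GPT-5.2": {"C": 4, "M": 4, "T": 4, "S": 4},
    "Claude Opus 4.6": {"C": 5, "M": 1, "T": 4, "S": 5},
    "MiniMax M2.5": {"C": 2, "M": 1, "T": 5, "S": 4},
}


def weighted_totals(weights: dict[str, float]) -> dict[str, float]:
    """Multiply each axis score by its weight and sum per model."""
    return {
        model: sum(weights[axis] * score for axis, score in axes.items())
        for model, axes in SCORECARD.items()
    }


# Hypothetical weights (each set sums to 1).
coding_weights = {"C": 0.2, "M": 0.1, "T": 0.4, "S": 0.3}
multimodal_weights = {"C": 0.3, "M": 0.4, "T": 0.2, "S": 0.1}

for label, weights in [("coding", coding_weights), ("multimodal", multimodal_weights)]:
    totals = weighted_totals(weights)
    best = max(totals, key=totals.get)
    print(f"{label}: best = {best} ({totals[best]:.2f})")
```

With the coding‑oriented weights the scorecard favours Claude Opus 4.6; shifting weight to modality moves the top spot to Gemini 3.1 Pro. The scores themselves are judgment calls, so treat the output as a conversation starter rather than a verdict.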

3.6 Quick summary

- Context matters: Use long contexts (Gemini or Claude) for entire codebases or legal documents; short contexts (MiniMax) for chatty tasks or when cost is crucial.

- Multimodality vs. efficiency: GPT‑5.2 and Gemini support images or video, but if you’re only writing code, a text‑only model with stronger tool calling may be cheaper and faster.

- Reasoning controls: Adjust thinking levels or effort controls to tune cost vs. quality. Recognize that reasoning tokens in GPT‑5.2 incur extra cost.

- Agentic power: MiniMax and Gemini excel at planning and search, while Claude assembles agent teams with strong safety; GPT‑5.2 can function as a mega‑agent.

- Speed trade‑offs: Lightning versions cost more but save time; pick the variant that matches your latency requirements.

4 Costs, Licensing and Economics

Budget constraints, licensing restrictions and hidden costs can make or break AI adoption. Below we summarize pricing and licensing details for the leading models and explore strategies to optimize your spend.

4.1 Pricing comparison

| Model | Input cost (per M tokens) | Output cost (per M tokens) | Notes |
| --- | --- | --- | --- |
| MiniMax M2.5 | $0.15 (standard) / $0.30 (Lightning) | $1.2 / $2.4 | Modified MIT licence; requires crediting “MiniMax M2.5”. |
| GPT‑5.2 | $1.75 | $14 | 90% discount for cached inputs; reasoning tokens billed at output rate. |
| Claude Opus 4.6 | $5 | $25 | Same price as Opus 4.5; 1M context in beta. |
| Gemini 3.1 Pro | $2 (≤200K context) / $4 (>200K) | $12 / $18 | Consumer subscription around $20/month. |
| MiniMax M2.1 | $0.27 | $0.95 | 36% cheaper than GPT‑5 Mini overall. |

Hidden costs. GPT‑5.2’s reasoning tokens can dramatically increase expenses for complex problems. Developers can reduce costs by caching repeated prompts (90% input discount). Subscription stacking is another challenge: a power user might pay for ChatGPT, Claude, Gemini and Perplexity to get the best of each, resulting in $80/month or more. Aggregators like GlobalGPT or platforms like Clarifai can reduce this friction by offering multiple models through a single subscription.
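A rough calculation shows how much reasoning tokens and caching move a GPT‑5.2 bill. The sketch below uses the rates quoted in this article ($1.75 input, $14 output, 90% off cached input, reasoning tokens billed at the output rate); the function name and all token counts are hypothetical.

```python
INPUT_RATE = 1.75      # USD per million input tokens
OUTPUT_RATE = 14.0     # USD per million output tokens
CACHE_DISCOUNT = 0.90  # cached input tokens cost 10% of the input rate


def gpt52_request_cost(
    fresh_input: int, cached_input: int, visible_output: int, reasoning: int
) -> float:
    """Estimate the USD cost of one request, counting reasoning tokens as output."""
    input_cost = fresh_input * INPUT_RATE
    input_cost += cached_input * INPUT_RATE * (1 - CACHE_DISCOUNT)
    output_cost = (visible_output + reasoning) * OUTPUT_RATE
    return (input_cost + output_cost) / 1_000_000


# Hypothetical request: 20K-token prompt, 1K-token visible answer.
no_reasoning = gpt52_request_cost(20_000, 0, 1_000, 0)
with_reasoning = gpt52_request_cost(20_000, 0, 1_000, 8_000)
with_cache = gpt52_request_cost(2_000, 18_000, 1_000, 8_000)

print(f"no reasoning tokens:   ${no_reasoning:.3f}")
print(f"8K reasoning tokens:   ${with_reasoning:.3f}")
print(f"plus 18K cached input: ${with_cache:.3f}")
```

In this example the hidden reasoning tokens more than triple the bill, while caching most of the prompt recovers only a small part of that, because output‑rate tokens dominate the total.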

4.2 Licensing and deployment flexibility

- MiniMax and other open models: Released under MIT‑style licences (MiniMax, DeepSeek R1) or Apache 2.0 (Qwen). You can download weights, fine‑tune, self‑host and integrate into proprietary products. M2.5 requires including a visible attribution in commercial products.

- Proprietary models: GPT, Claude and Gemini restrict access to API endpoints; weights are not available. They may prohibit high‑risk use cases and require compliance with usage policies. Data sent in API calls may be used to improve the model unless you opt out, so check each provider’s terms. Deploying these models on‑prem is not possible, but you can run them through Clarifai’s orchestration platform or use aggregator services.

4.3 Cost‑Fit Matrix (Framework)

To optimize spend, apply the Cost‑Fit Matrix:

- Budget vs. Accuracy: If cost is the primary constraint, open models like MiniMax or DeepSeek deliver impressive results at low prices. When accuracy or safety is mission‑critical, paying for GPT‑5.2 or Claude may save money in the long run by reducing retries.

- Licensing Flexibility: Enterprises needing on‑prem deployment or model customization should prioritize open models. Proprietary models are plug‑and‑play but limit control.

- Hidden Costs: Examine reasoning token fees, context length charges and subscription stacking. Use cached inputs and aggregator platforms to cut costs.

- Total Cost of Completion: Consider the cost of achieving a desired accuracy or outcome, not just per‑token prices. GPT‑5.2 may be cheaper overall despite higher token prices because of its efficiency.
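Total cost of completion can be approximated with one line of arithmetic: if each attempt succeeds independently with probability p, the expected number of attempts is 1/p, so the expected spend is the cost per attempt divided by p. The sketch below applies that simplifying assumption; the per‑attempt costs and success rates are hypothetical placeholders, not measured figures for any model.

```python
def expected_cost_to_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend until the first success, assuming independent retries.

    The number of attempts then follows a geometric distribution,
    whose mean is 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Hypothetical inputs: a pricier model that usually succeeds first time
# versus a cheaper model that needs many retries on a hard task.
premium = expected_cost_to_success(cost_per_attempt=0.16, success_rate=0.90)
budget = expected_cost_to_success(cost_per_attempt=0.03, success_rate=0.15)

print(f"premium model: ${premium:.3f} per solved task")
print(f"budget model:  ${budget:.3f} per solved task")
```

With these made‑up numbers the premium model ends up slightly cheaper per solved task. The comparison flips quickly as the budget model's success rate rises, which is why measuring success rates on your own workload matters more than the price sheet.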

4.4 Quick summary

- M2.5 is the budget king: At $0.15–0.30 per million input tokens, M2.5 offers the best price‑performance ratio, but don’t overlook the required attribution and the smaller context window.

- GPT‑5.2 is expensive but efficient: The API’s reasoning tokens can surprise you, but the model solves complex tasks faster and may save money overall.

- Claude costs the most: At $5/$25 per million tokens, it’s the most expensive but boasts top coding performance and safety.

- Gemini offers tiered pricing: Choose the appropriate tier based on your context requirements; for tasks under 200K tokens, costs are moderate.

- Subscription stacking is a trap: Avoid paying multiple $20 subscriptions by using platforms that route tasks across models, like Clarifai or GlobalGPT.

5 The AI Model Selection Compass

Selecting the optimal model for a given task involves more than reading benchmarks or price charts. We propose a structured decision framework, the AI Model Selection Compass, to guide your choice.

5.1 Identify your persona and tasks

Different roles have different needs:

- Software engineers and DevOps: Need accurate code generation, debugging assistance and agentic tool calling. Suitable models: Claude Opus 4.6, MiniMax M2.5 or Qwen 3‑Coder.

- Researchers and data scientists: Require high math accuracy and reasoning for complex analyses. Suitable models: GPT‑5.2 for math and Gemini 3.1 Pro for long‑context multimodal analysis.

- Business analysts and legal professionals: Often process large documents, spreadsheets and presentations. Suitable models: Claude Opus 4.6 (Excel/PowerPoint prowess) and Gemini 3.1 Pro (1M context).

- Content creators and marketers: Need creativity, consistency and sometimes images or video. Suitable models: Gemini 3.1 Pro for multimodal content and interactive outputs; GPT‑5.2 for structured writing and translation.

- Budget‑constrained startups: Need low costs and flexible deployment. Suitable models: MiniMax M2.5, DeepSeek R1 and the Qwen family.

5.2 Define constraints and preferences

Ask yourself: Do you require long context? Is image/video input important? How critical is safety? Do you need on‑prem deployment? What’s your tolerance for latency? Summarize your answers and score models using the Capability Scorecard. Identify any hard constraints: for example, regulatory requirements may force you to keep data on‑prem, eliminating proprietary models. Set a budget cap to avoid runaway costs.

5.3 Decision tree

We present a simple decision tree using conditional logic:

- Context requirement: If you need to input documents >200K tokens → choose Gemini 3.1 Pro or Claude Opus 4.6. If not, continue.

- Modality requirement: If you need images or video → choose Gemini 3.1 Pro or GPT‑5.2. If not, continue.

- Coding tasks: If your primary workload is coding and you can pay premium prices → choose Claude Opus 4.6. If you need cost efficiency → choose MiniMax M2.5 or Qwen 3‑Coder.

- Math/science tasks: Choose GPT‑5.2 (best math, strong GPQA); if context is extremely long or tasks require dynamic reasoning across texts and charts → choose Gemini 3.1 Pro.

- Data privacy: If data must stay on‑prem → use an open model (MiniMax, DeepSeek or Qwen) with Clarifai Local Runners.

- Budget sensitivity: If budgets are tight → lean toward MiniMax or use aggregator platforms to avoid subscription stacking.
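The tree above translates almost directly into code. The following is a minimal sketch, not a definitive router: the `Requirements` fields and the `choose_models` function are hypothetical names, and the sketch makes one deliberate change to the listed order by checking the on‑prem requirement first, on the assumption that it is a hard constraint that rules out API‑only models.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    max_input_tokens: int = 0
    needs_images_or_video: bool = False
    primary_task: str = "general"  # "coding", "math_science" or "general"
    premium_budget: bool = False
    must_stay_on_prem: bool = False


def choose_models(req: Requirements) -> list[str]:
    """Return candidate models by walking the decision tree top to bottom."""
    # Assumption of this sketch: on-prem is a hard constraint, so check it first.
    if req.must_stay_on_prem:
        return ["MiniMax M2.5", "DeepSeek R1", "Qwen 3-Coder"]
    if req.max_input_tokens > 200_000:
        return ["Gemini 3.1 Pro", "Claude Opus 4.6"]
    if req.needs_images_or_video:
        return ["Gemini 3.1 Pro", "GPT-5.2"]
    if req.primary_task == "coding":
        if req.premium_budget:
            return ["Claude Opus 4.6"]
        return ["MiniMax M2.5", "Qwen 3-Coder"]
    if req.primary_task == "math_science":
        return ["GPT-5.2"]
    # Budget-sensitive default for cases the tree does not cover explicitly.
    return ["MiniMax M2.5"]


# Hypothetical example: a cost-conscious coding team with private code.
print(choose_models(Requirements(primary_task="coding", must_stay_on_prem=True)))
print(choose_models(Requirements(primary_task="coding", premium_budget=True)))
```

Returning a short list rather than a single name leaves room for the Capability Scorecard to break ties among the remaining candidates.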

5.4 Model Selection Compass in practice

Imagine a mid‑sized software company: they need to generate new features, review code, process bug reports and compile design documents. They have a moderate budget, require data privacy and want to reduce human hours. Using the Selection Compass, they conclude:

- Goal: Code generation and review → emphasize SWE‑Bench and BFCL scores.

- Constraints: Data privacy is critical → on‑prem hosting via open models and Local Runners. Context length need is moderate.

- Budget: Limited; can’t sustain $25/M output token fees.

- Data sensitivity: Private code must stay on‑prem.

Mapping to models: MiniMax M2.5 emerges as the best fit thanks to strong coding benchmarks, low cost and open licensing. The company can self‑host M2.5 or run it via Clarifai’s Local Runners to maintain data privacy. For occasional high‑complexity bugs requiring deep reasoning, they could call GPT‑5.2 through Clarifai’s orchestrated API to complement M2.5, provided the material involved is allowed to leave the premises. This multi‑model approach maximizes value while controlling cost.

5.5 Quick summary

- Use the Selection Compass: Identify tasks, score constraints, choose models accordingly.

- No single model fits all: Multi‑model strategies with orchestration deliver the best results.

- Clarifai as a mediator: Clarifai’s platform routes requests to the right model and simplifies deployment, preventing subscription clutter and ensuring cost control.

6 Integration & Deployment with Clarifai

Deployment is often harder than model selection. Managing GPUs, scaling infrastructure, protecting data and integrating multiple models can drain engineering resources. Clarifai offers a unifying platform that orchestrates compute and models while preserving flexibility and privacy.

6.1 Clarifai’s compute orchestration

Clarifai’s orchestration platform abstracts away underlying hardware (GPUs, CPUs) and automatically selects resources based on latency and cost. You can mix pre‑trained models from Clarifai’s marketplace with your own fine‑tuned or open models. A low‑code pipeline builder lets you chain steps (ingest, process, infer, post‑process) without writing infrastructure code. Security features include role‑based access control (RBAC), audit logging and compliance certifications. This means you can run GPT‑5.2 for reasoning tasks, M2.5 for coding and DeepSeek for translations, all through one API.

6.2 Local Runners and hybrid deployments

When data can’t leave your environment, Clarifai’s Local Runners allow you to host models on local machines while maintaining a secure cloud connection. The Local Runner opens a tunnel to Clarifai, meaning API calls route through your machine’s GPU; data stays on‑prem, while Clarifai handles authentication, model scheduling and billing. To set up:

- Install the Clarifai CLI and create an API token.

- Create a context specifying your model (e.g., MiniMax M2.5) and desired hardware.

- Start the Local Runner using the CLI; it will register with Clarifai’s cloud.

- Send API calls to the Clarifai endpoint; the runner executes the model locally.

- Monitor usage via Clarifai’s dashboard. A $1/month developer plan allows up to 5 local runners. SiliconANGLE notes that Clarifai’s approach is unique: no other platform so seamlessly bridges local models and cloud APIs.

6.3 Hybrid AI Deployment Checklist (Framework)

Use this checklist when deploying models across cloud and on‑prem:

- Security & Compliance: Ensure data policies (GDPR, HIPAA) are met. Use RBAC and audit logs. Decide whether to opt out of data sharing.

- Latency Requirements: Determine acceptable response times. Use local runners for low‑latency tasks; use remote compute for heavy tasks where latency is tolerable.

- Hardware & Costs: Estimate GPU needs. Clarifai’s orchestration can assign tasks to cost‑effective hardware; local runners use your own GPUs.

- Model Availability: Check which models are available on Clarifai. Open models are easily deployed; proprietary models may have licensing restrictions or be unavailable.

- Pipeline Design: Outline your workflow. Identify which model handles each step. Clarifai’s low‑code builder or YAML configuration can orchestrate multi‑step tasks.

- Fallback Strategies: Plan for failure. Use fallback models or repeated prompts. Monitor for hallucinations, truncated responses or high costs.
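The security and latency items in the checklist above can be reduced to a small routing rule. The sketch below is illustrative only: the `Task` fields, the 500 ms threshold and the target labels `local_runner` and `cloud` are assumptions made for this example, not Clarifai settings or API names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    contains_sensitive_data: bool  # e.g. PII or data covered by GDPR/HIPAA
    max_latency_ms: int            # acceptable response time for this task


def choose_target(task: Task, low_latency_threshold_ms: int = 500) -> str:
    """Return 'local_runner' or 'cloud' for a task (hypothetical labels)."""
    # Data that must not leave the environment always stays on-prem.
    if task.contains_sensitive_data:
        return "local_runner"
    # Tight latency budgets favour local execution; heavy, latency-tolerant
    # work can go to remote compute.
    if task.max_latency_ms <= low_latency_threshold_ms:
        return "local_runner"
    return "cloud"


# Made-up example tasks.
tasks = [
    Task("patient-notes-summary", True, 5000),
    Task("autocomplete", False, 200),
    Task("batch-report", False, 60000),
]
routing = {t.name: choose_target(t) for t in tasks}
print(routing)
```

Checking sensitivity before latency is a deliberate choice here: a compliance constraint should override a performance preference, not the other way round.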

6.4 Case illustration: Multi‑model research assistant

Suppose you’re building an AI research assistant that reads long scientific papers, extracts equations, writes summary notes and generates slides. A hybrid architecture might look like this:

- Input ingestion: A user uploads a 300‑page PDF.

- Summarization: Gemini 3.1 Pro is invoked via Clarifai to process the entire document (1M context) and extract a structured outline.

- Equation reasoning: GPT‑5.2 (Thinking) is called to derive mathematical insights or solve example problems, using the extracted equations as prompts.

- Code examples: MiniMax M2.5 generates code snippets or simulations based on the paper’s algorithms, running locally via a Clarifai Local Runner.

- Presentation generation: Claude Opus 4.6 constructs slides with charts and summarises key findings, leveraging its improved PowerPoint capabilities.

- Review: A human verifies outputs. If corrections are needed, the chain is repeated with adjustments.

Such a pipeline harnesses the strengths of each model while respecting privacy and cost constraints. Clarifai orchestrates the sequence, switching models seamlessly and monitoring usage.
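As a rough sketch of the chaining logic in this case study, the pipeline can be modelled as an ordered list of steps that share a state dictionary. Everything here is a stand-in: the lambdas only format strings, and in a real system each would be replaced by a call to the named model through your orchestration layer. The step names and the `run_pipeline` helper are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Each step is (step name, model label, function). The functions are
# placeholders that format strings instead of calling a real model.
Step = Tuple[str, str, Callable[[Dict[str, str]], str]]


def run_pipeline(steps: List[Step], document: str) -> Dict[str, str]:
    """Run steps in order; each step can read the outputs of earlier steps."""
    state: Dict[str, str] = {"document": document}
    for step_name, model_label, fn in steps:
        state[step_name] = fn(state)
        print(f"{step_name}: handled by {model_label}")
    return state


steps: List[Step] = [
    ("outline", "Gemini 3.1 Pro", lambda s: f"outline of {s['document']}"),
    ("math", "GPT-5.2 (Thinking)", lambda s: f"insights from {s['outline']}"),
    ("code", "MiniMax M2.5 (local)", lambda s: f"code for {s['outline']}"),
    ("slides", "Claude Opus 4.6", lambda s: f"slides: {s['math']} + {s['code']}"),
]

result = run_pipeline(steps, "paper.pdf")
```

Keeping every intermediate output in `state` makes the human review step easier: a reviewer can inspect the outline, the maths and the code separately and rerun only the step that needs correcting.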

6.5 Quick summary

- Clarifai unifies the ecosystem: Run multiple models through one API with automatic hardware selection.

- Local Runners protect privacy: Keep data on‑prem while still benefiting from cloud orchestration.

- Hybrid deployment requires planning: Use our checklist to ensure security, performance and cost optimisation.

- Case example: A multi‑model research assistant demonstrates the power of orchestrated workflows.

7 Emerging Players & Future Outlook

While big names dominate headlines, the open‑model movement is flourishing. New entrants offer specialised capabilities, and 2026 promises more diversity and innovation.

7.1 Notable emerging models

- DeepSeek R1: Open‑sourced under MIT, excelling at long‑context reasoning in both English and Chinese. A promising alternative for bilingual applications and research.

- Qwen 3 family: Qwen 3‑Coder 32B scores 69.6 % on SWE‑Bench Verified and offers strong math and reasoning. As Alibaba invests heavily, expect iterative releases with improved efficiency.

- Kimi K2 and GLM‑4.5: Compact models focusing on writing style and efficiency; good for chatty tasks or mobile deployment.

- Grok 4.1 (xAI): Emphasises real‑time data and high throughput; suitable for news aggregation or trending topics.

- MiniMax M3 and GPT‑6 (speculative): Rumoured releases later in 2026 promise even deeper reasoning and larger context windows.

7.2 Horizon Watchlist (Framework)

To keep pace with the rapidly changing ecosystem, monitor models across four dimensions:

- Performance: Benchmark scores and real‑world evaluations.

- Openness: Licensing and weight availability.

- Specialisation: Niche skills (coding, math, creative writing, multilingual).

- Ecosystem: Community support, tooling, integration with platforms like Clarifai.

Use these criteria to evaluate new releases and decide when to integrate them into your workflow. For example, DeepSeek R2 might offer specialised reasoning in law or medicine; Qwen 4 might embed advanced reasoning with lower parameter counts; a new MiniMax release might add vision. Keeping a watchlist ensures you don’t miss opportunities while avoiding hype‑driven diversions.
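One way to make the four dimensions comparable is a weighted score. The sketch below assumes a 0 to 5 rating per dimension and a 40/20/20/20 weighting; both are arbitrary choices for illustration, and the two entries are placeholders with made-up ratings, not assessments of real models.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class WatchlistEntry:
    name: str
    performance: int     # 0-5: benchmark scores and real-world evaluations
    openness: int        # 0-5: licensing and weight availability
    specialisation: int  # 0-5: fit with the niche you care about
    ecosystem: int       # 0-5: community, tooling, platform integration


def watch_score(entry: WatchlistEntry,
                weights_pct: Tuple[int, int, int, int] = (40, 20, 20, 20)) -> float:
    """Weighted average of the four ratings; weights are percentages."""
    dims = (entry.performance, entry.openness,
            entry.specialisation, entry.ecosystem)
    return sum(w * d for w, d in zip(weights_pct, dims)) / 100


# Placeholder entries with invented ratings.
watchlist = [
    WatchlistEntry("model-a", performance=5, openness=1, specialisation=3, ecosystem=4),
    WatchlistEntry("model-b", performance=4, openness=5, specialisation=4, ecosystem=3),
]
ranked = sorted(watchlist, key=watch_score, reverse=True)
for entry in ranked:
    print(entry.name, watch_score(entry))
```

Adjust the weights to your priorities: a team with strict licensing requirements would raise the openness weight, for instance.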

7.3 Quick summary

- Open models are accelerating: DeepSeek and Qwen show that open source can rival proprietary systems.

- Specialisation is the next frontier: Expect domain‑specific models in law, medicine, and finance.

- Plan for change: Build workflows that can adapt to new models easily, leveraging Clarifai or similar orchestration platforms.

8 Risks, Limitations & Failure Scenarios

All models have limitations. Understanding these risks is essential to avoid misapplication, overreliance and unexpected costs.

8.1 Hallucinations and factual errors

LLMs sometimes generate plausible but incorrect information. Models may hallucinate citations, miscalculate numbers or invent functions. High‑reasoning models like GPT‑5.2 still hallucinate on complex tasks, though the rate is reduced. MiniMax and other open models may hallucinate domain‑specific jargon due to limited training data. To mitigate: use retrieval‑augmented generation (RAG), cross‑check outputs against trusted sources and employ human review for high‑stakes decisions.

8.2 Prompt injection and security

Malicious prompts can cause models to reveal sensitive information or perform unintended actions. Claude Opus has the lowest prompt‑injection success rate (4.7 %), while other models are more vulnerable. Always sanitise user inputs, employ content filters and limit tool permissions when enabling function calls. In multi‑agent systems, implement guardrails to prevent agents from executing dangerous commands.
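Limiting tool permissions can be as simple as checking every model-requested function call against an allow list before dispatching it. The following is a minimal sketch, not tied to any vendor's function-calling API: the tool names, `REGISTRY`, `ALLOWED_TOOLS` and `dispatch_tool_call` are all hypothetical, and `delete_files` is a harmless stand-in that deletes nothing.

```python
from typing import Any, Callable, Dict


def get_weather(city: str) -> str:
    return f"weather for {city}"


def delete_files(path: str) -> str:
    # Stand-in for a dangerous operation; it does not touch the filesystem.
    return f"deleted {path}"


# Everything the application could do.
REGISTRY: Dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "delete_files": delete_files,
}
# The subset a model is allowed to trigger.
ALLOWED_TOOLS = {"get_weather"}


class ToolNotAllowed(Exception):
    """Raised when a model requests a tool outside the allow list."""


def dispatch_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    # Reject anything not explicitly allowed, even if it exists in REGISTRY.
    if name not in ALLOWED_TOOLS:
        raise ToolNotAllowed(f"tool {name!r} is not on the allow list")
    return REGISTRY[name](**arguments)


print(dispatch_tool_call("get_weather", {"city": "Paris"}))
```

The check is deny-by-default: a newly registered tool stays unreachable for the model until someone adds it to the allow list on purpose.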

8.3 Context truncation and cost overruns

Large context windows allow long conversations but can lead to expensive and truncated outputs. GPT‑5.2 and Gemini provide extended contexts, but if you exceed output limits, important information may be cut off. The cost of reasoning tokens for GPT‑5.2 can balloon unexpectedly. To manage: summarise input texts, break tasks into smaller prompts and monitor token usage. Use Clarifai’s dashboards to track costs and set usage caps.
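To see why reasoning tokens matter for budgeting, it helps to work through the arithmetic. The sketch below treats hidden reasoning tokens as billed output tokens and enforces a simple spending cap. The prices (2.0 and 10.0 per million tokens) and the token counts are placeholders chosen for round numbers, not actual prices for any model, and `Usage`, `call_cost` and `BudgetTracker` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    visible_output_tokens: int
    reasoning_tokens: int  # hidden chain-of-thought, billed as output


def call_cost(usage: Usage, input_price_per_m: float,
              output_price_per_m: float) -> float:
    """Cost of one call when reasoning tokens are billed as output tokens."""
    billed_output = usage.visible_output_tokens + usage.reasoning_tokens
    return (usage.input_tokens * input_price_per_m
            + billed_output * output_price_per_m) / 1_000_000


class BudgetTracker:
    def __init__(self, cap: float) -> None:
        self.cap = cap
        self.spent = 0.0

    def record(self, cost: float) -> bool:
        """Add a call's cost; return False once the cap is exceeded."""
        self.spent += cost
        return self.spent <= self.cap


# Placeholder prices per million tokens (assumed, not real pricing).
IN_PRICE, OUT_PRICE = 2.0, 10.0

with_reasoning = call_cost(Usage(10_000, 1_000, 9_000), IN_PRICE, OUT_PRICE)
without_reasoning = call_cost(Usage(10_000, 1_000, 0), IN_PRICE, OUT_PRICE)
print(with_reasoning, without_reasoning)

tracker = BudgetTracker(cap=0.20)
first_ok = tracker.record(with_reasoning)
second_ok = tracker.record(with_reasoning)
print(first_ok, second_ok)
```

With these placeholder numbers, 9,000 reasoning tokens make the call four times as expensive as the same call without them, even though the visible answer is identical.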

8.4 Overfitting and bias

Models may exhibit hidden biases from their training data. A model’s superior performance on a benchmark may not translate across languages or domains. For instance, MiniMax is trained primarily on Chinese and English code; performance may drop on underrepresented languages. Always test models on your domain data and apply fairness auditing where necessary.

8.5 Operational challenges

Deploying open models means handling MLOps tasks such as model versioning, security patching and scaling. Proprietary models relieve this burden but create vendor lock‑in and limit customisation. Using Clarifai mitigates some overhead but requires familiarity with its API and infrastructure. Running local runners demands GPU resources and network connectivity; if your environment is unstable, calls may fail. Have fallback models ready and design workflows to recover gracefully.
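Graceful recovery usually means trying the preferred model a bounded number of times and then moving on to the next one. Here is a minimal sketch of that pattern, assuming each model is wrapped in a plain Python callable. The two model functions are stubs (one always fails, one always succeeds) and `call_with_fallback` is a hypothetical helper, not part of any SDK.

```python
from typing import Callable, List, Sequence, Tuple

ModelFn = Callable[[str], str]


def call_with_fallback(prompt: str,
                       models: Sequence[Tuple[str, ModelFn]],
                       retries_per_model: int = 2) -> Tuple[str, str]:
    """Try each model in order; return (model name, answer) on first success."""
    errors: List[str] = []
    for name, fn in models:
        for attempt in range(1, retries_per_model + 1):
            try:
                return name, fn(prompt)
            except Exception as exc:  # in real code, catch narrower errors
                errors.append(f"{name} attempt {attempt}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def flaky_local_model(prompt: str) -> str:
    # Stub simulating an unreachable local runner.
    raise ConnectionError("runner unreachable")


def cloud_model(prompt: str) -> str:
    # Stub simulating a healthy cloud-hosted model.
    return f"answer to: {prompt}"


used, answer = call_with_fallback(
    "hello", [("local", flaky_local_model), ("cloud", cloud_model)]
)
print(used, answer)
```

Collecting the error messages, not just swallowing them, keeps the failure history available for monitoring when every model in the chain is down.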

8.6 Risk Mitigation Checklist (Framework)

To reduce risk:

- Assess data sensitivity: Determine if data contains PII or proprietary information; decide whether to process locally or via cloud.

- Limit context size: Send only necessary information to models; summarise or chunk large inputs.

- Cross‑validate outputs: Use secondary models or human review to verify critical outputs.

- Set budgets and monitors: Track token usage, reasoning tokens and cost per call.

- Control tool access: Restrict model permissions; use allow lists for functions and data sources.

- Update and retrain: Keep open models updated; patch vulnerabilities; retrain on domain‑specific data if needed.

- Have fallback strategies: Maintain alternative models or older versions in case of outages or degraded performance.

8.7 Quick summary

- LLMs are fallible: Fact‑checking and human oversight are necessary.

- Safety varies: Claude has strong safety measures; other models require careful guardrails.

- Monitor tokens: Reasoning tokens and long contexts can inflate costs quickly.

- Operational complexity: Use orchestration platforms and checklists to manage deployment challenges.

9 FAQs & Closing Thoughts

9.1 Frequently asked questions

Q: What is MiniMax M2.5 and how is it different from M2.1?

A: M2.5 is a February 2026 update that improves coding accuracy (80.2% SWE‑Bench Verified), search efficiency and office capabilities. It runs 37% faster than M2.1 and introduces an “Architect Mindset” for planning tasks.

Q: How does Claude Opus 4.6 improve on 4.5?

A: Opus 4.6 adds a 1M token context window, adaptive thinking and effort controls, context compaction and agent team capabilities. It leads on several benchmarks and improves safety. Pricing remains $5/$25 per million tokens.

Q: What’s special about Gemini 3.1 Pro’s “thinking_level”?

A: Gemini 3.1 introduces low, medium, high and max reasoning levels. Medium offers balanced speed and quality; high and max deliver deeper reasoning at higher latency. This flexibility lets you tailor responses to task urgency.

Q: What are GPT‑5.2 “reasoning tokens”?

A: GPT‑5.2 charges for internal chain‑of‑thought tokens as output tokens, raising costs on complex tasks. Use caching and shorter prompts to minimise this overhead.

Q: How can I run these models locally?

A: Use open models (MiniMax, Qwen, DeepSeek) and host them via Clarifai’s Local Runners. Proprietary models can’t be self‑hosted but can be orchestrated through Clarifai’s platform.

Q: Which model should I choose for my startup?

A: It depends on your tasks, budget and data sensitivity. Use the Selection Compass: for cost‑efficient coding, choose MiniMax; for math or high‑stakes reasoning, choose GPT‑5.2; for long documents and multimodal content, choose Gemini; for safety and Excel/PowerPoint tasks, choose Claude.

9.2 Final reflections

The first quarter of 2026 marks a new era for LLMs. Models are increasingly specialised, pricing structures are complex, and operational considerations can be as important as raw intelligence. MiniMax M2.5 demonstrates that open models can compete with and sometimes surpass proprietary ones at a fraction of the cost. Claude Opus 4.6 shows that careful planning and safety improvements yield tangible gains for professional workflows. Gemini 3.1 Pro pushes context lengths and multimodal reasoning to new heights. GPT‑5.2 retains its crown in mathematical and general reasoning but demands careful cost management.

No single model dominates all tasks, and the gap between open and closed systems continues to narrow. The future is multi‑model, where orchestrators like Clarifai route tasks to the most suitable model, combine strengths and protect user data. To stay ahead, practitioners should maintain a watchlist of emerging models, employ structured decision frameworks like the Benchmark Triad Matrix and AI Model Selection Compass, and follow hybrid deployment best practices. With these tools and a willingness to experiment, you’ll harness the best that AI has to offer in 2026 and beyond.