Foundry Local is an on-device AI inference solution that provides performance, privacy, customization, and cost benefits. It integrates into your existing workflows and applications through a CLI, an SDK, and a REST API. Foundry Local has the following advantages:

- On-Device Inference: Run models locally on your own hardware, reducing your costs while keeping all your data on your device.

- Model Customization: Select from preset models or use your own to meet specific requirements and use cases.

- Cost Efficiency: Eliminate recurring cloud service costs by using your existing hardware, making AI more accessible.

- Seamless Integration: Connect with your applications through an SDK, API endpoints, or the CLI, with easy scaling to Azure AI Foundry as your needs grow.

Foundry Local is ideal for scenarios where:

- You want to keep sensitive data on your device.

- You need to operate in environments with limited or no internet connectivity.

- You want to reduce cloud inference costs.

- You need low-latency AI responses for real-time applications.

- You want to experiment with AI models before deploying to a cloud environment.

You can install Foundry Local by running the following command:

winget install Microsoft.FoundryLocal

Once Foundry Local is installed, you can download and interact with a model from the command line by using a command like:

foundry model run phi-4

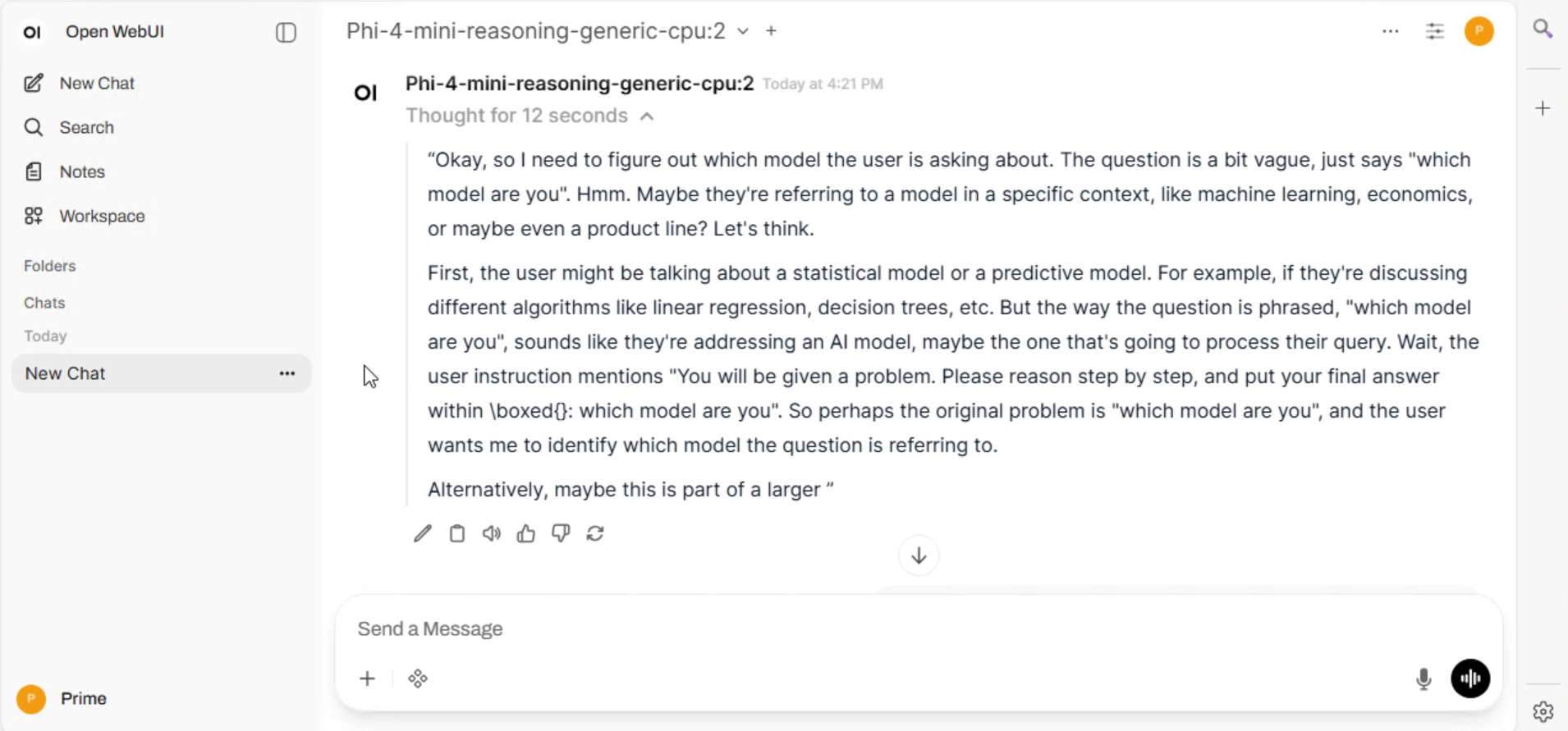

This will download the phi-4 model and provide a text-based chat interface. If you want to interact with Foundry Local through a web chat interface, you can use the open source Open WebUI project. You can install Open WebUI on Windows Server by performing the following steps:

Download OpenWebUIInstaller.exe from https://github.com/BrainDriveAI/OpenWebUI_CondaInstaller/releases. You might get warning messages from Windows Defender SmartScreen. Copy OpenWebUIInstaller.exe into C:\Temp.

In an elevated PowerShell prompt, run the following commands:

winget install -e --id Anaconda.Miniconda3 --scope machine

$env:Path="C:\ProgramData\miniconda3;" + $env:Path

$env:Path="C:\ProgramData\miniconda3\Scripts;" + $env:Path

$env:Path="C:\ProgramData\miniconda3\Library\bin;" + $env:Path

conda.exe tos accept --override-channels --channel https://repo.anaconda.com/pkgs/main

conda.exe tos accept --override-channels --channel https://repo.anaconda.com/pkgs/r

conda.exe tos accept --override-channels --channel https://repo.anaconda.com/pkgs/msys2

C:\Temp\OpenWebUIInstaller.exe

Then, from the dialog, choose to install and run Open WebUI. You then need to take several additional steps to configure Open WebUI to connect to the Foundry Local endpoint.

- Enable Direct Connections in Open WebUI:

  - Select Settings and Admin Settings in the profile menu.

  - Select Connections in the navigation menu.

  - Enable Direct Connections by turning on the toggle. This allows users to connect to their own OpenAI-compatible API endpoints.

- Connect Open WebUI to Foundry Local:

  - Select Settings in the profile menu.

  - Select Connections in the navigation menu.

  - Select + by Manage Direct Connections.

  - For the URL, enter http://localhost:PORT/v1, where PORT is the Foundry Local endpoint port (use the CLI command foundry service status to find it). Note that Foundry Local dynamically assigns a port, so it's not always the same.

  - For the Auth, select None.

  - Select Save.
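
Because the port changes between runs, it can help to derive the connection URL from the status output rather than typing it by hand. The following is a minimal Python sketch of that idea, not an official client. It assumes only that the output of foundry service status contains a local http URL with a port; the sample text, the function names, and the model ID are hypothetical, and the /chat/completions path is assumed from the OpenAI-style API convention rather than taken from this article.

```python
import json
import re
import urllib.request

# Hypothetical sample text. The real output format of `foundry service status`
# is not shown in this article, so the parser only searches for a local URL.
SAMPLE_STATUS = "Service is running on http://localhost:5273/openai/status"


def find_port(status_text: str) -> int:
    """Return the port of the first local http(s) URL found in status_text."""
    match = re.search(r"https?://(?:localhost|127\.0\.0\.1):(\d+)", status_text)
    if match is None:
        raise ValueError("no local endpoint URL found in status output")
    return int(match.group(1))


def base_url(port: int) -> str:
    """Build the URL to enter in the Open WebUI direct connection dialog."""
    return f"http://localhost:{port}/v1"


def build_chat_request(port: int, model_id: str, prompt: str) -> urllib.request.Request:
    """Prepare, but do not send, an OpenAI-style chat completion request."""
    body = {
        "model": model_id,  # assumption: must match a model ID the service exposes
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url(port)}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    port = find_port(SAMPLE_STATUS)
    print(base_url(port))
```

To try it against a running service, pass the real status text to find_port and send the prepared request with urllib.request.urlopen. The request is built without an Authorization header, which matches the Auth setting of None in the steps above.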

➡️ What is Foundry Local: https://learn.microsoft.com/en-us/azure/ai-foundry/foundry-local/what-is-foundry-local

➡️ Edge AI for Beginners: https://aka.ms/edgeai-for-beginners

➡️ Open WebUI: https://docs.openwebui.com/