Desk of Contents

-

SAM 3: Idea-Based mostly Visible Understanding and Segmentation

- The Evolution of Phase Something: From Geometry to Ideas

- Core Mannequin Structure and Technical Elements

- Promptable Idea Segmentation (PCS): Defining the Process

- The SA-Co Knowledge Engine and Huge Scale Dataset

- Coaching Methodology and Optimization

- Benchmarks and Efficiency Evaluation

- Actual-World Functions and Industrial Impression

- Challenges and Future Outlook

- Configuring Your Improvement Atmosphere

- Setup and Imports

- Loading the SAM 3 Mannequin

- Downloading a Few Photographs

- Helper Perform

- Promptable Idea Segmentation on Photographs: Single Textual content Immediate on a Single Picture

- Abstract

SAM 3: Idea-Based mostly Visible Understanding and Segmentation

On this tutorial, we introduce Phase Something Mannequin 3 (SAM 3), the shift from geometric promptable segmentation to open-vocabulary idea segmentation, and why that issues.

First, we summarize the mannequin household’s evolution (SAM-1 → SAM-2 → SAM-3), define the brand new Notion Encoder + DETR detector + Presence Head + streaming tracker structure, and describe the SA-Co information engine that enabled large-scale idea supervision.

Lastly, we arrange the event atmosphere and present single-prompt examples to show the mannequin’s primary picture segmentation workflow.

By the top of this tutorial, we’ll have a stable understanding of what makes SAM 3 revolutionary and how one can carry out primary concept-driven segmentation utilizing textual content prompts.

This lesson is the first of a 4-part collection on SAM 3:

- SAM 3: Idea-Based mostly Visible Understanding and Segmentation (this tutorial)

- Lesson 2

- Lesson 3

- Lesson 4

To study SAM 3 and how one can carry out idea segmentation on photographs utilizing textual content prompts, simply preserve studying.

The discharge of the Phase Something Mannequin 3 (SAM 3) marks a definitive transition in laptop imaginative and prescient, shifting the main target from purely geometric object localization to a classy, concept-driven understanding of visible scenes.

Developed by Meta AI, SAM 3 is described as the primary unified basis mannequin able to detecting, segmenting, and monitoring all situations of an open-vocabulary idea throughout photographs and movies through pure language prompts or visible exemplars.

Whereas its predecessors (i.e., SAM 1 and SAM 2) established the paradigm of Promptable Visible Segmentation (PVS) by permitting customers to outline objects through factors, packing containers, or masks, they remained semantically agnostic. Consequently, they basically functioned as high-precision geometric instruments.

SAM 3 transcends this limitation by introducing Promptable Idea Segmentation (PCS). This activity internalizes semantic recognition and permits the mannequin to “perceive” user-provided noun phrases (NPs).

This transformation from a geometrical segmenter to a imaginative and prescient basis mannequin is facilitated by a large new dataset, SA-Co (Phase Something with Ideas), and a novel architectural design that decouples recognition from localization.

The Evolution of Phase Something: From Geometry to Ideas

The trajectory of the Phase Something venture displays a broader development in synthetic intelligence towards multi-modal unification and zero-shot generalization.

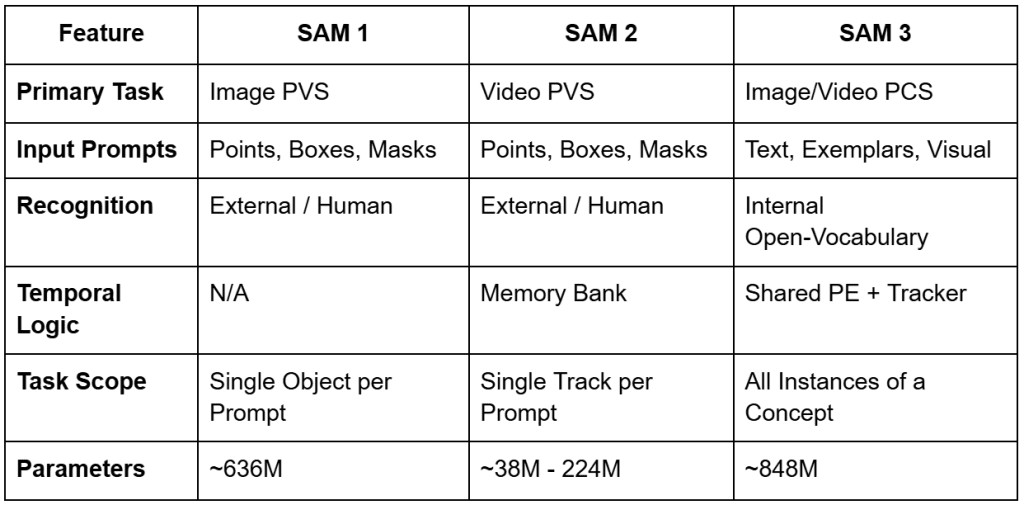

SAM 1, launched in early 2023, launched the idea of a promptable basis mannequin for picture segmentation, able to zero-shot generalization to unseen domains by utilizing easy spatial prompts.

Launched in 2024, SAM 2 prolonged this functionality to the temporal area by using a reminiscence financial institution structure to trace single objects throughout video frames with excessive temporal consistency.

Nonetheless, each fashions suffered from a standard bottleneck: they required an exterior system or a human operator to inform them the place an object was earlier than they might decide its extent.

SAM 3 addresses this foundational hole by integrating an open-vocabulary detector straight into the segmentation and monitoring pipeline. This integration permits the mannequin to resolve “what” is within the picture, successfully turning segmentation right into a query-based search interface.

For instance, whereas SAM 2 required customers to click on on each automotive in a car parking zone to section them, SAM 3 can settle for the textual content immediate “automobiles” and immediately return masks and distinctive identifiers for every particular person automotive within the scene. This evolution is summarized within the following comparability of the three mannequin generations.

Core Mannequin Structure and Technical Elements

The structure of SAM 3 represents a basic departure from earlier fashions, shifting to a unified, twin encoder-decoder transformer system.

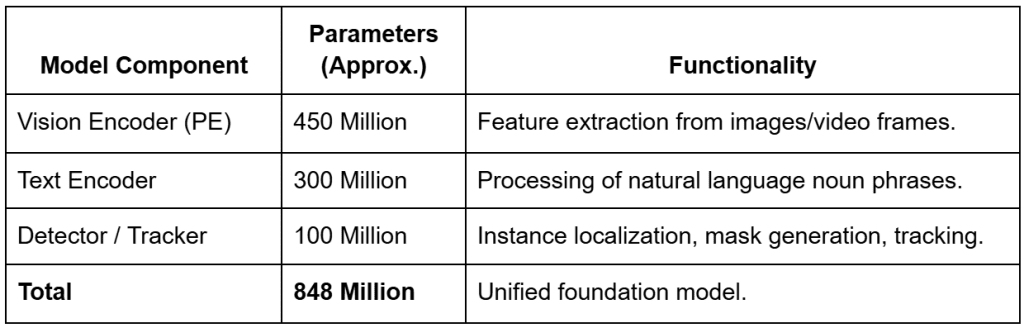

The mannequin includes roughly 848 million parameters (relying on configuration), a major scale-up from the most important SAM 2 variants, reflecting the elevated complexity of the open-vocabulary recognition activity.

These parameters are distributed throughout 3 principal architectural pillars:

- shared Notion Encoder (PE)

- DETR-based detector

- memory-based tracker

The Notion Encoder (PE) and Imaginative and prescient Spine

Central to SAM 3’s design is the Notion Encoder (PE), a imaginative and prescient spine that’s shared between the image-level detector and the video-level tracker.

This shared design is vital for guaranteeing that visible options are processed persistently throughout each static and temporal domains, minimizing activity interference and maximizing information scaling effectivity.

In contrast to SAM 2, which utilized the Hiera structure, SAM 3 employs a ViT-style notion encoder that’s extra simply aligned with the semantic embeddings of the textual content encoder.

The imaginative and prescient encoder accounts for about 450 million parameters and is designed to deal with high-resolution inputs (typically scaled to 1024 or 1008 pixels) to protect the spatial element mandatory for exact masks era.

The encoder’s output embeddings, usually of measurement  with 1024 channels, are handed to a fusion encoder that situations them based mostly on the supplied immediate tokens.

with 1024 channels, are handed to a fusion encoder that situations them based mostly on the supplied immediate tokens.

The Open-Vocabulary Textual content and Exemplar Encoders

To facilitate Promptable Idea Segmentation, SAM 3 integrates a classy textual content encoder with roughly 300 million parameters. This encoder processes noun phrases utilizing a specialised Byte Pair Encoding (BPE) vocabulary, permitting it to deal with an unlimited vary of descriptive phrases. When a person supplies a textual content immediate, the encoder generates linguistic embeddings which might be handled as “immediate tokens”.

Along with textual content, SAM 3 helps picture exemplars — visible crops of goal objects supplied by the person. These exemplars are processed by a devoted exemplar encoder that extracts visible options to outline the goal idea.

This multi-modal immediate interface permits the fusion encoder to collectively course of linguistic and visible cues, making a unified idea embedding that tells the mannequin precisely what to seek for within the picture.

The DETR-Based mostly Detector and Presence Head

The detection part of SAM 3 is predicated on the DEtection TRansformer (DETR) framework, which makes use of discovered object queries to work together with the conditioned picture options.

In an ordinary DETR structure, queries are accountable for each classifying an object and figuring out its location. Nonetheless, in open-vocabulary eventualities, this typically results in “phantom detections.” There are false positives the place the mannequin localizes background noise as a result of it lacks a world understanding of whether or not the requested idea even exists within the scene.

To resolve this, SAM 3 introduces the Presence Head, a novel architectural innovation that decouples recognition from localization. The Presence Head makes use of a discovered world token that attends to the complete picture context and predicts a single scalar “presence rating” ( ) between 0 and 1. This rating represents the likelihood that the prompted idea is current wherever within the body. The ultimate confidence rating for any particular person object question is then calculated as:

) between 0 and 1. This rating represents the likelihood that the prompted idea is current wherever within the body. The ultimate confidence rating for any particular person object question is then calculated as:

the place  is the rating produced by the person question’s native detection. If the Presence Head determines {that a} “unicorn” shouldn’t be within the picture (rating ≈ 0.01), it suppresses all native detections, stopping hallucinations throughout the board. This mechanism considerably improves the mannequin’s calibration, significantly on the Picture-Stage Matthews Correlation Coefficient (IL_MCC) metric.

is the rating produced by the person question’s native detection. If the Presence Head determines {that a} “unicorn” shouldn’t be within the picture (rating ≈ 0.01), it suppresses all native detections, stopping hallucinations throughout the board. This mechanism considerably improves the mannequin’s calibration, significantly on the Picture-Stage Matthews Correlation Coefficient (IL_MCC) metric.

The Streaming Reminiscence Tracker

For video processing, SAM 3 integrates a tracker that inherits the reminiscence financial institution structure from SAM 2 however is extra tightly coupled with the detector via the shared Notion Encoder.

On every body, the detector identifies new situations of the goal idea, whereas the tracker propagates present “masklets” (i.e., object-specific spatial-temporal masks) from earlier frames utilizing self- and cross-attention.

The system manages the temporal id of objects via an identical and replace stage. Propagated masks are in contrast with newly detected masks to make sure consistency, permitting the mannequin to deal with occlusions or objects that quickly exit the body.

If an object disappears behind an obstruction and later reappears, the detector supplies a “recent” detection that the tracker makes use of to re-establish the item’s historical past, stopping id drift.

Promptable Idea Segmentation (PCS): Defining the Process

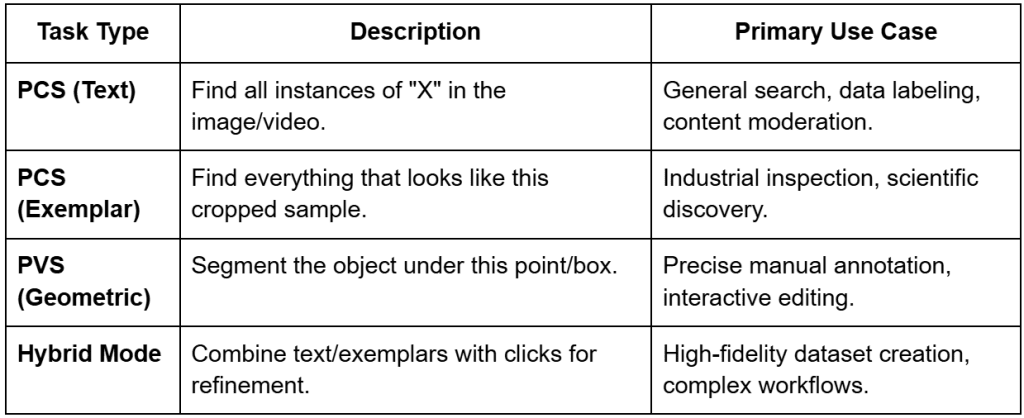

The introduction of Promptable Idea Segmentation (PCS) is the defining attribute of SAM 3, reworking it from a device for “segmenting that factor” to a system for “segmenting every thing like that”. SAM 3 unifies a number of segmentation paradigms (i.e., single-image, video, interactive refinement, and concept-driven detection) below a single spine.

Open-Vocabulary Noun Phrases

The mannequin’s main interplay mode is thru textual content prompts. In contrast to conventional object detectors which might be restricted to a hard and fast set of lessons (e.g., the 80 lessons in COCO), SAM 3 is open-vocabulary.

As a result of it has been skilled on over 4 million distinctive noun phrases, it could perceive particular descriptions (e.g., “delivery container,” “striped cat,” or “gamers sporting pink jerseys”). This permits researchers to question datasets for particular attributes with out retraining the mannequin for each new class.

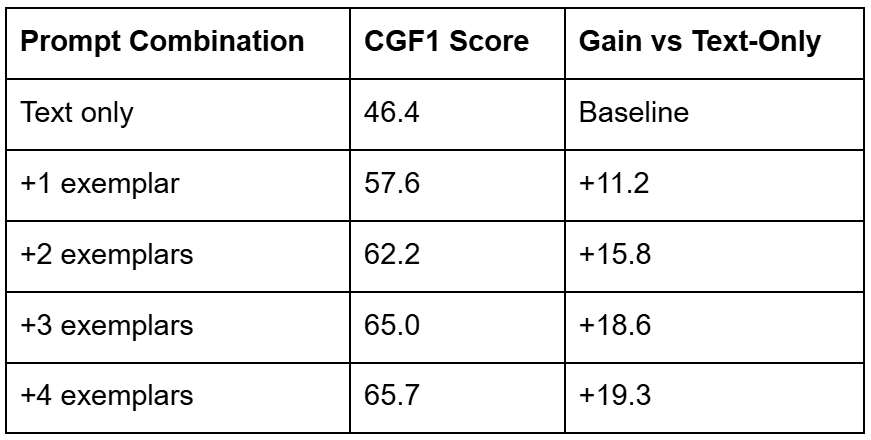

Picture Exemplars and Hybrid Prompting

Exemplar prompting permits customers to offer visible examples as an alternative of or along with textual content.

By drawing a field round an instance object, the person tells the mannequin to “discover extra of those”. That is significantly helpful in specialised fields the place textual content descriptions could also be ambiguous (e.g., figuring out a particular sort of business defect or a uncommon organic specimen).

The mannequin additionally helps hybrid prompting, the place a textual content immediate is used to slim the search and visible prompts are used for refinement. As an illustration, a person can immediate for “helmets” after which use unfavorable exemplars (packing containers round bicycle helmets) to pressure the mannequin to solely section building onerous hats.

This iterative refinement loop maintains the interactive “spirit” of the unique SAM whereas scaling it to hundreds of potential objects.

The SA-Co Knowledge Engine and Huge Scale Dataset

The success of SAM 3 is basically pushed by its coaching information. Meta developed an modern information engine to create the SA-Co (Phase Something with Ideas) dataset, which is the most important high-quality open-vocabulary segmentation dataset so far. This dataset accommodates roughly 5.2 million photographs and 52.5 thousand movies, with over 4 million distinctive noun phrases and 1.4 billion masks.

The 4-Stage Knowledge Engine

The SA-Co information engine follows a classy semi-automated suggestions loop designed to maximise each range and accuracy.

- Media Curation: The engine curates numerous media domains, shifting past homogeneous net information to incorporate aerial, doc, medical, and industrial imagery.

- Label Curation and AI Annotation: By leveraging a fancy ontology and multimodal massive language fashions (MLLMs) reminiscent of Llama 3.2 to function “AI annotators,” the system generates a large variety of distinctive noun phrases for the curated media.

- High quality Verification: AI annotators are deployed to verify masks high quality and exhaustivity. Apparently, these AI programs are reported to be 5× quicker than people at figuring out “unfavorable prompts” (ideas not current within the scene) and 36% quicker at figuring out “constructive prompts”.

- Human Refinement: Human annotators are used strategically, stepping in just for essentially the most difficult examples the place the AI fashions battle (e.g., fine-grained boundary corrections or resolving semantic ambiguities).

Dataset Composition and Statistics

The ensuing dataset is categorized into coaching and analysis units that cowl a variety of real-world eventualities.

- SA-Co/HQ: 5.2 million high-quality photographs with 4 million distinctive NPs.

- SA-Co/SYN: 38 million artificial phrases with 1.4 billion masks, used for massive-scale pre-training.

- SA-Co/VIDEO: 52.5 thousand movies containing over 467,000 masklets, guaranteeing temporal stability.

The analysis benchmark (SA-Co Benchmark) is especially rigorous, containing 214,000 distinctive phrases throughout 126,000 photographs and movies — over 50× the ideas present in present benchmarks (e.g., LVIS). It contains subsets (e.g., SA-Co/Gold), the place every image-phrase pair is annotated by three totally different people to ascertain a baseline for “human-level” efficiency.

Coaching Methodology and Optimization

The coaching of SAM 3 is a multi-stage course of designed to stabilize the educational of numerous duties inside a single mannequin spine.

4-Stage Coaching Pipeline

- Notion Encoder Pre-training: The imaginative and prescient spine is pre-trained to develop a strong function illustration of the world.

- Detector Pre-training: The detector is skilled on a mixture of artificial information and high-quality exterior datasets to ascertain foundational idea recognition.

- Detector Positive-tuning: The mannequin is fine-tuned on the SA-Co/HQ dataset, the place it learns to deal with exhaustive occasion detection, and the Presence Head is optimized utilizing difficult unfavorable phrases.

- Tracker Coaching: Lastly, the tracker is skilled whereas the imaginative and prescient spine is frozen, permitting the mannequin to study temporal consistency with out degrading the detector’s semantic precision.

Optimization Methods

The coaching course of leverages fashionable engineering methods to deal with the huge dataset and parameter rely.

- Precision: Use of PyTorch Computerized Combined Precision (AMP) (float16/bfloat16) to optimize reminiscence utilization on massive GPUs (e.g., the H200).

- Gradient Checkpointing: Enabled for decoder cross-attention blocks to cut back reminiscence overhead through the coaching of the 848M-parameter mannequin.

- Instructor Caching: In distillation eventualities (e.g., EfficientSAM3), trainer encoder options are cached to cut back the I/O bottleneck, considerably accelerating the coaching of smaller “scholar” fashions.

Benchmarks and Efficiency Evaluation

SAM 3 delivers a “step change” in efficiency, setting new state-of-the-art outcomes throughout picture and video segmentation duties.

Zero-Shot Occasion Segmentation (LVIS)

The LVIS dataset is an ordinary benchmark for long-tail occasion segmentation. SAM 3 achieves a zero-shot masks common precision (AP) of 47.0 (or 48.8 in some experiences), representing a 22% enchancment over the earlier better of 38.5. This means a vastly improved means to acknowledge uncommon or specialised classes with out specific coaching on these labels.

The SA-Co Benchmark Outcomes

On the brand new SA-Co benchmark, SAM 3 achieves a 2× efficiency acquire over present programs. On the Gold subset, the mannequin reaches 88% of human-level efficiency, establishing it as a extremely dependable device for automated labeling.

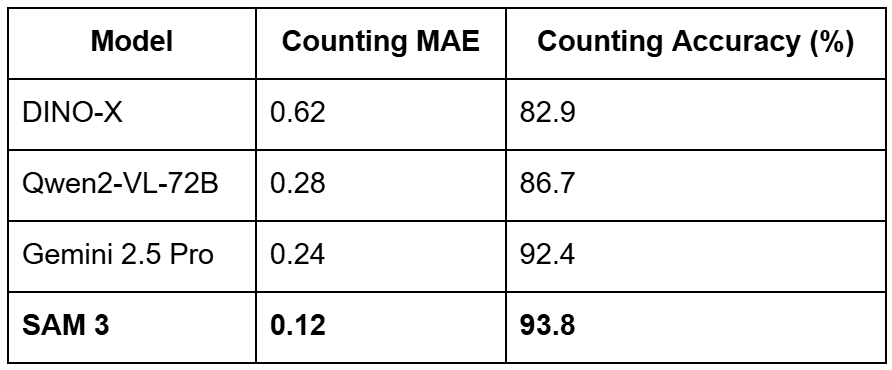

Object Counting and Reasoning Benchmarks

The mannequin’s means to rely and motive about objects can be a significant spotlight. In counting duties, SAM 3 achieves an accuracy of 93.8% and a Imply Absolute Error (MAE) of simply 0.12, outperforming huge fashions (e.g., Gemini 2.5 Professional and Qwen2-VL-72B) on exact visible grounding benchmarks.

For advanced reasoning duties (ReasonSeg), the place directions is perhaps “the leftmost individual sporting a blue vest,” SAM 3, when paired with an MLLM agent, achieves 76.0 gIoU (Generalized Intersection over Union), a 16.9% enchancment over the prior state-of-the-art.

Actual-World Functions and Industrial Impression

The flexibility of SAM 3 makes it a strong basis for a variety of business and artistic purposes.

Good Video Modifying and Content material Creation

Creators can now use pure language to use results to particular topics in movies. For instance, a video editor can immediate “apply a sepia filter to the blue chair” or “blur the faces of all bystanders,” and the mannequin will deal with the segmentation and monitoring all through the clip. This performance is being built-in into instruments (e.g., Vibes on the Meta AI app and media modifying flows on Instagram).

Dataset Labeling and Distillation

As SAM 3 is computationally heavy (working at  30 ms per picture on an H200), its most rapid industrial affect is in scaling information annotation. Groups can use SAM 3 to robotically label thousands and thousands of photographs with high-quality occasion masks after which use this “floor reality” to coach smaller, quicker fashions like YOLO or EfficientSAM3 for real-time use on the sting (e.g., in drones or cellular apps).

30 ms per picture on an H200), its most rapid industrial affect is in scaling information annotation. Groups can use SAM 3 to robotically label thousands and thousands of photographs with high-quality occasion masks after which use this “floor reality” to coach smaller, quicker fashions like YOLO or EfficientSAM3 for real-time use on the sting (e.g., in drones or cellular apps).

Robotics and AR Analysis

SAM 3 is being utilized in Aria Gen 2 analysis glasses to assist section and observe palms and objects from a first-person perspective. This helps contextual AR analysis, the place a wearable assistant can acknowledge {that a} person is “holding a screwdriver” or “taking a look at a leaky pipe” and supply related holographic overlays or directions.

Challenges and Future Outlook

Regardless of its breakthrough efficiency, a number of analysis frontiers stay for the Phase Something household.

- Instruction Reasoning: Whereas SAM 3 handles atomic ideas, it nonetheless depends on exterior brokers (MLLMs) to interpret long-form or advanced directions. Future iterations (e.g., SAM 3-I) are working to combine this instruction-level reasoning natively into the mannequin.

- Effectivity and On-Gadget Use: The 848M parameter measurement restricts SAM 3 to server-side environments. The event of EfficientSAM3 via progressive hierarchical distillation is essential for bringing concept-aware segmentation to real-time, on-device purposes.

- Positive-Grained Context: In duties involving fine-grained organic constructions or context-dependent targets, textual content prompts can generally fail or present coarse boundaries. Positive-tuning with adapters (e.g., SAM3-UNet) stays an important analysis path for adapting the muse mannequin to specialised scientific and medical domains.

Would you want rapid entry to three,457 photographs curated and labeled with hand gestures to coach, discover, and experiment with … free of charge? Head over to Roboflow and get a free account to seize these hand gesture photographs.

Configuring Your Improvement Atmosphere

To comply with this information, it’s essential to have the next libraries put in in your system.

!pip set up --q git+https://github.com/huggingface/transformers supervision jupyter_bbox_widget

We set up the transformers library to load the SAM 3 mannequin and processor, the supervision library for annotation, drawing, and inspection, which we use later to visualise bounding packing containers and segmentation outputs. We additionally set up jupyter_bbox_widget, which provides us an interactive widget. This widget runs inside a pocket book and lets us click on on the picture so as to add factors or draw bounding packing containers.

We additionally cross the --q flag to cover set up logs. This retains pocket book output clear.

Want Assist Configuring Your Improvement Atmosphere?

All that stated, are you:

- Quick on time?

- Studying in your employer’s administratively locked system?

- Desirous to skip the trouble of preventing with the command line, package deal managers, and digital environments?

- Able to run the code instantly in your Home windows, macOS, or Linux system?

Then be part of PyImageSearch College in the present day!

Acquire entry to Jupyter Notebooks for this tutorial and different PyImageSearch guides pre-configured to run on Google Colab’s ecosystem proper in your net browser! No set up required.

And better of all, these Jupyter Notebooks will run on Home windows, macOS, and Linux!

Setup and Imports

As soon as put in, we transfer on to import the required libraries.

import io import torch import base64 import requests import matplotlib import numpy as np import ipywidgets as widgets import matplotlib.pyplot as plt from google.colab import output from speed up import Accelerator from IPython.show import show from jupyter_bbox_widget import BBoxWidget from PIL import Picture, ImageDraw, ImageFont from transformers import Sam3Processor, Sam3Model, Sam3TrackerProcessor, Sam3TrackerModel

We import the next:

io: Python’s built-in module to deal with in-memory picture buffers later when changing PIL photographs to base64 formattorch: to run the SAM 3 mannequin, ship tensors to the GPU, and work with mannequin outputsbase64: module to transform our photographs into base64 strings in order that the BBox widget can show them within the pocket bookrequests: library to obtain photographs straight from a URL; this retains our workflow easy and avoids handbook file uploads

We import a number of helper libraries.

matplotlib.pyplot: helps us visualize masks and overlaysnumpy: offers us quick array operationsipywidgets: permits interactive components contained in the pocket book

We import the output utility from Colab. Later, we use it to allow interactive widgets. With out this step, our bounding field widget is not going to render. We import Accelerator from Hugging Face to run the mannequin effectively on both CPU or GPU with the identical code. It additionally simplifies gadget placement.

We import the show perform to render photographs and widgets straight in pocket book cells, and BBoxWidget acts because the core interactive device that enables us to click on and draw bounding packing containers or factors on high of a picture. We use this as our immediate enter system.

We additionally import 3 lessons from Pillow:

Picture: hundreds RGB photographsImageDraw: helps us draw shapes on photographsImageFont: offers us textual content rendering help for overlays

Lastly, we import our SAM 3 instruments from transformers.

Sam3Processor: prepares inputs for the segmentation mannequinSam3Model: performs segmentation from textual content and field promptsSam3TrackerProcessor: prepares inputs for point-based or monitoring promptsSam3TrackerModel: runs point-based segmentation and masking

Loading the SAM 3 Mannequin

gadget = "cuda" if torch.cuda.is_available() else "cpu"

processor = Sam3Processor.from_pretrained("fb/sam3")

mannequin = Sam3Model.from_pretrained("fb/sam3").to(gadget)

First, we verify if a GPU is offered within the atmosphere. If PyTorch detects CUDA (Compute Unified Gadget Structure), then we use the GPU for quicker inference. In any other case, we fall again to the CPU. This verify ensures our code runs effectively on any machine (Line 1).

Subsequent, we load the Sam3Processor. The processor is accountable for making ready all inputs earlier than they attain the mannequin. It handles picture preprocessing, bounding field formatting, textual content prompts, and tensor conversion. In any case, it makes our uncooked photographs appropriate with the mannequin (Line 3).

Lastly, we load the Sam3Model from Hugging Face. This mannequin takes the processed inputs and generates segmentation masks. We instantly transfer the mannequin to the chosen gadget (GPU or CPU) for inference (Line 4).

Downloading a Few Photographs

!wget -q https://media.roboflow.com/notebooks/examples/birds.jpg !wget -q https://media.roboflow.com/notebooks/examples/traffic_jam.jpg !wget -q https://media.roboflow.com/notebooks/examples/basketball_game.jpg !wget -q https://media.roboflow.com/notebooks/examples/dog-2.jpeg

Right here, we obtain a couple of photographs from the Roboflow media server utilizing the wget command and use the -q flag to suppress output and preserve the pocket book clear.

Helper Perform

This helper overlays segmentation masks, bounding packing containers, labels, and confidence scores straight on high of the unique picture. We use it all through the pocket book to visualise mannequin predictions.

def overlay_masks_boxes_scores(

picture,

masks,

packing containers,

scores,

labels=None,

score_threshold=0.0,

alpha=0.5,

):

picture = picture.convert("RGBA")

masks = masks.cpu().numpy()

packing containers = packing containers.cpu().numpy()

scores = scores.cpu().numpy()

if labels is None:

labels = ["object"] * len(scores)

labels = np.array(labels)

# Rating filtering

preserve = scores >= score_threshold

masks = masks[keep]

packing containers = packing containers[keep]

scores = scores[keep]

labels = labels[keep]

n_instances = len(masks)

if n_instances == 0:

return picture

# Colormap (one colour per occasion)

cmap = matplotlib.colormaps.get_cmap("rainbow").resampled(n_instances)

colours = [

tuple(int(c * 255) for c in cmap(i)[:3])

for i in vary(n_instances)

]

First, we outline a perform named overlay_masks_boxes_scores. It accepts the unique RGB picture and the mannequin outputs: masks, packing containers, and scores. We additionally settle for elective labels, a rating threshold, and a transparency issue alpha (Strains 1-9).

Subsequent, we convert the picture into RGBA format. The additional alpha channel permits us to mix masks easily on high of the picture (Line 10). We transfer the tensors to the CPU and convert them to NumPy arrays. This makes them simpler to govern and appropriate with Pillow (Strains 12-14).

If the person doesn’t present labels, we assign a default label string to every detected object (Strains 16 and 17). We convert labels to a NumPy array so we are able to filter them later, together with masks and scores (Line 19). We filter out detections beneath the rating threshold. This permits us to cover low-confidence masks and cut back muddle within the visualization (Strains 22-26). If nothing survives filtering, we return the unique picture unchanged (Strains 28-30).

We choose a rainbow colormap and pattern one distinctive colour per detected object. We convert float values to RGB integer tuples (0-255 vary) (Strains 33-37).

# =========================

# PASS 1: MASK OVERLAY

# =========================

for masks, colour in zip(masks, colours):

mask_img = Picture.fromarray((masks * 255).astype(np.uint8))

overlay = Picture.new("RGBA", picture.measurement, colour + (0,))

overlay.putalpha(mask_img.level(lambda v: int(v * alpha)))

picture = Picture.alpha_composite(picture, overlay)

Right here, we loop via every mask-color pair. For every masks, we create a grayscale masks picture, convert it right into a clear RGBA overlay, and mix it onto the unique picture. The alpha worth controls transparency. This step provides gentle, coloured areas over segmented areas (Strains 42-46).

# =========================

# PASS 2: BOXES + LABELS

# =========================

draw = ImageDraw.Draw(picture)

strive:

font = ImageFont.load_default()

besides Exception:

font = None

for field, rating, label, colour in zip(packing containers, scores, labels, colours):

x1, y1, x2, y2 = map(int, field.tolist())

# --- Bounding field (with black stroke for visibility)

draw.rectangle([(x1, y1), (x2, y2)], define="black", width=3)

draw.rectangle([(x1, y1), (x2, y2)], define=colour, width=2)

# --- Label textual content

textual content = f"{label} | {rating:.2f}"

tb = draw.textbbox((0, 0), textual content, font=font)

tw, th = tb[2] - tb[0], tb[3] - tb[1]

# Label background

draw.rectangle(

[(x1, y1 - th - 4), (x1 + tw + 6, y1)],

fill=colour,

)

# Black label textual content (excessive distinction)

draw.textual content(

(x1 + 3, y1 - th - 2),

textual content,

fill="black",

font=font,

)

return picture

Right here, we put together a drawing context to overlay rectangles and textual content (Line 51). We try to load a default font. If unavailable, we fall again to no font (Strains 53-56). We loop over every object and extract its bounding field coordinates (Strains 58 and 59).

We draw two rectangles: The primary one (black) improves visibility, and the second makes use of the assigned object colour (Strains 62 and 63). We format the label and rating textual content, then compute the textual content field measurement (Strains 66-68). We draw a coloured background rectangle behind the label textual content (Strains 71-74). We draw black textual content on high. Black textual content supplies a robust distinction towards brilliant overlay colours (Strains 77-82).

Lastly, we return the annotated picture (Line 84).

Promptable Idea Segmentation on Photographs: Single Textual content Immediate on a Single Picture

Now, we’re prepared to indicate Promptable Idea Segmentation on photographs.

On this instance, we section particular visible ideas from a picture utilizing solely a single textual content immediate.

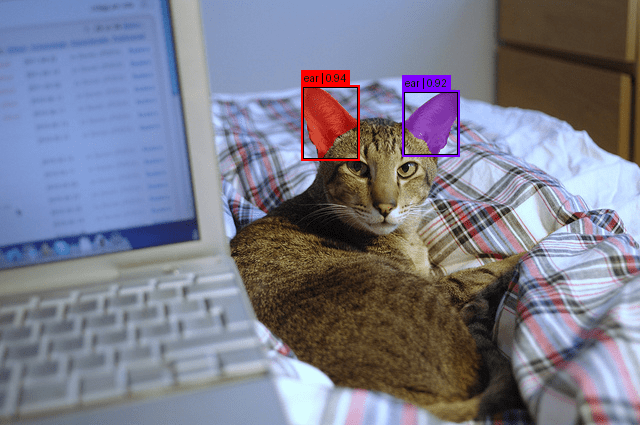

Instance 1

# Load picture

image_url = "http://photographs.cocodataset.org/val2017/000000077595.jpg"

picture = Picture.open(requests.get(image_url, stream=True).uncooked).convert("RGB")

# Phase utilizing textual content immediate

inputs = processor(photographs=picture, textual content="ear", return_tensors="pt").to(gadget)

with torch.no_grad():

outputs = mannequin(**inputs)

# Put up-process outcomes

outcomes = processor.post_process_instance_segmentation(

outputs,

threshold=0.5,

mask_threshold=0.5,

target_sizes=inputs.get("original_sizes").tolist()

)[0]

print(f"Discovered {len(outcomes['masks'])} objects")

# Outcomes comprise:

# - masks: Binary masks resized to unique picture measurement

# - packing containers: Bounding packing containers in absolute pixel coordinates (xyxy format)

# - scores: Confidence scores

First, we load a check picture from the COCO (Widespread Objects in Context) dataset. We obtain it straight through URL, convert its bytes right into a PIL picture, and guarantee it’s in RGB format utilizing Picture from Pillow. This supplies a standardized enter for SAM 3 (Strains 2 and three).

Subsequent, we put together the mannequin inputs. We cross the picture and a single textual content immediate — the key phrase "ear". The processor handles all preprocessing steps (e.g., resizing, normalization, and token encoding). We transfer the ultimate tensors to our chosen gadget (GPU or CPU) (Line 6).

Then, we run inference. We disable gradient monitoring utilizing torch.no_grad(). This reduces reminiscence utilization and quickens ahead passes. The mannequin returns uncooked segmentation outputs (Strains 8 and 9).

After inference, we convert uncooked mannequin outputs into usable instance-level segmentation predictions utilizing processor.post_process_instance_segmentation (Strains 12-17).

- We apply a

thresholdto filter weak detections. - We apply

mask_thresholdto transform predicted logits into binary masks. - We resize masks again to their unique dimensions.

We index [0] as a result of this output corresponds to the primary (and solely) picture within the batch (Line 17).

We print the variety of detected occasion masks. Every masks corresponds to at least one “ear” discovered within the picture (Line 19).

Under is the variety of objects detected within the picture.

Discovered 2 objects

Output

labels = ["ear"] * len(outcomes["scores"]) overlay_masks_boxes_scores( picture, outcomes["masks"], outcomes["boxes"], outcomes["scores"], labels )

Now, to visualise the output, we assign the label "ear" to every detected occasion. This ensures our visualizer shows clear textual content overlays.

Lastly, we name our visualization helper. This overlays:

- segmentation masks

- bounding packing containers

- labels

- scores

straight on high of the picture. The result’s a transparent visible map displaying the place SAM 3 discovered ears within the scene (Strains 2-8).

In Determine 1, we are able to see the item (ear) detected within the picture.

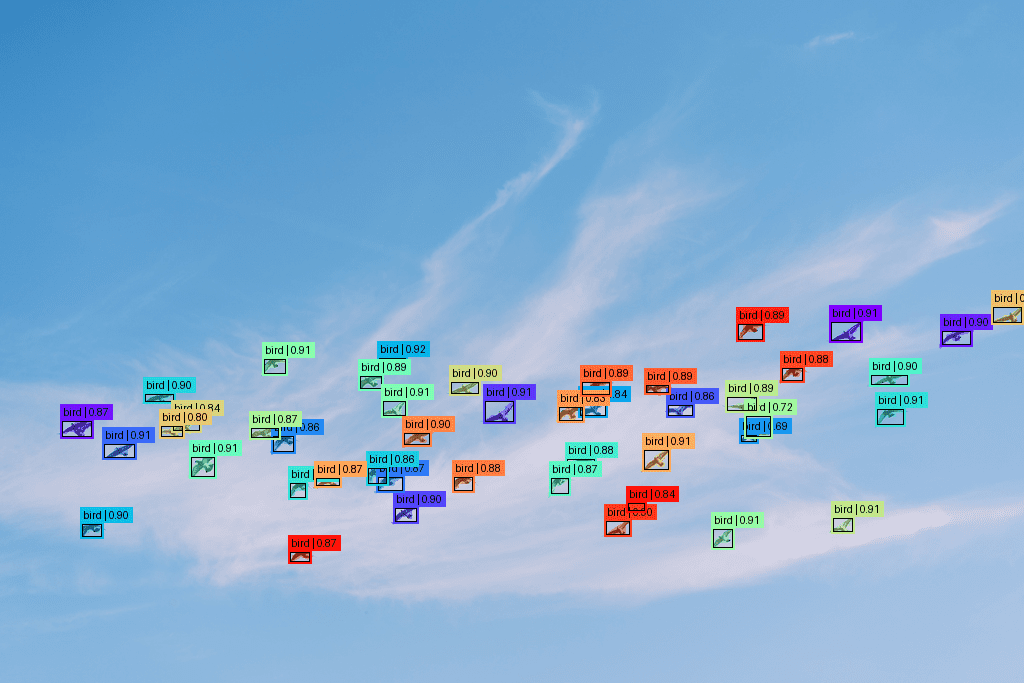

Instance 2

IMAGE_PATH = '/content material/birds.jpg'

# Load picture

picture = Picture.open(IMAGE_PATH).convert("RGB")

# Phase utilizing textual content immediate

inputs = processor(photographs=picture, textual content="fowl", return_tensors="pt").to(gadget)

with torch.no_grad():

outputs = mannequin(**inputs)

# Put up-process outcomes

outcomes = processor.post_process_instance_segmentation(

outputs,

threshold=0.5,

mask_threshold=0.5,

target_sizes=inputs.get("original_sizes").tolist()

)[0]

print(f"Discovered {len(outcomes['masks'])} objects")

# Outcomes comprise:

# - masks: Binary masks resized to unique picture measurement

# - packing containers: Bounding packing containers in absolute pixel coordinates (xyxy format)

# - scores: Confidence scores

This block of code is similar to the earlier instance. The one change is that we now load a native picture (birds.jpg) as an alternative of downloading one from COCO. We additionally replace the segmentation immediate from "ear" to "fowl".

Under is the variety of objects detected within the picture.

Discovered 45 objects

Output

labels = ["bird"] * len(outcomes["scores"]) overlay_masks_boxes_scores( picture, outcomes["masks"], outcomes["boxes"], outcomes["scores"], labels )

The output code stays just like the above. The one distinction is the label change from "ear" to "fowl".

In Determine 2, we are able to see the item (fowl) detected within the picture.

Instance 3

IMAGE_PATH = '/content material/traffic_jam.jpg'

# Load picture

picture = Picture.open(IMAGE_PATH).convert("RGB")

# Phase utilizing textual content immediate

inputs = processor(photographs=picture, textual content="taxi", return_tensors="pt").to(gadget)

with torch.no_grad():

outputs = mannequin(**inputs)

# Put up-process outcomes

outcomes = processor.post_process_instance_segmentation(

outputs,

threshold=0.5,

mask_threshold=0.5,

target_sizes=inputs.get("original_sizes").tolist()

)[0]

print(f"Discovered {len(outcomes['masks'])} objects")

# Outcomes comprise:

# - masks: Binary masks resized to unique picture measurement

# - packing containers: Bounding packing containers in absolute pixel coordinates (xyxy format)

# - scores: Confidence scores

This block of code is similar to the earlier instance. The one change is that we now load a native picture (traffic_jam.jpg) as an alternative of downloading one from COCO. We additionally replace the segmentation immediate from "fowl" to "taxi".

Under is the variety of objects detected within the picture.

Discovered 16 objects

Output

labels = ["taxi"] * len(outcomes["scores"]) overlay_masks_boxes_scores( picture, outcomes["masks"], outcomes["boxes"], outcomes["scores"], labels )

The output code stays just like the above. The one distinction is the change of the label from "fowl" to "taxi".

In Determine 3, we are able to see the item (taxi) detected within the picture.

What’s subsequent? We suggest PyImageSearch College.

86+ complete lessons • 115+ hours hours of on-demand code walkthrough movies • Final up to date: January 2026

★★★★★ 4.84 (128 Scores) • 16,000+ College students Enrolled

I strongly imagine that for those who had the appropriate trainer you would grasp laptop imaginative and prescient and deep studying.

Do you assume studying laptop imaginative and prescient and deep studying must be time-consuming, overwhelming, and sophisticated? Or has to contain advanced arithmetic and equations? Or requires a level in laptop science?

That’s not the case.

All it’s essential to grasp laptop imaginative and prescient and deep studying is for somebody to clarify issues to you in easy, intuitive phrases. And that’s precisely what I do. My mission is to vary training and the way advanced Synthetic Intelligence subjects are taught.

For those who’re severe about studying laptop imaginative and prescient, your subsequent cease needs to be PyImageSearch College, essentially the most complete laptop imaginative and prescient, deep studying, and OpenCV course on-line in the present day. Right here you’ll learn to efficiently and confidently apply laptop imaginative and prescient to your work, analysis, and initiatives. Be a part of me in laptop imaginative and prescient mastery.

Inside PyImageSearch College you may discover:

- &verify; 86+ programs on important laptop imaginative and prescient, deep studying, and OpenCV subjects

- &verify; 86 Certificates of Completion

- &verify; 115+ hours hours of on-demand video

- &verify; Model new programs launched usually, guaranteeing you possibly can sustain with state-of-the-art methods

- &verify; Pre-configured Jupyter Notebooks in Google Colab

- &verify; Run all code examples in your net browser — works on Home windows, macOS, and Linux (no dev atmosphere configuration required!)

- &verify; Entry to centralized code repos for all 540+ tutorials on PyImageSearch

- &verify; Straightforward one-click downloads for code, datasets, pre-trained fashions, and many others.

- &verify; Entry on cellular, laptop computer, desktop, and many others.

Abstract

On this tutorial, we explored how the discharge of Phase Something Mannequin 3 (SAM 3) represents a basic shift in laptop imaginative and prescient — from geometry-driven segmentation to concept-driven visible understanding. In contrast to SAM 1 and SAM 2, which relied on exterior cues to determine the place an object is, SAM 3 internalizes semantic recognition and permits customers to straight question what they wish to section utilizing pure language or visible exemplars.

We examined how this transition is enabled by a unified structure constructed round a shared Notion Encoder, an open-vocabulary DETR-based detector with a Presence Head, and a memory-based tracker for movies. We additionally mentioned how the huge SA-Co dataset and a fastidiously staged coaching pipeline permit SAM 3 to scale to thousands and thousands of ideas whereas sustaining sturdy calibration and zero-shot efficiency.

Via sensible examples, we demonstrated how one can arrange SAM 3 in your improvement atmosphere and implement single textual content immediate segmentation throughout varied eventualities — from detecting ears on a cat to figuring out birds in a flock and taxis in site visitors.

In Half 2, we’ll dive deeper into superior prompting methods, together with multi-prompt segmentation, bounding field steerage, unfavorable prompts, and absolutely interactive segmentation workflows that offer you pixel-perfect management over your outcomes. Whether or not you’re constructing annotation pipelines, video modifying instruments, or robotics purposes, Half 2 will present you how one can harness SAM 3’s full potential via refined immediate engineering.

Quotation Info

Thakur, P. “SAM 3: Idea-Based mostly Visible Understanding and Segmentation,” PyImageSearch, P. Chugh, S. Huot, G. Kudriavtsev, and A. Sharma, eds., 2026, https://pyimg.co/uming

@incollection{Thakur_2026_sam-3-concept-based-visual-understanding-and-segmentation,

creator = {Piyush Thakur},

title = {{SAM 3: Idea-Based mostly Visible Understanding and Segmentation}},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Susan Huot and Georgii Kudriavtsev and Aditya Sharma},

12 months = {2026},

url = {https://pyimg.co/uming},

}

To obtain the supply code to this put up (and be notified when future tutorials are printed right here on PyImageSearch), merely enter your electronic mail tackle within the type beneath!

Obtain the Supply Code and FREE 17-page Useful resource Information

Enter your electronic mail tackle beneath to get a .zip of the code and a FREE 17-page Useful resource Information on Laptop Imaginative and prescient, OpenCV, and Deep Studying. Inside you may discover my hand-picked tutorials, books, programs, and libraries that will help you grasp CV and DL!

The put up SAM 3: Idea-Based mostly Visible Understanding and Segmentation appeared first on PyImageSearch.