OpenAI has released a research preview of gpt-oss-safeguard, two open-weight safety reasoning models that let developers apply custom safety policies at inference time. The models come in two sizes, gpt-oss-safeguard-120b and gpt-oss-safeguard-20b. Both are fine-tuned from gpt-oss, both are licensed under Apache 2.0, and both are available on Hugging Face for local use.

Why Policy-Conditioned Safety Matters

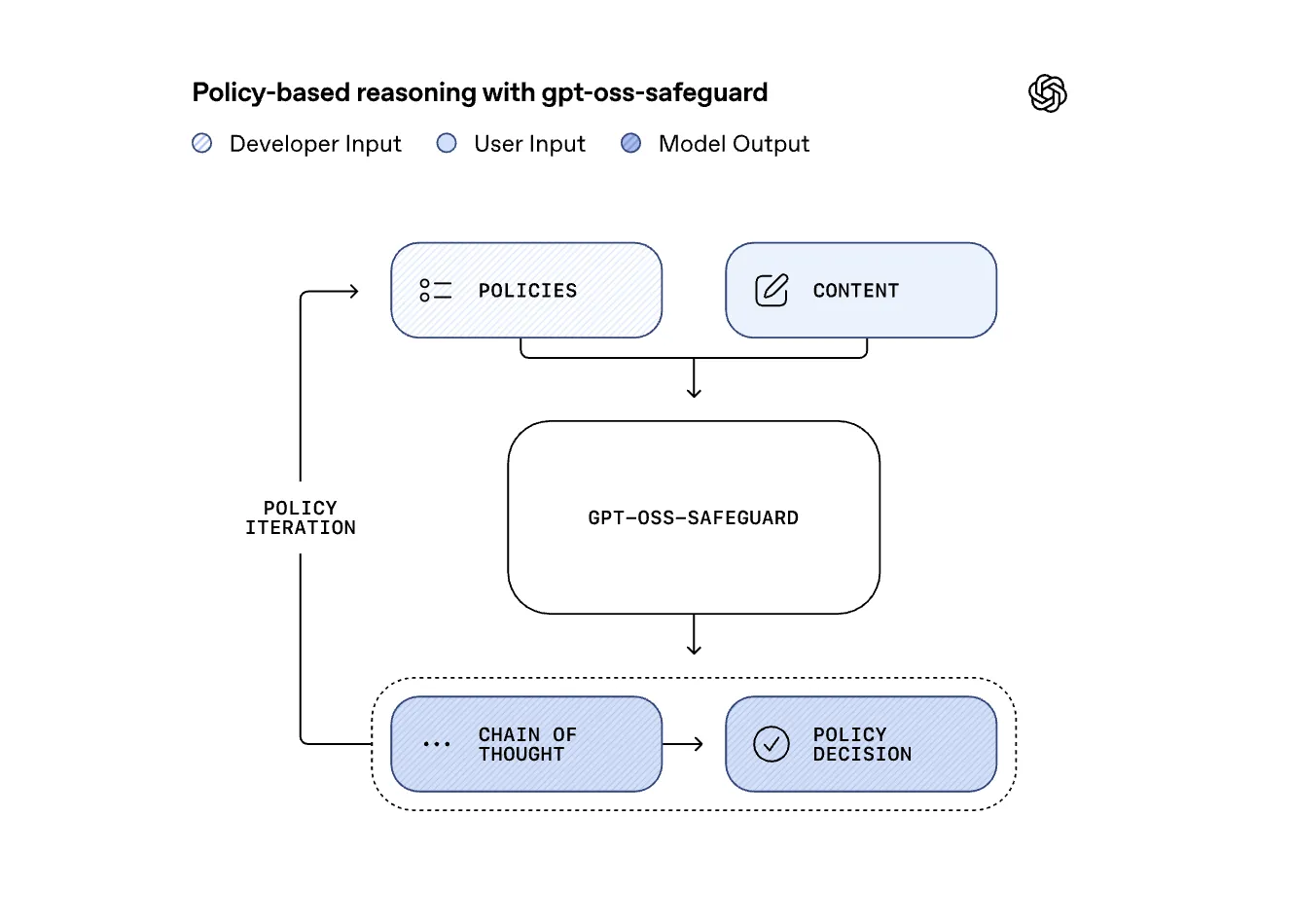

Conventional moderation models are trained on a single fixed policy. When that policy changes, the model must be retrained or replaced. gpt-oss-safeguard reverses this relationship. It takes the developer-authored policy as input together with the user content, then reasons step by step to decide whether the content violates the policy. This turns safety into a prompt-and-evaluation task, which is better suited to fast-changing or domain-specific harms such as fraud, biology, self-harm or game-specific abuse.
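A minimal sketch of the policy-as-input idea follows. It assumes a hypothetical `reasoner` callable that takes chat messages and returns the model's text, and a policy that asks the model to end with a `VERDICT:` line. Neither the message layout nor the verdict format comes from OpenAI's documentation, and `toy_reasoner` is a keyword-matching stand-in that lets the example run offline.

```python
from typing import Callable, Dict, List

# Hypothetical interface: takes chat messages, returns the model's final text.
Messages = List[Dict[str, str]]
Reasoner = Callable[[Messages], str]


def build_messages(policy: str, content: str) -> Messages:
    """Pair a developer-authored policy with the content to classify.

    Assumption: policy in the system message, content in the user message.
    Check the model card for the layout the model was actually trained on.
    """
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]


def classify(reasoner: Reasoner, policy: str, content: str) -> bool:
    """Return True if the model's last line reads 'VERDICT: VIOLATES'."""
    answer = reasoner(build_messages(policy, content))
    lines = answer.strip().splitlines()
    return bool(lines) and lines[-1].strip().upper() == "VERDICT: VIOLATES"


def toy_reasoner(messages: Messages) -> str:
    """Offline stand-in for the model: flags terms listed after 'Banned:'."""
    policy = messages[0]["content"].lower()
    text = messages[1]["content"].lower()
    banned: List[str] = []
    if "banned:" in policy:
        banned = [t.strip() for t in policy.split("banned:", 1)[1].split(",")]
    hit = any(t and t in text for t in banned)
    return "Checked the message against the banned terms.\nVERDICT: " + (
        "VIOLATES" if hit else "ALLOWED"
    )


# Two policies, one message: the verdict changes with the policy, not the weights.
policy_a = "Flag payment scams. End with VERDICT: VIOLATES or VERDICT: ALLOWED.\nBanned: gift card, wire transfer"
policy_b = "Flag crypto promotion. End with VERDICT: VIOLATES or VERDICT: ALLOWED.\nBanned: crypto, airdrop"
message = "Send me a gift card code and I'll double it."

print(classify(toy_reasoner, policy_a, message))
print(classify(toy_reasoner, policy_b, message))
```

Swapping `policy_a` for `policy_b` flips the verdict for the same message without touching any weights, which is the property this section describes.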

Same Pattern as OpenAI’s Internal Safety Reasoner

OpenAI states that gpt-oss-safeguard is an open-weight implementation of the Safety Reasoner used internally across systems such as GPT-5, ChatGPT Agent and Sora 2. In production, OpenAI already runs small high-recall filters first, then escalates uncertain or sensitive items to a reasoning model; in recent launches, up to 16 percent of total compute was spent on safety reasoning. The open release lets external teams reproduce this defense-in-depth pattern instead of guessing how OpenAI’s stack works.
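The filter-then-escalate pattern can be sketched as a two-stage cascade. `fast_score` and `reasoner` are hypothetical placeholders: a small classifier that returns a risk score, and a call to a reasoning model such as gpt-oss-safeguard. The 0.2 threshold is an arbitrary illustrative value, not an OpenAI setting.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Decision:
    violates: bool
    escalated: bool  # True if the slow reasoning model was consulted


def cascade(
    content: str,
    fast_score: Callable[[str], float],
    reasoner: Callable[[str], bool],
    threshold: float = 0.2,
) -> Decision:
    """Cheap high-recall filter first; escalate anything it is not sure is safe.

    A low threshold keeps recall high: items scoring below it are allowed
    without reasoning, and everything else goes to the reasoning model.
    """
    if fast_score(content) < threshold:
        return Decision(violates=False, escalated=False)
    return Decision(violates=reasoner(content), escalated=True)


def moderate_batch(
    items: Sequence[str],
    fast_score: Callable[[str], float],
    reasoner: Callable[[str], bool],
    threshold: float = 0.2,
) -> Tuple[List[Decision], float]:
    """Moderate a batch and report what fraction reached the reasoner."""
    decisions = [cascade(x, fast_score, reasoner, threshold) for x in items]
    rate = sum(d.escalated for d in decisions) / len(decisions)
    return decisions, rate


# Toy stand-ins: a keyword scorer and a "reasoner" that looks for a request.
def toy_score(text: str) -> float:
    return 0.9 if "password" in text.lower() else 0.05


def toy_reasoner(text: str) -> bool:
    return "send me" in text.lower()


items = ["hello there", "What makes a strong password?", "Send me your password"]
decisions, escalation_rate = moderate_batch(items, toy_score, toy_reasoner)
print([d.violates for d in decisions], escalation_rate)
```

The escalation rate is the lever that sets how much compute goes to safety reasoning: a lower threshold raises recall but sends more traffic to the expensive model.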

Model Sizes and Hardware Fit

The large model, gpt-oss-safeguard-120b, has 117B parameters with 5.1B active parameters and is sized to fit on a single 80GB H100-class GPU. The smaller gpt-oss-safeguard-20b has 21B parameters with 3.6B active parameters and targets lower latency or smaller GPUs, including 16GB setups. Both models were trained on the harmony response format, so prompts must follow that structure; otherwise, results will degrade. The license is Apache 2.0, the same as the parent gpt-oss models, so commercial local deployment is permitted.
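A back-of-the-envelope check of the hardware claims follows. gpt-oss is a mixture-of-experts model, and every expert must be resident in memory, so the total parameter count (117B, 21B), not the active count, sets the weight footprint. The 4.25 bits per parameter figure is an assumption: a 4-bit block format with one 8-bit scale per 32 values works out to that. Real checkpoints mix precisions, and the KV cache and activations need extra headroom.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return total_params * bits_per_param / 8 / 1e9


# (model, total parameters, GPU memory budget in GB) from the figures above.
MODELS = [
    ("gpt-oss-safeguard-120b", 117e9, 80.0),
    ("gpt-oss-safeguard-20b", 21e9, 16.0),
]

# 16 bits = unquantized bf16; 4.25 bits = assumed ~4-bit quantized weights.
for name, params, budget in MODELS:
    for bits in (16, 4.25):
        gb = weight_memory_gb(params, bits)
        print(f"{name} @ {bits} bits/param: ~{gb:.1f} GB of weights, "
              f"under {budget:.0f} GB: {gb < budget}")
```

Under these assumptions, only the quantized weights fit the stated budgets: roughly 62 GB on an 80 GB card and roughly 11 GB on a 16 GB card, with the remainder left for the KV cache.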

Evaluation Results

OpenAI evaluated the models on internal multi-policy tests and on public datasets. On multi-policy accuracy, where the model must correctly apply several policies at once, gpt-oss-safeguard and OpenAI’s internal Safety Reasoner outperform gpt-5-thinking and the open gpt-oss baselines. On the 2022 moderation dataset, the new models slightly outperform both gpt-5-thinking and the internal Safety Reasoner. However, OpenAI states that this gap is not statistically significant, so it should not be oversold. On ToxicChat, the internal Safety Reasoner still leads, with gpt-oss-safeguard close behind. This places the open models in the competitive range for real moderation tasks.
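Two parts of the evaluation are easy to misread, so here is a hedged sketch of each. `multi_policy_accuracy` assumes an example counts as correct only when the verdict for every policy matches the label. That is one plausible reading of "apply several policies at once", not OpenAI's published definition. `paired_bootstrap_ci` is a common way to check whether an accuracy gap between two models scored on the same examples is statistically significant. OpenAI does not say which test it used.

```python
import random
from typing import Dict, List, Sequence, Tuple


def multi_policy_accuracy(
    preds: Sequence[Dict[str, bool]], gold: Sequence[Dict[str, bool]]
) -> float:
    """Fraction of examples where every per-policy verdict matches the label."""
    correct = [p == g for p, g in zip(preds, gold)]
    return sum(correct) / len(correct)


def paired_bootstrap_ci(
    correct_a: Sequence[int],
    correct_b: Sequence[int],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap CI for accuracy(A) - accuracy(B) on shared examples.

    correct_a[i] and correct_b[i] are 1 if model A / model B got example i right.
    If the interval contains 0, the gap is not significant at level alpha.
    """
    rng = random.Random(seed)
    n = len(correct_a)
    diffs: List[float] = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample examples, keeping pairs
        diffs.append(sum(correct_a[i] - correct_b[i] for i in idx) / n)
    diffs.sort()
    lo = diffs[int(alpha / 2 * n_resamples)]
    hi = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


# The second example gets one of its two policies wrong, so it counts as a miss.
gold = [{"fraud": True, "self_harm": False}, {"fraud": False, "self_harm": False}]
preds = [{"fraud": True, "self_harm": False}, {"fraud": False, "self_harm": True}]
print(multi_policy_accuracy(preds, gold))

# Model A beats model B on exactly one of 100 shared examples (80% vs 79%).
model_a = [1] * 80 + [0] * 20
model_b = [1] * 79 + [0] * 21
print(paired_bootstrap_ci(model_a, model_b))
```

A one-point lead on 100 examples produces an interval that includes 0. This is the sense in which a small margin should not be oversold.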

Recommended Deployment Pattern

OpenAI is explicit that running full reasoning on every request is expensive. The recommended setup is to run small, fast, high-recall classifiers on all traffic, send only uncertain or sensitive content to gpt-oss-safeguard, and run the reasoner asynchronously when the user experience requires fast responses. This mirrors OpenAI’s own production guidance. It also reflects the fact that dedicated task-specific classifiers can still win when a large, high-quality labeled dataset is available.
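The asynchronous variant can be sketched with `asyncio`. The request gets an answer right away, and when the fast filter is unsure, a background task asks the reasoner and acts on its verdict later. `fast_score`, `reasoner` and `on_verdict` are hypothetical placeholders for a cheap classifier, an async call to gpt-oss-safeguard, and whatever follow-up action a platform takes, such as hiding a post or queueing it for human review. The statuses and threshold are illustrative.

```python
import asyncio
from typing import Awaitable, Callable, List, Set, Tuple


class AsyncModerator:
    """Respond immediately; run the slow reasoner in the background when needed."""

    def __init__(
        self,
        fast_score: Callable[[str], float],
        reasoner: Callable[[str], Awaitable[bool]],
        on_verdict: Callable[[str, bool], None],
        threshold: float = 0.2,
    ) -> None:
        self.fast_score = fast_score
        self.reasoner = reasoner
        self.on_verdict = on_verdict
        self.threshold = threshold
        self._pending: Set[asyncio.Task] = set()

    async def _review(self, content: str) -> None:
        verdict = await self.reasoner(content)
        self.on_verdict(content, verdict)

    def submit(self, content: str) -> str:
        """Return a user-facing status without waiting for the reasoner.

        Must be called from inside a running event loop.
        """
        if self.fast_score(content) < self.threshold:
            return "published"
        task = asyncio.create_task(self._review(content))
        self._pending.add(task)  # hold a reference so the task is not garbage-collected
        task.add_done_callback(self._pending.discard)
        return "published, pending review"

    async def drain(self) -> None:
        """Wait for all background reviews, e.g. at shutdown or in tests."""
        if self._pending:
            await asyncio.gather(*self._pending)


async def demo() -> Tuple[List[str], List[str]]:
    flagged: List[str] = []

    async def slow_reasoner(text: str) -> bool:
        await asyncio.sleep(0.01)  # stands in for model latency
        return "scam" in text

    def record(text: str, violates: bool) -> None:
        if violates:
            flagged.append(text)

    mod = AsyncModerator(lambda t: 0.8 if "$" in t else 0.0, slow_reasoner, record)
    posts = ["hi all", "win $500, scam link inside", "I paid $5 for lunch"]
    statuses = [mod.submit(p) for p in posts]
    await mod.drain()
    return statuses, flagged


statuses, flagged = asyncio.run(demo())
print(statuses, flagged)
```

Publishing first and reviewing afterwards accepts a short window of exposure in exchange for lower latency. A platform could instead hold escalated items until the verdict arrives.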

Key Takeaways

- gpt-oss-safeguard is a research preview of two open-weight safety reasoning models, 120b and 20b, that classify content using developer-supplied policies at inference time, so policy changes do not require retraining.

- The models implement the same Safety Reasoner pattern that OpenAI uses internally across GPT-5, ChatGPT Agent and Sora 2, where a fast first-pass filter routes only risky or ambiguous content to a slower reasoning model.

- Both models are fine-tuned from gpt-oss, keep the harmony response format, and are sized for real deployments: the 120b model fits on a single 80GB H100-class GPU, the 20b model targets 16GB-class hardware, and both are released under Apache 2.0 on Hugging Face.

- On internal multi-policy evaluations and on the 2022 moderation dataset, the safeguard models outperform gpt-5-thinking and the gpt-oss baselines, but OpenAI notes that the small margin over the internal Safety Reasoner is not statistically significant.

- OpenAI recommends using these models in a layered moderation pipeline, together with community resources such as ROOST, so platforms can express custom taxonomies, audit the chain of thought, and update policies without touching weights.
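To make "express custom taxonomies, audit the chain of thought, and update policies without touching weights" concrete, here is a minimal sketch of a versioned policy object and an audit log entry. The `Policy` fields, the rendered wording and the log schema are all hypothetical choices. The model only ever sees the rendered text, so changing the taxonomy means publishing a new policy version rather than retraining.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Policy:
    """A platform-defined taxonomy that is rendered into the prompt."""

    name: str
    version: str
    labels: Dict[str, str]  # label -> definition

    def render(self) -> str:
        lines = [f"Policy: {self.name} (version {self.version})", "Labels:"]
        lines += [f"- {label}: {definition}" for label, definition in self.labels.items()]
        lines.append("Reply with exactly one label from the list.")
        return "\n".join(lines)

    def fingerprint(self) -> str:
        """Short hash of the exact text the model saw, for reproducible audits."""
        return hashlib.sha256(self.render().encode("utf-8")).hexdigest()[:12]


def audit_record(policy: Policy, content: str, reasoning: str, label: str) -> str:
    """JSON log line tying a verdict to the policy text and the model's reasoning."""
    return json.dumps(
        {
            "policy": policy.name,
            "version": policy.version,
            "policy_sha": policy.fingerprint(),
            "content": content,
            "reasoning": reasoning,
            "label": label,
        },
        sort_keys=True,
    )


v1 = Policy("game-chat", "1", {"OK": "normal play talk", "CHEATS": "selling or sharing cheats"})
# Version 2 adds a label; no model weights change, only the prompt text.
v2 = Policy("game-chat", "2", {**v1.labels, "RMT": "real-money trading of in-game items"})

print(v2.render())
print(audit_record(v2, "selling 10k gold for $20", "Offers in-game currency for cash.", "RMT"))
```

Storing the policy hash next to the reasoning lets a reviewer later see exactly which policy text produced a given verdict.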

The most important part of this release is that OpenAI is taking an internal safety pattern and making it reproducible. The models are open-weight, policy-conditioned and Apache 2.0, so platforms can finally apply their own taxonomies instead of accepting fixed labels. gpt-oss-safeguard outperforms gpt-5-thinking on multi-policy accuracy, comes close to the internal Safety Reasoner, and slightly exceeds it on the 2022 moderation dataset, although that last margin is not statistically significant. Together, these results show that the approach is already usable, and the recommended layered deployment is realistic for production.