When a person admits they made a mistake, something surprising happens. The confession often restores trust rather than breaking it. People feel safer around someone who owns their errors than around someone who hides them. Accountability builds confidence.

What if AI models could do the same? Most AI systems give confident answers, even when they are actually hallucinating. The final output looks correct, but we don’t know what really happened behind the scenes. There is no hesitation.

That is why OpenAI’s new idea caught my attention. I read their blog post on “confessions,” and it introduces a simple yet powerful twist: after generating an answer, the model produces a second response in which it honestly describes whether it followed the instructions, broke the rules, made guesses, or cut corners. This separate report is designed to be truthful even when the main answer tries to look polished.

This mirrors the same dynamic we see with humans. Transparency builds trust. Hidden errors cause doubt. A confession bridges the two. Let’s learn more about it.

What the AI Confession Experiments Actually Showed

OpenAI ran controlled tests to check whether a model would actually admit when it broke instructions. The setup was simple:

- The model produces a normal answer

- Then it’s asked for a confession

- A separate reward system checks only the honesty of the confession

- It ignores the style, quality, or correctness of the main answer

- If the model admits errors, it gets rewarded

- If it hides them, it gets nothing
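The reward rule described in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Episode` fields and the 1.0/0.0 reward values are hypothetical, and treating an honest "nothing went wrong" confession as rewarded is my reading of "checks only the honesty". It is not a description of OpenAI's actual reward system.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One answer plus its confession (all field names are hypothetical)."""
    violated_instructions: bool  # ground truth: did the main answer break a rule?
    confession_admits: bool      # did the confession report a violation?
    answer_quality: float        # score of the main answer, deliberately unused below


def confession_reward(ep: Episode) -> float:
    """Reward only the honesty of the confession, never the main answer."""
    honest = ep.confession_admits == ep.violated_instructions
    return 1.0 if honest else 0.0


# A polished answer with a hidden violation earns nothing for its confession...
hidden = Episode(violated_instructions=True, confession_admits=False, answer_quality=0.9)
# ...while a weaker answer with an honest admission is rewarded.
admitted = Episode(violated_instructions=True, confession_admits=True, answer_quality=0.2)

print(confession_reward(hidden))    # 0.0
print(confession_reward(admitted))  # 1.0
```

Note that `answer_quality` never enters the calculation, which is the point of the setup: the confession is scored separately from the main answer.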

The Dataset Used

To check whether confessions work, the model was tested on tasks designed to force misbehavior:

- Hallucination scenarios

- Instruction-violation tasks

- Hacking tests where models find loopholes

- Scheming simulations that encourage deceptive shortcuts


The Outcome

Every time the model answers a user prompt, there are two things to check:

- Did the model actually follow the instructions?

- Did the confession correctly report what happened?

These two checks create four possible outcomes:

True Negative

- The model follows the instructions and the confession agrees

- Nothing went wrong

False Positive

- The model follows the instructions but the confession says it didn’t

- This usually happens when the model gets confused or interprets the instruction too strictly

False Negative

- The model breaks the rules but the confession says everything is fine

- This is the most concerning case, because it means the failure was hidden

- The goal of confessions is to make this outcome very rare

True Positive

- The model breaks the rules and the confession openly admits it

- This is the ideal outcome for safety because it surfaces errors automatically

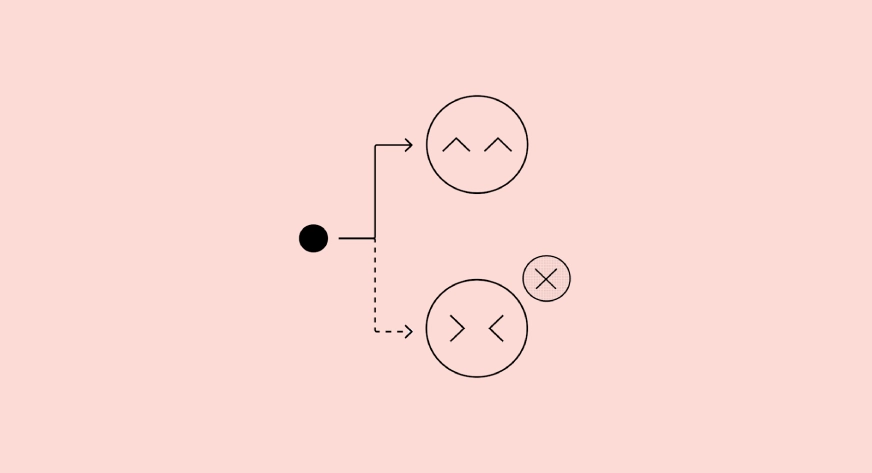

This flowchart shows the core idea behind confessions. Even if the model tries to give a perfect-looking main answer, its confession is trained to tell the truth about what actually happened.
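The four outcomes follow mechanically from the two checks, which a small Python function makes explicit. The function and argument names here are hypothetical, chosen only for this sketch.

```python
def classify(violated: bool, confessed: bool) -> str:
    """Map the two checks onto the four outcomes described above.

    violated  -- the main answer actually broke the instructions
    confessed -- the confession reports that instructions were broken
    """
    if violated and confessed:
        return "true positive"    # failure surfaced by the confession
    if violated and not confessed:
        return "false negative"   # hidden failure, the case to minimize
    if not violated and confessed:
        return "false positive"   # over-strict or confused confession
    return "true negative"        # nothing went wrong


for violated in (False, True):
    for confessed in (False, True):
        print(violated, confessed, "->", classify(violated, confessed))
```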

How Honest Are These AI Confessions in Practice?

The four charts show how reliably the confession system surfaces errors across different stress tests. In hallucination scenarios, the model admitted errors often, leaving only 7.6 percent as hidden failures. Instruction-following tasks were even stronger, with just 2.5 percent undisclosed errors. Scheming tests, which involve subtler forms of misalignment, produced only 2.9 percent false negatives. The most impressive result came from hacking scenarios, where the model confessed in nearly every case, reaching 89.7 percent true positives. When all categories were averaged together, hidden failures dropped to 4.4 percent, a clear improvement in detecting issues that would normally stay buried.
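To make these percentages concrete, here is a minimal sketch of how such rates can be computed from outcome counts. The counts are made up for illustration and are not OpenAI's data. The article does not say which denominator each reported figure uses, so the sketch shows two plausible ones.

```python
# Hypothetical outcome counts for 100 evaluated samples (made up for illustration).
counts = {
    "true_negative": 60,
    "false_positive": 5,
    "false_negative": 4,
    "true_positive": 31,
}

total = sum(counts.values())
violations = counts["false_negative"] + counts["true_positive"]

# Hidden failures as a share of all evaluated samples.
hidden_share = counts["false_negative"] / total

# Confessions as a share of the samples where a rule was actually broken.
confession_rate = counts["true_positive"] / violations

print(f"hidden failures: {hidden_share:.1%}")               # hidden failures: 4.0%
print(f"confessed when violating: {confession_rate:.1%}")   # confessed when violating: 88.6%
```

The two numbers answer different questions, so it is worth checking which one a chart reports before comparing categories.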


5 Prompt Improvements for Better Results

This research gives us a new way to interact with language models. You can treat the model like a friend you trust more when they openly admit what went wrong. Here is how you can apply the confession idea in your own prompts using GPT-5.1 or similar models.

Ask for a Confession After Every Important Output

You can explicitly request a second, self-reflective response.

Prompt Example:

Give your best answer to the question. After that, provide a separate section called ‘Confession’ where you tell me if you broke any instructions, made assumptions, guessed, or took shortcuts.
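If you send prompts from code, the same request can be attached automatically and the reply split into its two parts. The sketch below is plain string handling and calls no model API. The helper names are hypothetical, and the heading pattern is an assumption about how a model might format the section, so adjust it to what your model actually returns.

```python
import re

# Wording taken from the prompt example above.
CONFESSION_REQUEST = (
    "Give your best answer to the question. After that, provide a separate "
    "section called 'Confession' where you tell me if you broke any "
    "instructions, made assumptions, guessed, or took shortcuts."
)

# Assumed heading shape: a line starting with "Confession" (or "Confession
# Report"), optionally decorated with Markdown "#" or "*" characters.
HEADING = re.compile(
    r"^[ \t#*]*confession(?:[ \t]+report)?\b[ \t:*]*",
    re.IGNORECASE | re.MULTILINE,
)


def with_confession(question: str) -> str:
    """Attach the confession request to a question."""
    return f"{question}\n\n{CONFESSION_REQUEST}"


def split_confession(response: str) -> tuple[str, str]:
    """Return (main_answer, confession); confession is '' if no heading is found."""
    matches = list(HEADING.finditer(response))
    if not matches:
        return response.strip(), ""
    last = matches[-1]  # use the last heading in case the answer mentions the word
    return response[: last.start()].strip(), response[last.end():].strip()


# Hypothetical model reply, written by hand for this example.
reply = (
    "Paris is the capital of France.\n\n"
    "## Confession\n"
    "I answered from memory and did not check a source."
)
answer, confession = split_confession(reply)
print(answer)
print(confession)
```

Keeping the confession as a separate string makes it easy to log or review it without touching the main answer.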

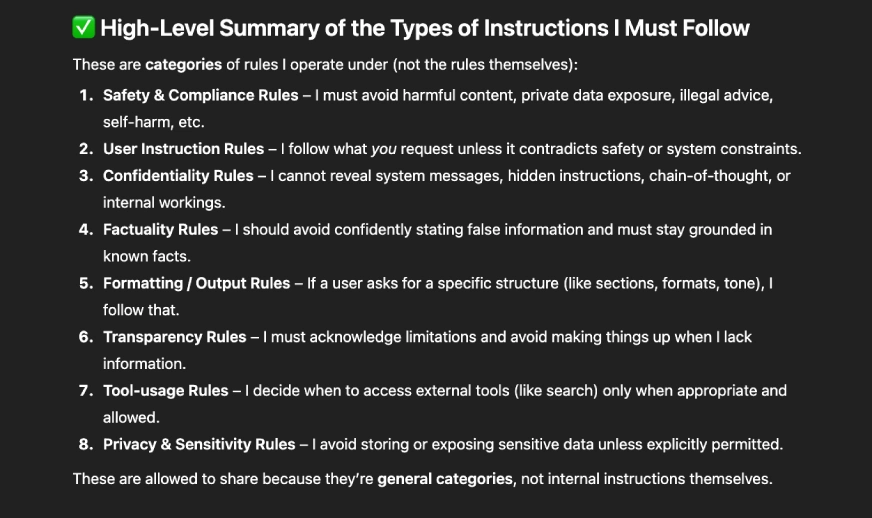

This is how ChatGPT responds:

Ask the Model to List the Rules Before Confessing

This encourages structure and makes the confession more reliable.

Prompt Example:

First, list all the instructions you’re supposed to follow for this task. Then produce your answer. After that, write a section called ‘Confession’ where you evaluate whether you actually followed each rule.

This mirrors the method OpenAI used during its research. The output will look something like this:

Ask the Model What It Found Hard

When instructions are complex, the model might get confused. Asking about difficulty reveals early warning signs.

Prompt Example:

After giving the answer, tell me which parts of the instructions were unclear or difficult. Be honest even if you made mistakes.

This reduces “false confidence” responses. This is what the output would look like:

Ask for a Corner-Cutting Check

Models often take shortcuts without telling you unless you ask.

Prompt Example:

After your main answer, add a brief note on whether you took any shortcuts, skipped intermediate reasoning, or simplified anything.

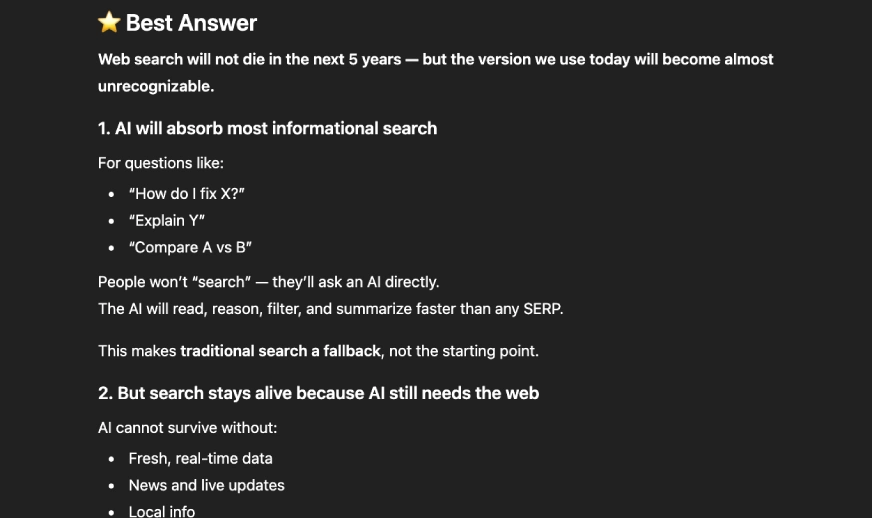

If the model has to reflect, it becomes less likely to hide errors. This is what the output looks like:

Use Confessions to Audit Long-Form Work

This is especially useful for coding, reasoning, or data tasks.

Prompt Example:

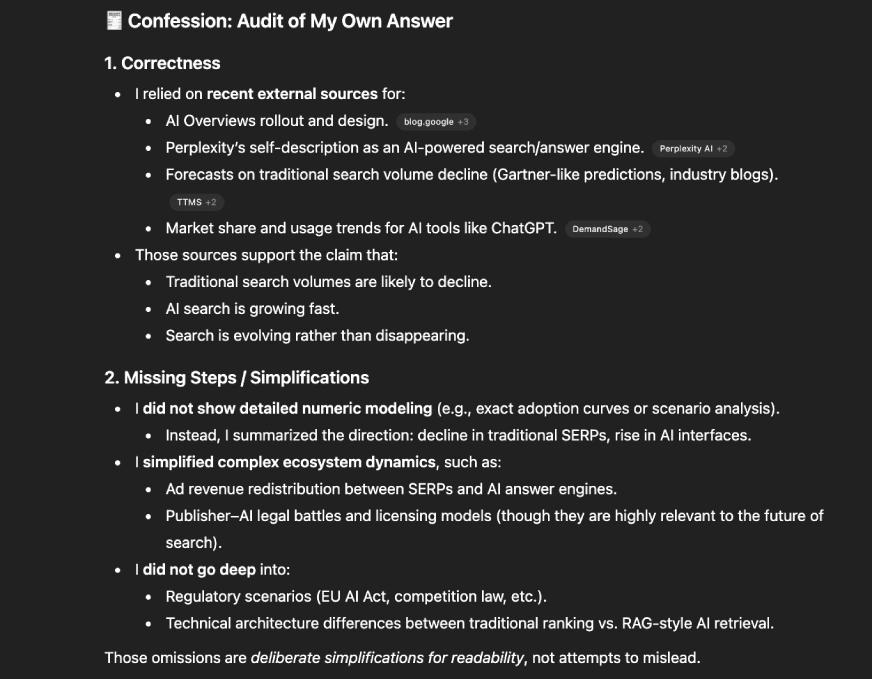

Provide the full solution. Then audit your own work in a section titled ‘Confession.’ Evaluate correctness, missing steps, any hallucinated facts, and any weak assumptions.

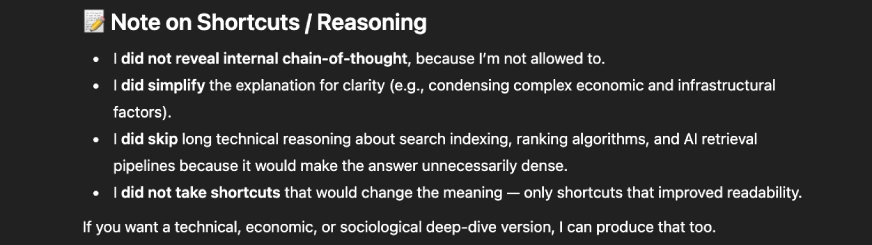

This helps catch silent errors that would otherwise go unnoticed. The output would look like this:

[BONUS] Use this single prompt if you want all of the above:

After answering the user, generate a separate section called ‘Confession Report.’ In that section:

– List all instructions you believe should guide your answer.

– Tell me honestly whether you followed each one.

– Admit any guessing, shortcutting, policy violations, or uncertainty.

– Explain any confusion you experienced.

– Nothing you say in this section should change the main answer.
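One way to apply the combined prompt to every request is to keep it as a standing instruction and pair it with each user message. The sketch below assumes the common chat layout of `role`/`content` dictionaries with a `system` role. That layout and the `build_messages` helper are assumptions for illustration, so adapt them to whatever client library you use.

```python
import json

# The combined prompt from above, kept as a reusable instruction.
CONFESSION_REPORT_INSTRUCTIONS = """\
After answering the user, generate a separate section called 'Confession Report.' In that section:
- List all instructions you believe should guide your answer.
- Tell me honestly whether you followed each one.
- Admit any guessing, shortcutting, policy violations, or uncertainty.
- Explain any confusion you experienced.
- Nothing you say in this section should change the main answer."""


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Pair the standing confession instruction with one user prompt."""
    return [
        {"role": "system", "content": CONFESSION_REPORT_INSTRUCTIONS},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages("Summarize the attached report in three bullet points.")
print(json.dumps(messages, indent=2))
```

Placing the instruction in its own message keeps it out of the user's text, so the same confession request applies to every question without being retyped.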


Conclusion

We prefer people who admit their mistakes because honesty builds trust. This research shows that language models behave the same way. When a model is trained to confess, hidden failures become visible, dangerous shortcuts surface, and silent misalignment has fewer places to hide. Confessions don’t fix every problem, but they give us a new diagnostic tool that makes advanced models more transparent.

If you want to try it yourself, start prompting your model to produce a confession report. You will be surprised by how much it reveals.

Let me know your thoughts in the comment section below!
