Over the past few weeks, I reached out to CIOs I know personally to ask what they believe are the most compelling and useful aspects of enterprise AI that IT leaders need to know about. The response was fast and unexpected: Instead of simply replying to my email, several CIOs proposed gathering over Zoom to explore the topic in depth.

Joining me for this discussion were CIOs from city government, retail, healthcare, and a foundation. They were candid about their needs, challenges, and where they see this fast-moving set of technologies heading.

Data Readiness

The city CIO didn't mince words about her AI imperative: “I need it all. This is moving faster than normal, and I’m needing to catch up as fast as we can.” Her team has rolled out Copilot and seen strong demand, but she stressed the need for grounding: clear answers to basic yet critical questions.

- What is AI’s potential, really?
- What fundamentals should I and my leadership team know?
- Just as importantly, what is the current state of our data, and where exactly does this data reside?

She was clear that some serious data wrangling was needed. Without this foundation, she argued, adoption risks outpacing understanding.

Building on the need for data wrangling, I shared some research on data maturity from Dresner Advisory Services. Nobody was surprised that early adopters of AI share common traits: 100% reported past success in business intelligence, 75% were early adopters of machine learning, and 62.5% had a designated data leader.

AI Literacy at All Levels

Our retail CIO then took the discussion in a different direction, emphasizing that the enterprise-wide challenge every CIO needs to solve is AI literacy. Training employees at every level is not optional. For global organizations, this includes training on regulations such as the EU’s AI Act, which for him acts as the standard for AI compliance. Nor can U.S.-only organizations afford to lag behind: Legislation, whether from Washington or state capitals, will inevitably catch up. CIOs, therefore, must prepare their enterprises and build the skills and governance required for trustworthy, scalable AI adoption.

Taking Up the Mantle of AI Change Management

Without question, CIOs are uniquely positioned to lead the AI era: not just to define the right use cases, but also to drive the organizational change required for successful adoption. As with every major enterprise technology shift, IT sits at the center of change management, ensuring that tools don't just get deployed but are embedded into the way people work. The mandate is clear: CIOs must be the ones to seek and assess opportunities to transform how their organizations operate, compete, and deliver value.

Our foundation CIO captured the pressure and opportunity perfectly: “My CEO wants us to be world-renowned for our use of AI.” To meet this vision, she lost no time identifying AI ambassadors on every team and running proofs of concept with a strong emphasis on structured change management.

Our group agreed that CIOs who are pulling ahead with AI are not the timid type: they are scrappy, comfortable with ambiguity, and willing to take calculated risks. Their leadership is proving that success with AI isn't about waiting for clarity; it's about creating it.

As Vendors Race Ahead, Governance, Security Paramount

Without question, AI is not waiting for CIOs to catch up or build up their hard or soft skills. Major vendors such as Workday, Salesforce, and Snowflake are embedding AI directly into their platforms and accelerating adoption, even when IT leaders try to hit the brakes. This reality makes governance, policy, and security not just priorities but imperatives.

CIOs must ensure that AI is deployed responsibly in their environments. They need solid policies for data security and loss prevention, and clear enterprise-wide standards should guide usage. Without this, enthusiasm for AI will quickly be undercut by risk.

Our retail CIO put the risk bluntly: If organizations don't move fast enough on AI, shadow IT will fill the gap. This urgency is compounded by tough choices about which AI capabilities should remain in-house and which should be entrusted to third-party vendors. He also flagged that while agentic AI can accelerate DevOps and even DevSecOps, it raises a hard question: “How do you secure the code?”

The AI-fueled security challenges are already here; hackers have been early adopters. AI is making phishing harder to detect by rewriting malicious emails with flawless grammar and cloaking URLs to bypass human suspicion. The only viable response is two-pronged: IT must leverage AI to strengthen defenses while also stepping up business-wide education on the risks. CIOs who can balance speed with control and innovation with security will be the ones who keep AI from becoming a double-edged sword.

Strategic AI Opportunities and Real Use Cases

It was clear that our CIOs aren’t just experimenting with AI; they’re thinking strategically, with a clear endgame and roadmap for how AI can create lasting value. They see the AI opportunity not as a set of tools to bolt on, but as a foundation for transforming how their organizations operate, serve customers, and build resilience.

- Our city CIO described her ambition to use AI to create a full digital twin of the city. Her vision is bold: simulate disaster response, test city design for resilience, and ultimately deliver better citizen services through AI-powered agents.
- Our healthcare CIO was equally bold about the future. AI’s ability to predict patient health declines and combine diverse data sets for richer insights holds transformative potential, so much so that this CIO described the predictions around AI’s impact as nothing short of “wonderful.”
- Our retail CIO stressed that agentic AI is the real winner for all of us.

CEOs Diverge on AI

At the executive level, our CIOs said CEOs tend to fall into one of two camps: Some are eager to jump in, seeing AI as a chance to leapfrog the competition and reinvent their business. Others are more cautious, focusing instead on AI efficiency gains and cost savings before making bigger bets. The CIO’s role is to bridge these perspectives, grounding AI strategy in realistic use cases while keeping the long-term, big-picture potential in sight. For this reason alone, I believe this could be a golden era for CIOs.

AI’s Impact on IT Workforce Will Be Profound

The CIOs on the call were clear-eyed about the workforce impact of AI, including the impact on the IT labor force. As one noted, within just a few years, our organizations will be managing not only human employees but also a growing non-human workforce. Agentic AI is already cutting task times in half, reshaping how work gets done, and putting pressure on labor models. Some roles are shrinking, while others are being fundamentally redefined.

The job market is feeling this shift. Even computer science graduates from top universities are struggling to land interviews, sending out hundreds of resumes with little response. The roles most at risk (call centers, IT support, coding, legal assistants, and paralegals) are precisely those built on repetitive or rules-driven tasks that AI can now handle at scale. As the foundation CIO put it, “We’re not going to need 85% of people coding.” Low-code and no-code tools have already reduced demand for pure coding; AI is set to accelerate this trend further.

Still, IT remains a bastion of opportunity, particularly for those who can work with agents, design governance frameworks, and connect AI capabilities to business value. All the CIOs on the call said they believe the workforce of the future will not be defined by eliminating humans but by redefining human roles to partner with intelligent systems.

Risks, Rewards and Innovation

The CIOs are quickly realizing that AI is a platform for creating transformational business models. But as one of the CIOs observed, “Risk leads to both failure and success.” Playing it safe may avoid short-term missteps, but it will also limit the ability to capture breakthroughs and long-term competitiveness.

The foundation CIO’s organization has embraced this philosophy, openly committing to taking on risk in order to innovate. For her, AI isn't just about automating processes or squeezing efficiencies from existing systems. It's about building the capacity to do things that couldn't be done before, whether that means delivering world-class customer experiences, reimagining core business processes, or creating entirely new value propositions.

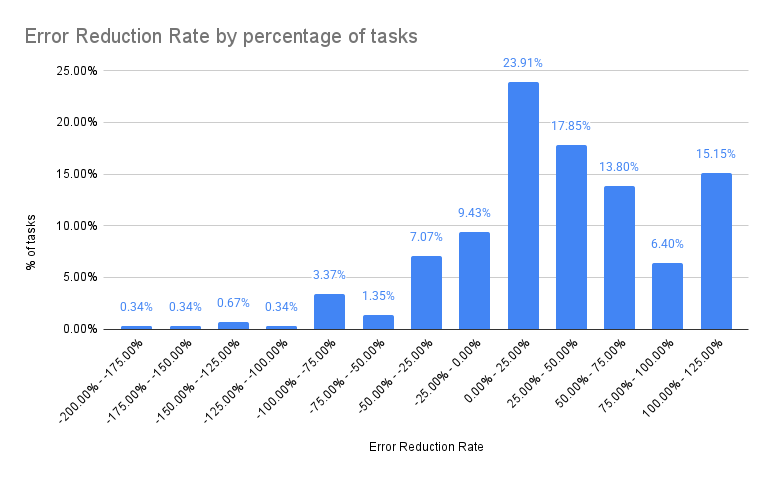

In Dresner’s research, when asked to rate the importance of AI’s potential benefits, respondents most often considered improving customer experience and personalization critical, followed closely by improved decision-making and gains in productivity and efficiency. Interestingly, respondents least often viewed market and business expansion as critical, suggesting that while agentic AI holds transformational promise, most organizations will initially use it to enhance existing operations rather than drive new growth.

This is where CIO leadership is pivotal. CIOs, along with their CEOs, must guide their organizations in shifting the conversation from headcount reduction to true transformation. They are uniquely positioned to ensure that AI initiatives aren’t just about speeding up old processes but about designing new ways of working, serving, and competing.

Parting Words and the Articles to Follow

The conversation with the above CIOs made one thing clear: AI, and particularly agentic AI, is not a passing trend but a fundamental shift that demands CIO leadership. The challenges are immense, from governance and security to workforce disruption and executive alignment. Yet the opportunities are just as profound: transforming industries, reimagining customer experiences, and reshaping the very definition of work. Success depends upon CIOs’ ability to balance risk with vision, build trust through governance, and lead change with urgency and courage.

In the coming months, I will be sounding out CIOs about how to navigate this new era of digital innovation. Here is a guide to the articles to come.

- Mastering the AI Fundamentals: Becoming an AI-Savvy CIO
- Unlocking Strategic Value: Identifying the AI Use Cases
- CIO Leadership in AI Transformation
- AI Governance, Risk, and Security
- The Future of Work and Talent in the AI Era
- Balancing Speed with Responsibility
- The CIO’s Evolving Mandate