For us deep studying practitioners, the world is – not flat, however – linear, largely. Or piecewise linear. Like different

linear approximations, or possibly much more so, deep studying will be extremely profitable at making predictions. However let’s

admit it – generally we simply miss the joys of the nonlinear, of excellent, outdated, deterministic-yet-unpredictable chaos. Can we

have each? It appears like we are able to. On this submit, we’ll see an software of deep studying (DL) to nonlinear time collection

prediction – or reasonably, the important step that predates it: reconstructing the attractor underlying its dynamics. Whereas this

submit is an introduction, presenting the subject from scratch, additional posts will construct on this and extrapolate to observational

datasets.

What to anticipate from this submit

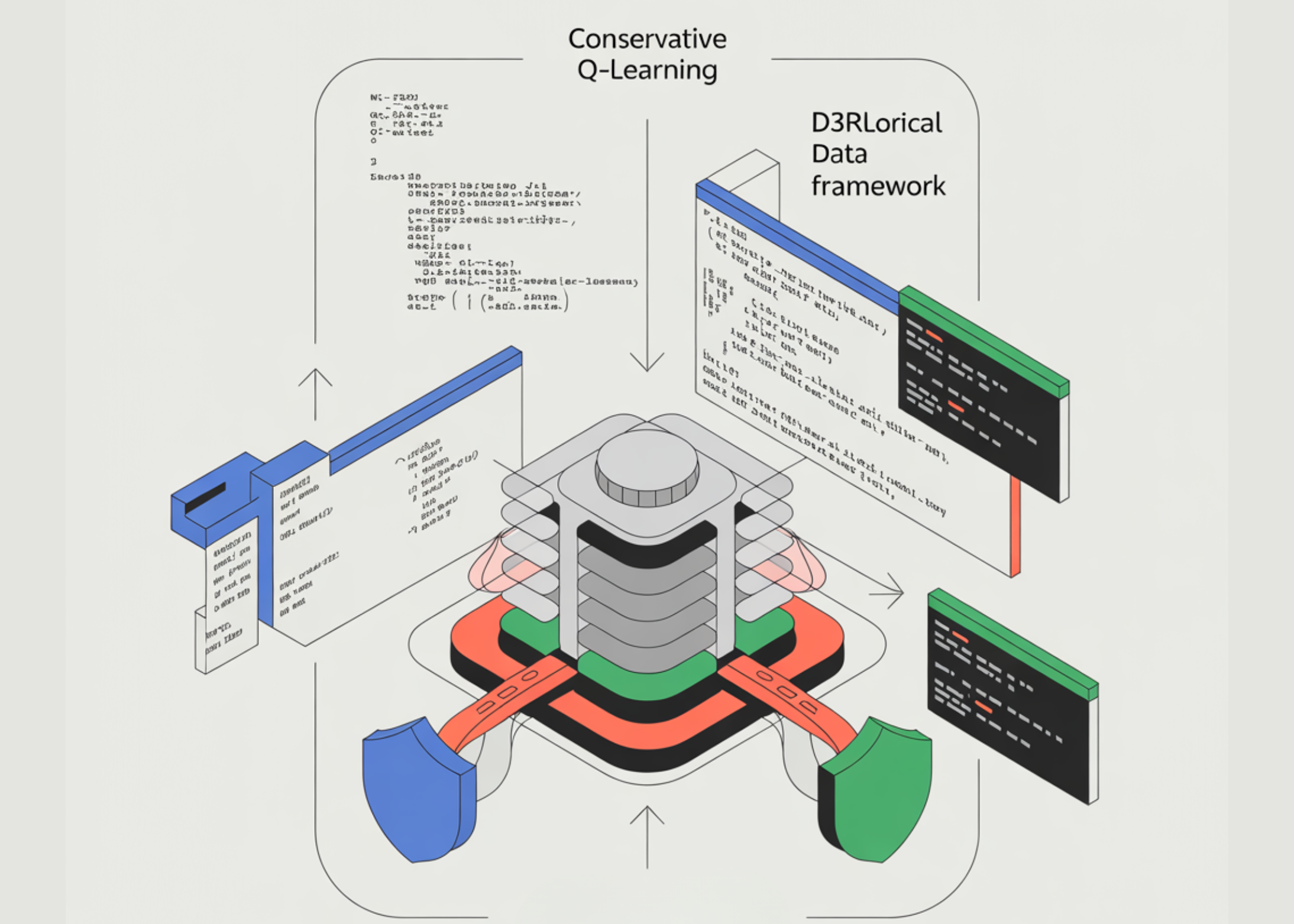

In his 2020 paper Deep reconstruction of unusual attractors from time collection (Gilpin 2020), William Gilpin makes use of an

autoencoder structure, mixed with a regularizer implementing the false nearest neighbors statistic

(Kennel, Brown, and Abarbanel 1992), to reconstruct attractors from univariate observations of multivariate, nonlinear dynamical programs. If

you’re feeling you utterly perceive the sentence you simply learn, chances are you’ll as properly immediately leap to the paper – come again for the

code although. If, alternatively, you’re extra aware of the chaos in your desk (extrapolating … apologies) than

chaos principle chaos, learn on. Right here, we’ll first go into what it’s all about, after which, present an instance software,

that includes Edward Lorenz’s well-known butterfly attractor. Whereas this preliminary submit is primarily purported to be a enjoyable introduction

to a captivating matter, we hope to comply with up with functions to real-world datasets sooner or later.

Rabbits, butterflies, and low-dimensional projections: Our drawback assertion in context

In curious misalignment with how we use “chaos” in day-to-day language, chaos, the technical idea, could be very totally different from

stochasticity, or randomness. Chaos might emerge from purely deterministic processes – very simplistic ones, even. Let’s see

how; with rabbits.

Rabbits, or: Delicate dependence on preliminary circumstances

You might be aware of the logistic equation, used as a toy mannequin for inhabitants progress. Typically it’s written like this –

with (x) being the dimensions of the inhabitants, expressed as a fraction of the maximal dimension (a fraction of potential rabbits, thus),

and (r) being the expansion charge (the speed at which rabbits reproduce):

[

x_{n + 1} = r x_n (1 – x_n)

]

This equation describes an iterated map over discrete timesteps (n). Its repeated software ends in a trajectory

describing how the inhabitants of rabbits evolves. Maps can have fastened factors, states the place additional operate software goes

on producing the identical outcome endlessly. Instance-wise, say the expansion charge quantities to (2.1), and we begin at two (fairly

totally different!) preliminary values, (0.3) and (0.8). Each trajectories arrive at a set level – the identical fastened level – in fewer

than 10 iterations. Had been we requested to foretell the inhabitants dimension after 100 iterations, we might make a really assured

guess, regardless of the of beginning worth. (If the preliminary worth is (0), we keep at (0), however we will be fairly sure of that as

properly.)

What if the expansion charge had been considerably increased, at (3.3), say? Once more, we instantly examine trajectories ensuing from preliminary

values (0.3) and (0.9):

This time, don’t see a single fastened level, however a two-cycle: Because the trajectories stabilize, inhabitants dimension inevitably is at

one among two potential values – both too many rabbits or too few, you could possibly say. The 2 trajectories are phase-shifted, however

once more, the attracting values – the attractor – is shared by each preliminary circumstances. So nonetheless, predictability is fairly

excessive. However we haven’t seen all the things but.

Let’s once more improve the expansion charge some. Now this (actually) is chaos:

Even after 100 iterations, there is no such thing as a set of values the trajectories recur to. We will’t be assured about any

prediction we’d make.

Or can we? In spite of everything, we have now the governing equation, which is deterministic. So we must always be capable to calculate the dimensions of

the inhabitants at, say, time (150)? In precept, sure; however this presupposes we have now an correct measurement for the beginning

state.

How correct? Let’s examine trajectories for preliminary values (0.3) and (0.301):

At first, trajectories appear to leap round in unison; however throughout the second dozen iterations already, they dissociate extra and

extra, and more and more, all bets are off. What if preliminary values are actually shut, as in, (0.3) vs. (0.30000001)?

It simply takes a bit longer for the disassociation to floor.

What we’re seeing right here is delicate dependence on preliminary circumstances, a vital precondition for a system to be chaotic.

In an nutshell: Chaos arises when a deterministic system exhibits delicate dependence on preliminary circumstances. Or as Edward

Lorenz is claimed to have put it,

When the current determines the long run, however the approximate current doesn’t roughly decide the long run.

Now if these unstructured, random-looking level clouds represent chaos, what with the all-but-amorphous butterfly (to be

displayed very quickly)?

Butterflies, or: Attractors and unusual attractors

Truly, within the context of chaos principle, the time period butterfly could also be encountered in several contexts.

Firstly, as so-called “butterfly impact,” it’s an instantiation of the templatic phrase “the flap of a butterfly’s wing in

_________ profoundly impacts the course of the climate in _________.” On this utilization, it’s largely a

metaphor for delicate dependence on preliminary circumstances.

Secondly, the existence of this metaphor led to a Rorschach-test-like identification with two-dimensional visualizations of

attractors of the Lorenz system. The Lorenz system is a set of three first-order differential equations designed to explain

atmospheric convection:

[

begin{aligned}

& frac{dx}{dt} = sigma (y – x)

& frac{dy}{dt} = rho x – x z – y

& frac{dz}{dt} = x y – beta z

end{aligned}

]

This set of equations is nonlinear, as required for chaotic conduct to seem. It additionally has the required dimensionality, which

for easy, steady programs, is a minimum of 3. Whether or not we truly see chaotic attractors – amongst which, the butterfly –

depends upon the settings of the parameters (sigma), (rho) and (beta). For the values conventionally chosen, (sigma=10),

(rho=28), and (beta=8/3) , we see it when projecting the trajectory on the (x) and (z) axes:

The butterfly is an attractor (as are the opposite two projections), however it’s neither a degree nor a cycle. It’s an attractor

within the sense that ranging from quite a lot of totally different preliminary values, we find yourself in some sub-region of the state house, and we

don’t get to flee no extra. That is simpler to see when watching evolution over time, as on this animation:

Now, to plot the attractor in two dimensions, we threw away the third. However in “actual life,” we don’t often have too a lot

data (though it might generally appear to be we had). We’d have numerous measurements, however these don’t often mirror

the precise state variables we’re serious about. In these instances, we might wish to truly add data.

Embeddings (as a non-DL time period), or: Undoing the projection

Assume that as an alternative of all three variables of the Lorenz system, we had measured only one: (x), the speed of convection. Typically

in nonlinear dynamics, the strategy of delay coordinate embedding (Sauer, Yorke, and Casdagli 1991) is used to reinforce a collection of univariate

measurements.

On this technique – or household of strategies – the univariate collection is augmented by time-shifted copies of itself. There are two

choices to be made: What number of copies so as to add, and the way large the delay ought to be. For example, if we had a scalar collection,

1 2 3 4 5 6 7 8 9 10 11 ...

a three-dimensional embedding with time delay 2 would seem like this:

1 3 5

2 4 6

3 5 7

4 6 8

5 7 9

6 8 10

7 9 11

...

Of the 2 choices to be made – variety of shifted collection and time lag – the primary is a choice on the dimensionality of

the reconstruction house. Varied theorems, reminiscent of Taken’s theorem,

point out bounds on the variety of dimensions required, supplied the dimensionality of the true state house is thought – which,

in real-world functions, usually will not be the case.The second has been of little curiosity to mathematicians, however is necessary

in observe. In reality, Kantz and Schreiber (Kantz and Schreiber 2004) argue that in observe, it’s the product of each parameters that issues,

because it signifies the time span represented by an embedding vector.

How are these parameters chosen? Concerning reconstruction dimensionality, the reasoning goes that even in chaotic programs,

factors which might be shut in state house at time (t) ought to nonetheless be shut at time (t + Delta t), supplied (Delta t) could be very

small. So say we have now two factors which might be shut, by some metric, when represented in two-dimensional house. However in three

dimensions, that’s, if we don’t “venture away” the third dimension, they’re much more distant. As illustrated in

(Gilpin 2020):

If this occurs, then projecting down has eradicated some important data. In second, the factors had been false neighbors. The

false nearest neighbors (FNN) statistic can be utilized to find out an ample embedding dimension, like this:

For every level, take its closest neighbor in (m) dimensions, and compute the ratio of their distances in (m) and (m+1)

dimensions. If the ratio is bigger than some threshold (t), the neighbor was false. Sum the variety of false neighbors over all

factors. Do that for various (m) and (t), and examine the ensuing curves.

At this level, let’s look forward on the autoencoder method. The autoencoder will use that very same FNN statistic as a

regularizer, along with the standard autoencoder reconstruction loss. This may lead to a brand new heuristic concerning embedding

dimensionality that includes fewer choices.

Going again to the basic technique for an instantaneous, the second parameter, the time lag, is much more troublesome to type out

(Kantz and Schreiber 2004). Often, mutual data is plotted for various delays after which, the primary delay the place it falls beneath some

threshold is chosen. We don’t additional elaborate on this query as it’s rendered out of date within the neural community method.

Which we’ll see now.

Studying the Lorenz attractor

Our code intently follows the structure, parameter settings, and information setup used within the reference

implementation William supplied. The loss operate, particularly, has been ported

one-to-one.

The overall thought is the next. An autoencoder – for instance, an LSTM autoencoder as offered right here – is used to compress

the univariate time collection right into a latent illustration of some dimensionality, which is able to represent an higher certain on the

dimensionality of the realized attractor. Along with imply squared error between enter and reconstructions, there shall be a

second loss time period, making use of the FNN regularizer. This ends in the latent items being roughly ordered by significance, as

measured by their variance. It’s anticipated that someplace within the itemizing of variances, a pointy drop will seem. The items

earlier than the drop are then assumed to encode the attractor of the system in query.

On this setup, there’s nonetheless a option to be made: methods to weight the FNN loss. One would run coaching for various weights

(lambda) and search for the drop. Certainly, this might in precept be automated, however given the novelty of the tactic – the

paper was printed this yr – it is smart to give attention to thorough evaluation first.

Knowledge era

We use the deSolve package deal to generate information from the Lorenz equations.

library(deSolve)

library(tidyverse)

parameters <- c(sigma = 10,

rho = 28,

beta = 8/3)

initial_state <-

c(x = -8.60632853,

y = -14.85273055,

z = 15.53352487)

lorenz <- operate(t, state, parameters) {

with(as.checklist(c(state, parameters)), {

dx <- sigma * (y - x)

dy <- x * (rho - z) - y

dz <- x * y - beta * z

checklist(c(dx, dy, dz))

})

}

instances <- seq(0, 500, size.out = 125000)

lorenz_ts <-

ode(

y = initial_state,

instances = instances,

func = lorenz,

parms = parameters,

technique = "lsoda"

) %>% as_tibble()

lorenz_ts[1:10,]

# A tibble: 10 x 4

time x y z

1 0 -8.61 -14.9 15.5

2 0.00400 -8.86 -15.2 15.9

3 0.00800 -9.12 -15.6 16.3

4 0.0120 -9.38 -16.0 16.7

5 0.0160 -9.64 -16.3 17.1

6 0.0200 -9.91 -16.7 17.6

7 0.0240 -10.2 -17.0 18.1

8 0.0280 -10.5 -17.3 18.6

9 0.0320 -10.7 -17.7 19.1

10 0.0360 -11.0 -18.0 19.7

We’ve already seen the attractor, or reasonably, its three two-dimensional projections, in determine 6 above. However now our situation is

totally different. We solely have entry to (x), a univariate time collection. Because the time interval used to numerically combine the

differential equations was reasonably tiny, we simply use each tenth statement.

obs <- lorenz_ts %>%

choose(time, x) %>%

filter(row_number() %% 10 == 0)

ggplot(obs, aes(time, x)) +

geom_line() +

coord_cartesian(xlim = c(0, 100)) +

theme_classic()

Preprocessing

The primary half of the collection is used for coaching. The info is scaled and reworked into the three-dimensional kind anticipated

by recurrent layers.

library(keras)

library(tfdatasets)

library(tfautograph)

library(reticulate)

library(purrr)

# scale observations

obs <- obs %>% mutate(

x = scale(x)

)

# generate timesteps

n <- nrow(obs)

n_timesteps <- 10

gen_timesteps <- operate(x, n_timesteps) {

do.name(rbind,

purrr::map(seq_along(x),

operate(i) {

begin <- i

finish <- i + n_timesteps - 1

out <- x[start:end]

out

})

) %>%

na.omit()

}

# practice with begin of time collection, take a look at with finish of time collection

x_train <- gen_timesteps(as.matrix(obs$x)[1:(n/2)], n_timesteps)

x_test <- gen_timesteps(as.matrix(obs$x)[(n/2):n], n_timesteps)

# add required dimension for options (we have now one)

dim(x_train) <- c(dim(x_train), 1)

dim(x_test) <- c(dim(x_test), 1)

# some batch dimension (worth not essential)

batch_size <- 100

# remodel to datasets so we are able to use customized coaching

ds_train <- tensor_slices_dataset(x_train) %>%

dataset_batch(batch_size)

ds_test <- tensor_slices_dataset(x_test) %>%

dataset_batch(nrow(x_test))

Autoencoder

With newer variations of TensorFlow (>= 2.0, actually if >= 2.2), autoencoder-like fashions are finest coded as customized fashions,

and skilled in an “autographed” loop.

The encoder is centered round a single LSTM layer, whose dimension determines the utmost dimensionality of the attractor. The

decoder then undoes the compression – once more, primarily utilizing a single LSTM.

# dimension of the latent code

n_latent <- 10L

n_features <- 1

encoder_model <- operate(n_timesteps,

n_features,

n_latent,

title = NULL) {

keras_model_custom(title = title, operate(self) {

self$noise <- layer_gaussian_noise(stddev = 0.5)

self$lstm <- layer_lstm(

items = n_latent,

input_shape = c(n_timesteps, n_features),

return_sequences = FALSE

)

self$batchnorm <- layer_batch_normalization()

operate (x, masks = NULL) {

x %>%

self$noise() %>%

self$lstm() %>%

self$batchnorm()

}

})

}

decoder_model <- operate(n_timesteps,

n_features,

n_latent,

title = NULL) {

keras_model_custom(title = title, operate(self) {

self$repeat_vector <- layer_repeat_vector(n = n_timesteps)

self$noise <- layer_gaussian_noise(stddev = 0.5)

self$lstm <- layer_lstm(

items = n_latent,

return_sequences = TRUE,

go_backwards = TRUE

)

self$batchnorm <- layer_batch_normalization()

self$elu <- layer_activation_elu()

self$time_distributed <- time_distributed(layer = layer_dense(items = n_features))

operate (x, masks = NULL) {

x %>%

self$repeat_vector() %>%

self$noise() %>%

self$lstm() %>%

self$batchnorm() %>%

self$elu() %>%

self$time_distributed()

}

})

}

encoder <- encoder_model(n_timesteps, n_features, n_latent)

decoder <- decoder_model(n_timesteps, n_features, n_latent)

Loss

As already defined above, the loss operate we practice with is twofold. On the one hand, we examine the unique inputs with

the decoder outputs (the reconstruction), utilizing imply squared error:

mse_loss <- tf$keras$losses$MeanSquaredError(

discount = tf$keras$losses$Discount$SUM)

As well as, we attempt to hold the variety of false neighbors small, by way of the next regularizer.

loss_false_nn <- operate(x) {

# authentic values utilized in Kennel et al. (1992)

rtol <- 10

atol <- 2

k_frac <- 0.01

ok <- max(1, ground(k_frac * batch_size))

tri_mask <-

tf$linalg$band_part(

tf$ones(

form = c(n_latent, n_latent),

dtype = tf$float32

),

num_lower = -1L,

num_upper = 0L

)

batch_masked <- tf$multiply(

tri_mask[, tf$newaxis,], x[tf$newaxis, reticulate::py_ellipsis()]

)

x_squared <- tf$reduce_sum(

batch_masked * batch_masked,

axis = 2L,

keepdims = TRUE

)

pdist_vector <- x_squared +

tf$transpose(

x_squared, perm = c(0L, 2L, 1L)

) -

2 * tf$matmul(

batch_masked,

tf$transpose(batch_masked, perm = c(0L, 2L, 1L))

)

all_dists <- pdist_vector

all_ra <-

tf$sqrt((1 / (

batch_size * tf$vary(1, 1 + n_latent, dtype = tf$float32)

)) *

tf$reduce_sum(tf$sq.(

batch_masked - tf$reduce_mean(batch_masked, axis = 1L, keepdims = TRUE)

), axis = c(1L, 2L)))

all_dists <- tf$clip_by_value(all_dists, 1e-14, tf$reduce_max(all_dists))

top_k <- tf$math$top_k(-all_dists, tf$forged(ok + 1, tf$int32))

top_indices <- top_k[[1]]

neighbor_dists_d <- tf$collect(all_dists, top_indices, batch_dims = -1L)

neighbor_new_dists <- tf$collect(

all_dists[2:-1, , ],

top_indices[1:-2, , ],

batch_dims = -1L

)

# Eq. 4 of Kennel et al. (1992)

scaled_dist <- tf$sqrt((

tf$sq.(neighbor_new_dists) -

tf$sq.(neighbor_dists_d[1:-2, , ])) /

tf$sq.(neighbor_dists_d[1:-2, , ])

)

# Kennel situation #1

is_false_change <- (scaled_dist > rtol)

# Kennel situation #2

is_large_jump <-

(neighbor_new_dists > atol * all_ra[1:-2, tf$newaxis, tf$newaxis])

is_false_neighbor <-

tf$math$logical_or(is_false_change, is_large_jump)

total_false_neighbors <-

tf$forged(is_false_neighbor, tf$int32)[reticulate::py_ellipsis(), 2:(k + 2)]

reg_weights <- 1 -

tf$reduce_mean(tf$forged(total_false_neighbors, tf$float32), axis = c(1L, 2L))

reg_weights <- tf$pad(reg_weights, checklist(checklist(1L, 0L)))

activations_batch_averaged <-

tf$sqrt(tf$reduce_mean(tf$sq.(x), axis = 0L))

loss <- tf$reduce_sum(tf$multiply(reg_weights, activations_batch_averaged))

loss

}

MSE and FNN are added , with FNN loss weighted in accordance with the important hyperparameter of this mannequin:

This worth was experimentally chosen because the one finest conforming to our look-for-the-highest-drop heuristic.

Mannequin coaching

The coaching loop intently follows the aforementioned recipe on methods to

practice with customized fashions and tfautograph.

train_loss <- tf$keras$metrics$Imply(title='train_loss')

train_fnn <- tf$keras$metrics$Imply(title='train_fnn')

train_mse <- tf$keras$metrics$Imply(title='train_mse')

train_step <- operate(batch) {

with (tf$GradientTape(persistent = TRUE) %as% tape, {

code <- encoder(batch)

reconstructed <- decoder(code)

l_mse <- mse_loss(batch, reconstructed)

l_fnn <- loss_false_nn(code)

loss <- l_mse + fnn_weight * l_fnn

})

encoder_gradients <- tape$gradient(loss, encoder$trainable_variables)

decoder_gradients <- tape$gradient(loss, decoder$trainable_variables)

optimizer$apply_gradients(

purrr::transpose(checklist(encoder_gradients, encoder$trainable_variables))

)

optimizer$apply_gradients(

purrr::transpose(checklist(decoder_gradients, decoder$trainable_variables))

)

train_loss(loss)

train_mse(l_mse)

train_fnn(l_fnn)

}

training_loop <- tf_function(autograph(operate(ds_train) {

for (batch in ds_train) {

train_step(batch)

}

tf$print("Loss: ", train_loss$outcome())

tf$print("MSE: ", train_mse$outcome())

tf$print("FNN loss: ", train_fnn$outcome())

train_loss$reset_states()

train_mse$reset_states()

train_fnn$reset_states()

}))

optimizer <- optimizer_adam(lr = 1e-3)

for (epoch in 1:200) {

cat("Epoch: ", epoch, " -----------n")

training_loop(ds_train)

}

After 2 hundred epochs, general loss is at 2.67, with the MSE element at 1.8 and FNN at 0.09.

Acquiring the attractor from the take a look at set

We use the take a look at set to examine the latent code:

# A tibble: 6,242 x 10

V1 V2 V3 V4 V5 V6 V7 V8 V9 V10

1 0.439 0.401 -0.000614 -0.0258 -0.00176 -0.0000276 0.000276 0.00677 -0.0239 0.00906

2 0.415 0.504 0.0000481 -0.0279 -0.00435 -0.0000970 0.000921 0.00509 -0.0214 0.00921

3 0.389 0.619 0.000848 -0.0240 -0.00661 -0.000171 0.00106 0.00454 -0.0150 0.00794

4 0.363 0.729 0.00137 -0.0143 -0.00652 -0.000244 0.000523 0.00450 -0.00594 0.00476

5 0.335 0.809 0.00128 -0.000450 -0.00338 -0.000307 -0.000561 0.00407 0.00394 -0.000127

6 0.304 0.828 0.000631 0.0126 0.000889 -0.000351 -0.00167 0.00250 0.0115 -0.00487

7 0.274 0.769 -0.000202 0.0195 0.00403 -0.000367 -0.00220 -0.000308 0.0145 -0.00726

8 0.246 0.657 -0.000865 0.0196 0.00558 -0.000359 -0.00208 -0.00376 0.0134 -0.00709

9 0.224 0.535 -0.00121 0.0162 0.00608 -0.000335 -0.00169 -0.00697 0.0106 -0.00576

10 0.211 0.434 -0.00129 0.0129 0.00606 -0.000306 -0.00134 -0.00927 0.00820 -0.00447

# … with 6,232 extra rows

Because of the FNN regularizer, the latent code items ought to be ordered roughly by lowering variance, with a pointy drop

showing some place (if the FNN weight has been chosen adequately).

For an fnn_weight of 10, we do see a drop after the primary two items:

predicted %>% summarise_all(var)

# A tibble: 1 x 10

V1 V2 V3 V4 V5 V6 V7 V8 V9 V10

1 0.0739 0.0582 1.12e-6 3.13e-4 1.43e-5 1.52e-8 1.35e-6 1.86e-4 1.67e-4 4.39e-5

So the mannequin signifies that the Lorenz attractor will be represented in two dimensions. If we nonetheless wish to plot the

full (reconstructed) state house of three dimensions, we must always reorder the remaining variables by magnitude of

variance. Right here, this ends in three projections of the set V1, V2 and V4:

Wrapping up (for this time)

At this level, we’ve seen methods to reconstruct the Lorenz attractor from information we didn’t practice on (the take a look at set), utilizing an

autoencoder regularized by a customized false nearest neighbors loss. It is very important stress that at no level was the community

offered with the anticipated answer (attractor) – coaching was purely unsupervised.

It is a fascinating outcome. In fact, considering virtually, the subsequent step is to acquire predictions on heldout information. Given

how lengthy this textual content has grow to be already, we reserve that for a follow-up submit. And once more in fact, we’re enthusiastic about different

datasets, particularly ones the place the true state house will not be recognized beforehand. What about measurement noise? What about

datasets that aren’t utterly deterministic? There’s a lot to discover, keep tuned – and as all the time, thanks for

studying!

Gilpin, William. 2020.

“Deep Reconstruction of Unusual Attractors from Time Sequence.” https://arxiv.org/abs/2002.05909.

Kantz, Holger, and Thomas Schreiber. 2004. Nonlinear Time Sequence Evaluation. Cambridge College Press.

Kennel, Matthew B., Reggie Brown, and Henry D. I. Abarbanel. 1992.

“Figuring out Embedding Dimension for Part-House Reconstruction Utilizing a Geometrical Development.” Phys. Rev. A 45 (March): 3403–11.

https://doi.org/10.1103/PhysRevA.45.3403.

Sauer, Tim, James A. Yorke, and Martin Casdagli. 1991.

“Embedology.” Journal of Statistical Physics 65 (3-4): 579–616.

https://doi.org/10.1007/BF01053745.

Strang, Gilbert. 2019. Linear Algebra and Studying from Knowledge. Wellesley Cambridge Press.

Strogatz, Steven. 2015. Nonlinear Dynamics and Chaos: With Functions to Physics, Biology, Chemistry, and Engineering. Westview Press.

Take pleasure in this weblog? Get notified of latest posts by electronic mail:

Posts additionally obtainable at r-bloggers