This is Part 2 of a multi-part series on using AI agents for economics research. Part 1 introduced the landscape. This entry develops the theory underlying a lot of the arguments I’ll make in subsequent posts, though it’s also a theory I’ve been posting about on here for a year or two. I just wanted it all in one place, plus I wanted to show you the cool slides I made. It’s also based on a talk I gave at the Boston Fed on Monday, and I wanted to lay out that talk in case anyone wanted to read it. Some of it gets a little repetitive, and some of you have seen me write about this or present on it, but like I said, I wanted to get it down on paper.

The Production of Cognitive Output

Let’s start with something familiar to economists: a production function.

Cognitive tasks (research, code, analysis, homework) are produced with two inputs:

- H = Human time
- M = Machine time

The production function is simply:

\(Q = F(H, M)\)

The question that matters for everything that follows is: What is the shape of the isoquants?

Pre-AI: The World of Quasi-Concave Production

For producing gadgets and widgets, the idea is that we work with production functions that take capital and labor, mix them together, and get gadgets and widgets. That’s uncontroversial when it comes to physical stuff. But what about cognitive output? What about homework, research, ideas, art? What best describes those production functions?

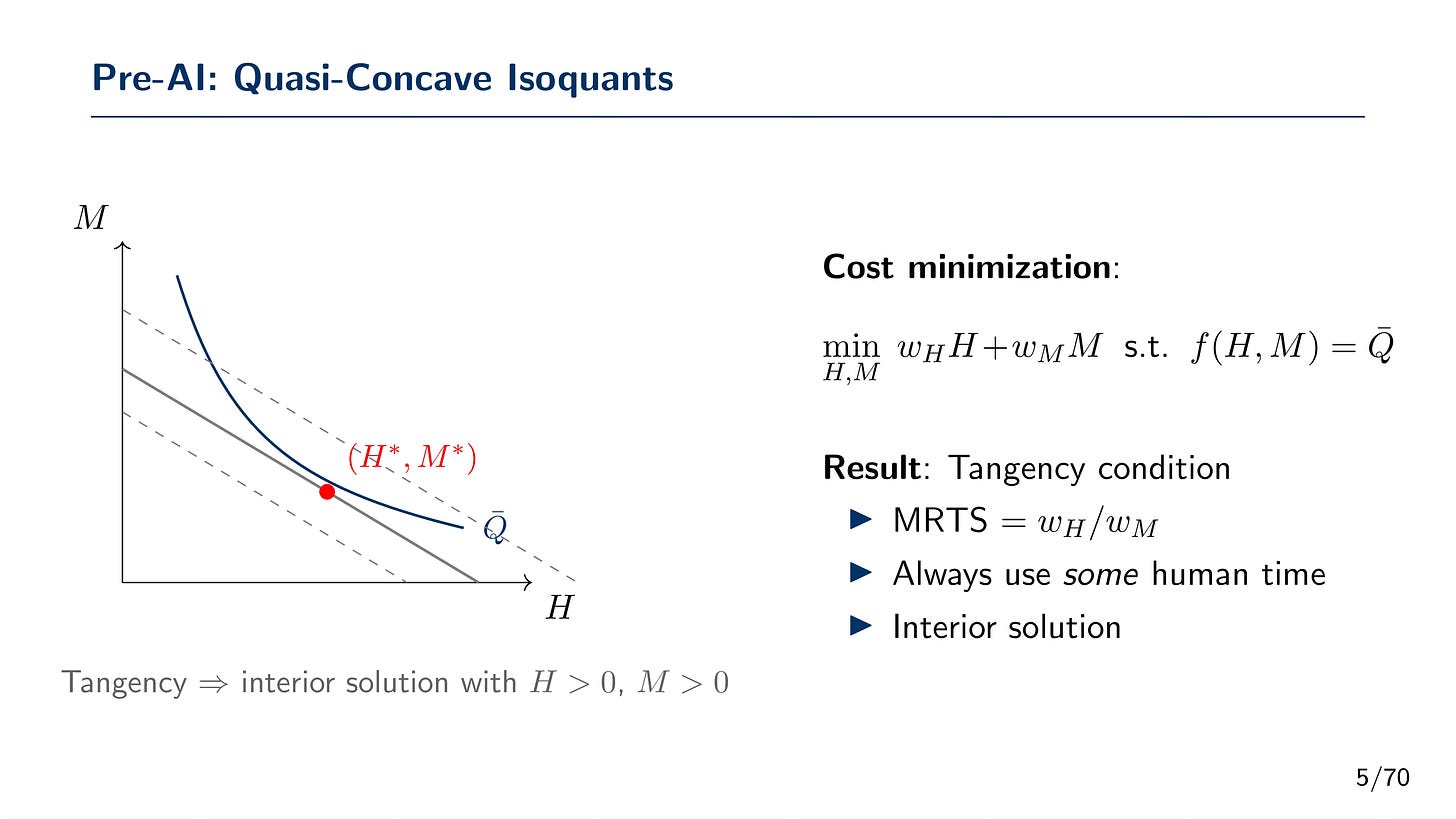

Before AI, the production of cognitive output had a standard property: quasi-concave production functions, with isoquants that are convex to the origin. Why? Well, this is a foundational assumption in microeconomics, and it held for good reason. We tend to think you can’t make anything without using at least some labor, so in core micro theory you usually motivate production by specifying production functions that satisfy that property, of which quasi-concave ones are an example.

So let’s specify now that one produces cognitive output not with factories and steam engines, but with human time inputs as well as machine time inputs (each usually thought to have an opportunity cost, and rented on the market as such). What does quasi-concavity mean here? It means that the isoquants curve toward the origin, but they also never touch the x-axis (human time inputs) or the y-axis (machine time inputs). To produce any cognitive output at all, to complete any homework assignment, to write any research paper, you needed some human time. Always. How much depends on the cognitive output, but quasi-concavity means that you will, no matter the relative prices of machine and human time, need at least some of both.

So look at the above picture of an isoquant cut from the belly of a quasi-concave production function. It’s curved like I said, and since it’s a fixed isoquant, we can think of it as some research output, like a song, a scientific paper, or homework. The cost of producing it is the weighted sum of human time (H) and machine time (M), where the weights are the prices/wages of renting that time at market prices. The solution for a profit-maximizing firm is to make that particular output, Q-bar, using the mix of machine and human time that minimizes cost subject to being on that isoquant, which means setting the marginal rate of technical substitution (MRTS) equal to the relative price of human to machine time. Because the isoquant is curved but the isocost is a straight line, we end up with an interior solution that uses at least some machine time and some human time.
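To make the tangency condition concrete, here is a minimal sketch of the cost-minimization problem. The Cobb-Douglas form in the third line is purely my illustrative assumption (the slides do not commit to a functional form), and A and α are hypothetical parameters.

```latex
\begin{aligned}
\min_{H,\,M}\quad & w_H H + w_M M \quad \text{s.t.}\quad F(H, M) = \bar{Q} \\
\text{tangency:}\quad & \mathrm{MRTS} \equiv \frac{\partial F/\partial H}{\partial F/\partial M} = \frac{w_H}{w_M} \\
\text{assumed example:}\quad & F(H, M) = A\,H^{\alpha} M^{1-\alpha},\qquad 0 < \alpha < 1 \\
& \frac{\alpha}{1-\alpha}\cdot\frac{M}{H} = \frac{w_H}{w_M}
\;\Longrightarrow\;
\frac{M^{*}}{H^{*}} = \frac{1-\alpha}{\alpha}\cdot\frac{w_H}{w_M}
\end{aligned}
```

Under this assumed form, the optimal ratio M*/H* is finite and positive for any positive prices, so H* > 0. Cheaper machine time tilts the mix toward machines but never drives human time to zero.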

Now what exactly is the machine time that was used to produce cognitive output before AI? Maybe it’s a guitar. Maybe it’s a calculator or a word processor. Maybe it’s statistical software that inverts matrices for you so that you aren’t spending the rest of your life inverting a large matrix by hand. The machine does a task you once did manually.

But the key is that human time was always strictly positive. You couldn’t produce cognitive output without spending time thinking, struggling, learning.

Post-AI: The World of Linear Isoquants

So, I don’t think it’s controversial in December 2025 to state what I think is obvious: generative AI has radically changed the production technologies for producing cognitive output. Just what it has done, how it has done it, and whether it has made things better are matters people debate, but it has undoubtedly changed them. We need only look at the papers finding lots of “ChatGPT words” showing up in published papers: people are using generative AI to do scientific work. So something has changed.

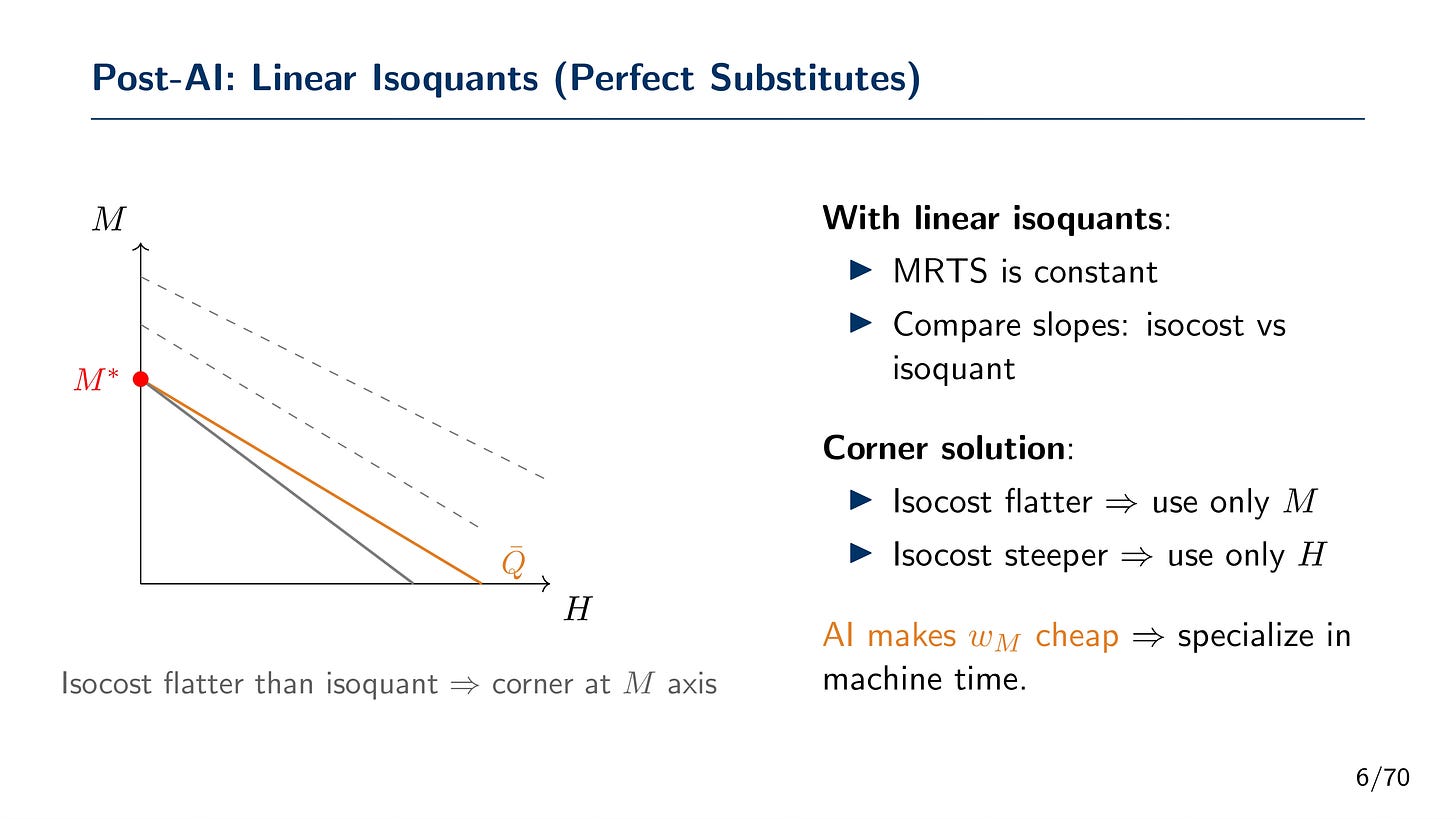

For the purpose of my theory, I’ll frame it in production terms. For many cognitive tasks, the isoquants no longer curve toward the origin.

They’re now linear isoquants. And that has huge consequences.

When production functions produce linear isoquants, it means machine time and human time are perfect substitutes.

And this changes everything about cost minimization.

With linear isoquants, the tangency condition no longer applies. Instead, you compare slopes. The isocost line has slope -w_H/w_M. The isoquant has slope -a/b, where a and b are the constant marginal products of human and machine time (that is, Q = aH + bM). If they’re not equal (and generically they won’t be), you get a corner solution, which means that the rational, profit-maximizing scientist/artist/creator will choose whichever of human or machine time is cheapest, not some of both. One or the other.

If the isocost is steeper than the isoquant (w_H/w_M > a/b): use only M.

If the isocost is flatter than the isoquant (w_H/w_M < a/b): use only H.
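Here is a minimal sketch of that corner-solution logic in Python. It reads the isoquant slope -a/b as coming from a linear technology Q = aH + bM, which is my assumption. Comparing slopes is then the same as comparing the cost per unit of output through each input: w_H/a versus w_M/b. The function name, the `CornerSolution` type, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CornerSolution:
    human_time: float
    machine_time: float
    cost: float


def minimize_cost_linear(q, a, b, w_h, w_m):
    """Cheapest way to produce q when Q = a*H + b*M (perfect substitutes).

    a and b are the assumed constant marginal products of human and machine
    time; w_h and w_m are the prices of one unit of each.
    """
    if min(q, a, b, w_h, w_m) <= 0:
        raise ValueError("q, a, b, w_h and w_m must all be positive")
    cost_via_human = w_h * q / a    # cost of producing q with H only
    cost_via_machine = w_m * q / b  # cost of producing q with M only
    # Knife-edge tie (slopes exactly equal): any mix costs the same, and this
    # sketch arbitrarily reports the human-only corner.
    if cost_via_human <= cost_via_machine:
        return CornerSolution(q / a, 0.0, cost_via_human)
    return CornerSolution(0.0, q / b, cost_via_machine)


# Illustrative numbers only: same technology, two prices for machine time.
pricey_machine = minimize_cost_linear(q=10, a=1.0, b=1.0, w_h=30.0, w_m=50.0)
cheap_machine = minimize_cost_linear(q=10, a=1.0, b=1.0, w_h=30.0, w_m=0.5)
print(pricey_machine)
print(cheap_machine)
```

With these made-up prices, the first call lands on the human-only corner and the second on the machine-only corner. Nothing about the technology changed between the two calls, only w_M.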

And here’s what has happened, in my view: AI has made w_M extraordinarily cheap. The cost of machine time for cognitive tasks has collapsed, particularly given that these are prices on the margin of time use, not the total or average cost. And since we pay for gen AI on a subscription basis, not a per-use basis, gen AI will always be cheaper at the margin than human time, which has at the very least a leisure-based shadow price. That is, unless we start taxing gen AI on the margin, but that’s for another post.

So the rational cost-minimizer chooses the corner: H = 0 (zero human time inputs), M > 0 (all machine time inputs). For the first time in human history, we can produce cognitive output with zero human time.

The Problem: Human Capital Requires Attention, Attention Requires Time

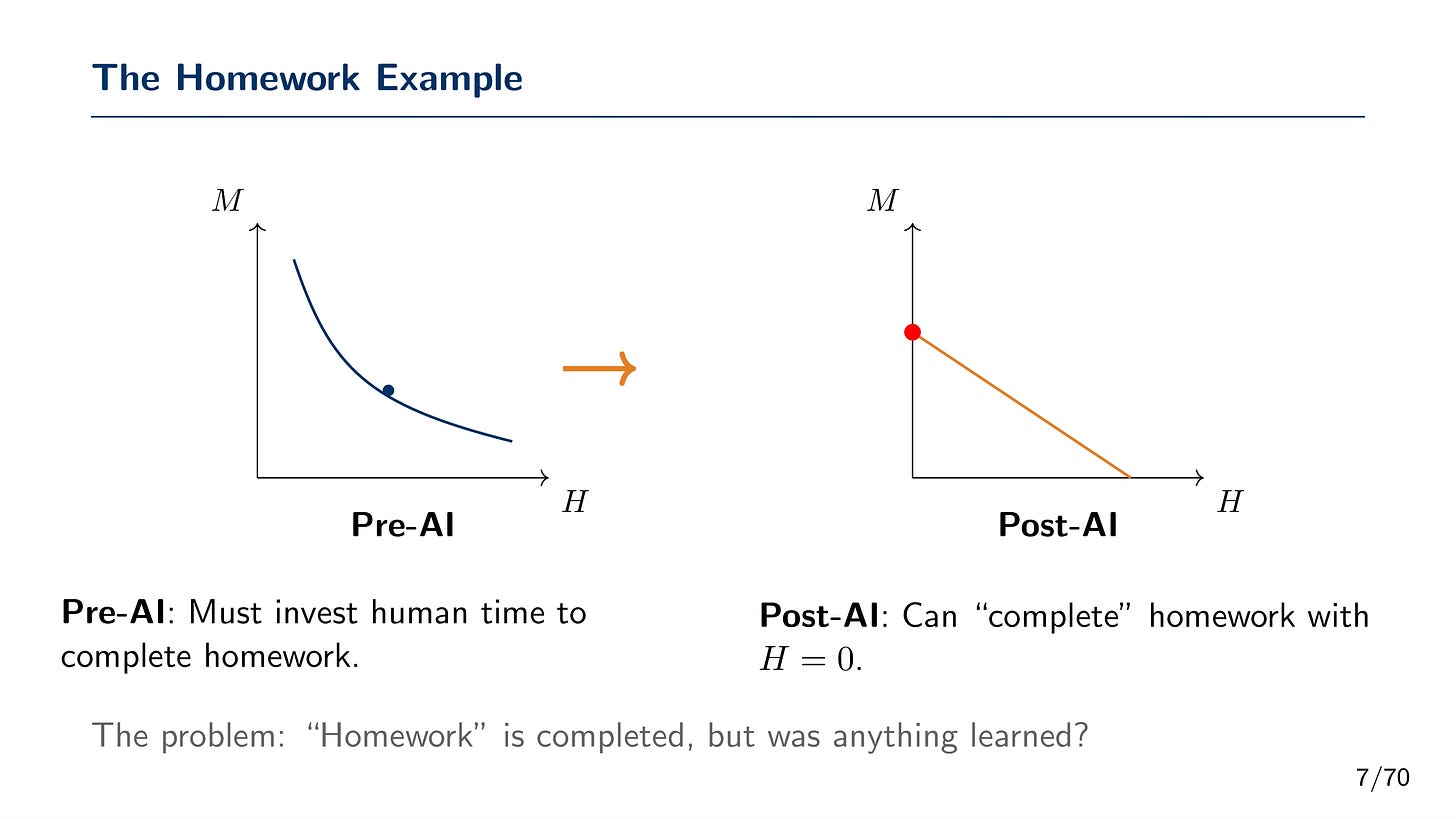

Here’s where the theory gets interesting, and troubling. I’ll use my favorite example here: homework. The student must produce homework, which is a particular kind of output produced by students and prescribed by teachers. The homework gets “completed.” The research report gets “written.” But was anything learned?

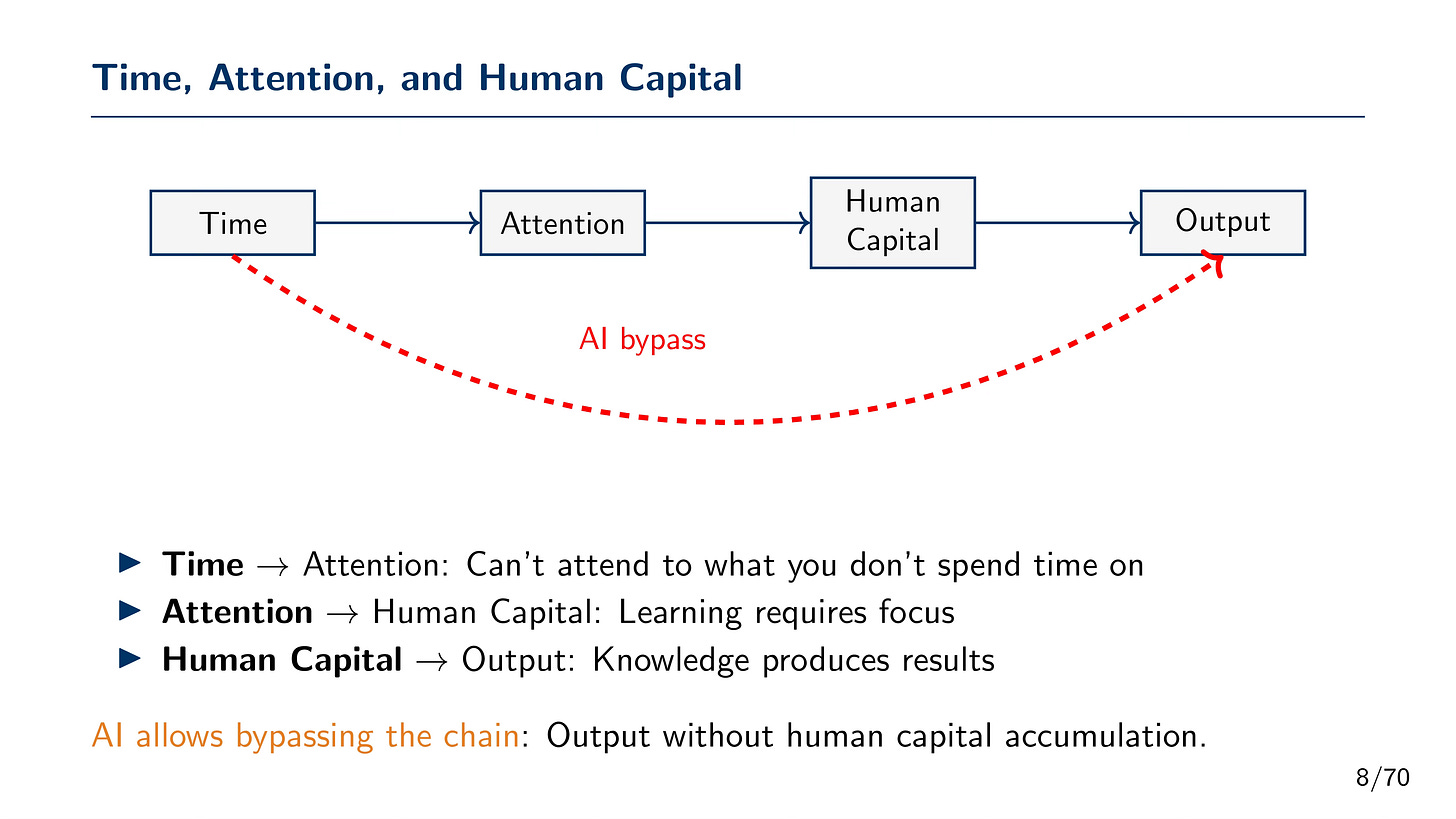

Human capital is not produced by magic. It’s produced through a specific chain shown on this slide.

Each link in this chain is essential to producing cognitive output. Note the direct effects between time, attention, knowledge, and finally, the output itself.

- Time → Attention: You cannot attend to what you don’t spend time on. Attention is time directed narrowly at intellectual puzzles.
- Attention → Human Capital: Learning requires focus. Struggle is pedagogically necessary. The difficulty is the point.
- Human Capital → Output: Knowledge produces results. Expertise enables judgment.

But AI creates a bypass. It offers a direct route:

\(\text{AI} \rightarrow \text{Output}\)

No human time. No attention. And no human capital accumulation. Just output. We get research output (e.g., songs, homework, scientific papers) without human capital accumulation.

This bypass is highly efficient for producing output. But it severs the connection between production and learning. We can now complete the homework without doing the homework. We can produce the research without understanding the research.

Two Pathways to Cognitive Output

Let me state this more formally. There are now two distinct production pathways:

Pathway 1 (Traditional):

\(\text{Human Time} \rightarrow \text{Attention} \rightarrow \text{Human Capital} \rightarrow \text{Output}\)

Pathway 2 (AI Bypass):

\(\text{AI} \rightarrow \text{Output}\)

Pathway 1 is slow, costly, and produces both output and human capital as joint products. Pathway 2 is fast, cheap, and produces output only. So we need to ask ourselves: do we care about output only? Do we care about human capital only? Do we care about both?

Reasonable people will no doubt have different opinions on that, since framing it in terms of preferences immediately invites the impossibility of reconciling those preferences. There’s likely no single answer. Some won’t like where we’re going, where machines produce our songs and scientific papers, and some will love it. But my point is more positive than normative for now, and it is simply this: a rational agent facing linear isoquants and relatively cheap machine time will always choose Pathway 2 because, on the margin, they should! That’s what cost minimization tells us.

But here’s the paradox: the choice that minimizes cost for any single task may maximize costs across a lifetime of tasks. Human capital depreciates. Skills atrophy. And if you’re just starting out (a student, an early-career researcher), you may never acquire the human capital in the first place.

So Should We Care? The Productivity Curve and the Danger Zone

Let me show you how this plays out dynamically. And in my framing, you’ll probably be able to tell that I’m somewhere in the middle normatively, between a purely Luddite approach of eschewing the use of AI for producing cognitive output entirely and letting it go completely unchecked.

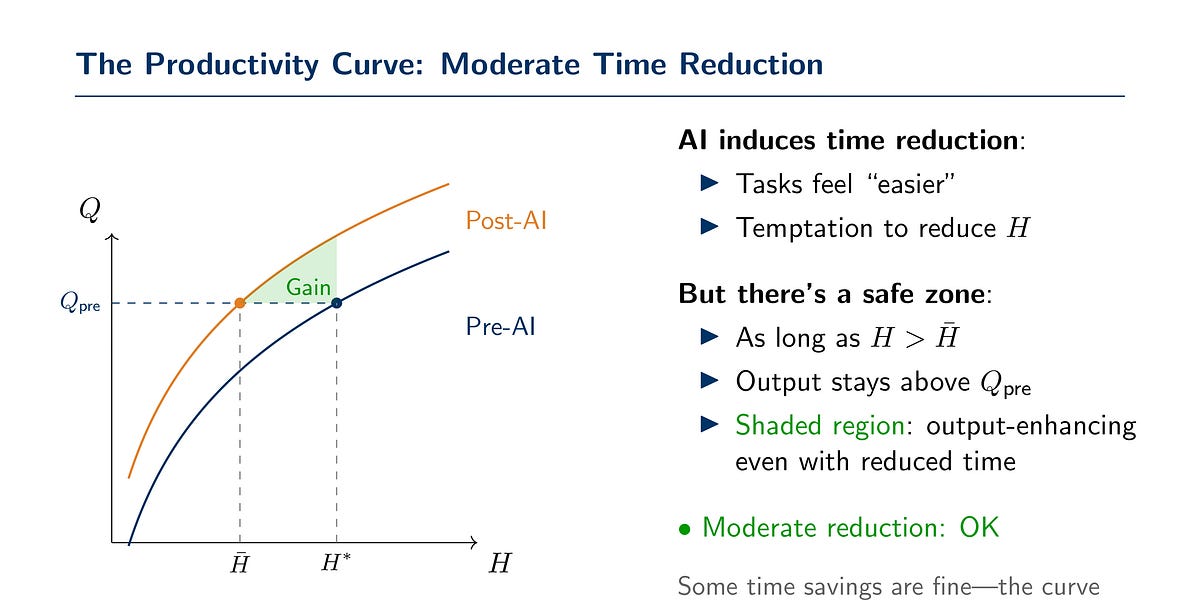

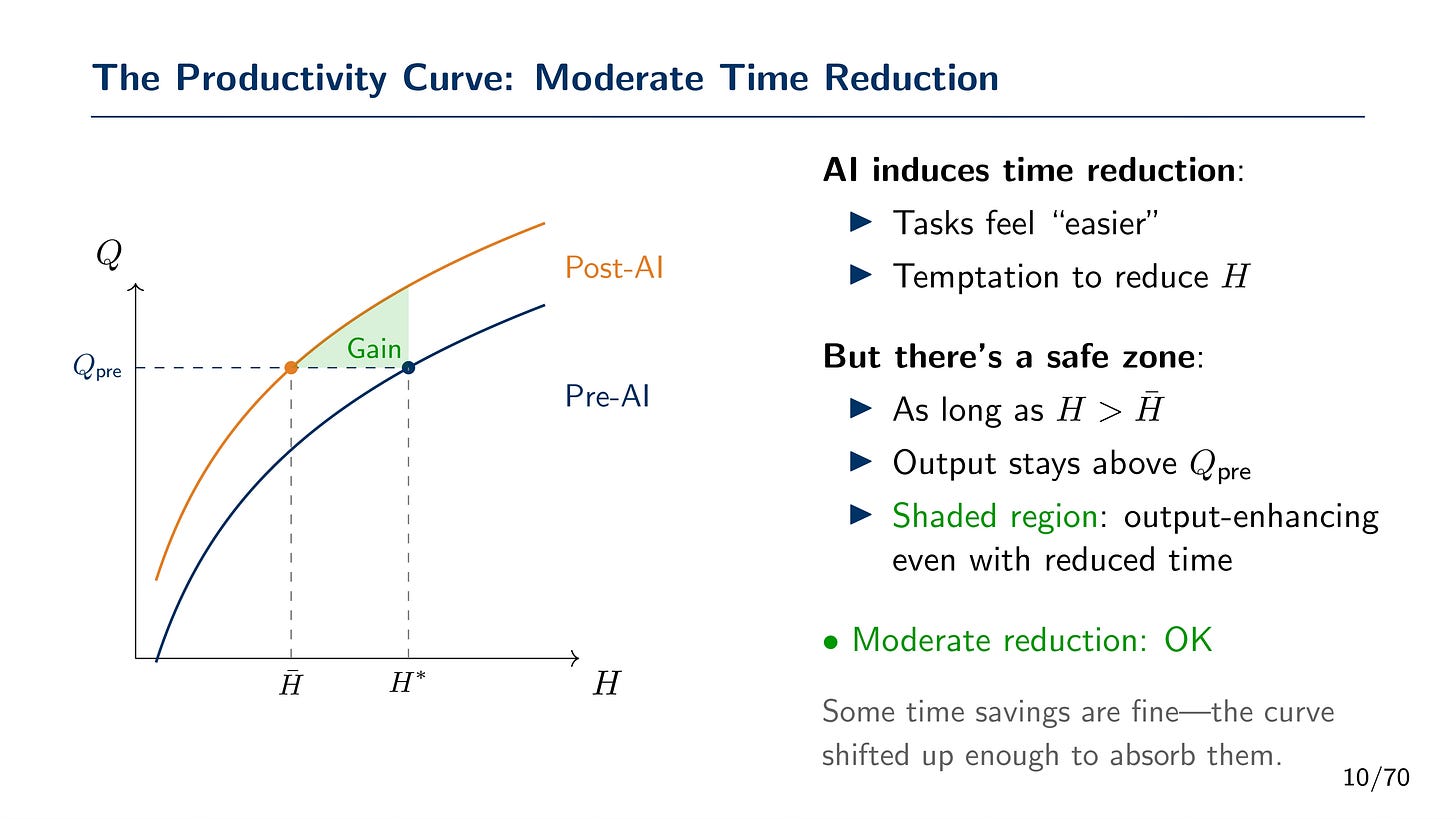

First, I’ll imagine that, holding fixed capital and machine time, the production of cognitive output exhibits diminishing marginal returns to human time. Second, I’ll simply assert that AI shifts the productivity curve upward. For any given amount of human time H, in other words, you can now produce more output Q than before.

If you maintain your human time at the pre-AI level H*, you capture pure productivity gains. Same time, more output. This is unambiguously good. I’ve written about this before, but I’m saying it again so you can see that pretty graphic!

But here’s the temptation: if tasks feel easier, why not reduce human time? The curve shifted up, so surely you can afford to dial back.

And indeed, there is a safe zone. It’s safe in that a person is reducing the time they put toward cognitive output and yet their own personal human capital accumulation has grown. That looks like a win-win if you’re comparing it to the counterfactual. You can reduce human time somewhat and still end up producing more than before. The upward shift absorbs some of the reduced input.

But there’s a threshold. Call it H-bar.

Below that threshold lies the danger zone.

In the danger zone, you’ve reduced human time so much that, despite the productivity-enhancing technology, you’re actually producing less than you did before AI. The behavioral response overwhelms the technological improvement.
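To see where H-bar comes from, here is a small sketch under an assumed functional form. I take Q = A·sqrt(H) as a stand-in for a curve with diminishing returns to human time, and model AI as a jump in A from `a_pre` to `a_post`. The functional form, the function names, and the numbers are all my own illustrative assumptions, not something taken from the slides.

```python
import math


def output(human_time, productivity):
    """Assumed concave curve Q = productivity * sqrt(H)."""
    return productivity * math.sqrt(human_time)


def danger_threshold(h_star, a_pre, a_post):
    """H-bar: human time at which post-AI output equals pre-AI output at H*.

    Solves a_post * sqrt(H_bar) = a_pre * sqrt(h_star) for H_bar.
    """
    if not (a_post > a_pre > 0 and h_star > 0):
        raise ValueError("need a_post > a_pre > 0 and h_star > 0")
    return h_star * (a_pre / a_post) ** 2


def classify(human_time, h_star, a_pre, a_post):
    """Label a post-AI choice of human time relative to the pre-AI baseline."""
    h_bar = danger_threshold(h_star, a_pre, a_post)
    if human_time >= h_star:
        return "pure gains"   # same (or more) time, more output
    if human_time > h_bar:
        return "safe zone"    # less time, still more output than before AI
    return "danger zone"      # output at or below the pre-AI level


# Illustrative numbers only: 40 hours pre-AI, AI doubles productivity.
H_STAR, A_PRE, A_POST = 40.0, 1.0, 2.0
h_bar = danger_threshold(H_STAR, A_PRE, A_POST)
print("H-bar:", h_bar)
for hours in (40.0, 20.0, 5.0):
    print(hours, classify(hours, H_STAR, A_PRE, A_POST))
```

Under this particular curve, doubling productivity puts H-bar at a quarter of H*, so the safe zone is wide. A flatter or steeper curve would move the threshold, which is why the functional form matters and why I flag it as an assumption.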

This is the paradox: a productivity-enhancing technology can make us worse off if it induces too much substitution away from human input. This is something Ricardo notes in the third edition of his book, something Malthus had noted, something Paul Samuelson wrote about, and something contemporary economists like Acemoglu, Johnson and Restrepo have all noted. And it matters insofar as human capital continues, in long-run equilibrium, to determine wages. The wealth of nations versus the wages of nations.

Why This Might Be Different

You might object: Haven’t we always offloaded cognitive work to machines? I don’t invert matrices by hand. I don’t look things up in logarithm tables. My computer does these things, and I don’t worry about my matrix-inversion human capital depreciating.

Fair point. But I think this time is different, for a specific reason.

When we offloaded matrix inversion to computers, we offloaded a *routine* subtask within a larger cognitive process that still required human time and attention. The economist still had to specify the model, interpret the results, and judge whether the assumptions were plausible. The computer was a tool inside a human-directed workflow.

What’s new about AI is that it can handle the *entire* cognitive workflow. Not just the routine subtasks, but the judgment, the interpretation, the specification. You can ask it to “write a paper about X” and it will produce something that looks like a paper about X.

This means the cost of producing cognitive output drops toward zero. And when the cost drops toward zero, the question becomes: Who is the marginal researcher? What happens to overall human capital in the economy when cognitive output can be produced without human cognition?

The Attention Problem

Let me dig deeper into attention, because I think it’s the crux of the matter.

Attention is not free. It’s costly and resource-intensive. It uses the mind’s capacity. It requires time directed narrowly at intellectual puzzles, often puzzles that are frustrating, confusing, and difficult.

But attention is also the key to discovery. Scientists report this universally: they love the work. They love the feeling of discovery. There’s an intellectual hedonism in solving hard problems, in understanding something that was previously mysterious.

When we release human time from cognitive production, we necessarily release attention. You cannot attend to what you don’t spend time on. And when attention falls, the intrinsic rewards of intellectual work disappear. What’s left are the extrinsic rewards: financial incentives, career advancement, publications.

If intrinsic rewards fade and only extrinsic rewards remain, then the use of AI for cognitive production becomes dominant. Humans become managers of the process, pushing buttons, but nothing more.

Maybe this is fine. Maybe we’re comfortable being managers. Maybe the outputs matter more than the process.

But I suspect something is lost. The joy of understanding is lost. The depth of expertise is lost. And eventually, the ability to verify and direct the AI may be lost, because verification requires the very human capital that the AI bypass prevents us from accumulating.

Who watches the watchers, when the watchers no longer understand what they’re watching?

Coming Soon: The Setup for Part 3 in My Series

So here’s where we are:

- The Productivity Zone: Human time is maintained. Attention is preserved. Human capital accumulates. Output improves. AI augments the human process.
- The Danger Zone: Human time collapses. Attention disappears. Human capital depreciates or never forms. Output may even decline despite better technology.

The difference between these zones is not the technology. It’s the behavioral response to the technology. It’s whether humans maintain engagement or release it entirely.

In the next entry, I’ll argue something that may seem paradoxical: AI agents (not chatbots, not copy-paste workflows, but true agentic AI that operates in your terminal and executes code) could actually be *better* for preserving human attention than simpler generative AI tools.

Why? Because agents require supervision. They require direction. They require you to understand enough to verify what they’re doing. The “vibe coding” approach (copy code from ChatGPT, paste, run, copy error, paste, repeat) requires almost no attention. You’re a messenger between the AI and your IDE.

But working with an AI agent is more like managing a brilliant but junior collaborator. You have to know what you want. You have to evaluate whether what it produces makes sense. You have to catch its mistakes. This is cognitively demanding. And that demand may be exactly what keeps us on the right side of the curve.

Most of us don’t have an Ivy Leaguer’s access to an army of brilliant predocs, RAs, and project managers. Most of us don’t have a well-endowed lab like Raj Chetty. But I think AI agents give us all three (predocs, project managers, and RAs), and suddenly we’re at a radical shift in our personal production possibility frontiers.

The key won’t be merely the technology. It will be **innovative workflows** that maintain human engagement while leveraging machine capability.

More on that next time.

These slides are from a talk I gave at the Federal Reserve Bank of Boston in December 2025. The deck was produced with assistance from Claude Code (Anthropic’s Claude Opus 4.5).