TL;DR

LLM-as-a-Judge systems can be fooled by confident-sounding but wrong answers, giving teams false confidence in their models. We built a human-labeled dataset and used our open-source framework syftr to systematically test judge configurations. The results? They’re in the full post. But here’s the takeaway: don’t just trust your judge; test it.

When we shifted to self-hosted open-source models for our agentic retrieval-augmented generation (RAG) framework, we were thrilled by the initial results. On tough benchmarks like FinanceBench, our systems appeared to deliver breakthrough accuracy.

That excitement lasted right up until we looked closer at how our LLM-as-a-Judge system was grading the answers.

The truth: our new judges were being fooled.

A RAG system, unable to find data to compute a financial metric, would simply explain that it couldn’t find the information.

The judge would reward this plausible-sounding explanation with full credit, concluding the system had correctly identified the absence of data. That single flaw was skewing results by 10–20%, enough to make a mediocre system look state-of-the-art.

Which raised a critical question: if you can’t trust the judge, how can you trust the results?

Your LLM judge might be lying to you, and you won’t know unless you rigorously test it. The best judge isn’t always the biggest or most expensive.

With the right data and tools, however, you can build one that’s cheaper, more accurate, and more trustworthy than gpt-4o-mini. In this research deep dive, we show you how.

Why LLM judges fail

The problem we uncovered went far beyond a simple bug. Evaluating generated content is inherently nuanced, and LLM judges are prone to subtle but consequential failures.

Our initial issue was a textbook case of a judge being swayed by confident-sounding reasoning. For example, in one evaluation about a family tree, the judge concluded:

“The generated answer is relevant and correctly identifies that there is insufficient information to determine the specific cousin… While the reference answer lists names, the generated answer’s conclusion aligns with the reasoning that the question lacks necessary data.”

In reality, the information was available; the RAG system simply failed to retrieve it. The judge was fooled by the authoritative tone of the response.

Digging deeper, we found other challenges:

- Numerical ambiguity: Is an answer of 3.9% “close enough” to 3.8%? Judges often lack the context to decide.

- Semantic equivalence: Is “APAC” an acceptable substitute for “Asia-Pacific: India, Japan, Malaysia, Philippines, Australia”?

- Faulty references: Sometimes the “ground truth” answer itself is wrong, leaving the judge in a paradox.
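One way to take the numerical-ambiguity question out of the judge's hands is to state the tolerance explicitly. The following is a minimal sketch, assuming a relative tolerance is appropriate for the metric in question; the function name `within_tolerance` and both threshold values are hypothetical and are not taken from the grading rules described in this post.

```python
import math


def within_tolerance(generated: float, reference: float, rel_tol: float = 0.05) -> bool:
    """Return True when `generated` is within `rel_tol` of `reference`.

    `rel_tol` is a hypothetical threshold; the right value depends on the metric.
    """
    return math.isclose(generated, reference, rel_tol=rel_tol)


# 3.9% vs. 3.8% differ by roughly 2.6% in relative terms.
print(within_tolerance(3.9, 3.8, rel_tol=0.05))  # inside a 5% tolerance
print(within_tolerance(3.9, 3.8, rel_tol=0.01))  # outside a 1% tolerance
```

The same pair of numbers passes or fails depending on the threshold, which is why leaving the choice implicit makes judges disagree.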

These failures underscore a key lesson: simply picking a powerful LLM and asking it to grade isn’t enough. Perfect agreement between judges, human or machine, is unattainable without a more rigorous approach.

Building a framework for trust

To address these challenges, we needed a way to evaluate the evaluators. That meant two things:

- A high-quality, human-labeled dataset of judgments.

- A system to methodically test different judge configurations.

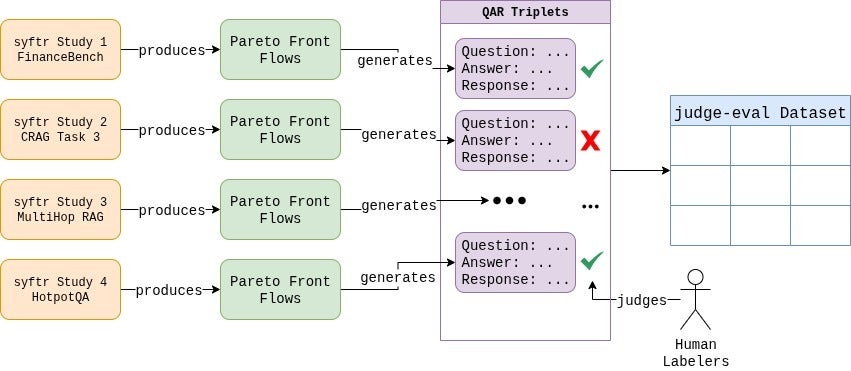

First, we created our own dataset, now available on HuggingFace. We generated hundreds of question-answer-response triplets using a wide range of RAG systems.

Then, our team hand-labeled all 807 examples.

Every edge case was debated, and we established clear, consistent grading rules.

The process itself was eye-opening, showing just how subjective evaluation can be. In the end, our labeled dataset reflected a distribution of 37.6% failing and 62.4% passing responses.

Next, we needed an engine for experimentation. That’s where our open-source framework, syftr, came in.

We extended it with a new JudgeFlow class and a configurable search space to vary LLM choice, temperature, and prompt design. This made it possible to systematically explore, and identify, the judge configurations most aligned with human judgment.
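To make the idea of a judge search space concrete, here is a minimal sketch in plain Python. It does not use syftr's API: the dictionary keys, model list, temperature values, and prompt names are illustrative assumptions that simply mirror the three dimensions named above (LLM choice, temperature, prompt design).

```python
from itertools import product

# Hypothetical search space; values are examples only, not syftr's configuration.
SEARCH_SPACE = {
    "llm": ["Qwen/Qwen2.5-72B-Instruct", "gpt-4o-mini"],
    "temperature": [0.0, 0.5],
    "prompt": ["default", "detailed", "simple"],
}


def enumerate_configs(space: dict[str, list]) -> list[dict]:
    """Expand a search space into every combination of its dimensions."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


configs = enumerate_configs(SEARCH_SPACE)
print(len(configs))  # 2 models x 2 temperatures x 3 prompts = 12
print(configs[0])
```

Exhaustive enumeration is only practical for small spaces like this one; a search tool may sample the space instead.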

Putting the judges to the test

With our framework in place, we started experimenting.

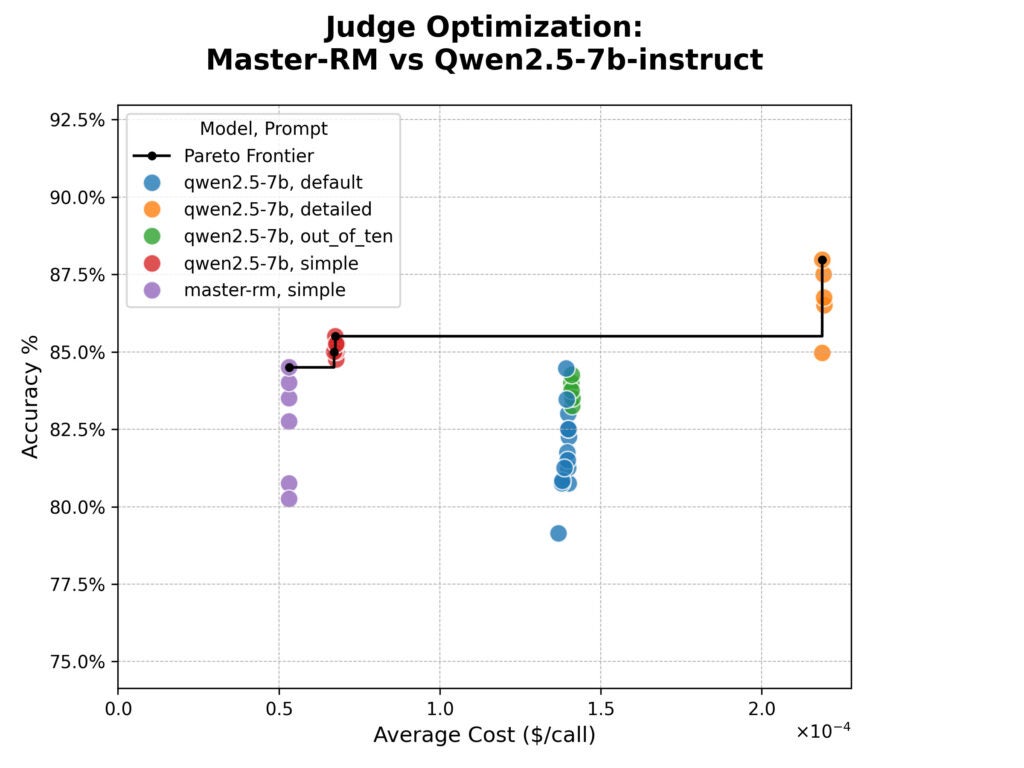

Our first test focused on the Master-RM model, specifically tuned to avoid “reward hacking” by prioritizing content over reasoning phrases.

We pitted it against its base model using four prompts:

- The “default” LlamaIndex CorrectnessEvaluator prompt, asking for a 1–5 rating.

- The same CorrectnessEvaluator prompt, asking for a 1–10 rating.

- A more detailed version of the CorrectnessEvaluator prompt with more explicit criteria.

- A simple prompt: “Return YES if the Generated Answer is correct relative to the Reference Answer, or NO if it’s not.”
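As a rough sketch of how the simple prompt could be wired up, the code below builds the prompt text and parses a YES/NO reply into a boolean. The template layout, field labels, and function names are assumptions for illustration; only the instruction sentence follows the simple prompt quoted above. No model is called here.

```python
from typing import Optional

SIMPLE_INSTRUCTION = (
    "Return YES if the Generated Answer is correct relative to the "
    "Reference Answer, or NO if it's not."
)


def build_simple_prompt(question: str, reference: str, generated: str) -> str:
    """Assemble the judge prompt; the field layout is a hypothetical choice."""
    return (
        f"{SIMPLE_INSTRUCTION}\n\n"
        f"Question: {question}\n"
        f"Reference Answer: {reference}\n"
        f"Generated Answer: {generated}\n"
    )


def parse_verdict(reply: str) -> Optional[bool]:
    """Map a judge reply to True (YES), False (NO), or None if unparseable."""
    words = reply.strip().split()
    if not words:
        return None
    first = words[0].strip(".,:;!\"'").upper()
    if first == "YES":
        return True
    if first == "NO":
        return False
    return None


print(parse_verdict("YES"))                      # True
print(parse_verdict("no, it omits the names."))  # False
print(parse_verdict("I cannot tell"))            # None
```

Returning `None` for unparseable replies keeps format failures visible instead of silently counting them as a pass or a fail.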

The syftr optimization results are shown below in the cost-versus-accuracy plot. Accuracy is the simple percent agreement between the judge and human evaluators, and cost is estimated based on the per-token pricing of Together.ai’s hosting services.
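Both metrics are simple enough to sketch directly. The function names, the toy labels, and the price figure below are hypothetical placeholders; they are not values from the experiments or from any provider's price list.

```python
def percent_agreement(judge: list[bool], human: list[bool]) -> float:
    """Share of examples where the judge verdict matches the human label."""
    if len(judge) != len(human):
        raise ValueError("judge and human label lists must be the same length")
    if not human:
        raise ValueError("at least one labeled example is required")
    matches = sum(j == h for j, h in zip(judge, human))
    return matches / len(human)


def estimate_cost(total_tokens: int, usd_per_million_tokens: float) -> float:
    """Estimate cost in USD from token usage and a per-token price."""
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Toy data for illustration only.
human_labels = [True, True, False, True, False]
judge_verdicts = [True, True, True, True, False]

print(percent_agreement(judge_verdicts, human_labels))  # 4 of 5 match: 0.8
print(estimate_cost(250_000, usd_per_million_tokens=1.20))  # hypothetical price
```

Because 62.4% of the labeled responses pass, a judge that passes every answer would already reach 62.4% agreement, which is a useful floor to keep in mind when reading agreement numbers.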

The results were surprising.

Master-RM was no more accurate than its base model and struggled with producing anything beyond the “simple” prompt response format due to its focused training.

While the model’s specialized training was effective in combating the effects of specific reasoning phrases, it did not improve overall alignment with the human judgments in our dataset.

We also saw a clear trade-off. The “detailed” prompt was the most accurate, but nearly four times as expensive in tokens.

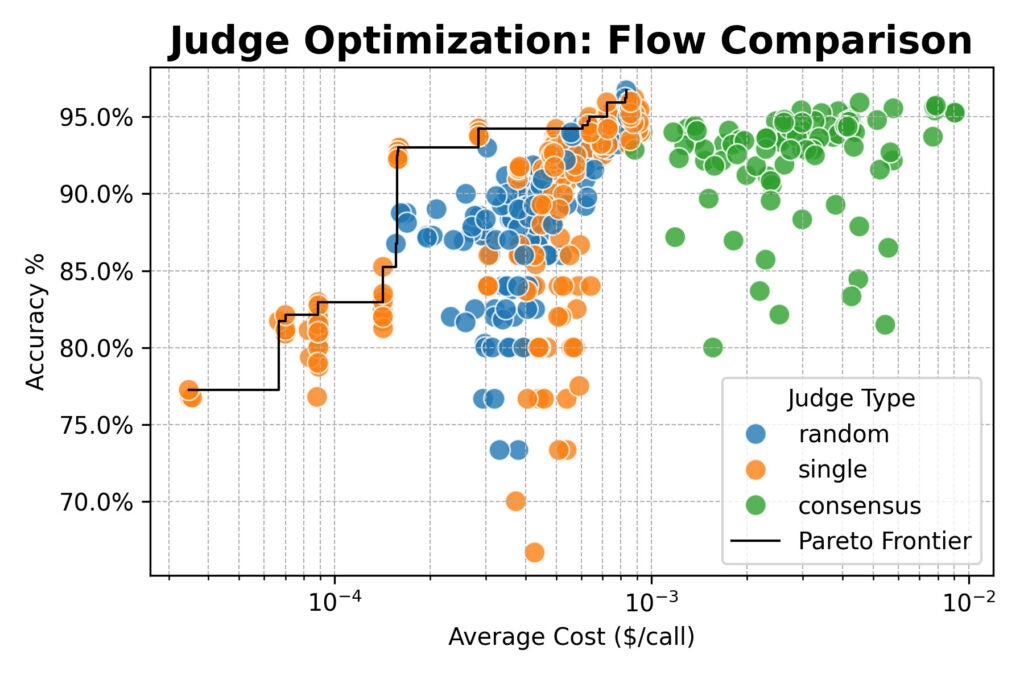

Next, we scaled up, evaluating a cluster of large open-weight models (from Qwen, DeepSeek, Google, and NVIDIA) and testing new judge strategies:

- Random: Selecting a judge at random from a pool for each evaluation.

- Consensus: Polling 3 or 5 models and taking the majority vote.
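The two strategies can be sketched as follows. Each judge is modeled as a plain callable that returns True for pass and False for fail; the stub judges, function names, and seed are assumptions for illustration and stand in for real model calls.

```python
import random
from collections import Counter
from typing import Callable, Sequence

Judge = Callable[[str], bool]  # takes a prompt, returns pass (True) or fail (False)


def random_judge(judges: Sequence[Judge], prompt: str, rng: random.Random) -> bool:
    """Pick one judge at random from the pool for this evaluation."""
    return rng.choice(judges)(prompt)


def consensus_judge(judges: Sequence[Judge], prompt: str) -> bool:
    """Poll every judge and return the majority verdict."""
    if len(judges) % 2 == 0:
        raise ValueError("use an odd number of judges to avoid ties")
    votes = Counter(judge(prompt) for judge in judges)
    return votes[True] > votes[False]


# Stub judges standing in for real LLM calls (hypothetical).
pool = [lambda p: True, lambda p: False, lambda p: True]

print(consensus_judge(pool, "example prompt"))  # two of three vote pass: True
print(random_judge(pool, "example prompt", random.Random(0)))
```

Requiring an odd pool size matches the 3-or-5 setup described above and sidesteps tie-breaking rules.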

Here the results converged: consensus-based judges offered no accuracy advantage over single or random judges.

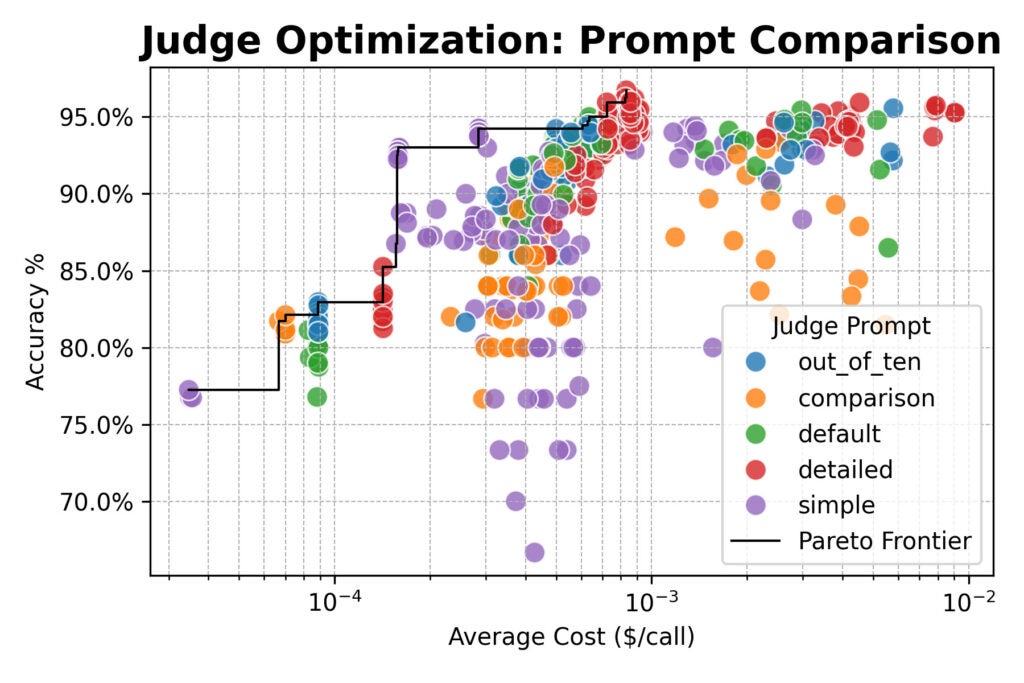

All three methods topped out around 96% agreement with human labels. Across the board, the best-performing configurations used the detailed prompt.

But there was an important exception: the simple prompt paired with a powerful open-weight model like Qwen/Qwen2.5-72B-Instruct was nearly 20× cheaper than detailed prompts, while giving up only a few percentage points of accuracy.

What makes this solution different?

For a long time, our rule of thumb was: “Just use gpt-4o-mini.” It’s a common shortcut for teams looking for a reliable, off-the-shelf judge. And while gpt-4o-mini did perform well (around 93% accuracy with the default prompt), our experiments revealed its limits. It’s just one point on a much broader trade-off curve.

A systematic approach gives you a menu of optimized options instead of a single default:

- Top accuracy, no matter the cost. A consensus flow with the detailed prompt and models like Qwen3-32B, DeepSeek-R1-Distill, and Nemotron-Super-49B achieved 96% human alignment.

- Budget-friendly, rapid testing. A single model with the simple prompt hit ~93% accuracy at one-fifth the cost of the gpt-4o-mini baseline.

By optimizing across accuracy, cost, and latency, you can make informed decisions tailored to the needs of each project, instead of betting everything on a one-size-fits-all judge.
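A menu of optimized options can be read as the set of configurations that no other configuration beats on both accuracy and cost. Here is a minimal sketch of that filter; the `JudgeResult` type, the configuration names, and all numbers are made up for illustration and are not results from the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JudgeResult:
    name: str
    accuracy: float  # agreement with human labels, 0..1
    cost: float      # relative cost per evaluation


def pareto_front(results: list[JudgeResult]) -> list[JudgeResult]:
    """Keep results that no other result dominates (higher accuracy, lower cost)."""
    front = []
    for r in results:
        dominated = any(
            o.accuracy >= r.accuracy and o.cost <= r.cost
            and (o.accuracy > r.accuracy or o.cost < r.cost)
            for o in results
        )
        if not dominated:
            front.append(r)
    return sorted(front, key=lambda r: r.cost)


# Made-up numbers for illustration only.
results = [
    JudgeResult("config-a", accuracy=0.91, cost=1.0),
    JudgeResult("config-b", accuracy=0.91, cost=5.0),   # same accuracy, pricier than a
    JudgeResult("config-c", accuracy=0.95, cost=20.0),
    JudgeResult("config-d", accuracy=0.88, cost=8.0),   # worse than a on both axes
]

for r in pareto_front(results):
    print(r.name, r.accuracy, r.cost)
```

With these inputs only `config-a` and `config-c` survive; latency could be added as a third field and a third comparison in the same way.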

Building reliable judges: Key takeaways

Whether you use our framework or not, our findings can help you build more reliable evaluation systems:

- Prompting is the biggest lever. For the highest human alignment, use detailed prompts that spell out your evaluation criteria. Don’t assume the model knows what “good” means for your task.

- Simple works when speed matters. If cost or latency is critical, a simple prompt (e.g., “Return YES if the Generated Answer is correct relative to the Reference Answer, or NO if it’s not.”) paired with a capable model delivers excellent value with only a minor accuracy trade-off.

- Committees bring stability. For critical evaluations where accuracy is non-negotiable, polling 3–5 diverse, powerful models and taking the majority vote reduces bias and noise. In our study, the top-accuracy consensus flow combined Qwen/Qwen3-32B, DeepSeek-R1-Distill-Llama-70B, and NVIDIA’s Nemotron-Super-49B.

- Bigger, smarter models help. Larger LLMs consistently outperformed smaller ones. For example, upgrading from microsoft/Phi-4-multimodal-instruct (5.5B) with a detailed prompt to gemma3-27B-it with a simple prompt delivered an 8% boost in accuracy, at a negligible difference in cost.

From uncertainty to confidence

Our journey began with a troubling discovery: instead of following the rubric, our LLM judges were being swayed by long, plausible-sounding refusals.

By treating evaluation as a rigorous engineering problem, we moved from doubt to confidence. We gained a clear, data-driven view of the trade-offs between accuracy, cost, and speed in LLM-as-a-Judge systems.

More data means better choices.

We hope our work and our open-source dataset encourage you to take a closer look at your own evaluation pipelines. The “best” configuration will always depend on your specific needs, but you no longer have to guess.

Ready to build more trustworthy evaluations? Explore our work in syftr and start judging your judges.