Code-oriented giant language fashions moved from autocomplete to software program engineering techniques. In 2025, main fashions should repair actual GitHub points, refactor multi-repo backends, write exams, and run as brokers over lengthy context home windows. The principle query for groups will not be “can it code” however which mannequin suits which constraints.

Listed here are seven fashions (and techniques round them) that cowl most actual coding workloads right this moment:

- OpenAI GPT-5 / GPT-5-Codex

- Anthropic Claude 3.5 Sonnet / Claude 4.x Sonnet with Claude Code

- Google Gemini 2.5 Professional

- Meta Llama 3.1 405B Instruct

- DeepSeek-V2.5-1210 (with DeepSeek-V3 because the successor)

- Alibaba Qwen2.5-Coder-32B-Instruct

- Mistral Codestral 25.01

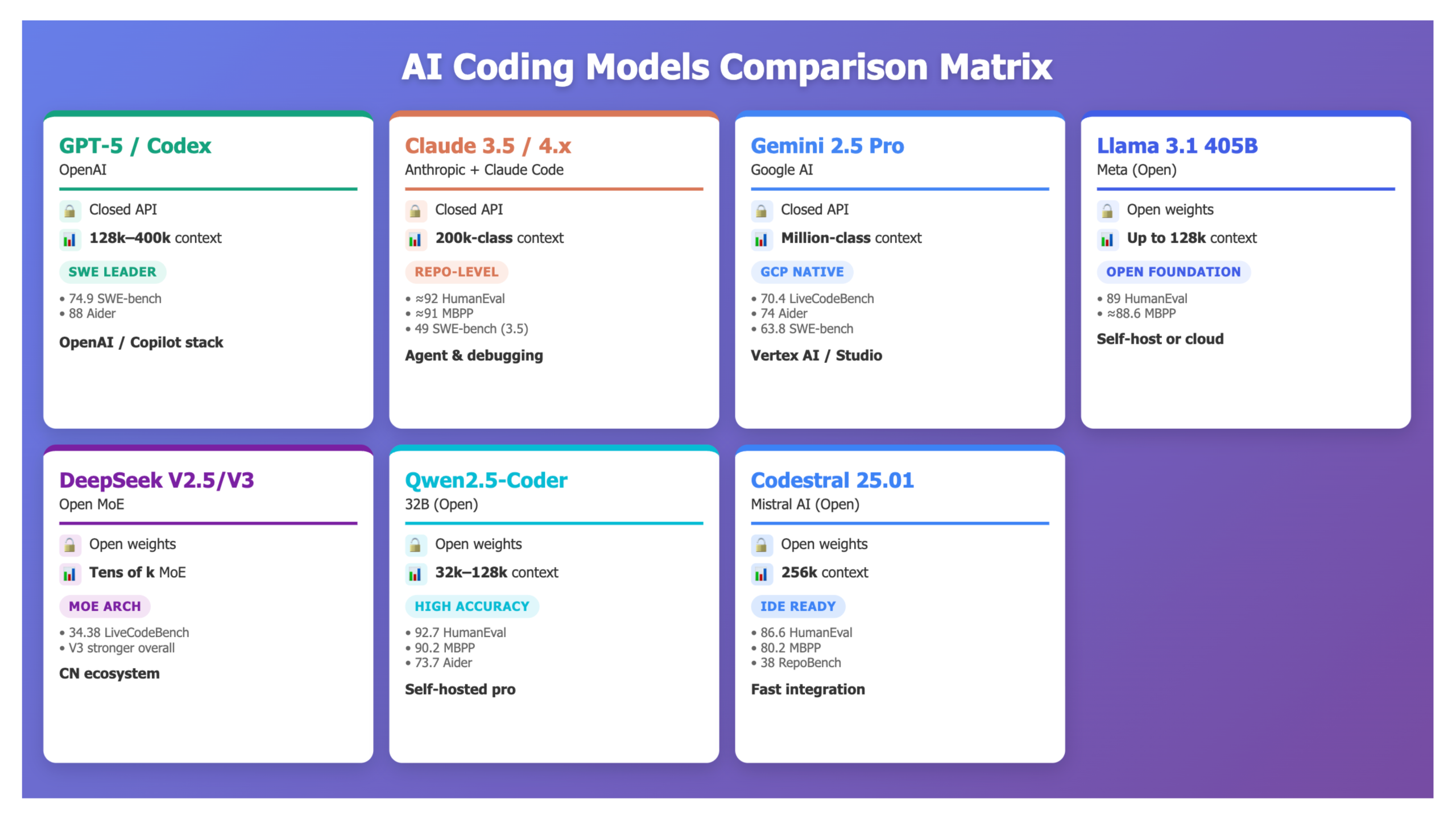

The purpose of this comparability is to not rank them on a single rating. The purpose is to indicate which system to choose for a given benchmark goal, deployment mannequin, governance requirement, and IDE or agent stack.

Analysis dimensions

We examine on six steady dimensions:

- Core coding high quality: HumanEval, MBPP / MBPP EvalPlus, code technology and restore high quality on normal Python duties.

- Repo and bug-fix efficiency: SWE-bench Verified (actual GitHub points), Aider Polyglot (whole-file edits), RepoBench, LiveCodeBench.

- Context and long-context conduct: Documented context limits and sensible conduct in lengthy periods.

- Deployment mannequin: Closed API, cloud service, containers, on-premises or totally self-hosted open weights.

- Tooling and ecosystem: Native brokers, IDE extensions, cloud integration, GitHub and CI/CD assist.

- Price and scaling sample: Token pricing for closed fashions, {hardware} footprint and inference sample for open fashions.

1. OpenAI GPT-5 / GPT-5-Codex

OpenAI’s GPT-5 is the flagship reasoning and coding mannequin and the default in ChatGPT. For real-world code, OpenAI experiences:

- SWE-bench Verified: 74.9%

- Aider Polyglot: 88%

Each benchmarks simulate actual engineering: SWE-bench Verified runs towards upstream repos and exams; Aider Polyglot measures whole-file multi-language edits.

Context and variants

- gpt-5 (chat) API: 128k token context.

- gpt-5-pro / gpt-5-codex: as much as 400k mixed context within the mannequin card, with typical manufacturing limits round ≈272k enter + 128k output for reliability.

GPT-5 and GPT-5-Codex can be found in ChatGPT (Plus / Professional / Workforce / Enterprise) and by way of the OpenAI API; they’re closed-weight, cloud-hosted solely.

Strengths

- Highest printed SWE-bench Verified and Aider Polyglot scores amongst broadly accessible fashions.

- Very sturdy at multi-step bug fixing with “considering” (chain-of-thought) enabled.

- Deep ecosystem: ChatGPT, Copilot, and lots of third-party IDE and agent platforms use GPT-5 backends.

Limits

- No self-hosting; all visitors should undergo OpenAI or companions.

- Lengthy-context calls are costly for those who stream full monorepos, so that you want retrieval and diff-only patterns.

Use while you need most repo-level benchmark efficiency and are snug with a closed, cloud API.

2. Anthropic Claude 3.5 Sonnet / Claude 4.x + Claude Code

Claude 3.5 Sonnet was Anthropic’s principal coding workhorse earlier than the Claude 4 line. Anthropic highlights it as SOTA on HumanEval, and impartial comparisons report:

- HumanEval: ≈ 92%

- MBPP EvalPlus: ≈ 91%

In 2025, Anthropic launched Claude 4 Opus, Sonnet, and Sonnet 4.5, positioning Sonnet 4.5 as its greatest coding and agent mannequin thus far.

Claude Code stack

Claude Code is a repo-aware coding system:

- Managed VM linked to your GitHub repo.

- File looking, enhancing, exams, and PR creation.

- SDK for constructing customized brokers that use Claude as a coding backend.

Strengths

- Very sturdy HumanEval / MBPP, good empirical conduct on debugging and code evaluate.

- Manufacturing-grade coding agent atmosphere with persistent VM and GitHub workflows.

Limits

- Closed and cloud-hosted, just like GPT-5 in governance phrases.

- Printed SWE-bench Verified numbers for Claude 3.5 Sonnet are under GPT-5, although Claude 4.x is probably going nearer.

Use while you want explainable debugging, code evaluate, and a managed repo-level agent and may settle for a closed deployment.

3. Google Gemini 2.5 Professional

Gemini 2.5 Professional is Google DeepMind’s principal coding and reasoning mannequin for builders. It experiences following efficiency/outcomes:

- LiveCodeBench v5: 70.4%

- Aider Polyglot (whole-file enhancing): 74.0%

- SWE-bench Verified: 63.8%

These outcomes place Gemini 2.5 Professional above many earlier fashions and solely behind Claude 3.7 and GPT-5 on SWE-bench Verified.

Context and platform

- Lengthy-context functionality marketed as much as 1M tokens throughout the Gemini household; 2.5 Professional is the steady tier utilized in Gemini Apps, Google AI Studio, and Vertex AI.

- Tight integration with GCP providers, BigQuery, Cloud Run, and Google Workspace.

Strengths

- Good mixture of LiveCodeBench, Aider, SWE-bench scores plus first-class GCP integration.

- Robust alternative for “information plus software code” while you need the identical mannequin for SQL, analytics helpers, and backend code.

Limits

- Closed and tied to Google Cloud.

- For pure SWE-bench Verified, GPT-5 and the most recent Claude Sonnet 4.x are stronger.

Use when your workloads already run on GCP / Vertex AI and also you need a long-context coding mannequin inside that stack.

4. Meta Llama 3.1 405B Instruct

Meta’s Llama 3.1 household (8B, 70B, 405B) is open-weight. The 405B Instruct variant is the high-end choice for coding and common reasoning. It experiences following efficiency/outcomes:

- HumanEval (Python): 89.0

- MBPP (base or EvalPlus): ≈ 88.6

These scores put Llama 3.1 405B among the many strongest open fashions on traditional code benchmarks.

The official mannequin card states that Llama 3.1 fashions outperform many open and closed chat fashions on frequent benchmarks and are optimized for multilingual dialogue and reasoning.

Strengths

- Excessive HumanEval / MBPP scores with open weights and permissive licensing.

- Robust common efficiency (MMLU, MMLU-Professional, and many others.), so one mannequin can serve each product options and coding brokers.

Limits

- 405B parameters imply excessive serving value and latency until you’ve a big GPU cluster.

- For strictly code benchmarks at a set compute finances, specialised fashions similar to Qwen2.5-Coder-32B and Codestral 25.01 are extra cost-efficient.

Use while you need a single open basis mannequin with sturdy coding and common reasoning, and also you management your personal GPU infrastructure.

5. DeepSeek-V2.5-1210 (and DeepSeek-V3)

DeepSeek-V2.5-1210 is an upgraded Combination-of-Specialists mannequin that merges the chat and coder strains. The mannequin card experiences:

- LiveCodeBench (08.01–12.01): improved from 29.2% to 34.38%

- MATH-500: 74.8% → 82.8%

DeepSeek has since launched DeepSeek-V3, a 671B-parameter MoE with 37B energetic per token, skilled on 14.8T tokens. The efficiency is akin to main closed fashions on many reasoning and coding benchmarks, and public dashboards present V3 forward of V2.5 on key duties.

Strengths

- Open MoE mannequin with strong LiveCodeBench outcomes and good math efficiency for its measurement.

- Environment friendly active-parameter depend vs whole parameters.

Limits

- V2.5 is now not the flagship; DeepSeek-V3 is now the reference mannequin.

- Ecosystem is lighter than OpenAI / Google / Anthropic; groups should assemble their very own IDE and agent integrations.

Use while you need a self-hosted MoE coder with open weights and are prepared to maneuver to DeepSeek-V3 because it matures.

6. Qwen2.5-Coder-32B-Instruct

Qwen2.5-Coder is Alibaba’s code-specific LLM household. The technical report and mannequin card describe six sizes (0.5B to 32B) and continued pretraining on over 5.5T tokens of code-heavy information.

The official benchmarks for Qwen2.5-Coder-32B-Instruct listing:

- HumanEval: 92.7%

- MBPP: 90.2%

- LiveCodeBench: 31.4%

- Aider Polyglot: 73.7%

- Spider: 85.1%

- CodeArena: 68.9%

Strengths

- Very sturdy HumanEval / MBPP / Spider outcomes for an open mannequin; usually aggressive with closed fashions in pure code duties.

- A number of parameter sizes make it adaptable to totally different {hardware} budgets.

Limits

- Much less fitted to broad common reasoning than a generalist like Llama 3.1 405B or DeepSeek-V3.

- Documentation and ecosystem are catching up in English-language tooling.

Use while you want a self-hosted, high-accuracy code mannequin and may pair it with a common LLM for non-code duties.

7. Mistral Codestral 25.01

Codestral 25.01 is Mistral’s up to date code technology mannequin. Mistral’s announcement and follow-up posts state that 25.01 makes use of a extra environment friendly structure and tokenizer and generates code roughly 2× sooner than the bottom Codestral mannequin.

Benchmark experiences:

- HumanEval: 86.6%

- MBPP: 80.2%

- Spider: 66.5%

- RepoBench: 38.0%

- LiveCodeBench: 37.9%

Codestral 25.01 helps over 80 programming languages and a 256k token context window, and is optimized for low-latency, high-frequency duties similar to completion and FIM.

Strengths

- Excellent RepoBench / LiveCodeBench scores for a mid-size open mannequin.

- Designed for quick interactive use in IDEs and SaaS, with open weights and a 256k context.

Limits

- Absolute HumanEval / MBPP scores sit under Qwen2.5-Coder-32B, which is predicted at this parameter class.

Use while you want a compact, quick open code mannequin for completions and FIM at scale.

Face to face comparability

| Characteristic | GPT-5 / GPT-5-Codex | Claude 3.5 / 4.x + Claude Code | Gemini 2.5 Professional | Llama 3.1 405B Instruct | DeepSeek-V2.5-1210 / V3 | Qwen2.5-Coder-32B | Codestral 25.01 |

|---|---|---|---|---|---|---|---|

| Core process | Hosted common mannequin with sturdy coding and brokers | Hosted fashions plus repo-level coding VM | Hosted coding and reasoning mannequin on GCP | Open generalist basis with sturdy coding | Open MoE coder and chat mannequin | Open code-specialized mannequin | Open mid-size code mannequin |

| Context | 128k (chat), as much as 400k Professional / Codex | 200k-class (varies by tier) | Lengthy-context, million-class throughout Gemini line | As much as 128k in lots of deployments | Tens of okay, MoE scaling | 32B with typical 32k–128k contexts relying on host | 256k context |

| Code benchmarks (examples) | 74.9 SWE-bench, 88 Aider | ≈92 HumanEval, ≈91 MBPP, 49 SWE-bench (3.5); 4.x stronger however much less printed | 70.4 LiveCodeBench, 74 Aider, 63.8 SWE-bench | 89 HumanEval, ≈88.6 MBPP | 34.38 LiveCodeBench; V3 stronger on blended benchmarks | 92.7 HumanEval, 90.2 MBPP, 31.4 LiveCodeBench, 73.7 Aider | 86.6 HumanEval, 80.2 MBPP, 38 RepoBench, 37.9 LiveCodeBench |

| Deployment | Closed API, OpenAI / Copilot stack | Closed API, Anthropic console, Claude Code | Closed API, Google AI Studio / Vertex AI | Open weights, self-hosted or cloud | Open weights, self-hosted; V3 by way of suppliers | Open weights, self-hosted or by way of suppliers | Open weights, accessible on a number of clouds |

| Integration path | ChatGPT, OpenAI API, Copilot | Claude app, Claude Code, SDKs | Gemini Apps, Vertex AI, GCP | Hugging Face, vLLM, cloud marketplaces | Hugging Face, vLLM, customized stacks | Hugging Face, business APIs, native runners | Azure, GCP, customized inference, IDE plugins |

| Greatest match | Max SWE-bench / Aider efficiency in hosted setting | Repo-level brokers and debugging high quality | GCP-centric engineering and information + code | Single open basis mannequin | Open MoE experiments and Chinese language ecosystem | Self-hosted high-accuracy code assistant | Quick open mannequin for IDE and product integration |

What to make use of when?

- You need the strongest hosted repo-level solver: Use GPT-5 / GPT-5-Codex. Claude Sonnet 4.x is the closest competitor, however GPT-5 has the clearest SWE-bench Verified and Aider numbers right this moment.

- You need a full coding agent over a VM and GitHub: Use Claude Sonnet + Claude Code for repo-aware workflows and lengthy multi-step debugging periods.

- You might be standardized on Google Cloud: Use Gemini 2.5 Professional because the default coding mannequin inside Vertex AI and AI Studio.

- You want a single open common basis: Use Llama 3.1 405B Instruct while you need one open mannequin for software logic, RAG, and code.

- You need the strongest open code specialist: Use Qwen2.5-Coder-32B-Instruct, and add a smaller common LLM for non-code duties if wanted.

- You need MoE-based open fashions: Use DeepSeek-V2.5-1210 now and plan for DeepSeek-V3 as you progress to the most recent improve.

- You might be constructing IDEs or SaaS merchandise and wish a quick open code mannequin: Use Codestral 25.01 for FIM, completion, and mid-size repo work with 256k context.

GPT-5, Claude Sonnet 4.x, and Gemini 2.5 Professional now outline the higher certain of hosted coding efficiency, particularly on SWE-bench Verified and Aider Polyglot. On the identical time, open fashions similar to Llama 3.1 405B, Qwen2.5-Coder-32B, DeepSeek-V2.5/V3, and Codestral 25.01 present that it’s sensible to run high-quality coding techniques by yourself infrastructure, with full management over weights and information paths.

For many software program engineering groups, the sensible reply is a portfolio: one or two hosted frontier fashions for the toughest multi-service refactors, plus one or two open fashions for inside instruments, regulated code bases, and latency-sensitive IDE integrations.

References

- OpenAI – Introducing GPT-5 for builders (SWE-bench Verified, Aider Polyglot) (openai.com)

- Vellum, Runbear and different benchmark summaries for GPT-5 coding efficiency (vellum.ai)

- Anthropic – Claude 3.5 Sonnet and Claude 4 bulletins (Anthropic)

- Kitemetric and different third-party Claude 3.5 Sonnet coding benchmark opinions (Kite Metric)

- Google – Gemini 2.5 Professional mannequin web page and Google / Datacamp benchmark posts (Google DeepMind)

- Meta – Llama 3.1 405B mannequin card and analyses of HumanEval / MBPP scores (Hugging Face)

- DeepSeek – DeepSeek-V2.5-1210 mannequin card and replace notes; group protection on V3 (Hugging Face)

- Alibaba – Qwen2.5-Coder technical report and Hugging Face mannequin card (arXiv)

- Mistral – Codestral 25.01 announcement and benchmark summaries (Mistral AI)