This is Part 18 of an ongoing series on using Claude Code for research. But it’s also my third post on using Claude Code to replicate a paper that used natural language processing methods (specifically, a RoBERTa model with human annotators). The first part (below) set it up and included a video recording of it.

In Part 2 (linked above), I showed you the punchline: gpt-4o-mini agreed with the original RoBERTa classifier on only 69% of individual speeches, but the aggregate trends were almost identical. Partisan polarization, the country-of-origin patterns, the historical arc: all of it survived.

That result bothered me. Not because it was wrong, but because I didn’t understand why it worked. A third of the labels changed. That’s over 100,000 speeches reclassified. How do you reshuffle 100,000 labels and get the same answer?

Today’s part covers the puzzle of why these results work at all, and where we’re going next. Today I spent an hour with Claude Code trying to figure that out. Below is what happened, which is also captured in another video. I don’t finish the work, but you can again see me thinking out loud and working through the questions that remained.

Thanks for following along with this series. It’s a labor of love. All Claude Code posts are free when they first go out, though everything goes behind the paywall eventually. Normally I flip a coin on what gets paywalled, but for Claude Code, every new post starts free. If you like this series, I hope you’ll consider becoming a paying subscriber. It’s only $5/month or $50/year, the minimum Substack allows.

The Conjecture: Symmetric Noise

The other day, I reclassified a large corpus of congressional speeches and presidential communications into three categories: anti-immigration, pro-immigration and neutral. It wasn’t exactly a replication of this paper from PNAS, but more like an extension. Their original paper used a RoBERTa model trained on 7,500 speeches that around 7 or so students had read and classified, and then used that model to predict labels for the other 195,000. I extended it using gpt-4o-mini, without any human classification, to see to what degree it agreed with the original and whether that disagreement mattered. I found substantial reclassification, and yet the reclassification had no effect on aggregate trends or on the various orderings within subgroups.

I found this puzzling, but I had a conjecture: the gpt-4o-mini reclassification was coming from the “marginal” or “edge” speeches, and since the aggregate measure was a net immigration measure (pro minus anti), maybe it was just averaging out noise, leaving the underlying trends the same.

I kept thinking about this in terms of a simple analogy. Imagine two graders scoring essays as A, B, or C. They disagree on a third of the essays, but they report the same class average every semester.

That only works if the disagreements cancel. If the second grader downgrades borderline A’s to B’s and also upgrades borderline C’s to B’s at roughly the same rate, the B pile grows but the average doesn’t move. The signal is the gap between A and C. The noise is the stuff in the middle.

That’s what I suspected was happening here. The key measure in Card et al. is net tone: the percentage of pro-immigration speeches minus the percentage of anti-immigration speeches. If gpt-4o-mini reclassifies marginal Pro speeches as Neutral and marginal Anti speeches as Neutral at similar rates, the difference is preserved. The noise cancels in the subtraction.
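To make the cancellation concrete, here is a minimal sketch of the arithmetic. The counts are made up for illustration and are not from the corpus, and the function names `net_tone` and `reclassify` are hypothetical helpers rather than anything from the original pipeline.

```python
def net_tone(counts):
    """Net tone in percentage points: % pro minus % anti."""
    total = counts["pro"] + counts["neutral"] + counts["anti"]
    return 100.0 * (counts["pro"] - counts["anti"]) / total


def reclassify(counts, pro_to_neutral, anti_to_neutral):
    """Move the given number of speeches from each pole into Neutral."""
    return {
        "pro": counts["pro"] - pro_to_neutral,
        "neutral": counts["neutral"] + pro_to_neutral + anti_to_neutral,
        "anti": counts["anti"] - anti_to_neutral,
    }


# Toy corpus with invented counts.
original = {"pro": 400, "neutral": 300, "anti": 300}

# Symmetric case: 200 of 1,000 labels change, yet net tone is untouched.
symmetric = reclassify(original, pro_to_neutral=100, anti_to_neutral=100)

# Asymmetric case: unequal flows leave a residual shift in net tone.
asymmetric = reclassify(original, pro_to_neutral=100, anti_to_neutral=60)

print(net_tone(original), net_tone(symmetric), net_tone(asymmetric))
```

The symmetric case changes 20% of the labels and leaves net tone where it started. The asymmetric case shifts it only by the difference between the two flows, which is why the size of that difference is the thing to measure.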

But I wanted to test this formally, and I wanted to do it using Claude Code so that I could continue to illustrate what using Claude Code looks like in the context of actual research, which is a mix of data collection, conjecture, testing hypotheses empirically, building a pipeline of replicable code, and summarizing results in “beautiful decks” so that I can reflect on them later.

Two Tests for Symmetry

First, in the video you will see that I had Claude Code devise and then build two empirical tests.

- Test 1 computed the net reclassification impact: for each decade, what’s the difference in net tone between the original labels and the LLM labels? If the reclassification is symmetric, that difference should hover around zero.

- Test 2 decomposed the flows. Instead of looking at the net effect, we tracked the two streams separately: the Pro→Neutral reclassification rate and the Anti→Neutral reclassification rate over time. If they track each other, the cancellation has a structural explanation.
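The two tests can be sketched in a few lines. This is not the code Claude Code wrote in the video. It is a stdlib-only illustration that assumes each speech is a `(decade, original_label, llm_label)` tuple, and the rows below are invented toy data.

```python
from collections import defaultdict


def net_tone(labels):
    """Net tone in percentage points: % pro minus % anti."""
    return 100.0 * (labels.count("pro") - labels.count("anti")) / len(labels)


def flow_rate(pairs, source):
    """Share of speeches originally labeled `source` that the LLM calls neutral."""
    llm_labels = [llm for orig, llm in pairs if orig == source]
    if not llm_labels:
        return float("nan")
    return llm_labels.count("neutral") / len(llm_labels)


def symmetry_tests(rows):
    """Per decade: Test 1 (delta net tone) and Test 2 (the two flow rates)."""
    by_decade = defaultdict(list)
    for decade, orig, llm in rows:
        by_decade[decade].append((orig, llm))

    results = {}
    for decade, pairs in sorted(by_decade.items()):
        orig_labels = [orig for orig, _ in pairs]
        llm_labels = [llm for _, llm in pairs]
        results[decade] = {
            "delta_net_tone": net_tone(llm_labels) - net_tone(orig_labels),
            "pro_to_neutral": flow_rate(pairs, "pro"),
            "anti_to_neutral": flow_rate(pairs, "anti"),
        }
    return results


# Invented rows: symmetric flows in 1880, asymmetric flows in 2010.
rows = (
    [(1880, "pro", "pro")] * 3 + [(1880, "pro", "neutral")] * 1
    + [(1880, "anti", "anti")] * 3 + [(1880, "anti", "neutral")] * 1
    + [(1880, "neutral", "neutral")] * 2
    + [(2010, "pro", "pro")] * 3 + [(2010, "pro", "neutral")] * 1
    + [(2010, "anti", "anti")] * 2 + [(2010, "anti", "neutral")] * 2
    + [(2010, "neutral", "neutral")] * 2
)

results = symmetry_tests(rows)
for decade, stats in results.items():
    print(decade, stats)
```

In the toy 1880 decade the two flow rates match and the delta is zero. In the toy 2010 decade the Anti→Neutral rate is higher, so net tone drifts upward under the LLM labels.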

The results were honest. The symmetry I had hypothesized isn’t perfect: the t-test rejects exact symmetry (p < 0.001). That was a test I didn’t explicitly request, but Claude Code pursued it given my general request for a statistical test. The Anti→Neutral flow is consistently larger than Pro→Neutral, with a symmetry ratio of about 0.82. The LLM is a bit more skeptical of anti-immigration classifications than pro-immigration ones. And you could actually see that in the original transition matrix, because there was more reclassification going from Pro→Neutral just looking at the aggregate data. Plus the sample sizes were different (larger for the original Pro than the original Anti categories) and very large. So all this really did was confirm what was always there in front of my eyes.
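I don’t spell out above exactly which t-test was run or how the symmetry ratio is defined, so the following is only one plausible reading. It assumes a one-sample t-test on the per-decade gap between the two flow rates, and a ratio defined as mean Pro→Neutral over mean Anti→Neutral. The rates are invented percentages.

```python
import math
from statistics import mean, stdev

# Invented per-decade flow rates, in percent (not the real results).
pro_to_neutral = [20, 22, 18, 21]
anti_to_neutral = [25, 26, 23, 26]

# Per-decade gap; exact symmetry would put the mean gap at zero.
gaps = [anti - pro for pro, anti in zip(pro_to_neutral, anti_to_neutral)]

# One-sample t statistic for H0: mean gap == 0.
n = len(gaps)
t_stat = mean(gaps) / (stdev(gaps) / math.sqrt(n))

# Assumed definition: a ratio of 1.0 would mean perfectly symmetric flows.
symmetry_ratio = mean(pro_to_neutral) / mean(anti_to_neutral)

print(f"t = {t_stat:.2f} on {n - 1} degrees of freedom")
print(f"symmetry ratio = {symmetry_ratio:.2f}")
```

Turning the t statistic into a p-value needs a t-distribution CDF, which the standard library does not provide. `scipy.stats.ttest_1samp(gaps, 0)` would return both in one call if SciPy is available.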

But the residual is small. Mean delta net tone is about 5 percentage points, modest relative to the 40-60 point partisan swings that define the story. The mechanism is asymmetric but correlated, and averaging over large samples absorbs what’s left.

Jason Fletcher’s Question

My friend Jason Fletcher asked a good question: does the agreement break down for older speeches? Congressional language in the 1880s is nothing like the 2010s. They wrote in a different style, and who knows how contemporary LLMs handle old versus new speeches. If gpt-4o-mini is a creature of modern text, we’d expect it to struggle with 19th-century rhetoric. But since there is a lot of text in the training data, maybe it handles both the same. Maybe young students at Princeton would struggle more than LLMs with older text. It’s more an empirical conjecture than anything else.

So Claude Code built two more tests: agreement rates by decade and era-specific transition matrices. I’ll dive into the specific results all at once tomorrow, but the punchline for today was not really a total surprise to me. The overall agreement barely moves. It’s 70% in the 1880s and 69% in the modern era. The LLM handles 19th-century speech about as well as 21st-century speech, which fits my priors on LLM strengths.

But beneath that stable surface, the composition rotates dramatically. Pro agreement rises from 44% to 68%. Neutral falls from 91% to 80%. So even though they cancel out in aggregate, there are some distinct patterns. It’s a different kind of balancing act. This seemed more consistent with some of my priors about the biases of the human annotators who created the original labels. Perhaps humans are just better at labeling the present (i.e., 68% agreement on pro speeches) than the past (i.e., 44%). That is an interesting finding, and probably worth thinking about.
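The way a stable overall rate can hide a rotating composition is easy to see with a row-normalized transition matrix per era. The sketch below is illustrative only. It assumes `(era, original_label, llm_label)` tuples, the data is invented so that both eras land on the same overall agreement, and the helper names are hypothetical.

```python
from collections import Counter, defaultdict

LABELS = ("anti", "neutral", "pro")


def transition_matrix(pairs):
    """Row-normalized: share of each original label sent to each LLM label."""
    counts = Counter(pairs)
    matrix = {}
    for orig in LABELS:
        row_total = sum(counts[(orig, llm)] for llm in LABELS)
        matrix[orig] = {
            llm: counts[(orig, llm)] / row_total if row_total else float("nan")
            for llm in LABELS
        }
    return matrix


def overall_agreement(pairs):
    """Share of speeches where the two classifiers give the same label."""
    return sum(orig == llm for orig, llm in pairs) / len(pairs)


def by_era(rows):
    """Overall agreement and transition matrix for each era."""
    eras = defaultdict(list)
    for era, orig, llm in rows:
        eras[era].append((orig, llm))
    return {
        era: {"overall": overall_agreement(p), "matrix": transition_matrix(p)}
        for era, p in eras.items()
    }


# Invented data: same overall agreement in both eras, different diagonals.
rows = (
    [("early", "pro", "pro")] * 4 + [("early", "pro", "neutral")] * 6
    + [("early", "neutral", "neutral")] * 10
    + [("early", "anti", "anti")] * 7 + [("early", "anti", "neutral")] * 3
    + [("late", "pro", "pro")] * 6 + [("late", "pro", "neutral")] * 4
    + [("late", "neutral", "neutral")] * 8 + [("late", "neutral", "pro")] * 2
    + [("late", "anti", "anti")] * 7 + [("late", "anti", "neutral")] * 3
)

summary = by_era(rows)
for era, stats in summary.items():
    diagonal = {label: stats["matrix"][label][label] for label in LABELS}
    print(era, round(stats["overall"], 2), diagonal)
```

The diagonal of each matrix is the per-category agreement rate, so comparing diagonals across eras shows the rotation even when the overall number sits still.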

Two More Things Running Overnight

But then once we had the symmetry story, I wanted to push further. Here are the two ideas I went with in the video recording. Both required collecting more data, and that gave me a chance to showcase some old tricks (i.e., using gpt-4o-mini for inexpensive batch requests, but using Claude Code to create the scripts so that the viewer/reader could see for themselves how easy it was) and some new ones as well. Specifically:

- Classifying on a thermometer. Instead of classifying speeches into three bins, I sent all 305,000 speeches back to OpenAI to be scored on a more continuous scale from -100 (anti-immigration) to +100 (pro-immigration). It is technically still multi-valued, but with a range from -100 to +100 we will at least get a nice picture of what this distribution of speeches looks like according to gpt-4o-mini. The idea was born out of my hypothesis that the reclassification was happening for the marginal speeches. Specifically, if the reclassified speeches cluster somewhat symmetrically around zero on this scale, then that would be evidence that they were always marginal cases sitting on the decision boundary, and that the reclassification was merely hitting noisy speeches that on average canceled each other out, leaving the trends largely intact in the extension. These batches are processing at OpenAI now.

-

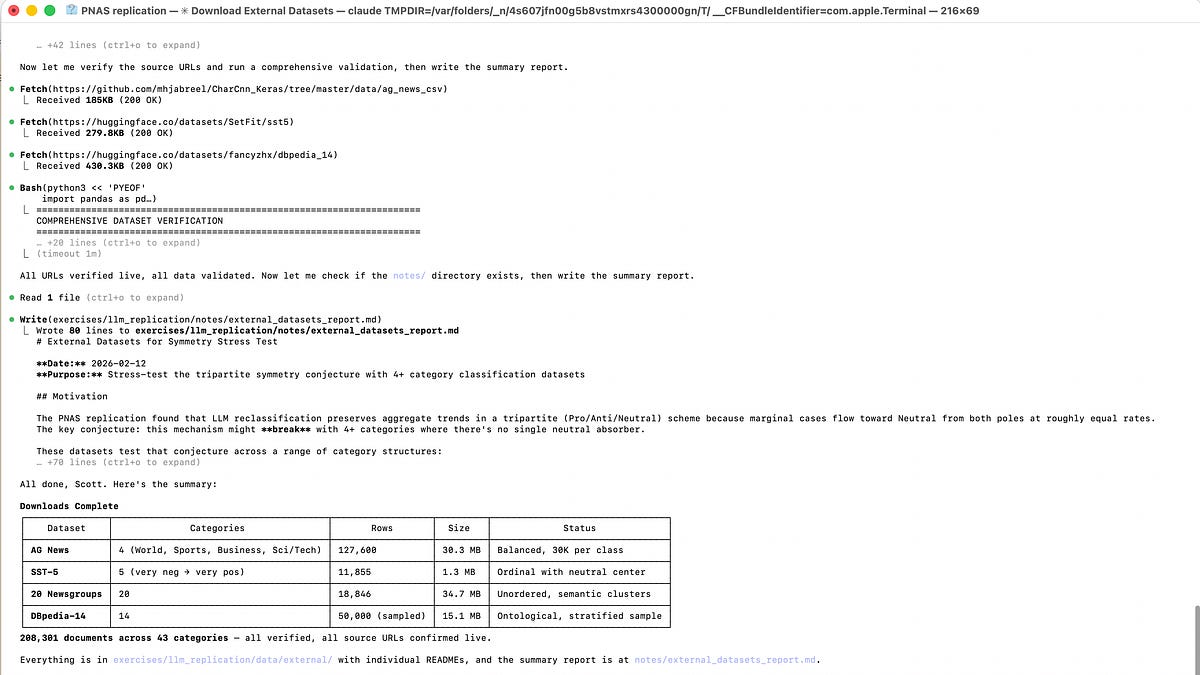

Exterior datasets. The opposite a part of my conjecture, although, needed to do with the unique classification being anti, impartial and pro-immigration. Was the discovering that the LLM reclassification was each substantial and had no impact in any respect on the unique discovering merely an artifact of that tripartite classification? Why? As a result of that authentic classification has a built-in symmetry mechanism — two poles and a center — which must break with 4 or extra classes. As an illustration, if the classification was not “ordered” however was extra of a definite classes (e.g., by race), then it’s not clear you must even theoretically get the identical end result as what we discovered. So to check this, I spun up a second Claude Code agent utilizing

--dangerously-skip-permissionswithin the terminal to go looking GitHub, Kaggle, and replication packages for datasets with 4+ human-annotated classes. On the time of this writing, that course of is completed. It solely took 4 minutes to internet crawl, discover these datasets, and retailer them domestically. I’ll evaluation this tomorrow on a brand new submit.
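For readers who have not used batch requests before, here is a rough sketch of what preparing the thermometer job can look like. It only builds the JSONL input and parses a reply, and it makes no API calls. The line layout (`custom_id`, `method`, `url`, `body`) follows the OpenAI Batch API input format. The prompt wording, the `temperature` setting, and the helper names are assumptions for illustration, not the ones used in the video.

```python
import json

# Hypothetical prompt wording, not the prompt used in the actual run.
SYSTEM_PROMPT = (
    "Rate the speech's tone toward immigration on an integer scale from -100 "
    "(strongly anti-immigration) to 100 (strongly pro-immigration). "
    "Reply with the integer only."
)


def batch_line(speech_id, text, model="gpt-4o-mini"):
    """One JSONL line in the Batch API input format for a chat completion."""
    request = {
        "custom_id": str(speech_id),  # used to match results back to speeches
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": model,
            "temperature": 0,
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": text},
            ],
        },
    }
    return json.dumps(request)


def parse_score(reply):
    """Return the score as an int in [-100, 100], or None if unusable."""
    try:
        score = int(reply.strip())
    except ValueError:
        return None
    return score if -100 <= score <= 100 else None


# Toy speeches standing in for the real corpus.
speeches = [("s1", "Toy speech text A."), ("s2", "Toy speech text B.")]
jsonl = "\n".join(batch_line(sid, text) for sid, text in speeches)
print(jsonl)
```

Validating replies with something like `parse_score` matters at this scale, since a model will occasionally return text instead of a bare integer.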

What’s Next

Tomorrow I’ll have thermometer results. I’ll download them on video, analyze them and report findings in a new deck. I will also do the same in real time with the external datasets. If the thermometer shows reclassified speeches clustering near zero, that’s strong evidence for the marginal-cases story. If the 4+ category datasets show the same pattern, the hypothesis generalizes. If they don’t, tripartite classification is special and that’s interesting too.
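One simple way to check the “clustering near zero” prediction is to compare the reclassified speeches with the ones whose labels did not change. The sketch below is an assumption about how that comparison could be set up, not the analysis itself. The scores are invented, and the ±25 band is an arbitrary cutoff chosen only for illustration.

```python
from statistics import mean


def near_zero_share(scores, band=25):
    """Share of scores within +/- band of zero (band is an arbitrary choice)."""
    return sum(abs(s) <= band for s in scores) / len(scores)


def summarize(records, band=25):
    """records: iterable of (thermometer_score, was_reclassified)."""
    groups = {"reclassified": [], "stable": []}
    for score, changed in records:
        groups["reclassified" if changed else "stable"].append(score)
    return {
        name: {
            "n": len(scores),
            "mean": mean(scores),
            "near_zero_share": near_zero_share(scores, band),
        }
        for name, scores in groups.items()
        if scores
    }


# Invented scores: reclassified speeches hug zero, stable ones spread out.
records = (
    [(s, True) for s in (-20, -10, 5, 15, 10, 0)]
    + [(s, False) for s in (-80, -60, 70, 90, 0, 10)]
)

summary = summarize(records)
for name, stats in summary.items():
    print(name, stats)
```

If the marginal-cases story holds, the reclassified group should show a mean close to zero and a much higher near-zero share than the stable group.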

This is the part of research I love: when you have a conjecture and the data to test it is literally being collected overnight. But what I also love is that this whole part of it was facilitated by Claude Code, which is giving me back time to think instead of undertaking the tedious tasks of coding this up.

Thanks again for reading and supporting the Substack, which is a labor of love! I hope you find these exercises useful. Please consider becoming a paying subscriber! At $5/month, it’s quite a deal! Tune in tomorrow to see what I find!