I’m going to oscillate between essays about Claude Code, explainers about Claude Code, and video tutorials using Claude Code. Today is an essay. Like the other Claude Code posts, this one is free to everyone, but note that after a few days, everything on this substack goes behind a paywall. For now, though, let me show you a progression of pictures of me trying to support the Patriots on Sunday by wearing all of the Boston sports memorabilia I own. Sadly, it didn’t help at all. Or did it? What would the score have been had I not worn all this, I wonder?

Thanks everyone for supporting this substack! For only the cost of a coffee each month, you too can get swarms of emails about Claude Code, causal inference, links to a billion things, and pictures of me wearing an apron. So consider becoming a paying subscriber!

Tyler Cowen has this move he’s been doing for years. Every time something disruptive shows up (crypto, remote work, AI) he asks the same question: What’s the equilibrium? Not the partial equilibrium. Not your best response. The Nash equilibrium, where everyone is playing their best response to everyone else’s best response, and nobody has any incentive to deviate. Where does this thing settle?

I’ve been using Claude Code since mid-November. I remember the first time: I used it to fuzzy impute gender and race in a dataset of names. I thought I was just looking at another chatbot. But I kept using it, kept taking more steps, and kept being surprised at what I was doing with it. By late November, I’d used it on a project that was sufficiently hard, and that was when I knew. The speed, the range of tasks, the quality: none of it had an easily discernible upper bound. More work, less time, better work, but also different work. Tasks I wouldn’t have attempted because the execution cost was too high suddenly became feasible. Some combination of more, faster, better, and new, all the time. It was, for me, the first real shift in marginal product per unit of time I’d experienced, even counting ChatGPT. I felt a genuine urgency to tell every applied social scientist who would listen: You have to try Claude Code. You have to trust me.

But here’s the thing about being an economist. You can feel massive surplus and simultaneously know that surplus is exactly what competitive forces eat. In competitive markets, anyone who can enter will enter, so long as their expected gains exceed the costs of entry. They keep entering until the marginal entrant earns zero economic profit. The question isn’t whether Claude Code is valuable right now. The question is what happens when everyone in your field has it.

I think four things happen.

The zero profit condition is not a theory. It’s closer to a force of nature. You see it in restaurants, in retail, in academic subfields. Wherever surplus is visible and barriers are surmountable, people show up. And when enough people show up, the surplus gets competed away.
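The free-entry logic above can be sketched as a toy model. Everything in it is an assumption made for illustration: a fixed pool of surplus split equally among entrants, a one-time entry cost, and the hypothetical function names `entrants_at_equilibrium` and `profit_per_entrant`. It is not a model of any actual market.

```python
def entrants_at_equilibrium(total_surplus: float, entry_cost: float) -> int:
    """Count entrants under free entry in a toy equal-split model.

    Assumption: n entrants each earn total_surplus / n and pay entry_cost.
    Entry continues while the next entrant would earn non-negative profit.
    """
    if entry_cost <= 0:
        raise ValueError("entry_cost must be positive, or entry never stops")
    n = 0
    while total_surplus / (n + 1) - entry_cost >= 0:
        n += 1
    return n


def profit_per_entrant(total_surplus: float, entry_cost: float, n: int) -> float:
    """Economic profit of each of n entrants in the same toy model."""
    return total_surplus / n - entry_cost


# Illustrative numbers only: 100 units of surplus, entry costs 4 units.
n_star = entrants_at_equilibrium(total_surplus=100.0, entry_cost=4.0)
print("first mover profit:", profit_per_entrant(100.0, 4.0, 1))
print("entrants at equilibrium:", n_star)
print("profit at equilibrium:", profit_per_entrant(100.0, 4.0, n_star))
```

With these made-up numbers, the first mover earns 96, entry stops at 25 participants, and each participant then earns exactly zero economic profit.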

Think about spreadsheets. When VisiCalc and then Lotus 1-2-3 arrived, the early adopters had an enormous advantage. Accountants who could use a spreadsheet were worth more than accountants who couldn’t. That advantage was real and large. But it didn’t last, because the barriers to learning spreadsheets were low and the benefits were visible. Eventually everyone learned spreadsheets. The competitive advantage disappeared. But accounting got better. The work itself improved. The equilibrium wasn’t higher rents for spreadsheet users. It was higher quality work across the board, at the same compensation.

I think that’s where Claude Code is headed for applied social science, but I want to be honest about what “the work gets better” actually means, because the evidence is not as clean as the enthusiasm suggests. A pre-registered RCT by METR found that experienced open-source developers were 19% slower with AI coding tools on their own repositories. And here’s the part that should make every early adopter uncomfortable: these developers believed they were 20% faster. The perception-reality gap was 39 percentage points.

Now, important caveats. That study had 16 developers using Cursor Pro, not Claude Code, on mature software repositories they already knew intimately. These weren’t applied social scientists doing empirical research. The external validity to our context is genuinely uncertain. But the finding that people systematically overestimate their AI-assisted productivity is worth sitting with, because none of us are exempt from that bias. CodeRabbit’s analysis of 470 GitHub pull requests found AI-generated code had 1.7 times more issues than human-written code. And Anthropic’s own research found that developers using AI scored 17% lower on code comprehension quizzes than those who coded by hand.

But the spreadsheet analogy actually predicts this. Spreadsheets didn’t just make accounting better; they also created entirely new categories of error. Circular references. Hidden formula errors. The Reinhart-Rogoff Excel error that influenced European austerity policy. Every productivity tool creates new failure modes alongside genuine improvements. The honest prediction for AI in research is probably this: the ceiling of what’s possible rises, the floor of quality rises for people who previously couldn’t do the work, and a new category of AI-assisted error emerges that we will need norms and institutions to catch. The work gets better on average. But overconfidence is a real and present risk, especially for people who already know what they’re doing.

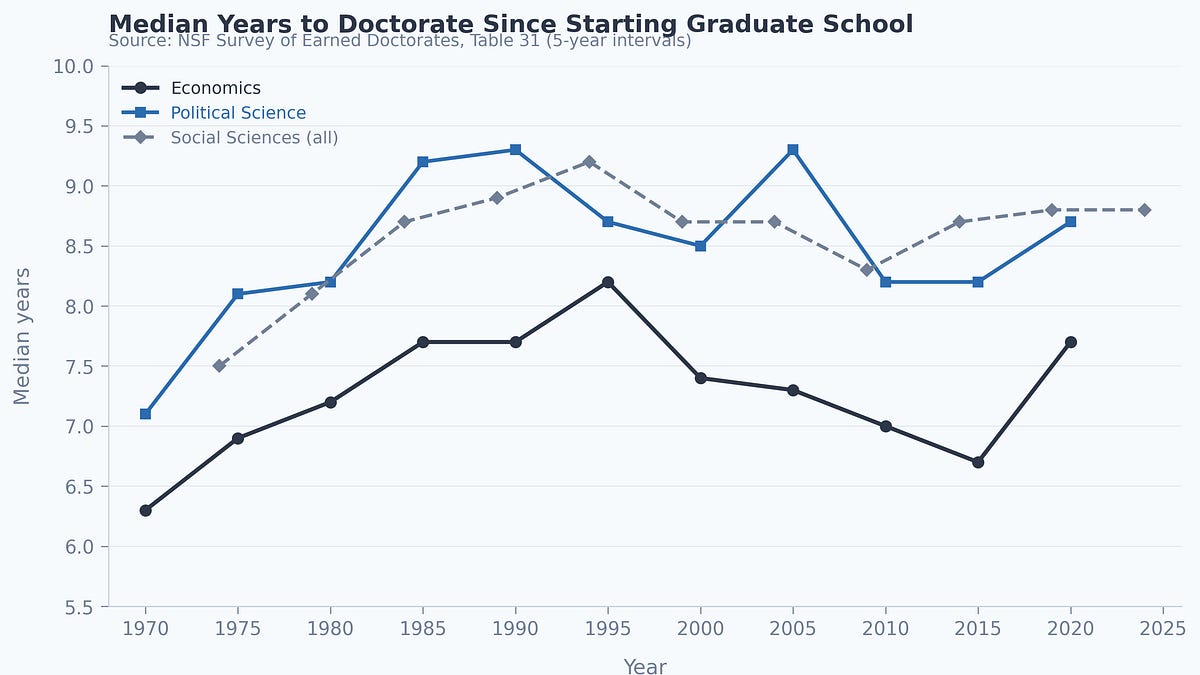

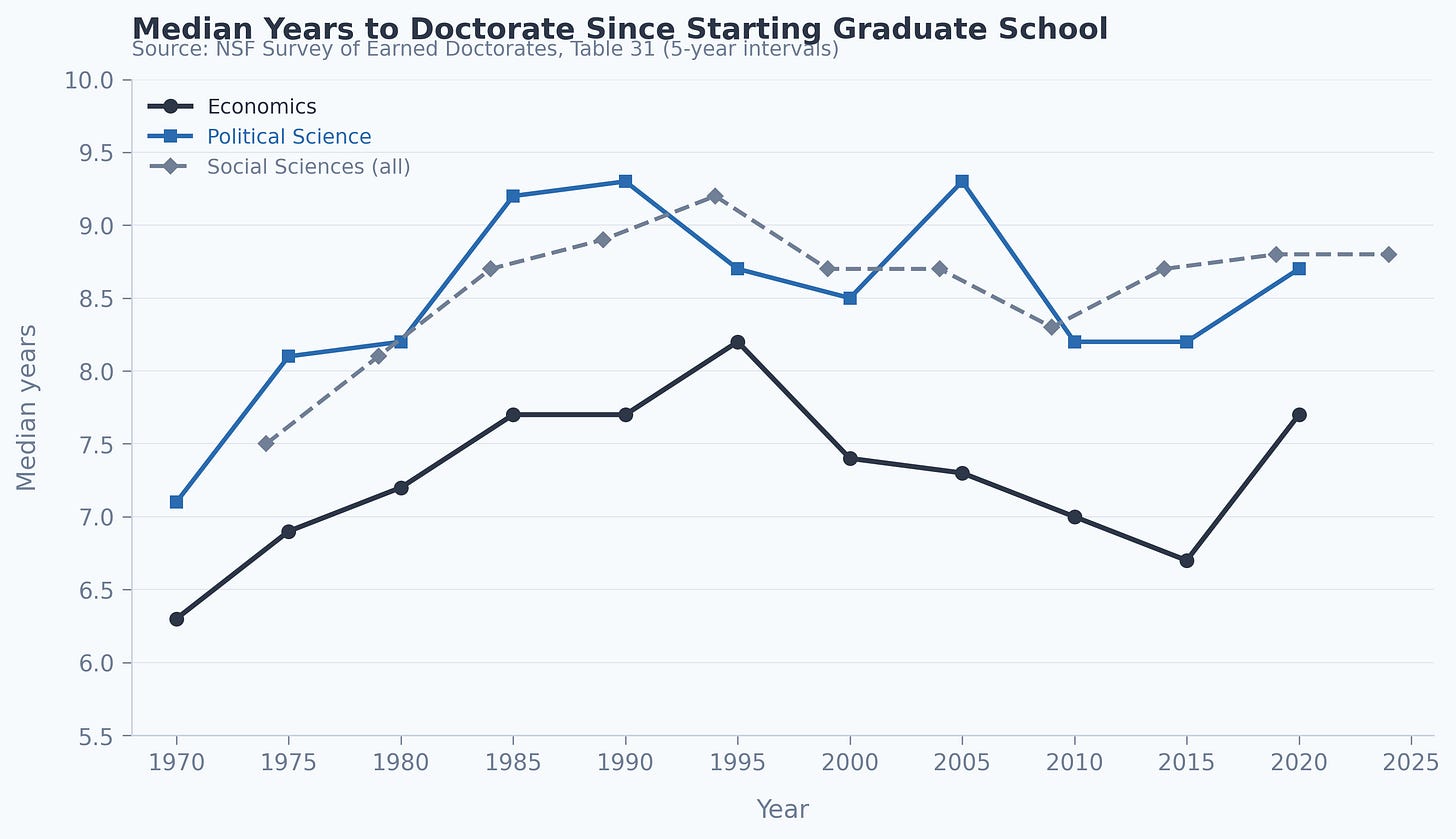

Look at the figure. The NSF’s Survey of Earned Doctorates shows that the median economics PhD takes about 6.5 to 7.5 years from starting graduate school: it peaked at 8.2 years in 1995, fell to 6.7 by 2015, and jumped back to 7.7 in 2020 (possibly driven by delays caused by the pandemic’s effect on the market for new PhDs). Political science has even longer times, ranging from 8 to 9 years. Stock, Siegfried, and Finegan tracked 586 students who entered 27 economics PhD programs in Fall 2002 and found that by October 2010, only 59% had earned their PhD, while 37% had dropped out entirely.

That was before AI agents. And it was before the economics job market collapsed. Paul Goldsmith-Pinkham’s tracking of JOE postings shows total listings fell to 604 as of November 2025, a 50% decline from 2024 and 20% below COVID levels. Federal Reserve and bank regulator postings: zero. Not low. Zero. EconJobMarket data shows North American assistant professor positions down 39% year-over-year. Roughly 1,400 new economics PhDs are now competing for about 400 tenure-track slots.

Add the enrollment cliff (WICHE projects a 13% decline in high school graduates from peak by 2041) and the NIH overhead cap that would redirect $4 to $5 billion annually away from research universities, and you have a profession being squeezed from every direction simultaneously.

Now consider the evidence on who AI helps. None of these studies are about Claude Code specifically; we don’t yet have RCTs on AI agents for applied social scientists, and that absence is itself worth noting. But the pattern across adjacent domains is consistent. Brynjolfsson, Li, and Raymond studied nearly 5,200 customer support agents using GPT-based tools (QJE 2025) and found that AI increased productivity by 14% on average, but by 34% for the least experienced workers. Two months with AI equaled six months without it. Mollick and colleagues found the same pattern at Boston Consulting Group using GPT-4: 12.2% more tasks, 25.1% faster, 40% higher quality, with below-average performers gaining 43% versus 17% for top performers. But, and this is crucial, when consultants used GPT-4 on analytical tasks requiring judgment outside the AI’s capability frontier, they performed 19 percentage points worse than consultants without AI. Mollick calls this the jagged frontier. There are tasks where AI makes you better and tasks where it makes you worse, and you cannot always tell which is which in advance. These were GPT-3 and GPT-4 era chatbots, not agentic tools like Claude Code that can execute code, manage files, and run multi-step research workflows. Whether that difference narrows or widens the frontier is an open empirical question.

I want to be careful here, though, because there’s a meaningful question about whether any of these studies tell us what we need to know about Claude Code specifically. Claude Code is not a chatbot. It’s an AI agent: it runs on your machine, executes code, manages files, searches the web, and builds and debugs multi-step research workflows autonomously. The Brynjolfsson study was about a GPT-3.5 chatbot assisting customer support reps. The Mollick study was about GPT-4 answering consulting prompts. The METR study was about Cursor Pro, an AI coding assistant, on software repositories. None of them studied what happens when an applied social scientist has an agent that can independently clean data, run regressions, build figures, check replication packages, and iterate on all of it. We simply don’t have that study yet. The external validity from chatbots and code assistants to agentic research tools is genuinely uncertain, and anyone who tells you otherwise is guessing.

What we can say is this: the consistent pattern across every adjacent study is that AI helps the least experienced the most, on tasks within the frontier. Graduate students, who have the least experience, the most to learn, and the worst job market in modern memory, are the population most likely to benefit, whatever the magnitude turns out to be. The case for getting these tools into their hands doesn’t require believing AI helps everyone equally, or even knowing the precise effect size. It requires believing the direction is right. And on that, the evidence across every domain points the same way.

I’ve always believed in an efficient market hypothesis for good ideas. Not ideas in the abstract: specific, actionable research opportunities. The natural experiment you noticed. The dataset nobody else has used. The policy variation that creates clean identification. If competitive capital markets hack away opportunities until we should be surprised to find a dollar on the ground, why wouldn’t the same be true for research ideas? In a competitive academic market, truly great ideas don’t sit around unclaimed for long unless some barrier protects them.

The only thing that protected me from being constantly scooped was that I worked in an area most people found repugnant: the economics of sex work. For years I was largely off alone with maybe ten other economists. All of us were either coauthors or sufficiently spread out that we didn’t overlap. Repugnance was my barrier to entry, and it was social, not informational or financial. It’s why it was such a shock when, one day in the spring of 2009, I read in the Providence Journal that Rhode Island had accidentally legalized indoor sex work twenty-nine years earlier, and a judge named Elaine Bucci had ruled it legal in 2003. I wrote Manisha Shah and said holy crap. That project took nine years of my life.

But repugnance is endogenous. It’s a feeling, not a government-mandated monopoly permit. And a tool like Claude Code doesn’t just compress time; it changes what you’re willing to attempt. It finds data sources you didn’t know existed. It writes and debugs code for empirical strategies you wouldn’t have tried because the execution cost was too high. It builds presentations and documentation that used to take days. The window for any given research opportunity is shrinking not just because people can work faster, but because the set of tasks people are willing to undertake has expanded. The same tool that lets you do more lets everyone else do more.

Now, how much compression are we actually talking about? Acemoglu’s task-based framework is the right way to think about this: AI doesn’t automate jobs, it automates tasks, and the aggregate effect depends on what share of tasks it can profitably handle. He estimates about 5% of economic tasks and a GDP increase of roughly 1.5% over the next decade. The Solow Paradox (you can see the computer age everywhere except in the productivity statistics) may well repeat. The research production function may not shift as dramatically as it feels from the inside.

But even if the compression is more modest than it feels, the direction is clear. Execution barriers are falling. If you have a genuinely good idea and the data is available, the expected time before someone else executes it is shorter than it was two years ago. You may not need to panic. But you probably shouldn’t sit on it either. Whether the competitive pressure on ideas is rising by a lot or a little, the best response is the same: move.

So if the equilibrium involves widespread adoption, what’s slowing it down? Price.

Claude Max costs $100 a month for 5x usage or $200 a month for 20x. To use Claude Code seriously (all day, across research and teaching) you need Max. The lower tiers hit rate limits that destroy momentum at the worst moments. Imagine if R or Stata simply stopped working without warning while you were in a flow state under a deadline. That’s what the $20/month tier feels like. Two hundred dollars a month is $2,400 a year. Graduate student stipends run $25,000 to $35,000 before taxes. That’s 8 to 10% of after-tax income. The people who would benefit most (the least experienced, the evidence says) are the ones least able to afford it.
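The affordability arithmetic can be checked with a short sketch. The subscription price and stipend range come from the paragraph above; the flat 10% effective tax rate and the helper name `cost_share` are assumptions added for illustration, since the essay does not state a tax rate.

```python
MAX_MONTHLY_USD = 200                   # the 20x Max tier quoted above
ANNUAL_COST_USD = MAX_MONTHLY_USD * 12  # $2,400 a year

# Assumption for illustration only: a flat 10% effective tax rate.
ASSUMED_TAX_RATE = 0.10


def cost_share(annual_cost: float, gross_stipend: float, tax_rate: float) -> float:
    """Annual subscription cost as a fraction of after-tax stipend."""
    after_tax = gross_stipend * (1 - tax_rate)
    return annual_cost / after_tax


for stipend in (25_000, 35_000):
    share = cost_share(ANNUAL_COST_USD, stipend, ASSUMED_TAX_RATE)
    print(f"${stipend:,} stipend: {share:.1%} of after-tax income")
```

Under that assumed tax rate the share works out to roughly 7.6% at the top of the stipend range and 10.7% at the bottom, close to the 8 to 10% figure; a different tax assumption shifts the numbers accordingly.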

The economics of the solution are textbook. Graduate students have lower willingness to pay. Marginal cost of serving them is near zero at Anthropic’s scale. You can’t resell Claude Code tokens, so no arbitrage is possible. These are the exact conditions for welfare-improving third-degree price discrimination. Anthropic already has a Claude for Education program, with Northeastern, LSE, and Champlain College as early adopters. Good. But push harder: work directly with graduate departments, not just universities. Get PhD students on Max-equivalent plans at prices their stipends can absorb.

But this isn’t only Anthropic’s problem. Departments should be building Max subscriptions into PhD funding packages. The ROI is straightforward: if the Brynjolfsson and Mollick numbers hold even partially for tasks within the frontier, we’re talking meaningful improvements in speed and quality for the students who need it most, plus faster time to degree, which saves a year of stipend, office space, and advising bandwidth for every year shaved. A Max subscription is $2,400 a year. That’s less than one conference trip. In a market where JOE postings are down 50% and funding is under threat from every direction, anything that makes your students more competitive and your program more efficient is not optional. If I were a department chair, this is what I’d be working on right now.

And for faculty: if your university won’t let you run Claude Code on their machines (and my hunch is most won’t, once they understand what AI agents actually do on a system) then get your own computer and your own subscription. Universities lag on everything. They won’t let you have Dropbox. They won’t let you upgrade your operating system. They are not going to be fine with an AI agent executing arbitrary code on their network. That’s their right. But it means you will have to do your real work on your own machine. This isn’t dystopian. This is probably this fall.

So where does this leave you? Two scenarios.

In the first, adoption stays slow. Repugnance and opposition towards AI persist, institutional inertia wins, and most of your peers don’t adopt. In that world, early adopters maintain positive surplus for a long time. You’re strapped to a jet engine while everyone else pedals.

In the second, adoption accelerates. Norms shift, prices fall, tools improve. Most of your peers adopt. Now the surplus gets competed away, and the payoff from not adopting becomes actively negative.

This isn’t an essay claiming AI will transform everything. The evidence is more complicated than that. The gains are uneven. Experienced researchers may not benefit as much as they think, and they are particularly bad at knowing when AI is helping versus hurting. Judgment-heavy tasks remain stubbornly human. The macro productivity effects may be modest. But the equilibrium logic doesn’t care about any of that. It doesn’t require everyone to gain equally. It requires enough people to gain enough that non-adoption becomes costly. And the answer to that is almost certainly yes. Wendell Berry refused to use a computer to write. He was the exception, not the equilibrium.

In both scenarios, the best response is the same: adopt. The payoff matrix is asymmetric. The downside of adopting early is small: you spent some time and money learning a tool. The downside of not adopting while others do is large: you’re less productive than your competition in a market where the zero profit condition will not be kind to you.
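One way to make the dominance argument concrete is a toy payoff table. The numbers below are hypothetical, chosen only to match the ordering described above (a small learning cost, a large penalty for falling behind); they are not estimates, and the names `PAYOFFS`, `best_response`, and `is_dominant` are illustrative.

```python
# Hypothetical payoffs to you, in arbitrary units, net of a learning cost of 1.
# Keys are (your_action, peers_scenario).
PAYOFFS = {
    ("adopt", "peers_slow"): 9,    # early-adopter surplus persists
    ("adopt", "peers_fast"): -1,   # surplus competed away; only the learning cost remains
    ("wait", "peers_slow"): 0,     # status quo
    ("wait", "peers_fast"): -10,   # you fall behind the field
}

ACTIONS = ("adopt", "wait")
SCENARIOS = ("peers_slow", "peers_fast")


def best_response(scenario: str) -> str:
    """Action with the highest payoff in a given adoption scenario."""
    return max(ACTIONS, key=lambda action: PAYOFFS[(action, scenario)])


def is_dominant(action: str) -> bool:
    """True if the action is the best response in every scenario."""
    return all(best_response(s) == action for s in SCENARIOS)


for scenario in SCENARIOS:
    print(scenario, "->", best_response(scenario))
print("adopt is dominant:", is_dominant("adopt"))
```

Any payoffs with the same ordering give the same answer; the conclusion depends on the asymmetry, not on the particular magnitudes.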

There is no scenario in which I am not paying for Max.