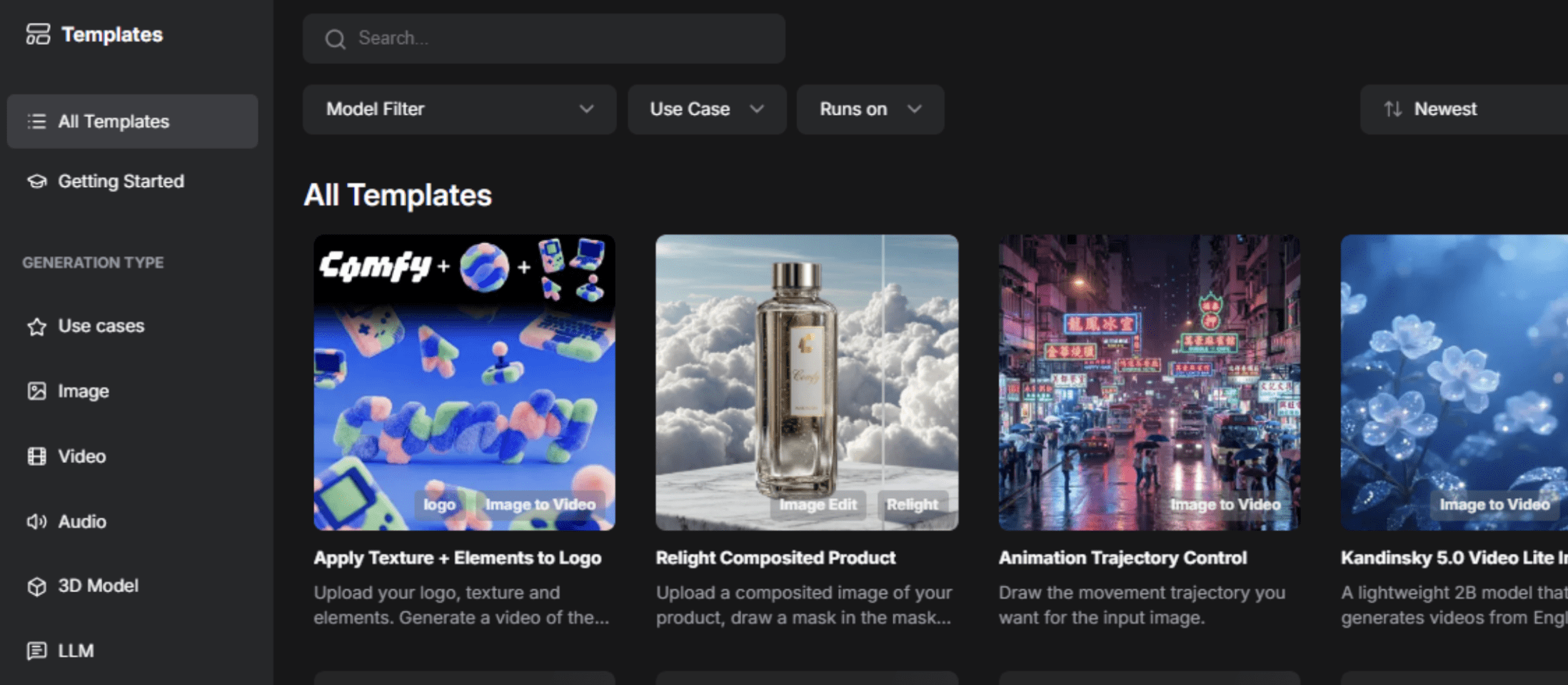

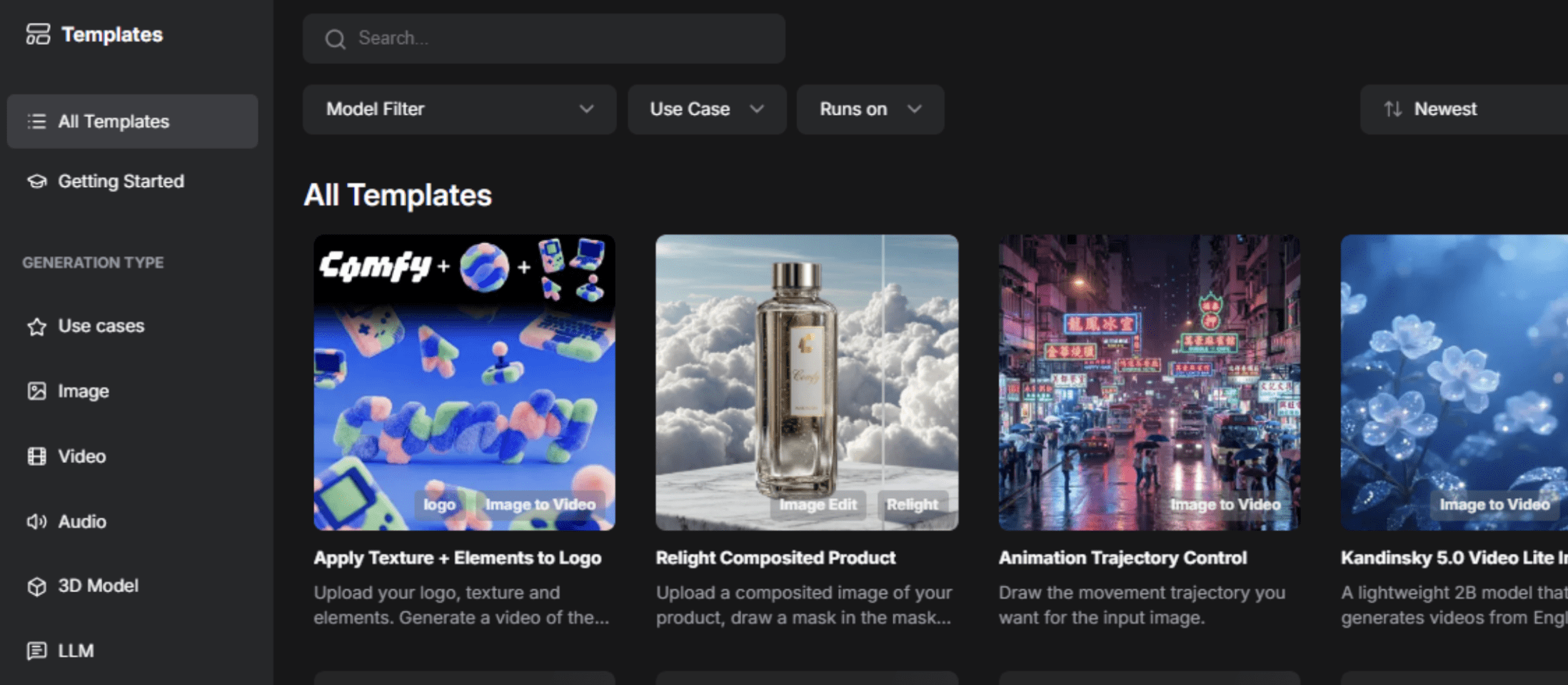

Image by Author

ComfyUI has changed how creators and developers approach AI-powered image generation. Unlike traditional interfaces, ComfyUI’s node-based architecture gives you unprecedented control over your creative workflows. This crash course will take you from complete beginner to confident user, walking you through every essential concept, feature, and practical example you need to master this powerful tool.


ComfyUI is a free, open-source, node-based interface and backend for Stable Diffusion and other generative models. Think of it as a visual programming environment where you connect building blocks (called “nodes”) to create complex workflows for generating images, videos, 3D models, and audio.

Key advantages over traditional interfaces:

- You build workflows visually, without writing code, while keeping full control over every parameter.

- You can save, share, and reuse entire workflows, because the workflow metadata is embedded in the generated files.

- There are no hidden costs or subscriptions; it is free, open source, and fully customizable with custom nodes.

- It runs locally on your machine, allowing faster iteration and lower operational costs.

- Its functionality is nearly limitless, since custom nodes can extend it to meet your specific needs.
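To see the embedded metadata in practice: ComfyUI’s Save Image node writes the graph into the PNG as text chunks, typically under the keys `prompt` and `workflow`. The sketch below reads those chunks using only the standard library. It assumes the metadata was stored as plain, uncompressed `tEXt` chunks (compressed `zTXt`/`iTXt` chunks are skipped), and the helper names `read_png_text_chunks` and `load_workflow` are hypothetical.

```python
import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict[str, str]:
    """Return {keyword: text} for every uncompressed tEXt chunk in a PNG byte string."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        payload = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # tEXt payload is "keyword\0text", both Latin-1 encoded.
            keyword, _, text = payload.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        if chunk_type == b"IEND":
            break
        pos += 8 + length + 4  # skip header, payload, and CRC
    return texts


def load_workflow(path: str):
    """Hypothetical helper: parse the 'workflow' JSON from a ComfyUI output PNG, if present."""
    with open(path, "rb") as f:
        chunks = read_png_text_chunks(f.read())
    return json.loads(chunks["workflow"]) if "workflow" in chunks else None
```

Dragging such a PNG back onto the ComfyUI canvas restores the workflow from the same data, which is what makes sharing a single image enough to share a whole graph.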

# Choosing Between Local and Cloud-Based Installation

Before exploring ComfyUI in more detail, you need to decide whether to run it locally or use a cloud-based version.

| Local Installation | Cloud-Based Installation |
|---|---|
| Works offline once installed | Requires a constant internet connection |
| No subscription fees | May involve subscription costs |
| Full data privacy and control | Less control over your data |
| Requires powerful hardware (especially a good NVIDIA GPU) | No powerful hardware required |
| Manual installation and updates required | Automatic updates |
| Limited by your computer’s processing power | Potential speed limitations during peak usage |

If you are just starting out, it is recommended that you begin with a cloud-based solution to learn the interface and concepts. As your skills grow, consider moving to a local installation for greater control and lower long-term costs.

# Understanding the Core Architecture

Before working with nodes, it is important to understand the theoretical foundation of how ComfyUI operates. Think of it as a multiverse of two universes: the red, green, blue (RGB) universe (what we see) and the latent space universe (where computation happens).

// The Two Universes

The RGB universe is our observable world. It contains regular images and data that we can see and understand with our eyes. The latent space (the AI universe) is where the “magic” happens. It is a compressed mathematical representation that models can understand and manipulate. It is chaotic, filled with noise, and contains the abstract mathematical structure that drives image generation.

// Using the Variational Autoencoder

The variational autoencoder (VAE) acts as a portal between these universes.

- Encoding (RGB → Latent) takes a visible image and converts it into the abstract latent representation.

- Decoding (Latent → RGB) takes the abstract latent representation and converts it back into an image we can see.

This concept is important because many nodes operate within a single universe, and understanding it will help you connect the right nodes together.
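The portal also compresses. For Stable Diffusion 1.5 and SDXL, the VAE maps each 8×8 block of RGB pixels to one latent position with 4 channels (Flux and SD 3.x VAEs use 16 latent channels with the same 8× downsampling). The arithmetic below is a sketch under those assumptions; `latent_shape` and `compression_ratio` are hypothetical helpers, not ComfyUI functions.

```python
def latent_shape(width: int, height: int, channels: int = 4, factor: int = 8) -> tuple[int, int, int]:
    """Return (channels, latent_height, latent_width) for an RGB image of the given size.

    Assumes an SD 1.5/SDXL-style VAE: 8x spatial downsampling, 4 latent channels.
    """
    if width % factor or height % factor:
        raise ValueError(f"width and height should be multiples of {factor}")
    return channels, height // factor, width // factor


def compression_ratio(width: int, height: int, channels: int = 4, factor: int = 8) -> float:
    """How many RGB values (3 per pixel) correspond to each latent value."""
    c, h, w = latent_shape(width, height, channels, factor)
    return (3 * width * height) / (c * h * w)


print(latent_shape(1024, 1024))       # (4, 128, 128)
print(compression_ratio(1024, 1024))  # 48.0
```

This is why sampling happens in latent space: the KSampler works on a tensor dozens of times smaller than the final image, and only VAE Decode pays the cost of producing full-resolution pixels.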

// Defining Nodes

Nodes are the fundamental building blocks of ComfyUI. Each node is a self-contained function that performs a specific task. Nodes have:

- Inputs (left side): where data flows in

- Outputs (right side): where processed data flows out

- Parameters: settings you adjust to control the node’s behavior
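Under the hood, each node is a Python class. The sketch below follows the convention ComfyUI custom nodes commonly use: an `INPUT_TYPES` classmethod declares inputs and parameters, `RETURN_TYPES` declares outputs, and `FUNCTION` names the method to call. The node itself, `AppendStyleSuffix`, is a hypothetical example, and the class runs as plain Python without ComfyUI installed.

```python
class AppendStyleSuffix:
    """Hypothetical node: appends a style phrase to a prompt string."""

    @classmethod
    def INPUT_TYPES(cls):
        # Inputs and parameters (widgets) appear on the node's left side in the editor.
        return {
            "required": {
                "text": ("STRING", {"multiline": True}),
                "suffix": ("STRING", {"default": "highly detailed"}),
                "repeat": ("INT", {"default": 1, "min": 1, "max": 3}),
            }
        }

    RETURN_TYPES = ("STRING",)  # outputs appear on the node's right side
    FUNCTION = "run"            # the method ComfyUI calls when the node executes
    CATEGORY = "examples/text"  # where the node is listed in the add-node menu

    def run(self, text, suffix, repeat):
        # Results are returned as a tuple, one entry per RETURN_TYPES item.
        return (", ".join([text] + [suffix] * repeat),)


# A custom-node package typically exposes its nodes through this mapping.
NODE_CLASS_MAPPINGS = {"AppendStyleSuffix": AppendStyleSuffix}

print(AppendStyleSuffix().run("a cat", "oil painting", 2))  # ('a cat, oil painting, oil painting',)
```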

// Identifying Color-Coded Data Types

ComfyUI uses a color system to indicate what type of data flows between nodes:

| Color | Data Type | Example |
|---|---|---|
| Blue | RGB Images | Regular visible images |
| Pink | Latent Images | Images in latent representation |
| Yellow | CLIP | Text encoder that converts prompts into machine language |
| Red | VAE | Model that converts between universes |
| Orange | Conditioning | Prompts and control instructions |
| Green | Text | Simple text strings (prompts, file paths) |
| Purple | Models | Checkpoints and model weights |
| Teal/Turquoise | ControlNets | Control data for guiding generation |

Understanding these colors is essential: they tell you instantly whether two nodes can connect.
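The colors are a visual cue for underlying type names. A simplified model of the rule the editor enforces is that an output can feed an input only when their types match. The type names below (`IMAGE`, `LATENT`, and so on) are the ones ComfyUI uses internally, with colors following the table in this article; `TYPE_COLORS`, `can_connect`, and `explain` are illustrative, and real ComfyUI has special cases (such as wildcard inputs) that this sketch ignores.

```python
# Illustrative mapping from ComfyUI type names to the colors in the table.
TYPE_COLORS = {
    "IMAGE": "blue",
    "LATENT": "pink",
    "CLIP": "yellow",
    "VAE": "red",
    "CONDITIONING": "orange",
    "STRING": "green",
    "MODEL": "purple",
    "CONTROL_NET": "teal",
}


def can_connect(output_type: str, input_type: str) -> bool:
    """Simplified rule: a link is valid only if both ends carry the same data type."""
    return output_type == input_type


def explain(output_type: str, input_type: str) -> str:
    """Describe a proposed link with the colors a user would see."""
    verdict = "ok" if can_connect(output_type, input_type) else "rejected"
    return (f"{output_type} ({TYPE_COLORS.get(output_type, '?')}) -> "
            f"{input_type} ({TYPE_COLORS.get(input_type, '?')}): {verdict}")


print(explain("LATENT", "LATENT"))  # LATENT (pink) -> LATENT (pink): ok
print(explain("LATENT", "IMAGE"))   # LATENT (pink) -> IMAGE (blue): rejected
```

The second case is the classic beginner mistake: a latent must pass through VAE Decode before an image node such as Save Image can accept it.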

// Exploring Important Node Types

Loader nodes import models and data into your workflow:

- CheckpointLoader (Load Checkpoint): Loads a model, usually containing the model weights, the Contrastive Language-Image Pre-training (CLIP) text encoder, and the VAE in a single file.

- Load Diffusion Model: Loads model components separately (for newer models like Flux that don’t bundle components).

- VAE Loader: Loads the VAE separately.

- CLIP Loader: Loads the text encoder separately.

Processing nodes transform data:

- CLIP Text Encode converts text prompts into machine language (conditioning).

- KSampler is the core image generation engine.

- VAE Decode converts latent images back to RGB.

Utility nodes support workflow management:

- Primitive Node: Lets you enter values manually.

- Reroute Node: Keeps the workflow tidy by redirecting connections.

- Load Image: Imports images into your workflow.

- Save Image: Exports generated images.
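Wired together, these nodes form the basic text-to-image graph. ComfyUI can export a workflow in an “API format” JSON in which each node has a `class_type` and `inputs`, and a link is written as `[source_node_id, output_index]`. The sketch below builds such a graph in Python, assuming ComfyUI’s built-in node class names (`CheckpointLoaderSimple` is the Load Checkpoint node, whose outputs are MODEL, CLIP, and VAE in that order); the checkpoint filename and the helper name `build_txt2img` are placeholders.

```python
def build_txt2img(positive: str, negative: str, seed: int = 0, steps: int = 20,
                  cfg: float = 8.0, width: int = 512, height: int = 512,
                  ckpt_name: str = "model.safetensors") -> dict:
    """Return an API-format graph: loader -> text encoders -> KSampler -> VAE Decode -> Save."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple",   # outputs: MODEL 0, CLIP 1, VAE 2
              "inputs": {"ckpt_name": ckpt_name}},
        "2": {"class_type": "CLIPTextEncode",            # positive prompt -> CONDITIONING
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",            # negative prompt -> CONDITIONING
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "EmptyLatentImage",          # blank LATENT to start from
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                         "latent_image": ["4", 0], "seed": seed, "steps": steps, "cfg": cfg,
                         "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",                 # LATENT -> IMAGE
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"images": ["6", 0], "filename_prefix": "ComfyUI"}},
    }
```

Passing the result to `json.dumps` gives text in the same shape as an API-format export, which is convenient for scripting batches of generations.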

# Understanding the KSampler Node

The KSampler is arguably the most important node in ComfyUI. It is the “robot builder” that actually generates your images, so understanding its parameters is crucial for creating quality images.

// Reviewing KSampler Parameters

Seed (Default: 0)

The seed is the initial random state that determines which random noise the generation starts from. Think of it as your starting point for randomization.

- Fixed Seed: Using the same seed with the same settings will always produce the same image (on the same hardware and software setup).

- Randomized Seed: Each generation gets a new random seed, producing different images.

- Value Range: 0 to 18,446,744,073,709,551,615 (2^64 − 1).
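The seed’s role can be shown without a GPU. ComfyUI generates its starting noise with PyTorch; the sketch below swaps in Python’s `random` module so it runs anywhere, but the principle is the same: the same seed yields the same starting noise, and the valid range is that of an unsigned 64-bit integer. `initial_noise` is a hypothetical helper.

```python
import random

MAX_SEED = 2**64 - 1  # 18,446,744,073,709,551,615: the largest unsigned 64-bit integer


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Draw `size` standard-normal values from a generator seeded with `seed`."""
    if not 0 <= seed <= MAX_SEED:
        raise ValueError("seed out of range")
    rng = random.Random(seed)  # a private generator, so global random state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


fixed_a = initial_noise(42)
print(fixed_a == initial_noise(42))  # True: fixed seed -> identical starting noise
print(initial_noise(43) == fixed_a)  # False: a different seed -> different noise
new_seed = random.randint(0, MAX_SEED)  # "randomize" amounts to drawing a fresh seed each run
```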

Steps (Default: 20)

Steps define the number of denoising iterations performed. Each step progressively refines the image from pure noise toward your desired output.

- Low Steps (10-15): Faster generation, less refined results.

- Medium Steps (20-30): Good balance between quality and speed.

- High Steps (50+): Potentially higher quality, but considerably slower, with diminishing returns.

CFG Scale (Default: 8.0, Range: 0.0-100.0)

The classifier-free guidance (CFG) scale controls how strictly the AI follows your prompt.

Analogy: imagine giving a builder a blueprint.

- Low CFG (3-5): The builder glances at the blueprint, then does their own thing: creative, but may ignore instructions.

- High CFG (12+): The builder obsessively follows every detail of the blueprint: accurate, but the result may look stiff or over-processed.

- Balanced CFG (7-8 for Stable Diffusion, 1-2 for Flux): The builder mostly follows the blueprint while adding natural variation.
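Mechanically, CFG runs the model twice per step, once with your prompt (conditional) and once with the negative or empty prompt (unconditional), then moves from the unconditional prediction toward the conditional one, scaled by the CFG value. The standard classifier-free guidance combination is shown below on plain lists standing in for noise predictions; the numbers are made up for illustration.

```python
def apply_cfg(cond: list[float], uncond: list[float], cfg: float) -> list[float]:
    """Classifier-free guidance: uncond + cfg * (cond - uncond), element-wise.

    cfg = 1 returns the conditional prediction; cfg > 1 extrapolates beyond it.
    """
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


cond = [0.5, -0.25]   # hypothetical prediction with the positive prompt
uncond = [0.25, 0.0]  # hypothetical prediction with the negative/empty prompt

print(apply_cfg(cond, uncond, 1.0))  # [0.5, -0.25]: same as cond, no extra push
print(apply_cfg(cond, uncond, 8.0))  # [2.25, -2.0]: pushed far past cond
```

The extrapolation explains the analogy: a high CFG exaggerates whatever separates your prompt from the negative prompt, which is why results can look over-processed.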

Sampler Name

The sampler is the algorithm used for the denoising process. Common samplers include Euler, DPM++ 2M, and UniPC.
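To make “steps” and “sampler” concrete, here is a toy version of the Euler method in the form used by k-diffusion-style samplers, from which ComfyUI’s sampler code is derived. Assumptions: the “image” is a short list of numbers, the denoiser is a hypothetical perfect oracle that always predicts the clean target, and the noise levels (sigmas) follow a simple linear ramp. Each loop iteration is one step.

```python
def euler_sample(x, sigmas, denoise_fn):
    """Euler sampler: one iteration per step, moving x from sigmas[0] down to sigmas[-1]."""
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoise_fn(x, sigma)                         # model's guess of the clean image
        d = [(xi - di) / sigma for xi, di in zip(x, denoised)]  # direction of increasing noise
        x = [xi + di * (sigma_next - sigma) for xi, di in zip(x, d)]  # step to the lower sigma
    return x


def linear_sigmas(steps, sigma_max=10.0):
    """Toy schedule: `steps` equal drops from sigma_max to 0 (steps + 1 values)."""
    return [sigma_max * (1 - i / steps) for i in range(steps + 1)]


target = [0.2, -0.4, 0.9]  # hypothetical "clean image"


def oracle(x, sigma):
    """Hypothetical perfect denoiser, for illustration only."""
    return target


noisy_start = [t + 10.0 * n for t, n in zip(target, [0.3, -1.2, 0.5])]  # target + sigma_max * noise
result = euler_sample(noisy_start, linear_sigmas(20), oracle)
print([round(v, 6) for v in result])  # [0.2, -0.4, 0.9]
```

Real models are not perfect oracles, so more steps give the sampler more chances to correct its guesses; other samplers (DPM++ 2M, UniPC) differ in how they use past predictions to take each step.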

Scheduler

The scheduler controls how the noise level is distributed across the denoising steps; in other words, it determines the shape of the noise-reduction curve.

- Normal: Standard noise scheduling.

- Karras: Often gives better results at lower step counts.
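The Karras schedule (named after Karras et al., 2022) interpolates between the maximum and minimum noise levels in σ^(1/ρ) space with ρ = 7, which places more steps at low noise levels where fine detail is resolved. The sketch below follows the formula as implemented in k-diffusion’s `get_sigmas_karras`; the default `sigma_min`/`sigma_max` values are those commonly cited for Stable Diffusion 1.x and are an assumption here, as is the comparison helper `evenly_spaced_sigmas`.

```python
def karras_sigmas(n: int, sigma_min: float = 0.0292, sigma_max: float = 14.6146, rho: float = 7.0):
    """Karras et al. schedule: n noise levels from sigma_max to sigma_min, plus a final 0."""
    min_inv = sigma_min ** (1 / rho)
    max_inv = sigma_max ** (1 / rho)
    ramp = [i / (n - 1) for i in range(n)]  # 0 .. 1 inclusive
    sigmas = [(max_inv + t * (min_inv - max_inv)) ** rho for t in ramp]
    return sigmas + [0.0]                   # end fully denoised


def evenly_spaced_sigmas(n: int, sigma_min: float = 0.0292, sigma_max: float = 14.6146):
    """Naive comparison: equal spacing between sigma_max and sigma_min, plus a final 0."""
    return [sigma_max + i / (n - 1) * (sigma_min - sigma_max) for i in range(n)] + [0.0]


for name, sched in [("karras", karras_sigmas(10)), ("even", evenly_spaced_sigmas(10))]:
    low = sum(1 for s in sched[:-1] if s < 1.0)
    print(f"{name}: {low} of 10 steps start below sigma 1.0")
# karras: 5 of 10 steps start below sigma 1.0
# even: 1 of 10 steps start below sigma 1.0
```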

Denoise (Default: 1.0, Range: 0.0-1.0)

This is one of your most important controls for image-to-image workflows. Denoise determines how much of the input image is replaced with new content:

- 0.0: Change nothing; the output will be identical to the input.

- 0.5: Keep roughly half of the original image and regenerate the rest.

- 1.0: Regenerate completely; ignore the input image and start from pure noise.
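In practice, denoise does not literally mix old and new pixels; it decides how far into the noise schedule sampling starts. As far as can be told from ComfyUI’s KSampler, with `steps` = S and denoise = d below 1, it builds a schedule for int(S / d) steps and runs only the last S of them, so the encoded input is noised to an intermediate level rather than to pure noise. The sketch below reproduces that arithmetic with a stand-in linear schedule; `toy_schedule` and `img2img_sigmas` are hypothetical names.

```python
def toy_schedule(steps: int, sigma_max: float = 14.6) -> list[float]:
    """Stand-in noise schedule: `steps` equal drops from sigma_max to 0."""
    return [sigma_max * (1 - i / steps) for i in range(steps + 1)]


def img2img_sigmas(steps: int, denoise: float) -> list[float]:
    """Noise levels actually sampled for a given denoise strength (0.0-1.0)."""
    if denoise >= 1.0:
        return toy_schedule(steps)               # start from pure noise
    if denoise <= 0.0:
        return []                                # nothing to sample: output == input
    total = int(steps / denoise)                 # stretch the schedule...
    return toy_schedule(total)[-(steps + 1):]    # ...and keep only its low-noise tail


for d in (1.0, 0.7, 0.3):
    sig = img2img_sigmas(20, d)
    print(f"denoise={d}: {len(sig) - 1} steps, starting at sigma {sig[0]:.2f}")
# denoise=1.0: 20 steps, starting at sigma 14.60
# denoise=0.7: 20 steps, starting at sigma 10.43
# denoise=0.3: 20 steps, starting at sigma 4.42
```

You still get the full number of steps at every denoise value; what changes is how much noise the input is buried under before they begin.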

# Example: Generating a Character Portrait

Prompt: “A cyberpunk android with neon blue eyes, detailed mechanical parts, dramatic lighting.”

Settings:

- Model: Flux

- Steps: 20

- CFG: 2.0

- Sampler: Default

- Resolution: 1024×1024

- Seed: Randomize

Negative prompt: “low quality, blurry, oversaturated, unrealistic.”
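These settings map directly onto node inputs. The hedged sketch below turns them into KSampler inputs with a fresh random seed and wraps an API-format workflow in the JSON body that a local ComfyUI server accepts on its `/prompt` endpoint (default address `http://127.0.0.1:8188`). Interpreting “Default” as the `euler` sampler with the `simple` scheduler is an assumption, as is the node id `"3"`. The code only builds the request; a real submission needs a complete graph and a running server.

```python
import json
import random
import urllib.request

# Example settings from above; "Default" sampler interpreted as euler/simple (an assumption).
PORTRAIT = {
    "positive": "A cyberpunk android with neon blue eyes, detailed mechanical parts, dramatic lighting.",
    "negative": "low quality, blurry, oversaturated, unrealistic.",
    "steps": 20,
    "cfg": 2.0,
    "sampler_name": "euler",
    "scheduler": "simple",
    "width": 1024,
    "height": 1024,
}


def sampler_inputs(settings: dict) -> dict:
    """KSampler widget values for one run, with a freshly randomized 64-bit seed."""
    return {
        "seed": random.randint(0, 2**64 - 1),  # "Seed: Randomize"
        "steps": settings["steps"],
        "cfg": settings["cfg"],
        "sampler_name": settings["sampler_name"],
        "scheduler": settings["scheduler"],
        "denoise": 1.0,
    }


def prompt_request(workflow: dict, host: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Build (but do not send) a POST to ComfyUI's /prompt endpoint."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(f"{host}/prompt", data=body,
                                  headers={"Content-Type": "application/json"}, method="POST")


req = prompt_request({"3": {"class_type": "KSampler", "inputs": sampler_inputs(PORTRAIT)}})
print(req.full_url, req.get_method())  # http://127.0.0.1:8188/prompt POST
# urllib.request.urlopen(req)  # only with a complete workflow and a running server
```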

// Exploring Image-to-Image Workflows

Image-to-image workflows build on the text-to-image foundation, adding an input image to guide the generation process.

Scenario: You have a photograph of a landscape and want it in an oil painting style.

- Load your landscape image

- Positive Prompt: “oil painting, impressionist style, vibrant colors, brush strokes”

- Denoise: 0.7
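In node terms, image-to-image replaces the empty latent with Load Image followed by VAE Encode (the RGB → latent portal), and lowers the KSampler’s denoise. Below is a sketch of that graph in API format, assuming ComfyUI’s built-in `LoadImage` and `VAEEncode` node names; the filenames, fixed sampler choices, and the `build_img2img` helper are placeholders.

```python
def build_img2img(image_name: str, positive: str, negative: str = "",
                  denoise: float = 0.7, seed: int = 0,
                  ckpt_name: str = "model.safetensors") -> dict:
    """API-format graph: Load Image -> VAE Encode -> KSampler (partial denoise) -> VAE Decode -> Save."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": ckpt_name}},
        "2": {"class_type": "LoadImage", "inputs": {"image": image_name}},  # outputs IMAGE 0, MASK 1
        "3": {"class_type": "VAEEncode",                                    # RGB -> latent
              "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        "4": {"class_type": "CLIPTextEncode", "inputs": {"text": positive, "clip": ["1", 1]}},
        "5": {"class_type": "CLIPTextEncode", "inputs": {"text": negative, "clip": ["1", 1]}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["4", 0], "negative": ["5", 0],
                         "latent_image": ["3", 0],  # the encoded photo, not an empty latent
                         "seed": seed, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
        "7": {"class_type": "VAEDecode", "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage", "inputs": {"images": ["7", 0], "filename_prefix": "img2img"}},
    }


oil = build_img2img("landscape.png",
                    "oil painting, impressionist style, vibrant colors, brush strokes",
                    denoise=0.7)
```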

// Performing Pose-Guided Character Generation

Scenario: You generated a character you love but want a different pose.

- Load your original character image

- Positive Prompt: “Same character description, standing pose, arms at side”

- Denoise: 0.3

# Installing and Setting Up ComfyUI

// Using a Cloud-Based Setup (Easiest for Beginners)

Go to RunComfy.com and click Launch Comfy Cloud at the top right. Alternatively, you can simply sign up in your browser.


// Using Windows Portable

- Before you download, check your hardware: the portable build runs on Windows and works best with an NVIDIA GPU with CUDA support (a slower CPU-only mode is also included). On macOS (Apple Silicon), use the manual installation instead.

- Download the portable Windows build from the ComfyUI GitHub releases page.

- Extract it to your desired location.

- Run `run_nvidia_gpu.bat` (if you have an NVIDIA GPU) or `run_cpu.bat`.

- Open your browser to http://localhost:8188.

// Performing Manual Installation

- Install Python: download version 3.12 or 3.13.

- Clone the repository: `git clone https://github.com/comfyanonymous/ComfyUI.git`, then `cd ComfyUI`.

- Install PyTorch: follow the platform-specific instructions for your GPU.

- Install dependencies: `pip install -r requirements.txt`

- Add models: place model checkpoints in `models/checkpoints`.

- Run: `python main.py`

# Working With Different AI Models

ComfyUI supports numerous state-of-the-art models. Here are the current top models:

| Flux (Recommended for Realism) | Stable Diffusion 3.5 | Older Models (SD 1.5, SDXL) |
|---|---|---|
| Excellent for photorealistic images | Well-balanced quality and speed | Extensively fine-tuned by the community |
| Fast generation | Supports diverse styles | Huge low-rank adaptation (LoRA) ecosystem |
| CFG: 1-3 range | CFG: 4-7 range | Still excellent for specific workflows |

# Advancing Workflows With Low-Rank Adaptations

Low-rank adaptations (LoRAs) are small adapter files that fine-tune models for specific styles, subjects, or aesthetics without modifying the base model. Common uses include character consistency, art styles, and custom concepts. To use one, add a “Load LoRA” node, select your file, and connect it between your checkpoint loader and the nodes that consume its MODEL and CLIP outputs (typically the KSampler and the CLIP Text Encode nodes).
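The “low-rank” part explains why LoRA files are small. Instead of storing a full d×k weight update, a LoRA stores two thin matrices, B (d×r) and A (r×k), and the adapted weight is W + scale·(B·A). Here the scale is assumed to combine the LoRA’s alpha/rank factor with the strength set on the Load LoRA node. The pure-Python sketch below shows that update on toy matrices and the parameter savings for one layer; the sizes are illustrative, and real loaders apply the patch layer by layer.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, b, a, alpha, strength=1.0):
    """Return W + strength * (alpha / r) * (B @ A) as a new matrix; W itself is unchanged."""
    r = len(a)  # rank = rows of A = columns of B
    delta = matmul(b, a)
    scale = strength * alpha / r
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def param_counts(d, k, r):
    """Parameters in a full d x k update versus a rank-r LoRA (B: d x r, A: r x k)."""
    return d * k, r * (d + k)


W = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weight
B = [[1.0], [2.0]]            # d x r with r = 1
A = [[0.5, -0.5]]             # r x k
print(apply_lora(W, B, A, alpha=1.0, strength=0.5))  # [[1.25, -0.25], [0.5, 0.5]]
print(param_counts(1280, 1280, 16))                  # (1638400, 40960)
```

For this hypothetical 1280×1280 layer, a rank-16 LoRA stores 40 times fewer numbers than a full update, which is why LoRA files are megabytes while checkpoints are gigabytes.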

// Guiding Image Generation with ControlNets

ControlNets provide spatial control over generation, forcing the model to respect pose, edge maps, or depth. With them, you can:

- Force specific poses from reference images

- Maintain object structure while changing style

- Guide composition based on edge maps

- Respect depth information
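In a workflow, a ControlNet sits between the text encoders and the KSampler: it takes your conditioning plus a control image (a pose skeleton, edge map, or depth map) and returns adjusted conditioning. Below is a hedged API-format sketch, assuming ComfyUI’s built-in `ControlNetLoader` and `ControlNetApplyAdvanced` nodes (node names and inputs can change between versions). It also assumes a text-to-image graph in which node `"5"` is the KSampler and `"2"`/`"3"` are the positive/negative CLIP Text Encode nodes; filenames and the `add_controlnet` helper are placeholders.

```python
def add_controlnet(workflow: dict, control_image: str, controlnet_name: str,
                   strength: float = 1.0) -> dict:
    """Return a copy of an API-format workflow with a ControlNet inserted before the KSampler.

    Assumes node "5" is the KSampler and "2"/"3" are the positive/negative text encoders.
    """
    wf = {node_id: {"class_type": n["class_type"], "inputs": dict(n["inputs"])}
          for node_id, n in workflow.items()}
    wf["10"] = {"class_type": "ControlNetLoader", "inputs": {"control_net_name": controlnet_name}}
    wf["11"] = {"class_type": "LoadImage", "inputs": {"image": control_image}}  # e.g. an edge map
    wf["12"] = {"class_type": "ControlNetApplyAdvanced",
                "inputs": {"positive": ["2", 0], "negative": ["3", 0],
                           "control_net": ["10", 0], "image": ["11", 0],
                           "strength": strength, "start_percent": 0.0, "end_percent": 1.0}}
    # Rewire the KSampler to read the ControlNet-adjusted conditioning (outputs 0 and 1).
    wf["5"]["inputs"]["positive"] = ["12", 0]
    wf["5"]["inputs"]["negative"] = ["12", 1]
    return wf
```

Lowering `strength`, or narrowing the `start_percent`/`end_percent` window, loosens how strictly the pose or edges are enforced.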

// Performing Selective Image Editing with Inpainting

Inpainting lets you regenerate only specific areas of an image while keeping the rest intact.

Workflow: Load image → Paint mask → Inpainting KSampler → Result
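Conceptually, the mask decides which values the sampler may change. A simplified picture (not ComfyUI’s exact mask-aware sampling, which re-applies this during the steps) is a per-element blend: where the mask is 1, new content is used; where it is 0, the original is kept. The toy 1-D “image” below stands in for a latent, and `blend_with_mask` is a hypothetical helper.

```python
def blend_with_mask(original, generated, mask):
    """Per-element composite: mask=1 takes the generated value, mask=0 keeps the original.

    Fractional mask values (e.g. a feathered edge) mix the two linearly.
    """
    return [m * g + (1 - m) * o for o, g, m in zip(original, generated, mask)]


original = [0.1, 0.2, 0.3, 0.4, 0.5]
generated = [0.9, 0.9, 0.9, 0.9, 0.9]
mask = [0.0, 0.0, 1.0, 1.0, 0.0]  # only the middle region is repainted

print(blend_with_mask(original, generated, mask))  # [0.1, 0.2, 0.9, 0.9, 0.5]
```

Feathering the mask edge (values between 0 and 1) is what helps repainted regions blend smoothly into their surroundings.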

// Increasing Resolution with Upscaling

Use upscale nodes after generation to increase resolution without regenerating the entire image. Popular upscalers include Real-ESRGAN and SwinIR.
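A model-based upscale is typically two nodes, Load Upscale Model (`UpscaleModelLoader`) and Upscale Image (using Model) (`ImageUpscaleWithModel`), placed after VAE Decode because upscalers such as Real-ESRGAN work on RGB images, not latents. The hedged sketch below shows that fragment plus the size arithmetic. The model filename is a placeholder, the upscaler’s factor is assumed to be fixed at 4×, and node id `"6"` is assumed to be the graph’s VAE Decode node.

```python
def upscale_fragment(decoded_image_link: list, model_name: str = "RealESRGAN_x4plus.pth") -> dict:
    """API-format nodes that run an upscale model on an already-decoded RGB image."""
    return {
        "20": {"class_type": "UpscaleModelLoader", "inputs": {"model_name": model_name}},
        "21": {"class_type": "ImageUpscaleWithModel",
               "inputs": {"upscale_model": ["20", 0], "image": decoded_image_link}},
        "22": {"class_type": "SaveImage",
               "inputs": {"images": ["21", 0], "filename_prefix": "upscaled"}},
    }


def upscaled_size(width: int, height: int, model_factor: int = 4) -> tuple[int, int]:
    """A fixed-factor upscale model multiplies each side by its factor."""
    return width * model_factor, height * model_factor


nodes = upscale_fragment(["6", 0])  # "6" = the VAE Decode node in your graph (assumed id)
print(upscaled_size(1024, 1024))    # (4096, 4096)
```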

# Conclusion

ComfyUI represents a significant shift in content creation. Its node-based architecture gives you power previously reserved for software engineers while remaining accessible to beginners. The learning curve is real, but every concept you learn opens new creative possibilities.

Start by building a simple text-to-image workflow, generating a few images, and adjusting parameters. Within weeks, you will be creating sophisticated workflows. Within months, you will be pushing the boundaries of what is possible in the generative space.

Shittu Olumide is a software engineer and technical writer passionate about leveraging cutting-edge technologies to craft compelling narratives, with a keen eye for detail and a knack for simplifying complex concepts. You can also find Shittu on Twitter.