What Are Neural Networks?

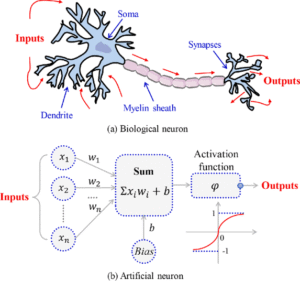

Neural networks are computational models inspired by the human brain, designed to recognize patterns, make decisions, and solve complex problems. They form the backbone of modern artificial intelligence (AI) and machine learning (ML), powering applications like image recognition, natural language processing, and self-driving cars.

*(Illustration: Side-by-side comparison of biological neurons vs. artificial neurons.)*

Why Are Neural Networks Important?

- **Adaptability**: Learn patterns directly from data instead of relying on hand-written rules.

- **Pattern Recognition**: Excel at identifying trends in large datasets.

- **Automation**: Enable AI systems to perform tasks like speech recognition and fraud detection.

How Do Neural Networks Work?

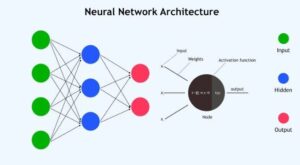

A neural network consists of interconnected **layers of artificial neurons (nodes)** that process input data to produce an output.

Key Components:

1. **Input Layer** – Receives raw data (e.g., pixels in an image).

2. **Hidden Layers** – Transform the inputs through weighted connections and activation functions; these weights and biases are adjusted during training.

3. **Output Layer** – Produces the final prediction (e.g., classifying an image as “cat” or “dog”).

*(Diagram: Basic structure of a neural network with labeled layers.)*
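Data flowing through the three layers listed above can be sketched in a few lines. This is a minimal example, assuming NumPy, a ReLU hidden layer, and randomly initialized weights; the layer sizes and the helper names `dense_layer` and `forward` are hypothetical choices for illustration. Training would adjust `W_hidden`, `b_hidden`, `W_out`, and `b_out`.

```python
import numpy as np

def dense_layer(x, W, b, activation):
    """One fully connected layer: activation(x @ W + b)."""
    return activation(x @ W + b)

def relu(z):
    return np.maximum(0.0, z)

def identity(z):
    return z

# Hypothetical sizes: 3 input features, 4 hidden neurons, 2 outputs.
rng = np.random.default_rng(seed=0)
W_hidden = rng.normal(size=(3, 4))
b_hidden = np.zeros(4)
W_out = rng.normal(size=(4, 2))
b_out = np.zeros(2)

def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense_layer(x, W_hidden, b_hidden, relu)   # hidden layer computations
    return dense_layer(hidden, W_out, b_out, identity)  # raw output scores

x = np.array([0.5, -1.2, 3.0])  # one example with 3 features (the input layer)
scores = forward(x)
print(scores.shape)  # prints (2,)
```

The same `forward` function also accepts a batch of examples with shape `(n, 3)` and returns scores with shape `(n, 2)`.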

**The Math Behind Neural Networks**

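Each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function:

\[ \text{Output} = \text{Activation Function}(\text{Weighted Sum of Inputs} + \text{Bias}) \]

In symbols, for inputs \( x_1, \dots, x_n \) with weights \( w_1, \dots, w_n \), bias \( b \), and activation function \( f \):

\[ y = f\left( \sum_{i=1}^{n} w_i x_i + b \right) \]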

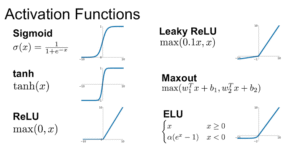
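The formula above can be traced by hand for a single neuron. This minimal sketch uses plain Python and a sigmoid activation; the input values, weights, and bias are made up to keep the arithmetic easy, and `neuron` is a hypothetical helper name.

```python
import math

def neuron(inputs, weights, bias, activation):
    """Output = activation(weighted sum of inputs + bias)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum + bias)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values chosen for easy arithmetic.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.5

# Weighted sum: 1.0*0.5 + 2.0*(-1.0) + 3.0*0.25 = 0.5 - 2.0 + 0.75 = -0.75
# Adding the bias: -0.75 + 0.5 = -0.25, so the output is sigmoid(-0.25)
output = neuron(inputs, weights, bias, sigmoid)
print(round(output, 4))  # prints 0.4378
```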

**Common Activation Functions:**

| **Function** | **Graph** | **Use Case** |
|---|---|---|
| **Sigmoid** | *(S-shaped curve from 0 to 1)* | Binary classification (outputs a probability between 0 and 1). |
| **ReLU (Rectified Linear Unit)** | *(Flat at 0 for x < 0, linear for x ≥ 0)* | Hidden layers of deep networks (cheap to compute). |
| **Softmax** | *(Probabilistic output summing to 1)* | Multi-class classification (output layer). |

*(Graph: Comparison of activation functions.)*
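The three activation functions in the table can each be written in one or two lines of NumPy. This is a minimal sketch; `softmax` here assumes a 1-D vector of scores, and subtracting the maximum before exponentiating is a standard trick to avoid overflow that does not change the result.

```python
import numpy as np

def sigmoid(z):
    """S-shaped curve squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """0 for z < 0, z itself for z >= 0."""
    return np.maximum(0.0, z)

def softmax(z):
    """Turn a 1-D vector of scores into probabilities that sum to 1."""
    shifted = z - np.max(z)  # subtracting the max avoids overflow in exp
    exps = np.exp(shifted)
    return exps / np.sum(exps)

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z))
print(relu(z))
print(softmax(z))
```

Sigmoid maps each value independently, while softmax looks at the whole vector, which is why it suits choosing one class out of several.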

Types of Neural Networks

Different architectures are suited for different tasks:

| **Type** | **Structure** | **Application** |
|---|---|---|
| **Feedforward (FFNN)** | Data flows in one direction, from input to output. | Basic classification tasks. |
| **Convolutional (CNN)** | Learned filters slide over the input to build spatial hierarchies of features. | Image & video recognition. |
| **Recurrent (RNN)** | Loops carry a hidden state that retains information about past inputs. | Time-series data, language modeling. |
| **Transformer** | Self-attention weighs the relationships between all positions in a sequence. | Large language models (e.g., ChatGPT), machine translation. |
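The core operations behind two rows of the table, the CNN filter and the RNN loop, can be sketched in NumPy. This is a simplified illustration, assuming a 1-D input instead of an image, no padding or stride, a tanh recurrent cell, and hypothetical sizes and random weights; real CNN and RNN layers add channels, batching, and learned parameters.

```python
import numpy as np

def conv1d(signal, kernel):
    """Slide a small filter along the input (the core CNN operation, in 1-D).

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped) with no padding.
    """
    k = len(kernel)
    return np.array([np.dot(signal[i:i + k], kernel)
                     for i in range(len(signal) - k + 1)])

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: the new hidden state mixes the current input
    with the previous hidden state, which is how an RNN 'remembers'."""
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)

# CNN-style filter: this edge detector responds where neighboring values change.
signal = np.array([0.0, 0.0, 1.0, 1.0, 1.0, 0.0])
edge_kernel = np.array([-1.0, 1.0])
print(conv1d(signal, edge_kernel))

# RNN over a short sequence with hypothetical sizes: 2 input features, 3 hidden units.
rng = np.random.default_rng(seed=0)
W_x = rng.normal(size=(3, 2))
W_h = rng.normal(size=(3, 3))
b = np.zeros(3)
h = np.zeros(3)  # initial hidden state: nothing remembered yet
sequence = [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 1.0])]
for x_t in sequence:
    h = rnn_step(x_t, h, W_x, W_h, b)  # h summarizes everything seen so far
print(h)
```

Setting `W_h` to zeros removes the loop's influence, so each step would depend only on the current input; that recurrent weight matrix is what gives an RNN its memory.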

Training a Neural Network

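Neural networks learn by adjusting their weights and biases: **backpropagation** computes how much each weight contributed to the error, and **gradient descent** uses that information to update the weights.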

### **Steps in Training:**

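1. **Forward Pass** – Pass the inputs through the network to compute predictions.

2. **Loss Calculation** – Measure how far the predictions are from the true values using a loss function (e.g., mean squared error or cross-entropy).

3. **Backpropagation** – Compute the gradient of the loss with respect to every weight and bias, working backward from the output layer.

4. **Optimization** – Update the weights in the direction that reduces the loss, using algorithms like **Stochastic Gradient Descent (SGD)**. These steps repeat over many passes through the training data.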
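The four training steps can be sketched as a small loop. This is a minimal NumPy example, assuming a 2-4-1 network with tanh hidden units, a sigmoid output, binary cross-entropy as the loss, and the XOR problem as hypothetical training data. It uses full-batch gradient descent (all four examples per update) rather than SGD, which would instead update on randomly sampled examples or mini-batches. Gradients are derived by hand here; frameworks such as PyTorch or JAX compute them automatically.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(p, y):
    """Binary cross-entropy averaged over the examples."""
    p = np.clip(p, 1e-12, 1 - 1e-12)  # avoid log(0)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

# XOR: a classic task a single neuron cannot solve but one hidden layer can.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

rng = np.random.default_rng(seed=0)
W1 = rng.normal(scale=0.5, size=(2, 4))  # input -> hidden (4 hypothetical hidden units)
b1 = np.zeros(4)
W2 = rng.normal(scale=0.5, size=(4, 1))  # hidden -> output
b2 = np.zeros(1)
learning_rate = 0.1
losses = []

for epoch in range(10000):
    # 1. Forward pass
    h = np.tanh(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)

    # 2. Loss calculation
    losses.append(bce_loss(p, y))

    # 3. Backpropagation: chain rule from the output back toward the input
    dz2 = (p - y) / len(X)              # gradient of mean BCE w.r.t. output pre-activation
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0)
    dz1 = (dz2 @ W2.T) * (1 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0)

    # 4. Optimization: one gradient descent step on every parameter
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(np.round(p.ravel(), 2))  # predictions from the last forward pass
```

Note that the gradients for `W1` are computed before `W2` is updated, so every step uses parameters from the same forward pass.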

Applications of Neural Networks

Neural networks are applied across many industries:

✅ **Healthcare** – Diagnosing diseases from medical scans.

✅ **Finance** – Fraud detection and stock prediction.

✅ **Autonomous Vehicles** – Real-time object detection.

✅ **Entertainment** – Recommendation systems (Netflix, Spotify).

Challenges & Limitations:

Despite their power, neural networks face hurdles:

- **Data Hunger** – Often require large labeled datasets.

- **Black Box Problem** – Their decisions are hard to interpret.

- **Computational Cost** – Training deep networks typically requires specialized hardware such as GPUs or TPUs.

Future of Neural Networks

Research directions such as **spiking neural networks (SNNs)**, which communicate through discrete spikes over time much like biological neurons, and **quantum machine learning** could push AI even further.

Conclusion

Neural networks, loosely inspired by the brain, have become central to modern AI. Understanding their structure, training, and applications is key to leveraging their potential in solving real-world problems.